| CARVIEW |

We are actively looking for research interns and full-time researchers to work on cutting-edge research topics. If you're interested in exploring these opportunities, please reach out to me at xintao.wang@outlook.com.

I received my Ph.D. from Multimedia Lab (MMLab), the Chinese University of Hong Kong, advised by Prof. Xiaoou Tang and Prof. Chen Change Loy. I obtained my bachelor's degree from Zhejiang University.

News

- [3/2025] We release several interesting papers: ReCamMaster, DiffMoE and IGV-as-GGE.

- [2/2025] Three papers (StyleMaster, PatchVSR and SketchVideo) are accepted to CVPR 2025.

- [1/2025] Two papers (SynCamMaster and 3DTrajMaster) are accepted to ICLR 2025.

- [12/2024] Three papers (ImageConductor, CustomCrafter and Anti-Diffusion[oral]) are accepted to AAAI 2025.

- [9/2024] Ranked as Top 2% Scientists Worldwide 2024 (Single Year) by Stanford University.

- [9/2024] Three papers (MiraData, ReVideo and VideoTetris) are accepted to NeurIPS 2024.

- [9/2024] One paper (StyleAdapter) is accepted to IJCV.

- [8/2024] Two papers (ToonCrafter and StyleCrafter) are accepted to SIGGRAPH Asia 2024.

- [7/2024] One paper (CustomNet) is accepted to ACM MM 2024.

- [7/2024] Five papers (DynamiCrafter[oral], BrushNet, DreamDiffusion, MOFA-Video, CheapScaling) are accepted to ECCV 2024.

- [5/2024] One paper (PromptGIP) is accepted to ICML 2024.

- [3/2024] One paper (MotionCtrl) is accepted to SIGGRAPH 2024.

- [3/2024] Nine papers (PhotoMaker, SUPIR, VideoCrafter2, SmartEdit[highlight], Rethink VQ Tokenizer, EvalCrafter, X-Adapter, DiffEditor and Seeing&Hearing) are accepted to CVPR 2024.

- [1/2024] Four papers (DragonDiffusion[spotlight], ScaleCrafter[spotlight], FreeNoise and SEED-LlaMa) are accepted to ICLR 2024.

- [12/2023] Three papers are accepted to AAAI 2024.

- [10/2023] Ranked as Top 2% Scientists Worldwide 2023 (Single Year) by Stanford University.

- [10/2023] Two papers are accepted to NeurIPS 2023.

- [09/2023] Release T2I-Adapter for SDXL: the most efficient control models, collaborating with HuggingFace.

- [07/2023] Three papers are accepted to ICCV 2023.

- [04/2023] One paper is accepted to ICML 2023.

- [03/2023] We are holding the 360° Super-Resolution Challenge as a part of the NTIRE workshop in conjunction with CVPR 2023.

- [02/2023] Three papers to appear in CVPR 2023.

- [11/2022] Two papers to appear in AAAI 2023.

- [09/2022] Ranked as Top 2% Scientists Worldwide 2022 (Single Year) by Stanford University.

- [09/2022] Two papers to appear in NeurIPS 2022.

- [07/2022] Two papers to appear in ECCV 2022. VQFR is accepted as oral (2.7%).

- [06/2022] Two papers to appear in ACM MM 2022.

- [05/2022] BasicSR joins the XPixel Group!

- [04/2022] We release a high-quality face video dataset (VFHQ). Please refer to the project page and our paper.

- [12/2021] One paper to appear in NeurIPS 2021 as spotlight (2.85%): FAIG: Finding Discriminative Filters for Specific Degradations in Blind Super-Resolution. Codes are released in TencentARC/FAIG.

- [10/2021] Real-ESRGAN is accepted by ICCV 2021 AIM workshop with Honorary Nomination Paper Award.

- [07/2021] One paper to appear in ICCV 2021: Towards Vivid and Diverse Image Colorization with Generative Color Prior

- [07/2021] The codes for practical image restoration Real-ESRGAN are released on Github.

- [06/2021] The training and testing codes of GFPGAN are released on TencentARC.

- [03/2021] 5 papers to appear in CVPR 2021.

- [03/2021] A brand-new HandyView online!.

- [08/2020] A brand-new BasicSR v1.0.0 online!

- [06/2019] We have released the EDVR training and testing codes and also updated BasicSR codes!

- [06/2019] Got my first outstanding reviewer recognition from CVPR 2019!

- [05/2019] Our video restoration method, EDVR, won all four tracks in the NTIRE 2019 video restoration and enhancement challenges. Check our paper for more details.

- [03/2019] Our paper Deep Network Interpolation for Continuous Imagery Effect Transition to appear in CVPR 2019.

- [08/2018] Our SuperSR team won the third track of the 2018 PIRM Challenge on Perceptual Super-Resolution. Check the report ESRGAN for more details.

- [06/2018] We won the NTIRE 2018 Challenge on Single Image Super-Resolution as first runner-up and ranked the first in the Realistic Wild ×4 conditions track.

- [02/2018] Our paper Recovering Realistic Texture in Image Super-resolution by Deep Spatial Feature Transform to appear in CVPR 2018.

- [07/2017] Our HelloSR team won the NTIRE 2017 Challenge on Single Image Super-Resolution as first runner-up.

Click for More

Publications [Full List]

Selected Preprint

ReCamMaster: Camera-Controlled Generative Rendering from A Single Video

Jianhong Bai, Menghan Xia, Xiao Fu, Xintao Wang, Lianrui Mu, Jinwen Cao, Zuozhu Liu, Haoji Hu, Xiang Bai, Pengfei Wan, Di Zhang

arXiv preprint: 2503.11647.

Project Page

Paper

(arXiv)

Codes

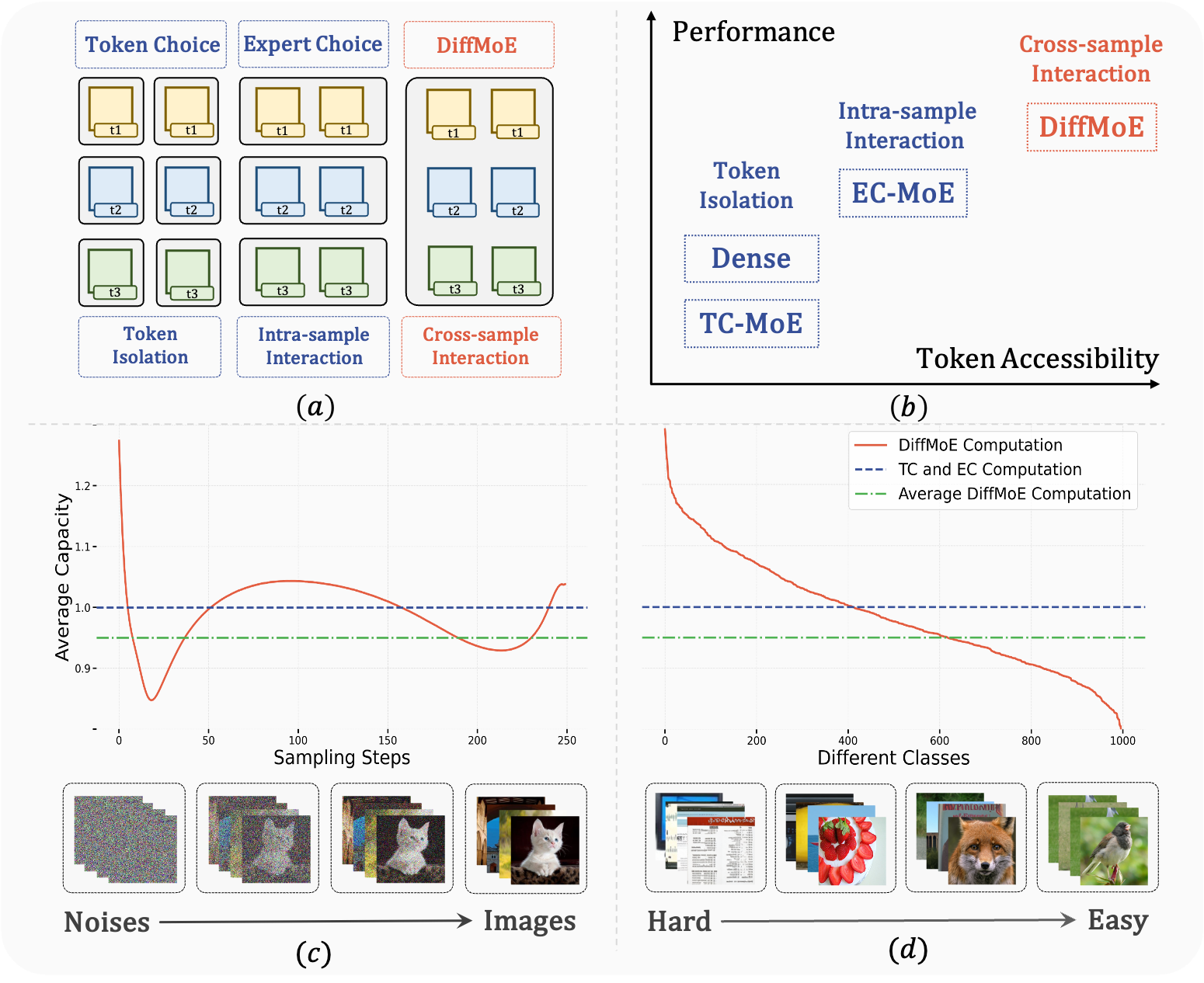

DiffMoE: Dynamic Token Selection for Scalable Diffusion Transformers

Minglei Shi, Ziyang Yuan, Haotian Yang, Xintao Wang#, Mingwu Zheng, Xin Tao, Wenliang Zhao, Wenzhao Zheng, Jie Zhou, Jiwen Lu#, Pengfei Wan, Di Zhang, Kun Gai

arXiv preprint: 2503.14487.

Project Page

Paper

(arXiv)

Codes

Improving Video Generation with Human Feedback

Jie Liu, Gongye Liu, Jiajun Liang, Ziyang Yuan, Xiaokun Liu, Mingwu Zheng, Xiele Wu, Qiulin Wang, Wenyu Qin, Menghan Xia, Xintao Wang, Xiaohong Liu, Fei Yang, Pengfei Wan, Di Zhang, Kun Gai, Yujiu Yang, Wanli Ouyang

arXiv preprint: 2501.13918.

Project Page

Paper

(arXiv)

Codes

GameFactory: Creating New Games with Generative Interactive Videos

Jiwen Yu, Yiran Qin, Xintao Wang#, Pengfei Wan, Di Zhang, Xihui Liu#

arXiv preprint: 2501.08325.

Project Page

Paper

(arXiv)

Codes

2024

SynCamMaster: Synchronizing Multi-Camera Video Generation from Diverse Viewpoints

Jianhong Bai, Menghan Xia, Xintao Wang, Ziyang Yuan, Xiao Fu, Zuozhu Liu, Haoji Hu, Pengfei Wan, Di Zhang

arXiv preprint: 2412.07760

ICLR, 2025.

Project Page Paper

(arXiv)

Codes

StyleMaster: Stylize Your Video with Artistic Generation and Translation

Zixuan Ye, Huijuan Huang, Xintao Wang, Pengfei Wan, Di Zhang, Wenhan Luo

arXiv preprint: 2412.07744

CVPR, 2025.

Project Page Paper

(arXiv)

Codes

3DTrajMaster: Mastering 3D Trajectory for Multi-Entity Motion in Video Generation

Xiao Fu, Xian Liu, Xintao Wang#, Sida Peng, Menghan Xia, Xiaoyu Shi, Ziyang Yuan, Pengfei Wan, Di Zhang, Dahua Lin#

arXiv preprint: 2412.07759

ICLR, 2025.

Project Page Paper

(arXiv)

Codes

MiraData: A Large-Scale Video Dataset with Long Durations and Structured Captions

Xuan Ju, Yiming Gao, Zhaoyang Zhang, Ziyang Yuan, Xintao Wang, Ailing Zeng, Yu Xiong, Qiang Xu, Ying Shan

arXiv preprint: 2407.06358

NeurIPS (Datasets & Benchmarks Track), 2024.

Project Page Paper

(arXiv)

Codes

MOFA-Video: Controllable Image Animation via Generative Motion Field Adaptions in Frozen Image-to-Video Diffusion Model

Muyao Niu, Xiaodong Cun, Xintao Wang, Yong Zhang, Ying Shan, Yinqiang Zheng

arXiv preprint: 2405.20222

ECCV, 2024.

Project Page Paper

(arXiv)

Codes

ToonCrafter: Generative Cartoon Interpolation

Jinbo Xing, Hanyuan Liu, Menghan Xia, Yong Zhang, Xintao Wang, Ying Shan, Tien-Tsin Wong

arXiv preprint: 2405.17933

SIGGRAPH Asia, 2024.

Project Page Paper

(arXiv)

Codes

ReVideo: Remake a Video with Motion and Content Control

Chong Mou, Mingdeng Cao, Xintao Wang#, Zhaoyang Zhang, Ying Shan, Jian Zhang#

arXiv preprint: 2405.13865

NeurIPS, 2024.

Project Page Paper

(arXiv)

Codes

BrushNet: A Plug-and-Play Image Inpainting Model with Decomposed Dual-Branch Diffusion

Xuan Ju, Xian Liu, Xintao Wang#, Yuxuan Bian, Ying Shan, Qiang Xu#

arXiv preprint: 2403.06976

ECCV, 2024.

Project Page Paper

(arXiv)

Codes

Scaling Up to Excellence: Practicing Model Scaling for Photo-Realistic Image Restoration In the Wild

Fanghua Yu, Jinjin Gu, Zheyuan Li, Jinfan Hu, Xiangtao Kong, Xintao Wang, Jingwen He, Yu Qiao, Chao Dong

arXiv preprint: 2401.13627

CVPR, 2024.

Project Page Paper

(arXiv)

Codes

VideoCrafter2: Overcoming Data Limitations for High-Quality Video Diffusion Models

Haoxin Chen, Yong Zhang, Xiaodong Cun, Menghan Xia, Xintao Wang, Chao Wen, Ying Shan

arXiv preprint: 2401.09047

CVPR, 2024.

Project Page Paper

(arXiv)

Codes

2023

SmartEdit: Exploring Complex Instruction-based Image Editing with Multimodal Large Language Models

Yuzhou Huang, Liangbin Xie, Xintao Wang#, Ziyang Yuan, Xiaodong Cun, Yixiao Ge, Jiantao Zhou, Chao Dong, Rui Huang, Ruimao Zhang#, Ying Shan

arXiv preprint: 2312.06739

CVPR, 2024 (hilight).

Project Page Paper

(arXiv)

Codes

PhotoMaker: Customizing Realistic Human Photos via Stacked ID Embedding

Zhen Li, Mingdeng Cao, Xintao Wang#, Zhongang Qi, Ming-Ming Cheng#, Ying Shan

arXiv preprint: 2312.04461

CVPR, 2024.

Project Page Paper

(arXiv)

Codes

MotionCtrl: A Unified and Flexible Motion Controller for Video Generation

Zhouxia Wang, Ziyang Yuan, Xintao Wang#, Tianshui Chen, Menghan Xia, Ping Luo#, Ying Shan

arXiv preprint: 2312.03641

SIGGRAPH, 2024.

Project Page Paper

(arXiv)

Codes

StyleCrafter: Enhancing Stylized Text-to-Video Generation with Style Adapter

Gongye Liu, Menghan Xia, Yong Zhang, Haoxin Chen, Jinbo Xing, Yibo Wang, Xintao Wang, Yujiu Yang, Ying Shan

arXiv preprint: 2312.00330

SIGGRAPH Asia, 2024.

Project Page Paper

(arXiv)

Codes

CustomNet: Object Customization with Variable-Viewpoints in Text-to-Image Diffusion Models

Ziyang Yuan, Mingdeng Cao, Xintao Wang#, Zhongang Qi, Chun Yuan#, Ying Shan

arXiv preprint: 2310.19784 ACM MM, 2024. Project Page Paper (arXiv)

FreeNoise: Tuning-Free Longer Video Diffusion via Noise Rescheduling

Haonan Qiu, Menghan Xia, Yong Zhang, Yingqing He, Xintao Wang, Ying Shan, Ziwei Liu

arXiv preprint: 2310.15169.

ICLR, 2024

Project Page

Paper

(arXiv)

Codes

DynamiCrafter: Animating Open-domain Images with Video Diffusion Priors

Jinbo Xing, Menghan Xia, Yong Zhang, Haoxin Chen, Wangbo Yu, Hanyuan Liu, Gongye Liu, Xintao Wang, Ying Shan, Tien-Tsin Wong

arXiv preprint: 2310.12190

ECCV, 2024 (oral).

Project Page Paper

(arXiv)

Codes

EvalCrafter: Benchmarking and Evaluating Large Video Generation Models

Yaofang Liu, Xiaodong Cun, Xuebo Liu, Xintao Wang, Yong Zhang, Haoxin Chen, Yang Liu, Tieyong Zeng, Raymond H. Chan, Ying Shan

arXiv preprint: 2310.11440

CVPR, 2024.

Project Page Paper

(arXiv)

Codes

ScaleCrafter: Tuning-free Higher-Resolution Visual Generation with Diffusion Models

Yingqing He, Shaoshu Yang, Haoxin Chen, Xiaodong Cun, Menghan Xia, Yong Zhang, Xintao Wang, Ran He, Qifeng Chen, Ying Shan

arXiv preprint: 2310.07702.

ICLR, 2024 (spotlight)

Project Page

Paper

(arXiv)

Codes

Making LLaMA SEE and Draw with SEED Tokenizer

Yuying Ge, Sijie Zhao, Ziyun Zeng, Yixiao Ge, Chen Li, Xintao Wang, Ying Shan Haonan Qiu, Menghan Xia, Yong Zhang, Yingqing He, , Ying Shan, Ziwei Liu

arXiv preprint: 2310.01218.

ICLR, 2024

Project Page

Paper

(arXiv)

Codes

StyleAdapter: A Unified Stylized Image Generation Model

Zhouxia Wang, Xintao Wang#, Liangbin Xie, Zhongang Qi, Ying Shan, Wenping Wang, Ping Luo#

arXiv preprint: 2309.01770 IJCV, 2024. Paper (arXiv)

DragonDiffusion: Enabling Drag-style Manipulation on Diffusion Models

Chong Mou, Xintao Wang, Jiechong Song, Ying Shan, Jian Zhang

arXiv preprint: 2307.02421.

ICLR, 2024 (spotlight)

Project Page

Paper

(arXiv)

Codes

DreamDiffusion: Generating High-Quality Images from Brain EEG Signals

Yunpeng Bai, Xintao Wang, Yan-Pei Cao, Yixiao Ge, Chun Yuan, Ying Shan

arXiv preprint: 2306.16934

ECCV, 2024.

Paper

(arXiv)

Codes

MasaCtrl: Tuning-Free Mutual Self-Attention Control for Consistent Image Synthesis and Editing

Mingdeng Cao, Xintao Wang#, Zhongang Qi, Ying Shan, Xiaohu Qie, Yinqiang Zheng#

arXiv preprint: 2304.08465

ICCV, 2023.

Project Page

Paper

(arXiv)

Codes

Follow Your Pose: Pose-Guided Text-to-Video Generation using Pose-Free Videos

Yue Ma, Yingqing He, Xiaodong Cun, Xintao Wang, Ying Shan, Xiu Li, Qifeng Chen

arXiv preprint: 2304.01186.

AAAI, 2024.

Project Page

Paper

(arXiv)

Codes

FateZero: Fusing Attentions for Zero-shot Text-based Video Editing

Chenyang Qi, Xiaodong Cun, Yong Zhang, Chenyang Lei, Xintao Wang, Ying Shan, Qifeng Chen

arXiv preprint: 2303.09535

ICCV, 2023 (oral).

Project Page

Paper

(arXiv)

Codes

T2I-Adapter: Learning Adapters to Dig out More Controllable Ability for Text-to-Image Diffusion Models

Chong Mou, Xintao Wang#, Liangbin Xie, Yanze Wu, Jian Zhang#, Zhongang Qi, Ying Shan, Xiaohu Qie

arXiv preprint: 2302.08453

AAAI, 2023.

Paper

(arXiv)

Codes

2022

Tune-A-Video: One-Shot Tuning of Image Diffusion Models for Text-to-Video Generation

Jay Zhangjie Wu, Yixiao Ge, Xintao Wang, Weixian Lei, Yuchao Gu, Yufei Shi, Wynne Hsu, Ying Shan, Xiaohu Qie, Mike Zheng Shou

arXiv preprint: 2212.11565

ICCV, 2023.

Project Page

Paper

(arXiv)

Codes

DeSRA: Detect and Delete the Artifacts of GAN-based Real-World Super-Resolution Models

Liangbin Xie*, Xintao Wang*, Xiangyu Chen*, Gen Li, Ying Shan, Jiantao Zhou, Chao Dong

ICML, 2023.

Paper

(arXiv)

Codes

Dream3D: Zero-Shot Text-to-3D Synthesis Using 3D Shape Prior and Text-to-Image Diffusion Models

Jiale Xu, Xintao Wang#, Weihao Cheng, Yan-Pei Cao, Ying Shan, Xiaohu Qie, Shenghua Gao#

CVPR, 2023. Project Page Paper (arXiv) Codes (Coming Soon)

Rethinking the Objectives of Vector-Quantized Tokenizers for Image Synthesis

Yuchao Gu, Xintao Wang, Yixiao Ge, Ying Shan, Mike Zheng Shou

arXiv preprint: 2212.03185 CVPR, 2024. Paper (arXiv)

OSRT: Omnidirectional Image Super-Resolution with Distortion-aware Transformer

Fanghua Yu*, Xintao Wang*, Mingdeng Cao, Gen Li, Ying Shan, Chao Dong#

CVPR, 2023.

Paper

(arXiv)

Codes

HAT: Activating More Pixels in Image Super-Resolution Transformer

Xiangyu Chen, Xintao Wang, Jiantao Zhou, Chao Dong

CVPR, 2023.

Paper

(arXiv)

Codes

Mitigating Artifacts in Real-World Video Super-Resolution Models

Liangbin Xie, Xintao Wang, Shuwei Shi, Jinjin Gu, Chao Dong, Ying Shan

AAAI, 2022.

Paper

(arXiv)

Codes

Accelerating the Training of Video Super-resolution Models

Lijian Lin, Xintao Wang#, Zhongang Qi, Ying Shan

AAAI, 2022.

Paper

(arXiv)

Codes

AnimeSR: Learning Real-World Super-Resolution Models for Animation Videos

Yanze Wu*, Xintao Wang*, Gen Li, Ying Shan

NeurIPS, 2022.

Paper

(arXiv)

Codes

Rethinking Alignment in Video Super-Resolution Transformers

Shuwei Shi, Jinjin Gu, Liangbin Xie, Xintao Wang, Yujiu Yang, Chao Dong

NeurIPS, 2022.

Paper

(arXiv)

Codes

VQFR: Blind Face Restoration with Vector-Quantized Dictionary and Parallel Decoder

Yuchao Gu, Xintao Wang, Liangbie Xie, Chao Dong, Gen Li, Ying Shan, Ming-Ming Cheng

Selected as oral (2.7%)

ECCV, 2022.

Paper

(arXiv)

Codes

MM-RealSR: Metric Learning based Interactive Modulation for Real-World Super-Resolution

Chong Mou, Yanze Wu, Xintao Wang, Chao Dong, Jian Zhang, Ying Shan

ECCV, 2022.

Paper

(arXiv)

Codes

2021

Real-ESRGAN: Training Real-World Blind Super-Resolution with Pure Synthetic Data

Xintao Wang, Liangbie Xie, Chao Dong, Ying Shan

ICCVW, 2021.

Paper

(arXiv)

Codes