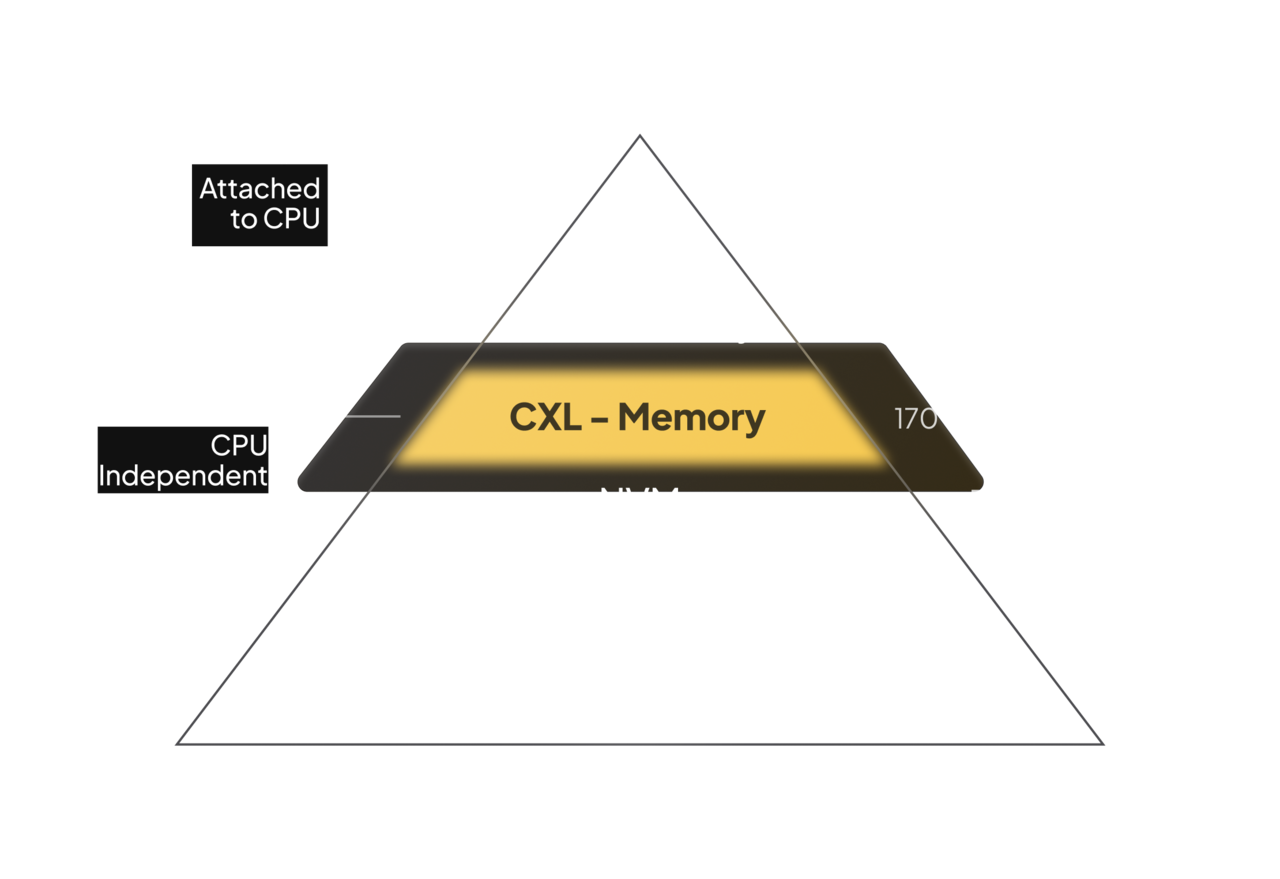

CXL-Based Memory Scalability

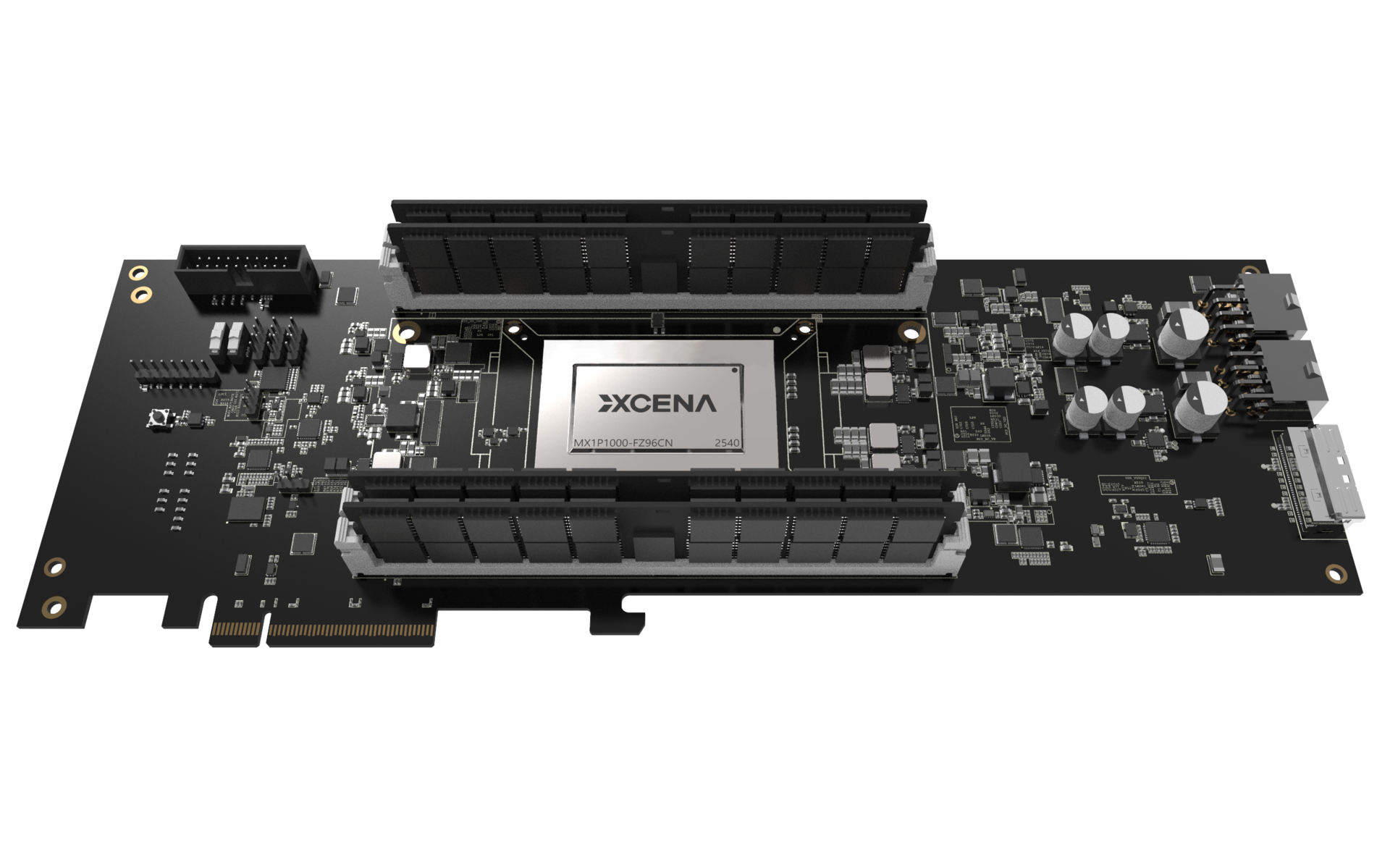

Computational Memory supports CXL 3.0, allowing main memory expansion up to 1TB by connecting four channels of 256GB DDR5 DIMMs without increasing the number of CPU memory channels.

This reduces unnecessary data replication and movement, enhancing overall system efficiency for applications requiring large-scale data processing.

Additionally, it supports bandwidth expansion up to 128GB/s through the PCIe Gen6 interface.
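The capacity and bandwidth figures above can be sanity-checked with simple arithmetic. The sketch below is illustrative only: the channel count and DIMM size come from the text, while the per-lane signalling rate of 64 GT/s (PCIe 6.0) and the x16 link width are assumptions not stated on this page, and protocol overhead is ignored.

```python
# Back-of-the-envelope check of the capacity and bandwidth figures.

DIMM_CHANNELS = 4        # from the text: four DDR5 channels
DIMM_CAPACITY_GB = 256   # from the text: 256GB per DIMM

# Assumptions (not stated in the text):
PCIE_GEN6_GT_PER_S = 64  # PCIe 6.0 raw signalling rate per lane
LANES = 16               # hypothetical x16 link width


def expansion_capacity_gb(channels: int, dimm_gb: int) -> int:
    """Total expansion capacity when every channel holds one DIMM."""
    return channels * dimm_gb


def raw_link_bandwidth_gb_s(gt_per_s: int, lanes: int) -> float:
    """Raw one-direction link bandwidth, ignoring protocol overhead."""
    return gt_per_s * lanes / 8  # 8 bits per byte


capacity = expansion_capacity_gb(DIMM_CHANNELS, DIMM_CAPACITY_GB)
bandwidth = raw_link_bandwidth_gb_s(PCIE_GEN6_GT_PER_S, LANES)
print(f"{capacity} GB capacity, {bandwidth:.0f} GB/s raw link bandwidth")
```

Under these assumptions the result is 1024 GB (1TB) of capacity and 128 GB/s of raw link bandwidth, consistent with the quoted figures.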

Enhanced Data Processing Efficiency with Near Data Processing Technology

Computational Memory is equipped with a multi-core processor that runs offloaded tasks in parallel close to the data (Near Data Processing, NDP), improving data processing speed and efficiency.

NDP technology minimizes latency from data movement across interfaces and significantly reduces TCO for applications requiring large-scale data processing.

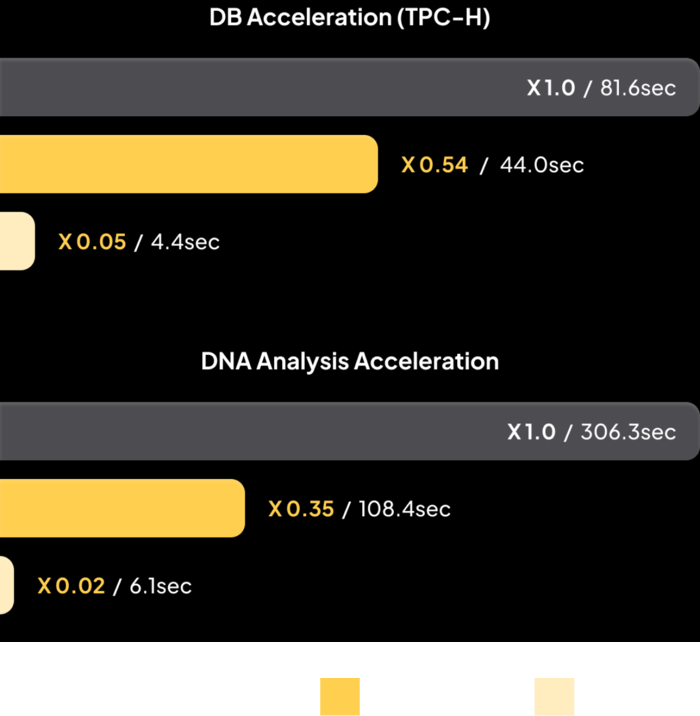

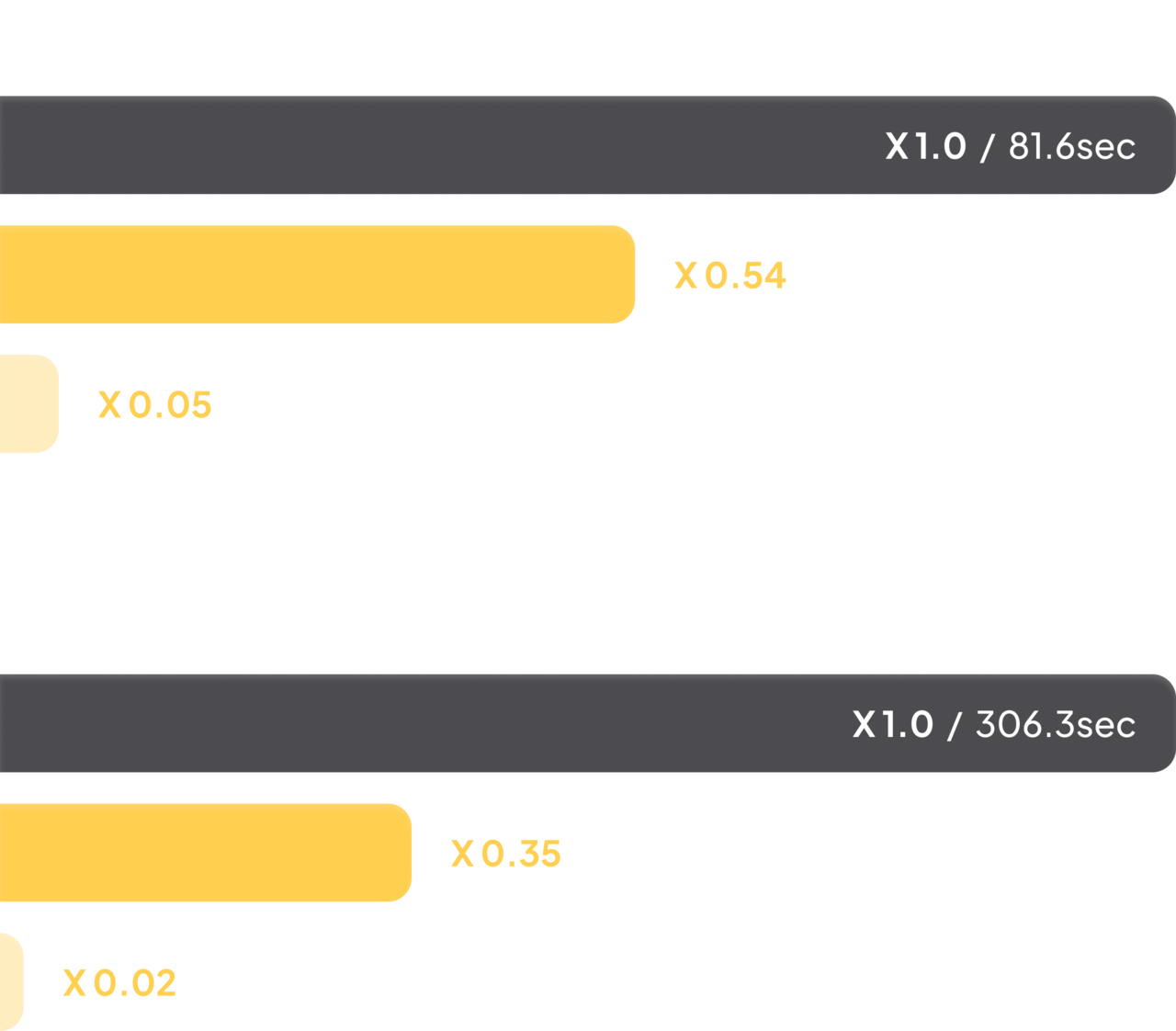

Proven Performance through FPGA Prototypes

The Computational Memory FPGA prototype has demonstrated a 46% reduction in query processing time compared to server CPUs in database acceleration, with potential reductions of up to 95% in an ASIC implementation (based on TPC-H benchmarks).
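A reduction in query time can be restated as a speedup factor, which is often easier to compare. The short sketch below only converts the percentages quoted above; the function name is hypothetical and no new measurements are implied.

```python
def speedup_from_time_reduction(reduction: float) -> float:
    """Convert a fractional time reduction (0.46 for 46%) into a speedup factor."""
    if not 0.0 <= reduction < 1.0:
        raise ValueError("reduction must be in the range [0, 1)")
    # If the new time is (1 - reduction) of the old time,
    # the speedup is old_time / new_time.
    return 1.0 / (1.0 - reduction)


fpga_speedup = speedup_from_time_reduction(0.46)  # FPGA prototype figure
asic_speedup = speedup_from_time_reduction(0.95)  # projected ASIC figure
print(f"FPGA: about {fpga_speedup:.2f}x, ASIC: up to about {asic_speedup:.0f}x")
```

In other words, a 46% reduction corresponds to roughly a 1.85x speedup, and a 95% reduction to roughly 20x.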

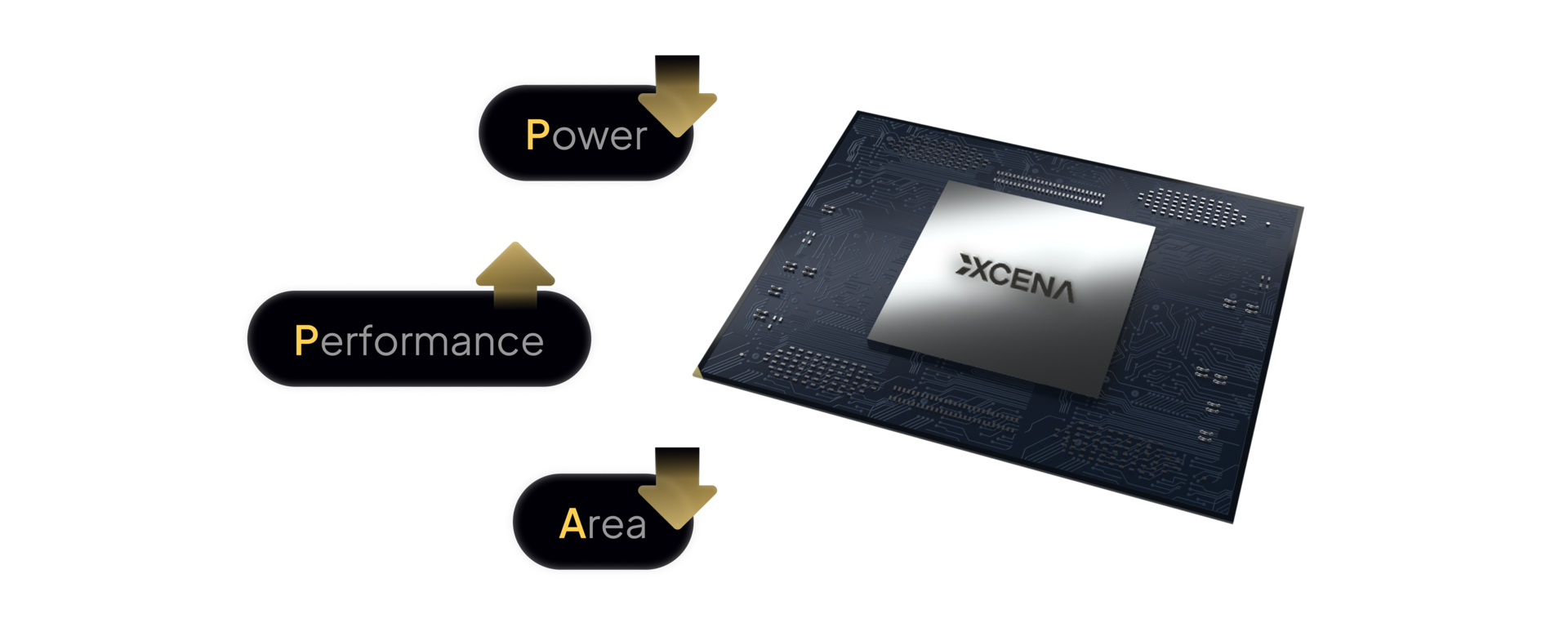

Maximized Performance with Cutting-Edge Process Technology

Computational Memory utilizes Samsung Foundry's advanced 4nm process, maximizing power efficiency and performance.

Strong RAS Feature - Double-Die Chipkill Correction

* RAS: Reliability, Availability, Serviceability

Computational Memory supports multi-bit/multi-die DRAM ECC (Error Correction Code) and Chipkill, preventing critical system errors from a range of causes. It also provides SSD RAID functionality for enhanced reliability.

Applications

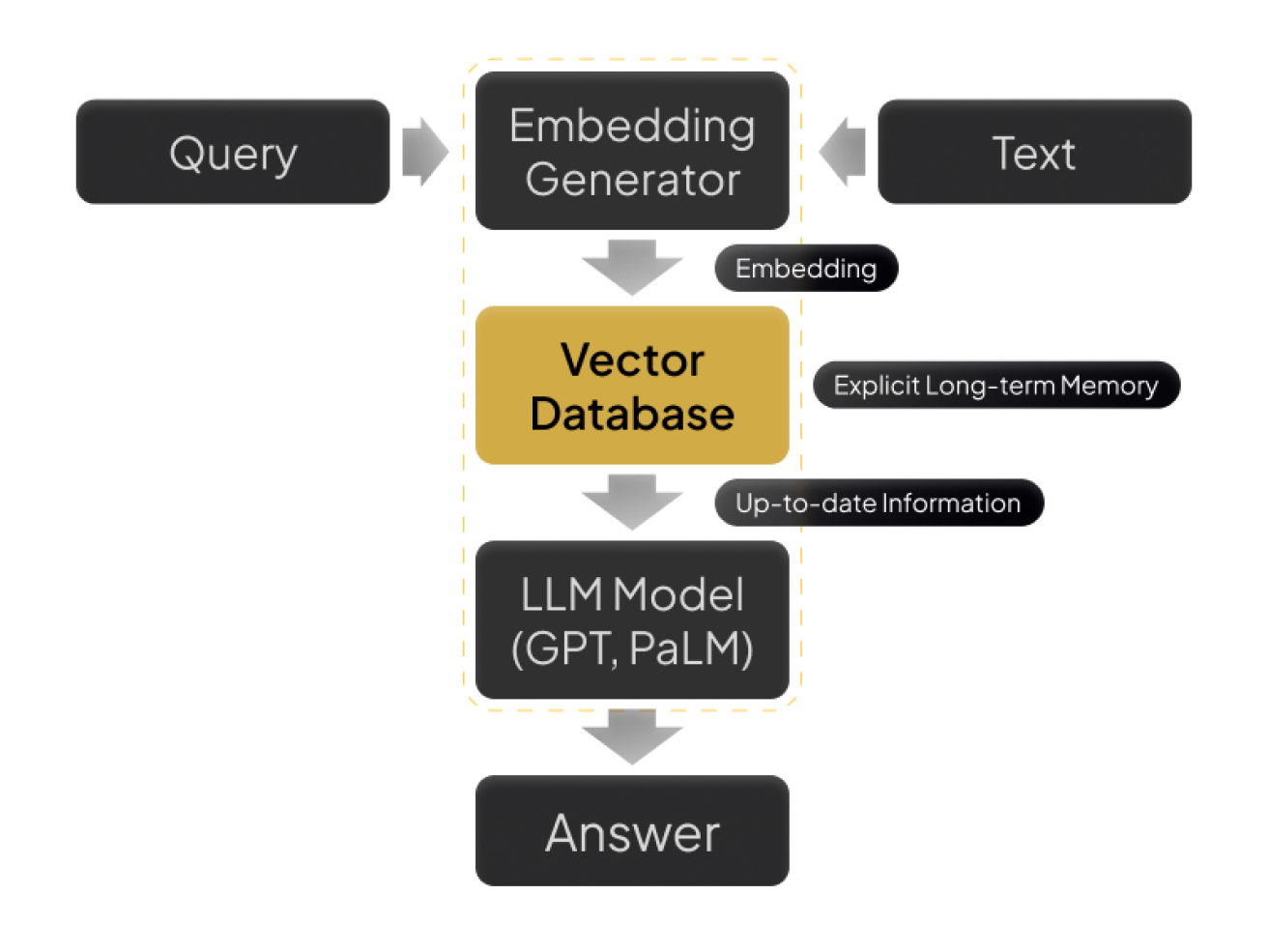

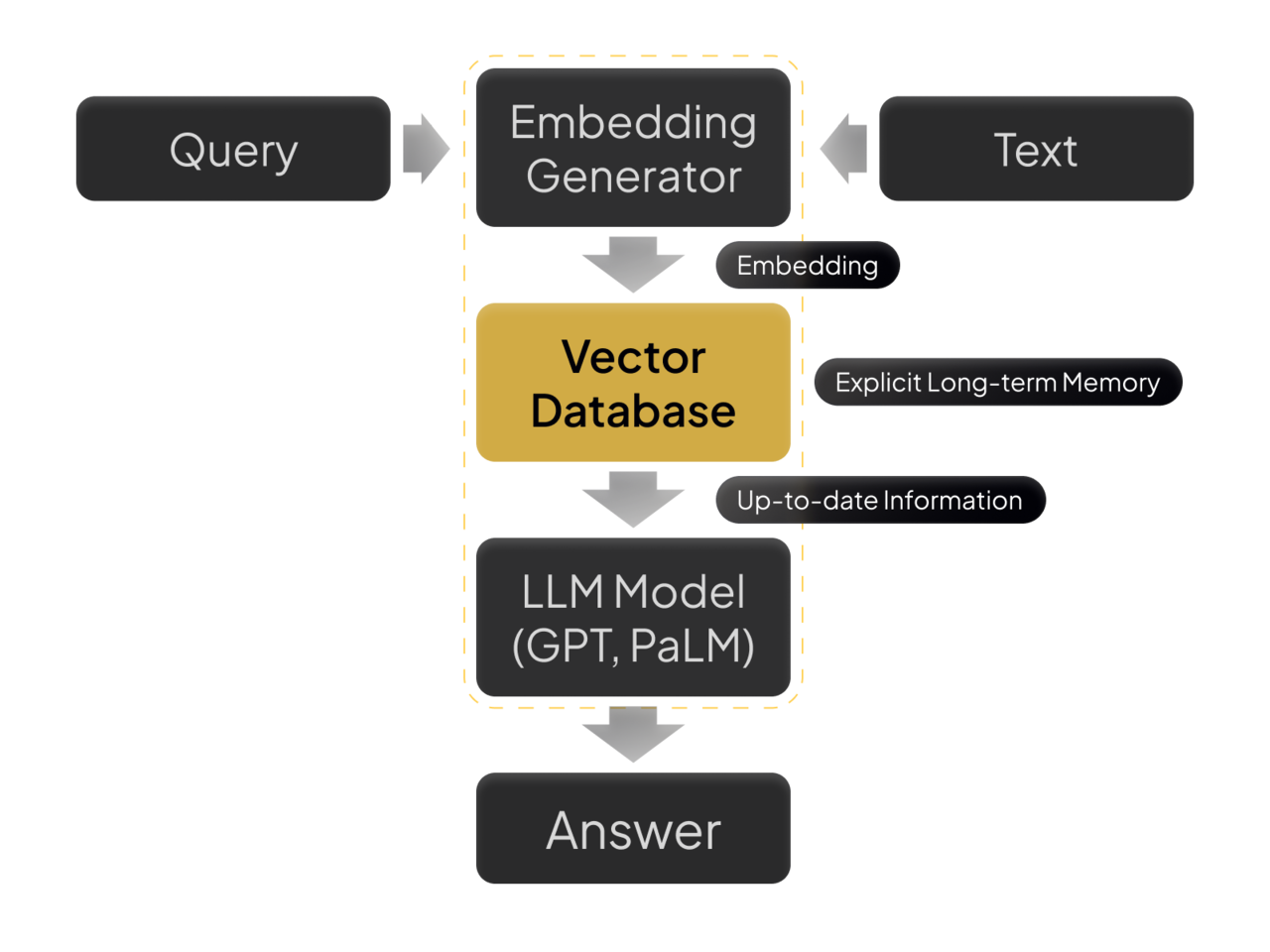

LLM VECTOR DATABASES

- Recent LLMs utilize vector databases to retrieve updated information after training.

- To curb the rapid increase in model size, vector databases are expected to be utilized more intensively.

- The acceleration of vector databases in memory can play a crucial role in the advancement of LLMs.

SCALE-OUT DATABASES

- A large volume of data needs to be processed to create value from it, even before AI training/inference.

- Scale-out database clusters such as Spark, Databricks, and Snowflake are extensively used for ETL. These clusters typically consist of numerous servers.

- By offloading the analytics query engine to computational memory, we could significantly reduce the cluster size.

GRAPH DATABASES

- Graph databases are extensively used in social networks handling enormous amounts of data based on nodes and relationships.

- Graph algorithms mostly involve traversing the relationships between nodes. The key is to traverse pointers in parallel.

- Many small cores with a memory-optimized architecture are much better suited to pointer traversal than CPUs.
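To illustrate what traversing pointers in parallel means, here is a minimal sketch of a frontier-at-a-time breadth-first search. It is not the product's implementation: the graph, the function name, and the use of a Python thread pool are assumptions chosen only to show that the adjacency lookups for all nodes in one frontier are independent and can be issued together.

```python
from concurrent.futures import ThreadPoolExecutor


def bfs_levels(graph, source, workers=4):
    """Return {node: hop distance from source} using frontier-at-a-time BFS."""
    distance = {source: 0}
    frontier = [source]
    level = 0
    with ThreadPoolExecutor(max_workers=workers) as pool:
        while frontier:
            level += 1
            # Each frontier node's adjacency lookup is independent of the
            # others, so all lookups for this level are issued together.
            neighbour_lists = pool.map(lambda n: graph.get(n, []), frontier)
            next_frontier = []
            # Merging results stays sequential to keep the sketch simple.
            for neighbours in neighbour_lists:
                for node in neighbours:
                    if node not in distance:
                        distance[node] = level
                        next_frontier.append(node)
            frontier = next_frontier
    return distance


# Hypothetical toy graph: adjacency lists keyed by node name.
graph = {"a": ["b", "c"], "b": ["d"], "c": ["d"], "d": ["e"], "e": []}
distances = bfs_levels(graph, "a")
print(distances)
```

The thread pool here only mirrors the structure of the work; on hardware with many small cores next to memory, each lookup would be a pointer dereference served close to the data.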

Company Registration Number : 710-81-02837

Address : 20, Pangyoyeok-ro 241beon-gil, Bundang-gu, Seongnam-si, Gyeonggi-do, Republic of Korea

CEO : Jin Kim

© 2024 XCENA Inc. | All Rights Reserved
