ॐ

Hello, my name is Sonia.

About me

I care about understanding humans as thinking, feeling, computational machines, and about using these insights to build artificial intelligence that better serves the diversity of human intelligence.

My current work applies models and methodologies from computational cognitive science and human-AI interaction to evaluate and improve LLMs’ abilities to be safe, flexible, and cooperative social partners.

In particular, I approach challenges in AI safety and value alignment through the lenses of pragmatic communication and theory-of-mind reasoning in humans. I use computational models of these cognitive abilities to guide improvements in LLM training and interpretability.

Kempner Institute at Harvard University

I am a fourth-year PhD student in Computer Science at Harvard University, where I am advised by Tomer Ullman in the Computation, Cognition, and Development Lab. I also collaborate with Hidenori Tanaka and Ekdeep Singh Lubana in the Science of Intelligence for Alignment group. I am grateful to be supported by an NSF Graduate Research Fellowship and a Kempner Institute Graduate Fellowship.

ML Alignment & Theory Scholars, UK AI Security Institute

As a MATS scholar, I have the pleasure of working with Tomek Korbak and others at the UK AI Security Institute on evaluating human-AI complementarity techniques for improving scalable oversight of AI agents.

The Allen Institute for Artificial Intelligence

Previously, I was a Predoctoral Young Investigator on the Semantic Scholar team, working with Doug Downey and Daniel Weld on generating diverse descriptions of scientific concepts.

Princeton Computational Cognitive Science Lab

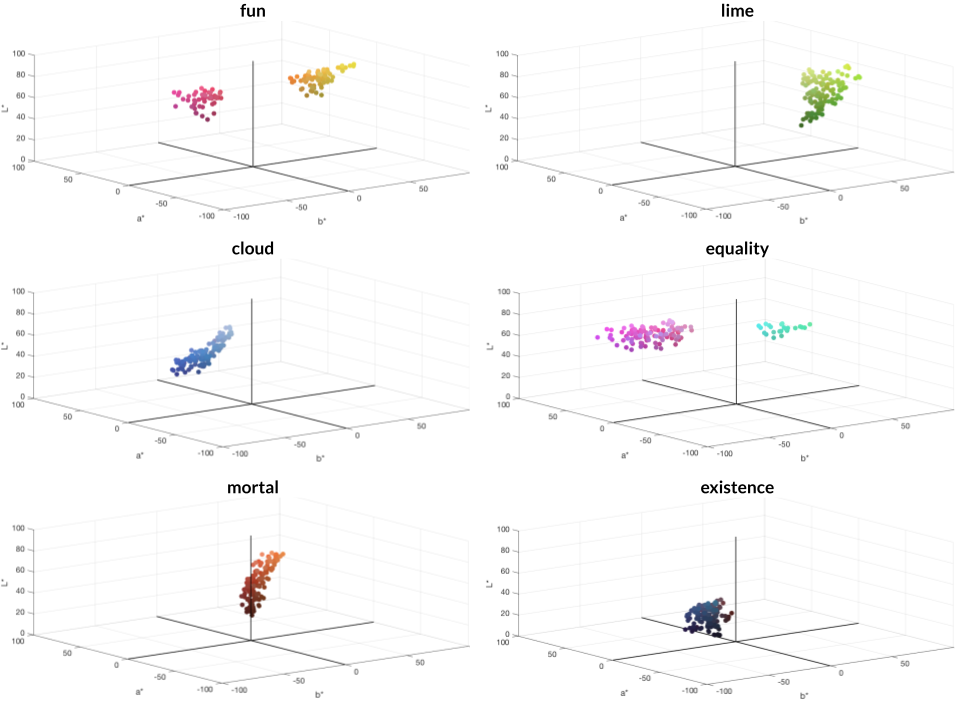

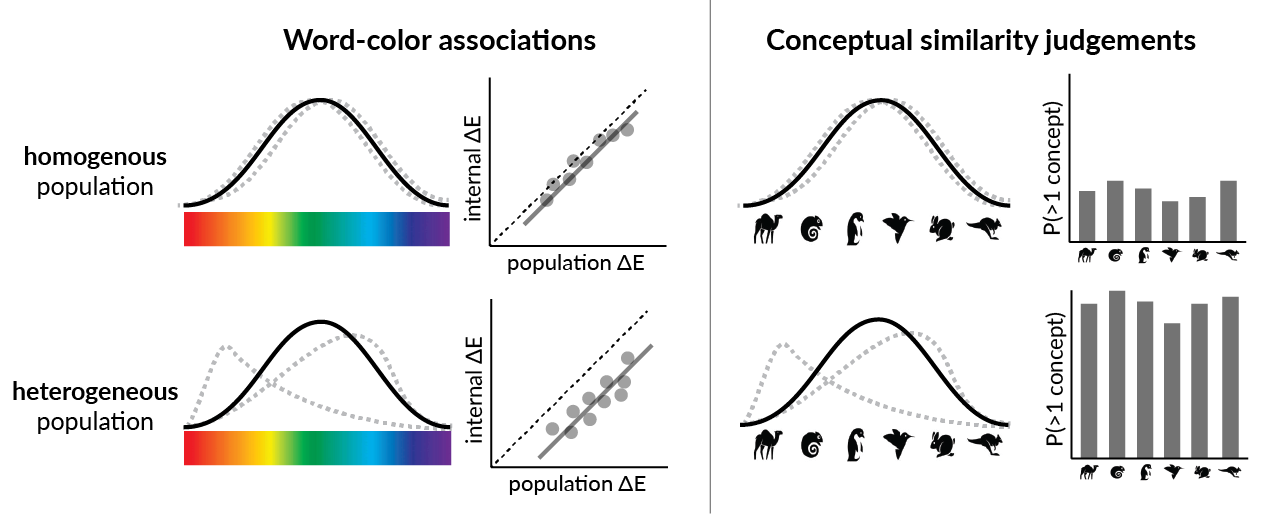

I am also grateful to have worked with Tom Griffiths and Robert Hawkins on modeling human word-color associations and studying the mental representations that enable us to communicate flexibly.

If you would like to chat about research, or are a woman/minority student considering graduate school in Psychology or Computer Science, please feel free to reach out to me at soniamurthy [at] g [dot] harvard [dot] edu.

Publications

🌀 spotlight talk

Inside you are many wolves: Using cognitive models to reveal value trade-offs in language models

Sonia K. Murthy, Rosie Zhao, Jennifer Hu, Sham Kakade, Markus Wulfmeier, Peng Qian, Tomer Ullman

🌀 Pragmatic Reasoning in Language Models workshop @ COLM 2025

🌀 Interpreting Cognition in Deep Learning Models workshop @ NeurIPS 2025

Under review @ ICLR 2026

[preprint][code]

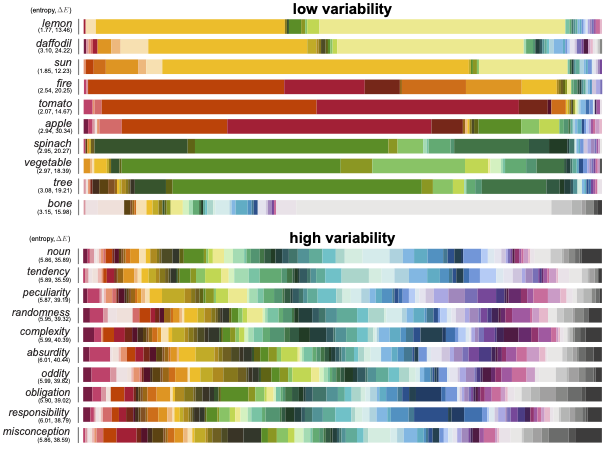

One fish, two fish, but not the whole sea: Alignment reduces language models’ conceptual diversity

Sonia K. Murthy, Tomer Ullman, Jennifer Hu

NAACL (2025)

[pdf][code][Kempner Institute blog post]

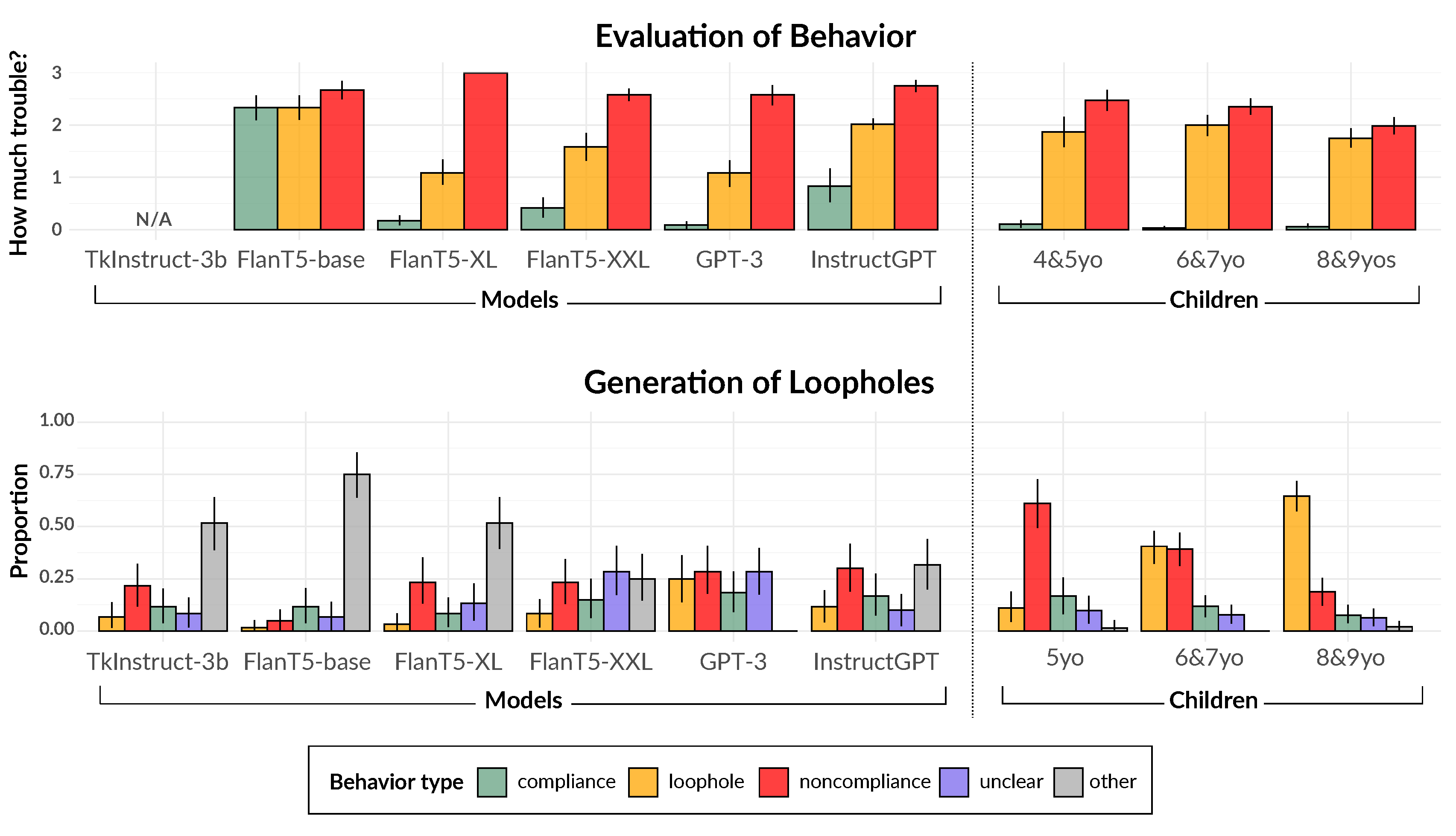

Comparing the Evaluation and Production of Loophole Behavior in Humans and Large Language Models

Sonia K. Murthy, Kiera Parece, Sophie Bridgers, Peng Qian, Tomer Ullman

EMNLP Findings (2023)

[pdf]

an earlier iteration of this work appeared in the Proceedings of the First Workshop on Theory of Mind in Communicating Agents @ ICML 2023.

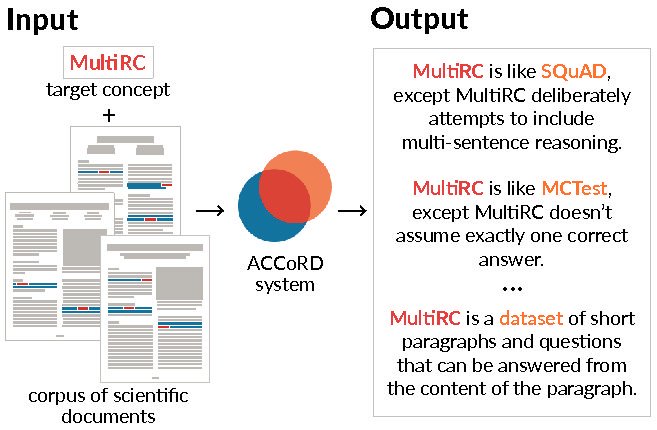

ACCoRD: A Multi-Document Approach to Generating Diverse Descriptions of Scientific Concepts

Sonia K. Murthy, Kyle Lo, Daniel King, Chandra Bhagavatula, Bailey Kuehl, Sophie Johnson, Jon Borchardt, Daniel S. Weld, Tom Hope, Doug Downey

EMNLP System Demonstrations (2022)

[pdf][demo][dataset + code][AI2 blog post]

an earlier iteration of this work appeared in the Proceedings of the Fifth Widening Natural Language Processing Workshop @ EMNLP 2021.