Planet Python

Last update: January 22, 2026 04:44 PM UTC

January 22, 2026

Reuven Lerner

Learn to code with AI — not just write prompts

The AI revolution is here. Engineers at major companies are now using AI instead of writing code directly.

But there’s a gap: Most developers know how to write code OR how to prompt AI, but not both. When working with real data, vague AI prompts produce code that might work on sample datasets but creates silent errors, performance issues, or incorrect analyses with messy, real-world data that requires careful handling.

I’ve spent 30 years teaching Python at companies like Apple, Intel, and Cisco, plus at conferences worldwide. I’m adapting my teaching for the AI era.

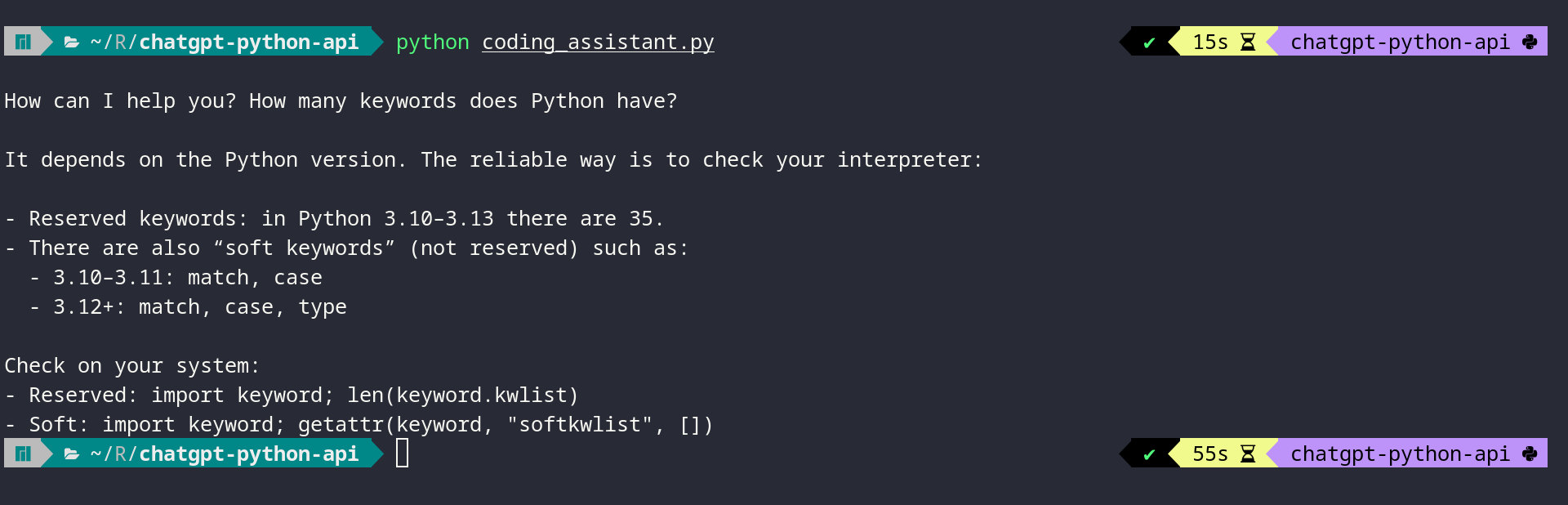

Specifically: I’m launching AI-Powered Python Practice Workshops. These are hands-on sessions where you’ll solve real problems using Claude Code, then learn to critically evaluate and improve the results.

Here’s how it works:

- I present a problem

- You solve it using Claude Code

- We compare prompts, discuss what worked (and what didn’t)

- I provide deep-dives on both the Python concepts AND the AI collaboration techniques

In 3 hours, we’ll cover 3-4 exercises. That’ll give you a chance to learn two skills: Python/Pandas AND effective AI collaboration. That’ll make you more effective at coding, and at the data analysis techniques that actually work with messy, real-world datasets.

Each workshop costs $200 for LernerPython members. Not a member? Total cost is $700 ($500 annual membership + $200 workshop fee). Want both workshops? $900 total ($500 membership + $400 for both workshops). Plus you get 40+ courses, 500+ exercises, office hours, Discord, and personal mentorship.

AI-Powered Python Practice Workshop

- Focus is on the Python language, standard library, and common packages

- Monday, February 2nd

- 10 a.m. – 1 p.m. Eastern / 3 p.m. – 6 p.m. London / 5 p.m. – 8 p.m. Israel

- Sign up here: https://lernerpython.com/product/ai-python-workshop-1/

AI-Powered Pandas Practice Workshop

- Focus is on data analysis with Pandas

- Monday, February 9th

- 10 a.m. – 1 p.m. Eastern / 3 p.m. – 6 p.m. London / 5 p.m. – 8 p.m. Israel

- Sign up here: https://lernerpython.com/product/ai-pandas-workshop-1/

I want to encourage lots of discussion and interactions, so I’m limiting the class to 20 total participants. Both sessions will be recorded, and will be available to all participants.

Questions? Just e-mail me at reuven@lernerpython.com.

The post Learn to code with AI — not just write prompts appeared first on Reuven Lerner.

Python Software Foundation

Announcing Python Software Foundation Fellow Members for Q4 2025! 🎉

The PSF is pleased to announce its fourth batch of PSF Fellows for 2025! Let us welcome the new PSF Fellows for Q4! The following people continue to do amazing things for the Python community:

Chris Brousseau

Website, LinkedIn, GitHub, Mastodon, X, PyBay, PyBay GitHub

Dave Forgac

Website, Mastodon, GitHub, LinkedIn

Inessa Pawson

James Abel

Website, LinkedIn, GitHub, Bluesky

Karen Dalton

Mia Bajić

Tatiana Andrea Delgadillo Garzofino

Website, GitHub, LinkedIn, Instagram

Thank you for your continued contributions. We have added you to our Fellows Roster.

The above members help support the Python ecosystem by being phenomenal leaders, sustaining the growth of the Python scientific community, maintaining virtual Python communities, maintaining Python libraries, creating educational material, organizing Python events and conferences, starting Python communities in local regions, and overall being great mentors in our community. Each of them continues to help make Python more accessible around the world. To learn more about the new Fellow members, check out their links above.

Let's continue recognizing Pythonistas all over the world for their impact on our community. The criteria for Fellow members are available on our PSF Fellow Membership page. If you would like to nominate someone to be a PSF Fellow, please send a description of their Python accomplishments and their email address to psf-fellow at python.org. We are accepting nominations for Quarter 1 of 2026 through February 20th, 2026.

Are you a PSF Fellow and want to help the Work Group review nominations? Contact us at psf-fellow at python.org.

January 21, 2026

Django Weblog

Djangonaut Space - Session 6 Accepting Applications

We are thrilled to announce that Djangonaut Space, a mentorship program for contributing to Django, is open for applicants for our next cohort! 🚀

Djangonaut Space is holding a sixth session! This session will start on March 2nd, 2026. We are currently accepting applications until February 2nd, 2026 Anywhere on Earth. More details can be found on the website.

Djangonaut Space is a free, 8-week group mentoring program where individuals will work self-paced in a semi-structured learning environment. It seeks to help members of the community who wish to level up their current Django code contributions and potentially take on leadership roles in Django in the future.

“I'm so grateful to have been a part of the Djangonaut Space program. It's a wonderfully warm, diverse, and welcoming space, and the perfect place to get started with Django contributions. The community is full of bright, talented individuals who are making time to help and guide others, which is truly a joy to experience. Before Djangonaut Space, I felt as though I wasn't the kind of person who could become a Django contributor; now I feel like I found a place where I belong.” - Eliana, Djangonaut Session 1

Enthusiastic about contributing to Django but wondering what we have in store for you? No worries, we have got you covered! 🤝

Python Software Foundation

Departing the Python Software Foundation (Staff)

This week will be my last as the Director of Infrastructure at the Python Software Foundation and my last week as a staff member. Supporting the mission of this organization with my labor has been unbelievable in retrospect and I am filled with gratitude to every member of this community, volunteer, sponsor, board member, and staff member of this organization who have worked alongside me and entrusted me with root@python.org for all this time.

But, it is time for me to do something new. I don’t believe there would ever be a perfect time for this transition, but I do believe that now is one of the best. The PSF has built out a team that shares the responsibilities I carried across our technical infrastructure, the maintenance and support of PyPI, relationships with our in-kind sponsors, and the facilitation of PyCon US. I’m also not “burnt-out” or worse. I knew that one day I would move on “dead or alive” and it is so good to feel alive in this decision, literally and figuratively.

“The PSF and the Python community are very lucky to have had Ee at the helm for so many years. Ee’s approach to our technical needs has been responsive and resilient as Python, PyPI, PSF staff and the community have all grown, and their dedication to the community has been unmatched and unwavering. Ee is leaving the PSF in fantastic shape, and I know I join the rest of the staff in wishing them all the best as they move on to their next endeavor.”

- Deb Nicholson, Executive Director

The health and wellbeing of the PSF and the Python community is of utmost importance to me, and was paramount as I made decisions around this transition. Given that, I am grateful to be able to commit 20% of my time over the next six months to the PSF to provide support and continuity. Over the past few weeks we’ve been working internally to set things up for success, and I look forward to meeting the new staff and seeing what they accomplish with the team at the PSF!

My participation in the Python community and contributions to the infrastructure began long before my role as a staff member. As I transition out of participating as PSF staff I look forward to continuing to participate in and contribute to this community as a volunteer, as long as I am lucky enough to have the chance.

Reuven Lerner

We’re all VCs now: The skills developers need in the AI era

Many years ago, a friend of mine described how software engineers solve problems:

- When you’re starting off, you solve problems with code.

- When you get more experienced, you solve problems with people.

- When you get even more experienced, you solve problems with money.

In other words: You can be the person writing the code, and solving the problem directly. Or you can manage people, specifying what they should do. Or you can invest in teams, telling them about the problems you want to solve, but letting them set specific goals and managing the day-to-day work.

Up until recently, I was one of those people who said, “Generative AI is great, but it’s not nearly ready to write code on our behalf.” I spoke and wrote about how AI presents an amazing learning opportunity, and how I’ve integrated AI-based learning into my courses.

Things have changed… and are still changing

I’ve recently realized that my perspective is oh-so-last year. Because in 2026, many companies and individuals are using AI to write code on their behalf. In just the last two weeks, I’ve spoken with developers who barely touch code, having AI develop it for them. And in case you’re wondering whether this only applies to freelancers, I’ve spoken with people from several large, well-known companies, who have said something similar.

And it’s not just me: Gergely Orosz, who writes the Pragmatic Engineer newsletter, recently wrote that AI-written code is a “mega-trend set to hit the tech industry,” and that a growing number of companies are already relying on AI to specify, write, and test code (https://newsletter.pragmaticengineer.com/p/when-ai-writes-almost-all-code-what).

And Simon Willison, who has been discussing and evaluating AI models in great depth for several years, has seen a sea change in model-generated code quality in just the last few months. He predicts that within six years, it’ll be as quaint for a human to type code as it is to use punch cards (https://simonwillison.net/2026/Jan/8/llm-predictions-for-2026/#6-years-typing-code-by-hand-will-go-the-way-of-punch-cards).

An inflection point in the tech industry

This is mind blowing. I still remember taking an AI course during my undergraduate years at MIT, learning about cutting-edge AI research… and finding it quite lacking. I did a bit of research at MIT’s AI Lab, and saw firsthand how hard language recognition was. To think that we can now type or talk to an AI model, and get coherent, useful results, continues to astound me, in part because I’ve seen just how far this industry has gone.

When ChatGPT first came out, it was breathtaking to see that it could code. It didn’t code that well, and often made mistakes, but that wasn’t the point. It was far better than nothing at all. In some ways, it was like the old saw about dancing bears, amazing that it could dance at all, never mind dancing well.

Over the last few years, GenAI companies have been upping their game, slowly but surely. They still get things wrong, and still give me bad coding advice and feedback. But for the most part, they’re doing an increasingly impressive job. And from everything I’m seeing, hearing, and reading, this is just the beginning.

Whether the current crop of AI companies survives their cash burn is another question entirely. But the technology itself is here to stay, much like how the dot-com crash of 2000 didn’t stop the Internet.

We’re at an inflection point in the computer industry, one that is increasingly allowing one person to create a large, complex software system without writing it directly. In other words: Over the coming years, programmers will spend less and less time writing code. They’ll spend more and more time partnering with AI systems — specifying what the code should do, what is considered success, what errors will be tolerated, and how scalable the system will be.

This is both exciting and a bit nerve-wracking.

Engineering >> Coding

The shift from “coder” to “engineer” has been going on for years. We abstracted away machine code, then assembly, then manual memory management. AI represents the biggest abstraction leap yet. Instead of abstracting away implementation details, we’re abstracting away implementation itself.

But software engineering has long been more than just knowing how to code. It’s about problem solving, about critical thinking, and about considering not just how to build something, but how to maintain it. It’s true that coding might go away as an individual discipline, much as there’s no longer much of a need for professional scribes in a world where everyone knows how to write.

However, it does mean that to succeed in the software world, it’ll no longer be enough to understand how computers work, and how to effectively instruct them with code. You’ll have to have many more skills, skills which are almost never taught to coders, because there were already so many fundamentals you needed to learn.

In this new age, creating software will be increasingly similar to being an investor. You’ll need to have a sense of the market, and what consumers want. You’ll need to know what sorts of products will potentially succeed in the market. You’ll need to set up a team that can come up with a plan, and execute on it. And then you’ll need to be able to evaluate the results. If things succeed, then great! And if not, that’s OK — you’ll invest in a number of other ventures, hoping that one or more will get the 10x you need to claim success.

If that seems like science fiction, it isn’t. I’ve seen and heard about amazing success with Claude Code from other people, and I’ve started to experience it myself, as well. You can have it set up specifications. You can have it set up tests. You can have it set up a list of tasks. You can have it work through those tasks. You can have it consult with other GenAI systems, to bring in third-party advice. And this is just the beginning.

Programming in English?

When ChatGPT was first released, many people quipped that the hottest programming language is now English. I laughed at that then, less because of the quality of AI coding, and more because most people, even given a long time, don’t have the experience and training to specify a programming project. I’ve been to too many meetings in which developers and project managers exchange harsh words because they interpreted vaguely specified features differently. And that’s with humans, who presumably understand the specifications better!

As someone said to me many years ago, computers do what you tell them to do, not what you want them to do. Engineers still make plenty of mistakes, even with their training and experience. But non-technical people, attempting to specify a software system to a GenAI model, will almost certainly fail much of the time.

So yes, technical chops will still be needed! But just as modern software engineers don’t think too much about the object code emitted by a compiler, assuming that it’ll be accurate and useful, future software engineers won’t need to check the code emitted by AI systems. (We still have some time before that happens, I expect.) The ability to break a problem into small parts, think precisely, and communicate clearly, will be more valuable than ever.

Even when AI is writing code for us, we’ll still need developers. But the best, most successful developers won’t be the ones who have mastered Python syntax. Rather, they’ll be the best architects, the clearest communicators, and the most critical thinkers.

Preparing yourself: We’re all VCs now

So, how do you prepare for this new world? How can you acquire this VC mindset toward creating software?

Learn to code: You can only use these new AI systems if you have a strong understanding of the underlying technology. AI is like a chainsaw, in that it does wonders for people with experience, but is super dangerous for the untrained. So don’t believe the hype, that you don’t need to learn to program, because we’re now in an age of AI. You still need to learn it. The language doesn’t matter nearly as much as the underlying concepts. For the time being, you will also need to inspect the code that GenAI produces, and that requires coding knowledge and experience.

Communication is key: You need to learn to communicate clearly. AI uses text, which means that the better you are at articulating your plans and thoughts, the better off you’ll be. Remember “Let me Google that for you,” the snarky way that many techies responded to people who asked for help searching the Web? Well, guess what: Searching on the Internet is a skill that demands some technical understanding. People who can’t search well aren’t dumb; they just don’t have the needed skills. Similarly, working with GenAI is a skill, one that requires far more lengthy, detailed, and precise language than Google searches ever did. Improving your writing skills will make you that much more powerful as a modern developer.

High-level problem solving: An engineering education teaches you (often the hard way) how to break problems apart into small pieces, solve each piece, and then reassemble them. But how do you do that with AI agents? That’s especially where the VC mindset comes into play: Given a budget, what is the best team of AI agents you can assemble to solve a particular problem? What role will each agent play? What skills will they need? How will they communicate with one another? How do you do so efficiently, so that you don’t burn all of your tokens in one afternoon?

Push back: When I was little, people would sometimes say that something must be true, because it was in the newspaper. That mutated to: It must be true, because I read it online. Today, people believe that Gemini is AI, so it must be true. Or unbiased. Or smart. But of course, that isn’t the case; AI tools regularly make mistakes, and you need to be willing to push back, challenge them, and bring counter-examples. Sadly, people don’t do this enough. I call this “AI-mposter syndrome,” when people believe that the AI must be smarter than they are. Just today, while reading up on the Model Context Protocol, Claude gave me completely incorrect information about how it works. Only providing counter-examples got Claude to admit that actually, I was right, and it was wrong. But it would have been very easy for me to say, “Well, Claude knows better than I do.” Confidence and skepticism will go a long way in this new world.

The more checking, the better: I’ve been using Python for a long time, but I’ve spent no small amount of time with other dynamic languages, such as Ruby, Perl, and Lisp. We’ve already seen that you can only use Python in serious production environments with good testing, and even more so with type hints. When GenAI is writing your code for you, there’s zero room for compromise on these fronts. (Heck, if it’s writing the code, and the tests, then why not go all the way with test-driven development?) If you aren’t requiring a high degree of safety checks and testing, you’re asking for trouble — and potentially big trouble. Not everyone will be this serious about code safety. There will be disasters – code that seemed fine until it wasn’t, corners that seemed reasonable to cut until they weren’t. Don’t let that be you.
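As a minimal sketch of that combination, here is a small function with type hints and a test that could be written first, in test-driven style. The name `parse_price` and its behavior are hypothetical, chosen only for illustration; the point is that the types and the test pin down what any implementation, human-written or AI-written, has to do.

```python
from decimal import Decimal, InvalidOperation


def parse_price(text: str) -> Decimal:
    """Convert a string such as '$1,299.50' into a Decimal, or raise ValueError."""
    # Strip whitespace, the dollar sign, and thousands separators.
    cleaned = text.strip().replace("$", "").replace(",", "")
    try:
        return Decimal(cleaned)
    except InvalidOperation as exc:
        # Fail loudly on messy input instead of returning a silent default.
        raise ValueError(f"not a valid price: {text!r}") from exc


def test_parse_price() -> None:
    # The expected behavior, stated before trusting any implementation.
    assert parse_price("$1,299.50") == Decimal("1299.50")
    assert parse_price(" 42 ") == Decimal("42")
    try:
        parse_price("n/a")
    except ValueError:
        pass
    else:
        raise AssertionError("expected ValueError for 'n/a'")


test_parse_price()
```

A type checker such as mypy can then verify the annotations, and the test can run on every change, whoever (or whatever) wrote the code.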

Learn how to learn: This has always been true in the computer industry; the faster you can learn new things and synthesize them into your existing knowledge, the better. But the pace has sped up considerably in the last few years. Things are changing at a dizzying pace. It’s hard to keep up. But you really have no choice but to learn about these new technologies, and how to use them effectively. It has long been common for me to learn about something one month, and then use it in a project the next month. Lately, though, I’ve been using newly learned ideas just days after coming across them.

What about juniors?

A big question over the last few years has been: If AI makes senior engineers 100x more productive, then why would companies hire juniors? And if juniors can’t find work, then how will they gain the experience to make them attractive, AI-powered seniors?

This is a real problem. I attended conferences in five countries in 2025, and young engineers in all of them were worried about finding a job, or keeping their current one. There aren’t any easy answers, especially for people who were looking forward to graduating, joining a company, gradually gaining experience, and finally becoming a senior engineer or hanging out their own shingle.

I can say that AI provides an excellent opportunity for learning, and the open-source world offers many opportunities for professional development, as well as interpersonal connections. Perhaps the age in which junior engineers gained their experience on the job is fading, and participating in open-source projects will need to be part of the university curriculum or something people do in their spare time. And pairing with an AI tool can be extremely rewarding and empowering. Much as Waze doesn’t scold you for missing a turn, AI systems are extremely polite, and patient when you make a mistake, or need to debug a problem. Learning to work with such tools, alongside working with people, might be a good way for many to improve their skills.

Standards and licensing

Beyond skill development, AI-written code raises some other issues. For example: Software is one of the few aspects of our lives that has no official licensing requirements. Doctors, nurses, lawyers, and architects, among others, can’t practice without appropriate education and certification. They’re often required to take courses throughout their career, and to get re-certified along the way.

No doubt, part of the reason for this type of certification is to maintain the power (and profits) of those inside of the system. But it also does help to ensure quality and accountability. As we transition to a world of AI-generated software, part of me wonders whether we’ll eventually need to feed the AI system a set of government-mandated codes that will ensure user safety and privacy. Or that only certified software engineers will be allowed to write the specifications fed into AI to create software.

After all, during most of human history, you could just build a house. There weren’t any standards or codes you needed to follow. You used your best judgment — and if it fell down one day, then that kinda happened, and what can you do? Nowadays, of course, there are codes that restrict how you can build, and only someone who has been certified and licensed can try to implement those codes.

I can easily imagine the pushback that a government would get for trying to impose such restrictions on software people. But as AI-generated code becomes ubiquitous in safety-critical systems, we’ll need some mechanism for accountability. Whether that’s licensing, industry standards, or something entirely new remains to be seen.

Conclusions

The last few weeks have been among the most head-spinning in my 30-year career. I see that my future as a Python trainer isn’t in danger, but is going to change — and potentially quite a bit — even in the coming months and years. I’m already rolling out workshops in which people solve problems not using Python and Pandas, but using Claude Code to write Python and Pandas on their behalf. It won’t be enough to learn how to use Claude Code, but it also won’t be enough to learn Python and Pandas. Both skills will be needed, at least for the time being. But the trend seems clear and unstoppable, and I’m both excited and nervous to see what comes down the pike.

But for now? I’m doubling down on learning how to use AI systems to write code for me. I’m learning how to get them to interact, to help one another, and to critique one another. I’m thinking of myself as a VC, giving “smart money” to a bunch of AI agents that have assembled to solve a particular problem.

And who knows? In the not-too-distant future, an updated version of my friend’s statement might look like this:

- When you’re starting off, you solve problems with code.

- When you get more experienced, you solve problems with an AI agent.

- When you get even more experienced, you solve problems with teams of AI agents.

The post We’re all VCs now: The skills developers need in the AI era appeared first on Reuven Lerner.

Real Python

How to Integrate Local LLMs With Ollama and Python

Integrating local large language models (LLMs) into your Python projects using Ollama is a great strategy for improving privacy, reducing costs, and building offline-capable AI-powered apps.

Ollama is an open-source platform that makes it straightforward to run modern LLMs locally on your machine. Once you’ve set up Ollama and pulled the models you want to use, you can connect to them from Python using the ollama library.
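As a rough sketch of what that connection can look like, the snippet below sends a single prompt through the `ollama` package's `chat()` function. The helper names `build_messages` and `ask_local_model` are hypothetical, and the code assumes the package is installed (`python -m pip install ollama`), the Ollama service is running, and the `llama3.2:latest` model has already been pulled.

```python
from __future__ import annotations


def build_messages(prompt: str, system: str | None = None) -> list[dict[str, str]]:
    """Build the role/content message list that the chat call expects."""
    messages = []
    if system:
        # An optional system message sets the model's overall behavior.
        messages.append({"role": "system", "content": system})
    messages.append({"role": "user", "content": prompt})
    return messages


def ask_local_model(prompt: str, model: str = "llama3.2:latest") -> str:
    """Send one prompt to a locally running Ollama model and return its reply."""
    # Imported here so the module still loads when the SDK isn't installed.
    import ollama

    response = ollama.chat(model=model, messages=build_messages(prompt))
    return response["message"]["content"]
```

With the service up and the model available, calling `ask_local_model("Explain list comprehensions in one sentence.")` should return the model's reply as a string.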

In this tutorial, you’ll integrate local LLMs into your Python projects using the Ollama platform and its Python SDK.

You’ll first set up Ollama and pull a couple of LLMs. Then, you’ll learn how to use chat, text generation, and tool calling from your Python code. These skills will enable you to build AI-powered apps that run locally, improving privacy and cost efficiency.

Get Your Code: Click here to download the free sample code that you’ll use to integrate LLMs With Ollama and Python.

Take the Quiz: Test your knowledge with our interactive “How to Integrate Local LLMs With Ollama and Python” quiz. You’ll receive a score upon completion to help you track your learning progress:

Interactive Quiz

How to Integrate Local LLMs With Ollama and Python

Check your understanding of using Ollama with Python to run local LLMs, generate text, chat, and call tools for private, offline apps.

Prerequisites

To work through this tutorial, you’ll need the following resources and setup:

- Ollama installed and running: You’ll need Ollama to use local LLMs. You’ll get to install it and set it up in the next section.

- Python 3.8 or higher: You’ll be using Ollama’s Python software development kit (SDK), which requires Python 3.8 or higher. If you haven’t already, install Python on your system to fulfill this requirement.

- Models to use: You’ll use llama3.2:latest and codellama:latest in this tutorial. You’ll download them in the next section.

- Capable hardware: You need relatively powerful hardware to run Ollama’s models locally, as they may require considerable resources, including memory, disk space, and CPU power. You may not need a GPU for this tutorial, but local models will run much faster if you have one.

With these prerequisites in place, you’re ready to connect local models to your Python code using Ollama.

Step 1: Set Up Ollama, Models, and the Python SDK

Before you can talk to a local model from Python, you need Ollama running and at least one model downloaded. In this step, you’ll install Ollama, start its background service, and pull the models you’ll use throughout the tutorial.

Get Ollama Running

To get started, navigate to Ollama’s download page and grab the installer for your current operating system. You’ll find installers for Windows 10 or newer and macOS 14 Sonoma or newer. Run the appropriate installer and follow the on-screen instructions. For Linux users, the installation process differs slightly, as you’ll learn soon.

On Windows, Ollama will run in the background after installation, and the CLI will be available for you. If this doesn’t happen automatically for you, then go to the Start menu, search for Ollama, and run the app.

On macOS, the app manages the CLI and setup details, so you just need to launch Ollama.app.

If you’re on Linux, install Ollama with the following command:

$ curl -fsSL https://ollama.com/install.sh | sh

Once the process is complete, you can verify the installation by running:

$ ollama -v

If this command works, then the installation was successful. Next, start Ollama’s service by running the command below:

$ ollama serve

That’s it! You’re now ready to start using Ollama on your local machine. In some Linux distributions, such as Ubuntu, this final command may not be necessary, as Ollama may start automatically when the installation is complete. In that case, running the command above will result in an error.
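If you aren't sure whether the service is already running, one way to find out from Python is to check whether anything answers on Ollama's local HTTP port. The sketch below assumes the default address, http://localhost:11434; the function name `is_ollama_running` is hypothetical, and you'd need to adjust the URL if your setup listens elsewhere.

```python
import urllib.error
import urllib.request


def is_ollama_running(
    base_url: str = "http://localhost:11434", timeout: float = 2.0
) -> bool:
    """Return True if an HTTP server answers with 200 at base_url, else False."""
    try:
        with urllib.request.urlopen(base_url, timeout=timeout) as response:
            return response.status == 200
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout, or similar: nothing usable is listening.
        return False
```

If this returns True, there's probably no need to run ollama serve again. Note that it only confirms that something responds at that address, not that your models are ready.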

Read the full article at https://realpython.com/ollama-python/ »

[ Improve Your Python With 🐍 Python Tricks 💌 – Get a short & sweet Python Trick delivered to your inbox every couple of days. >> Click here to learn more and see examples ]

Quiz: How to Integrate Local LLMs With Ollama and Python

In this quiz, you’ll test your understanding of How to Integrate Local LLMs With Ollama and Python.

By working through this quiz, you’ll revisit how to set up Ollama, pull models, and use chat, text generation, and tool calling from Python.

You’ll connect to local models through the ollama Python library and practice sending prompts and handling responses. You’ll also see how local inference can improve privacy and cost efficiency while keeping your apps offline-capable.

Reuven Lerner

Build YOUR data dashboard — join my next 8-week HOPPy studio cohort

Want to analyze data? Good news: Python is the leading language in the data world. Libraries like NumPy and Pandas make it easy to load, clean, analyze, and visualize your data.

But wait: If your colleagues aren’t coders, how can they explore your data?

The answer: A data dashboard, which uses UI elements (e.g., sliders, text fields, and checkboxes). Your colleagues get a custom, dynamic app, rather than static graphs, charts, and tables.

One of the newest and hottest ways to create a data dashboard in Python is Marimo. Among other things, Marimo offers UI widgets, real-time updating, and easy distribution. This makes it a great choice for creating a data dashboard.

In the upcoming (4th) cohort of HOPPy (Hands-On Projects in Python), you’ll learn to create a data dashboard. You’ll make all of the important decisions, from the data set to the design. But you’ll do it all under my personal mentorship, along with a small community of other learners.

The course starts on Sunday, February 1st, and will meet every Sunday for eight weeks. When you’re done, you’ll have a dashboard you can share with colleagues, or just add to your personal portfolio.

If you’ve taken Python courses, but want to sink your teeth into a real-world project, then HOPPy is for you. Among other things:

- Go beyond classroom learning: You’ll learn by doing, creating your own personal product

- Live instruction: Our cohort will meet, live, for two hours every Sunday to discuss problems you’ve had and provide feedback.

- You decide what to do: This isn’t a class in which the instructor dictates what you’ll create. You can choose whatever data set you want. But I’ll be there to support and advise you every step of the way.

- Learn about Marimo: Get experience with one of the hottest new Python technologies.

- Learn about modern distribution: Use Molab and WASM to share your dashboard with others

Want to learn more? Join me for an info session on Monday, January 26th. You can register here: https://us02web.zoom.us/webinar/register/WN_YbmUmMSgT2yuOqfg8KXF5A

Ready to join right now? Get full details, and sign up, at https://lernerpython.com/hoppy-4.

Questions? Just reply to this e-mail. It’ll go straight to my inbox, and I’ll answer you as quickly as I can.

I look forward to seeing you in HOPPy 4!

The post Build YOUR data dashboard — join my next 8-week HOPPy studio cohort appeared first on Reuven Lerner.

Seth Michael Larson

mGBA → Dolphin not working? You need a GBA BIOS

The GBA emulator “mGBA” supports emulating the Game Boy Advance Link Cable (not to be confused with the Game Boy Advance /Game/ Link Cable) and connecting to a running Dolphin emulator instance. I am interested in this functionality for Legend of Zelda: Four Swords Adventures, specifically the “Navi Trackers” game mode that was announced for all regions but was only released in Japan and Korea. In the future I want to explore the English language patches.

After reading the documentation to connect the two emulators I configured the controllers to be “GBA (TCP)” in Dolphin and ensured that Dolphin had the permissions it needed to do networking (Dolphin is installed as a Flatpak). I selected “Connect” on mGBA from the “Connect to Dolphin” popup screen and there was zero feedback... no UI changes, errors, or success messages. Hmmm...

I found out in a random Reddit comment section that a GBA BIOS was needed to connect to Dolphin, so I set off to legally obtain the BIOSes from my hardware. I opted to use the BIOS-dump ROM developed by the mGBA team to dump the BIOS from my Game Boy Advance SP and DS Lite.

Below is a guide on how to build the BIOS ROM from source on Ubuntu 24.04, and then dump GBA BIOSes. Please note you'll likely need a GBA flash cartridge for running homebrew on your Game Boy Advance. I used an EZ-Flash Omega flash cartridge, but I've heard Everdrive GBA is also popular.

Installing devkitARM on Ubuntu 24.04

To build this ROM from source you'll need devkitARM. If you already have devkitARM installed you can skip these steps.

The devkitPro team supplies an easy script for installing devkitPro toolsets, but unfortunately the apt.devkitpro.org domain appears to be behind an aggressive “bot” filter right now, so their instructions to use wget are not working as written. Instead, download their GPG key with a browser and then run the commands yourself:

    # Run these as root (or prefix each command with sudo).
    apt-get install apt-transport-https
    # Install the signing key; assumes devkitpro-pub.gpg is in the current directory.
    if ! [ -f /usr/local/share/keyring/devkitpro-pub.gpg ]; then
      mkdir -p /usr/local/share/keyring/
      mv devkitpro-pub.gpg /usr/local/share/keyring/
    fi
    # Register the devkitPro apt repository if it is not already present.
    if ! [ -f /etc/apt/sources.list.d/devkitpro.list ]; then
      echo "deb [signed-by=/usr/local/share/keyring/devkitpro-pub.gpg] https://apt.devkitpro.org stable main" > /etc/apt/sources.list.d/devkitpro.list
    fi
    apt-get update
    apt-get install devkitpro-pacman

Once you've installed devkitPro pacman (for Ubuntu: dkp-pacman) you can install the GBA development tools package group:

    dkp-pacman -S gba-dev

After this you can set the DEVKITARM environment variable within your shell profile to /opt/devkitpro/devkitARM. Now you should be ready to build the GBA BIOS dumping ROM.

Building the bios-dump ROM

Once the devkitARM toolkit is installed, the next step is much easier. You basically download the source, run make with the DEVKITARM environment variable set properly, and if all the tools are installed you'll quickly have your ROM:

    apt-get install build-essential curl unzip
    curl -L -o bios-dump.zip \
      https://github.com/mgba-emu/bios-dump/archive/refs/heads/master.zip
    unzip bios-dump.zip
    cd bios-dump-master
    export DEVKITARM=/opt/devkitpro/devkitARM/
    make

You should end up with a GBA ROM file titled bios-dump.gba. Add this .gba file to your microSD card for the flash cartridge. Boot up the flash cartridge on the device whose BIOS you are trying to dump, and after boot-up the screen should quickly show a success message along with checksums of the BIOS file. As noted in the mGBA bios-dump README, there are two GBA BIOSes:

- sha256:fd2547: GBA, GBA SP, GBA SP “AGS-101”, GBA Micro, and Game Boy Player.

- sha256:782eb3: DS, DS Lite, and all 3DS variants.

I own a GBA SP, a Game Boy Player, and a DS Lite, so I was able to dump three different GBA BIOSes, two of which are identical:

    sha256sum *.bin
    fd2547... gba_sp_bios.bin
    fd2547... gba_gbp_bios.bin
    782eb3... gba_ds_bios.bin
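The same check can be scripted. The following is a minimal sketch, not part of the bios-dump project: it hashes a dump with Python's hashlib and compares the digest against the two checksum prefixes quoted above. The function names and the file name in the usage comment are illustrative assumptions. Only six hex digits of each checksum are given here, so a match is a sanity check rather than a full verification.

```python
import hashlib
from pathlib import Path

# Checksum prefixes as quoted in this post; the full digests are not shown here.
KNOWN_PREFIXES = {
    "fd2547": "GBA family (GBA, GBA SP, GBA Micro, Game Boy Player)",
    "782eb3": "DS family (DS, DS Lite, 3DS variants)",
}


def sha256_of(path):
    """Return the hex SHA-256 digest of the file at `path`."""
    return hashlib.sha256(Path(path).read_bytes()).hexdigest()


def identify_bios(path):
    """Return the matching BIOS family, or None if the prefix is unknown."""
    digest = sha256_of(path)
    for prefix, family in KNOWN_PREFIXES.items():
        if digest.startswith(prefix):
            return family
    return None


# Example (hypothetical file name):
# print(identify_bios("gba_sp_bios.bin"))
```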

From here I was able to configure mGBA with a GBA BIOS file (Tools→Settings→BIOS) and successfully connect to Dolphin running four instances of mGBA; one for each of the Links!

💚❤️💙💜

mGBA probably could have shown an error message when the “connecting” phase requires a BIOS. Looks like this behavior has been known since 2021.

Thanks for keeping RSS alive! ♥

January 20, 2026

PyCoder’s Weekly

Issue #718: pandas 3.0, deque, tprof, and More (Jan. 20, 2026)

#718 – JANUARY 20, 2026


What’s New in pandas 3.0

Learn what’s new in pandas 3.0: pd.col expressions for cleaner code, Copy-on-Write for predictable behavior, and PyArrow-backed strings for 5-10x faster operations.

CODECUT.AI • Shared by Khuyen Tran

Python’s deque: Implement Efficient Queues and Stacks

Use a Python deque to efficiently append and pop elements from both ends of a sequence, build queues and stacks, and set maxlen for history buffers.

REAL PYTHON
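A quick sketch of the operations this blurb mentions, written for illustration and not taken from the linked tutorial (the variable names and sample values are arbitrary):

```python
from collections import deque

# Queue (FIFO): append on the right, pop from the left.
queue = deque(["a", "b"])
queue.append("c")
first = queue.popleft()  # "a"

# Stack (LIFO): append and pop on the same end.
stack = deque()
stack.append(1)
stack.append(2)
top = stack.pop()  # 2

# History buffer: with maxlen set, the oldest item is discarded when full.
history = deque(maxlen=3)
for page in ["home", "docs", "blog", "about"]:
    history.append(page)

print(list(history))  # ['docs', 'blog', 'about']
```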

B2B Authentication for any Situation - Fully Managed or BYO

What your sales team needs to close deals: multi-tenancy, SAML, SSO, SCIM provisioning, passkeys…What you’d rather be doing: almost anything else. PropelAuth does it all for you, at every stage. →

PROPELAUTH sponsor

Introducing tprof, a Targeting Profiler

Adam has written tprof, a targeting profiler for Python 3.12+. This article introduces you to the tool and explains why he wrote it.

ADAM JOHNSON

Articles & Tutorials

Anthropic Invests $1.5M in the PSF

Anthropic has entered a two-year partnership with the PSF, contributing $1.5 million. The investment will focus on Python ecosystem security including advances to CPython and PyPI.

PYTHON SOFTWARE FOUNDATION

The Coolest Feature in Python 3.14

Savannah has written a debugging tool called debugwand that helps you access Python applications running in Kubernetes and Docker containers using Python 3.14’s sys.remote_exec() function.

SAVANNAH OSTROWSKI

AI Code Review with Comments You’ll Actually Implement

Unblocked is the AI code review that surfaces real issues and meaningful feedback instead of flooding your PRs with stylistic nitpicks and low-value comments. “Finally, a tool that surfaces context only someone with a full view of the codebase could provide.” - Senior developer, Clio →

UNBLOCKED sponsor

Avoiding Duplicate Objects in Django Querysets

When filtering Django querysets across relationships, you can easily end up with duplicate objects in your results. Learn why this happens and the best ways to avoid it.

JOHNNY METZ

diskcache: Your Secret Python Perf Weapon

Talk Python interviews Vincent Warmerdam and they discuss DiskCache, an SQLite-based caching mechanism that doesn’t require you to spin up extra services like Redis.

TALK PYTHON podcast

How to Create a Django Project

Learn how to create a Django project and app in clear, guided steps. Use it as a reference for any future Django project and tutorial you’ll work on.

REAL PYTHON

Get Job-Ready With Live Python Training

Real Python’s 2026 cohorts are open. Python for Beginners teaches fundamentals the way professional developers actually use them. Intermediate Python Deep Dive goes deeper into decorators, clean OOP, and Python’s object model. Live instruction, real projects, expert feedback. Learn more at realpython.com/live →

REAL PYTHON sponsor

Intro to Object-Oriented Programming (OOP) in Python

Learn Python OOP fundamentals fast: master classes, objects, and constructors with hands-on lessons in this beginner-friendly video course.

REAL PYTHON course

Fun With Mypy: Reifying Runtime Relations on Types

This post describes how to implement a safer version of typing.cast which guarantees a cast type is also an appropriate sub-type.

LANGSTON BARRETT

How to Type Hint a Decorator in Python

Writing a decorator can be a little tricky on its own, and adding type hints makes it a little harder. This article shows you how.

MIKE DRISCOLL

How to Integrate ChatGPT’s API With Python Projects

Learn how to use the ChatGPT Python API with the openai library to build AI-powered features in your Python applications.

REAL PYTHON

Raw String Literals in Python

Exploring the pitfalls of raw string literals in Python and why backslash can still escape some things in raw mode.

SUBSTACK.COM • Shared by Vivis Dev

Need a Constant in Python? Enums Can Come in Useful

Python doesn’t have constants, but it does have enums. Learn when you might want to use them in your code.

STEPHEN GRUPPETTA

Projects & Code

Events

Weekly Real Python Office Hours Q&A (Virtual)

January 21, 2026

REALPYTHON.COM

Python Leiden User Group

January 22, 2026

PYTHONLEIDEN.NL

PyDelhi User Group Meetup

January 24, 2026

MEETUP.COM

PyLadies Amsterdam: Robotics Beginner Class With MicroPython

January 27, 2026

MEETUP.COM

Python Sheffield

January 27, 2026

GOOGLE.COM

Python Southwest Florida (PySWFL)

January 28, 2026

MEETUP.COM

Happy Pythoning!

This was PyCoder’s Weekly Issue #718.



Real Python

uv vs pip: Python Packaging and Dependency Management

When it comes to Python package managers, the choice often comes down to uv vs pip. You may choose pip for out-of-the-box availability, broad compatibility, and reliable ecosystem support. In contrast, uv is worth considering if you prioritize fast installs, reproducible environments, and clean uninstall behavior, or if you want to streamline workflows for new projects.

In this video course, you’ll compare both tools. To keep this comparison meaningful, you’ll focus on the overlapping features, primarily package installation and dependency management.


PyCharm

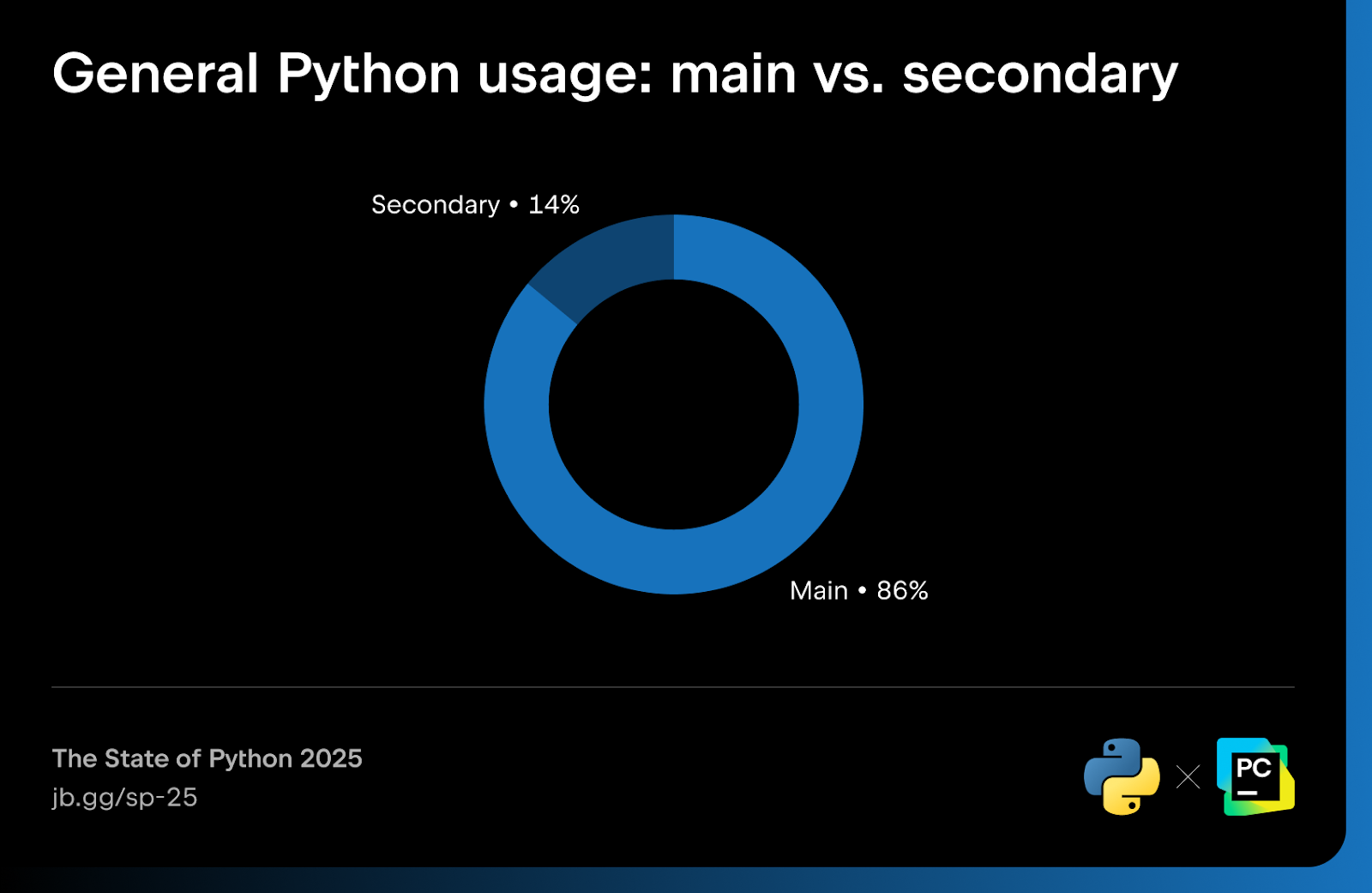

While other programming languages come and go, Python has stood the test of time and firmly established itself as a top choice for developers of all levels, from beginners to seasoned professionals.

Whether you’re working on intelligent systems or data-driven workflows, Python has a pivotal role to play in how your software is built, scaled, and optimized.

Many surveys, including our Developer Ecosystem Survey 2025, confirm Python’s continued popularity. The real question is why developers keep choosing it, and that’s what we’ll explore.

Whether you’re choosing your first language or building production-scale services, this post will walk you through why Python remains a top choice for developers.

How popular is Python in 2025?

In our Developer Ecosystem Survey 2025, Python ranks as the second most-used programming language in the last 12 months, with 57% of developers reporting that they use it.

More than a third (34%) said Python is their primary programming language. This places it ahead of JavaScript, Java, and TypeScript in terms of primary use. It’s also performing well despite fierce competition from newer systems and niche domain tools.

These stats tell a story of sustained relevance across diverse developer segments, from seasoned backend engineers to first-time data analysts.

This continued success is down to Python’s ability to grow with you. It doesn’t just serve as a first step; it continues adding value in advanced environments as you gain skills and experience throughout your career.

Let’s explore why Python remains a popular choice in 2025.

1. Dominance in AI and machine learning

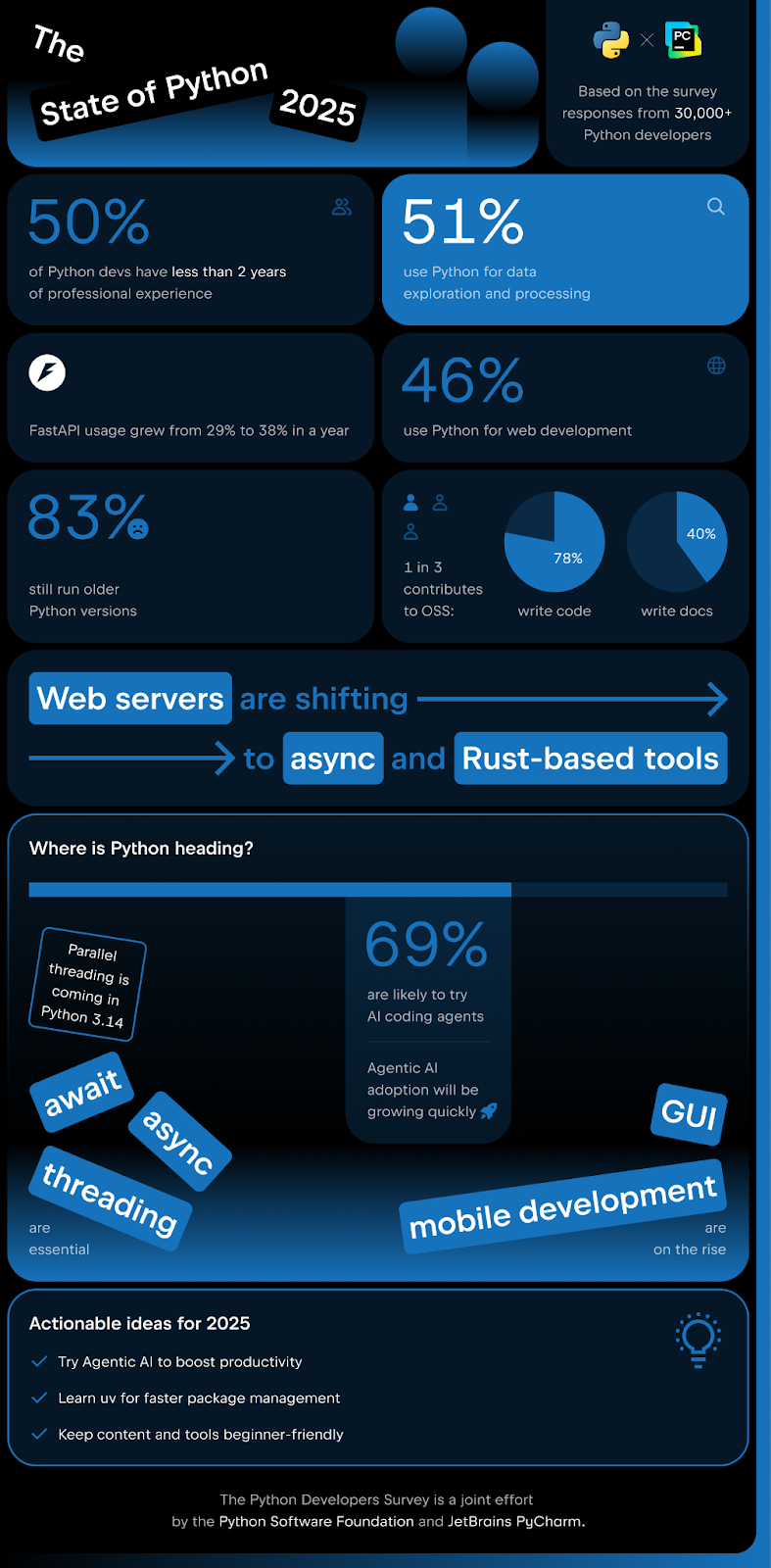

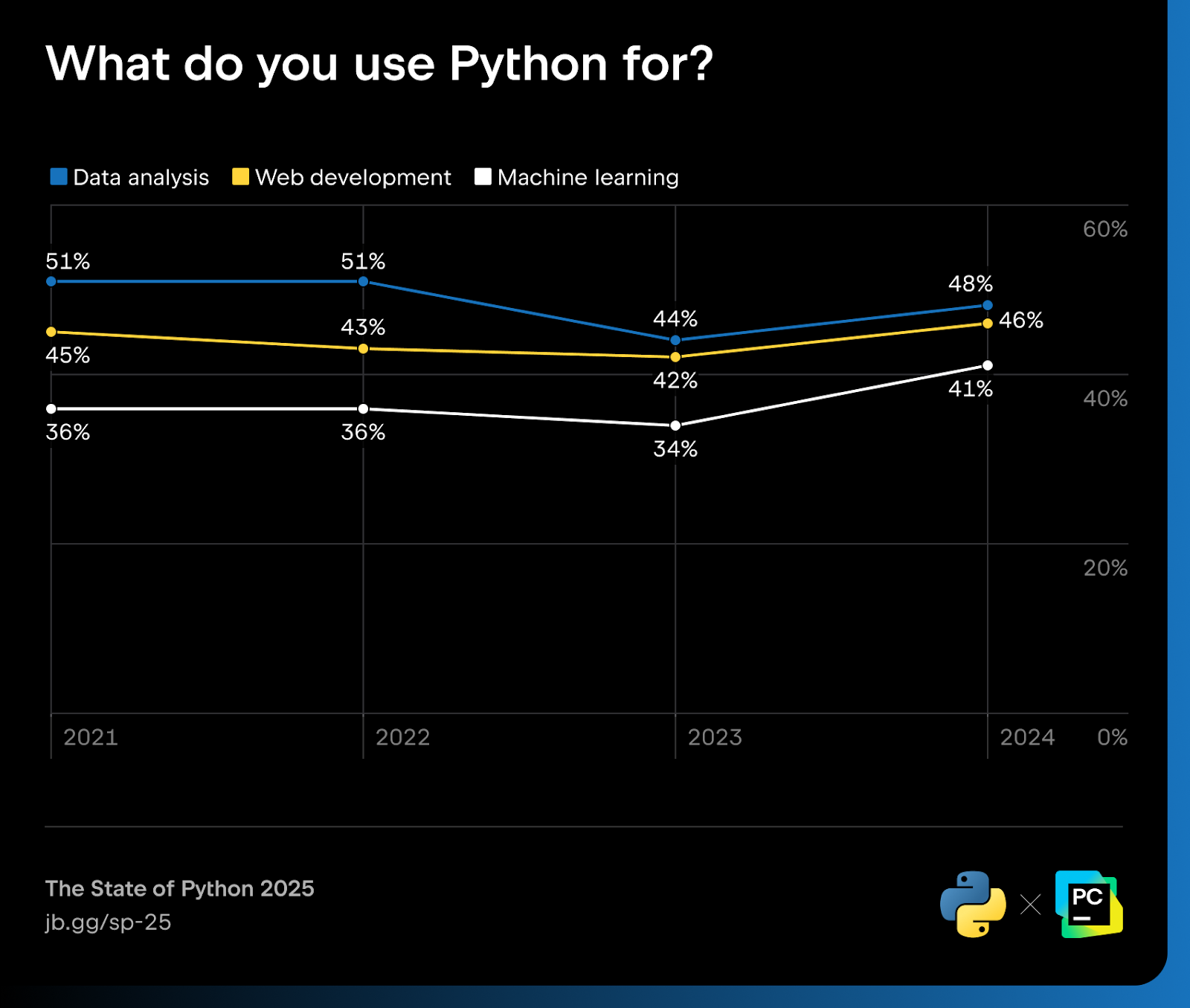

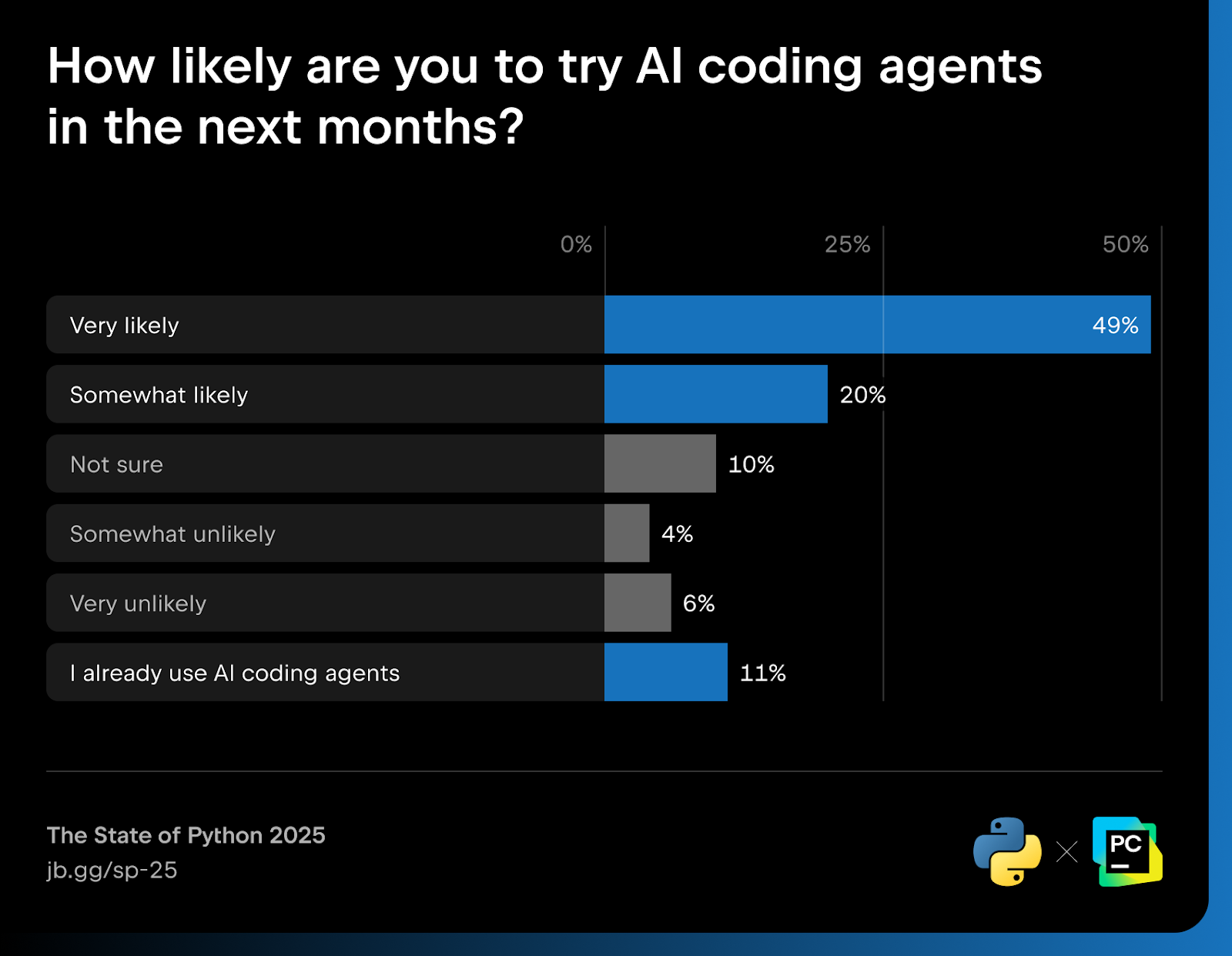

Our recently released report, The State of Python 2025, shows that 41% of Python developers use the language specifically for machine learning.

This is because Python drives innovation in areas like natural language processing, computer vision, and recommendation systems.

Python’s strength in this area comes from the fact that it offers support at every stage of the process, from prototyping to production. It also integrates into machine learning operations (MLOps) pipelines with minimal friction and high flexibility.

One of the most significant reasons for Python’s popularity is its syntax, which is expressive, readable, and dynamic. This allows developers to write training loops, manipulate tensors, and orchestrate workflows without boilerplate friction.

However, it’s Python’s ecosystem that makes it indispensable.

Core frameworks include:

- PyTorch – for research-oriented deep learning

- TensorFlow – for production deployment and scalability

- Keras – for rapid prototyping

- scikit-learn – for classical machine learning

- Hugging Face Transformers – for natural language processing and generative models

These frameworks are mature, well-documented, and interoperable, benefitting from rapid open-source development and extensive community contributions. They support everything from GPU acceleration and distributed training to model export and quantization.

Python also integrates cleanly across the machine learning (ML) pipeline, from data preprocessing with pandas and NumPy to model serving via FastAPI or Flask to inference serving for LLMs with vLLM.

It all comes together to let you deliver a working AI solution without ever really having to work outside Python.

2. Strength in data science and analytics

From analytics dashboards to ETL scripts, Python’s flexibility drives fast, interpretable insights across industries. It’s particularly adept at handling complex data, such as time-series analyses.

The State of Python 2025 reveals that 51% of respondents are involved in data exploration and processing. This includes tasks like:

- Data extraction, transformation, and loading (ETL)

- Exploratory data analysis (EDA)

- Statistical and predictive modeling

- Visualization and reporting

- Real-time data analysis

- Communication of insights

Core libraries such as pandas, NumPy, Matplotlib, Plotly, and Jupyter Notebook form a mature ecosystem that’s supported by strong documentation and active community development.

Python offers a unique balance. It’s accessible enough for non-engineers, but powerful enough for production-grade pipelines. It also integrates with cloud platforms, supports multiple data formats, and works seamlessly with SQL and NoSQL data stores.

3. Syntax that’s simple and scalable

Python’s most visible strength remains its readability. Developers routinely cite Python’s low barrier to entry and clean syntax as reasons for initial adoption and longer-term loyalty. In Python, even model training syntax reads like plain English:

    def train(model):
        for item in model.data:
            model.learn(item)

Code snippets like this require no special decoding. That clarity isn’t just beginner-friendly; it also lowers maintenance costs, shortens onboarding time, and improves communication across mixed-skill teams.
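To actually run the snippet above you need some object with a `data` attribute and a `learn()` method. The following is a minimal sketch in which a hypothetical `RunningMean` class stands in for a real model. The class and its fields are assumptions made for illustration and do not come from any library.

```python
class RunningMean:
    """Hypothetical stand-in for a model: it 'learns' the mean of its data."""

    def __init__(self, data):
        self.data = data
        self.count = 0
        self.mean = 0.0

    def learn(self, item):
        # Incremental mean update: fold one more observation into the average.
        self.count += 1
        self.mean += (item - self.mean) / self.count


def train(model):
    for item in model.data:
        model.learn(item)


model = RunningMean([2.0, 4.0, 6.0])
train(model)
print(model.mean)  # 4.0
```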

This readability brings practical advantages. Teams spend less time deciphering logic and more time improving functionality. Bugs surface faster. Reviews run more smoothly. And non-developers can often read Python scripts without assistance.

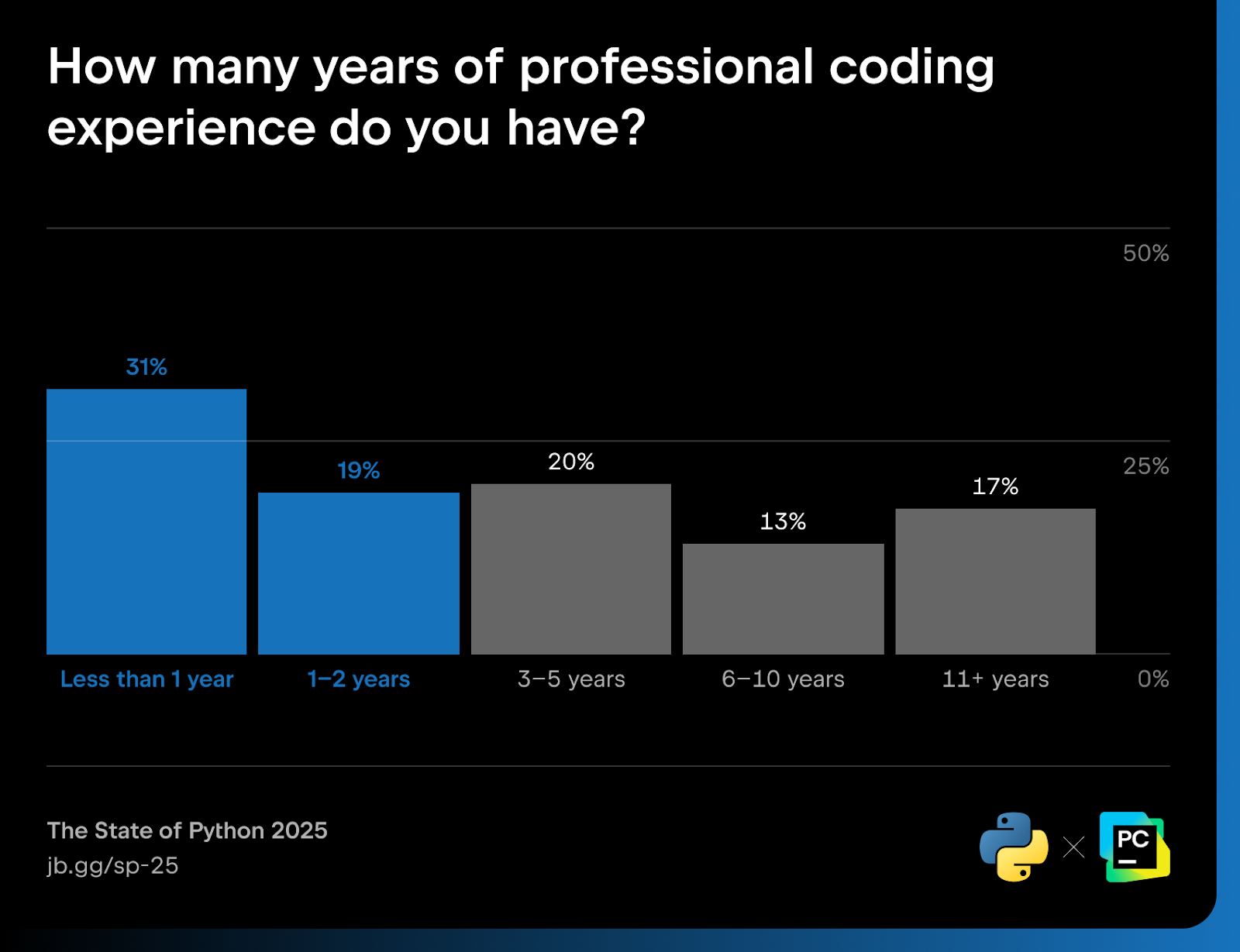

The State of Python 2025 revealed that 50% of respondents had less than two years of total coding experience. Over a third (39%) had been coding in Python for two years or less, even in hobbyist or educational settings.

This is where Python really stands out. Though its simple syntax makes it an ideal entry point for new coders, it scales with users, which means retention rates remain high. As projects grow in complexity, Python’s simplicity becomes a strength, not a limitation.

Add to this the fact that Python supports multiple programming paradigms (procedural, object-oriented, and functional), and it becomes clear why readability is important. It’s what enables developers to move between approaches without friction.
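As a small illustration of that point, here is one task (summing a list of amounts) written in each of the three styles. This is a sketch with made-up data and a hypothetical `Basket` class, not code from the survey or any library.

```python
from functools import reduce

orders = [12.5, 40.0, 7.25]

# Procedural: an explicit loop and an accumulator.
total_procedural = 0.0
for amount in orders:
    total_procedural += amount


# Object-oriented: state and behavior bundled in a class.
class Basket:
    def __init__(self, amounts):
        self.amounts = list(amounts)

    def total(self):
        return sum(self.amounts)


total_oo = Basket(orders).total()

# Functional: fold the list with a pure function.
total_functional = reduce(lambda acc, amount: acc + amount, orders, 0.0)

print(total_procedural, total_oo, total_functional)
```

All three produce the same total, and each reads naturally to someone who knows only one of the styles.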

4. A mature and versatile ecosystem

Python’s power lies in its vast network of libraries that span nearly every domain of modern software development.

Our survey shows that developers rely on Python for everything from web applications and API integration to data science, automation, and testing.

Its deep, actively maintained toolset means you can use Python at all stages of production.

Here’s a snapshot of Python’s core domains and the main libraries developers reach for:

| Domain | Popular Libraries |
| --- | --- |
| Web development | Django, Flask, FastAPI |
| AI and ML | TensorFlow, PyTorch, scikit-learn, Keras |
| Testing | pytest, unittest, Hypothesis |
| Automation | Click, APScheduler, Rich |
| Data science | pandas, NumPy, Plotly, Matplotlib |

This breadth translates to real-world agility. Developers can move between back-end APIs and machine learning pipelines without changing language or tooling. They can prototype with high-level wrappers and drop to lower-level control when needed.

Critically, Python’s packaging and dependency management systems like pip, conda, and poetry support modular development and reproducible environments. Combined with frameworks like FastAPI for APIs, pytest for testing, and pandas for data handling, Python offers unrivaled scalability.

5. Community support and shared knowledge

Python’s enduring popularity owes much to its global, engaged developer community.

From individual learners to enterprise teams, Python users benefit from open forums, high-quality tutorials, and a strong culture of mentorship. The community isn’t just helpful; it’s fast-moving and inclusive, fostering a welcoming environment for developers of all levels.

Key pillars include:

- The Python Software Foundation, which supports education, events, and outreach.

- High activity on Stack Overflow, ensuring quick answers to real-world problems, and active participation in open-source projects and local user groups.

- A rich landscape of resources (Real Python, Talk Python, and PyCon), serving both beginners and professionals.

This network doesn’t just solve problems; it also shapes the language’s evolution. Python’s ecosystem is sustained by collaboration, continual refinement, and shared best practices.

When you choose Python, you tap into a knowledge base that grows with the language and with you over time.

6. Cross-domain versatility

Python’s reach is not limited to AI and ML or data science and analytics. It’s equally at home in automation, scripting, web APIs, data workflows, and systems engineering. Its ability to move seamlessly across platforms, domains, and deployment targets makes it the default language for multipurpose development.

The State of Python 2025 shows just how broadly developers rely on Python:

| Functionality | Percentage of Python users |
| --- | --- |
| Data analysis | 48% |
| Web development | 46% |
| Machine learning | 41% |
| Data engineering | 31% |
| Academic research | 27% |
| DevOps and systems administration | 26% |

That spread illustrates Python’s domain elasticity. The same language that powers model training can also automate payroll tasks, control scientific instruments, or serve REST endpoints. Developers can consolidate tools, reduce context-switching, and streamline team workflows.

Python’s platform independence (Windows, Linux, macOS, cloud, and browser) reinforces this versatility. Add in a robust packaging ecosystem and consistent cross-library standards, and the result is a language equally suited to both rapid prototyping and enterprise production.

Few languages match Python’s reach, and fewer still offer such seamless continuity. From frontend interfaces to backend logic, Python gives developers one cohesive environment to build and ship full solutions.

That completeness is part of the reason people stick with it. Once you’re in, you rarely need to reach for anything else.

Python in the age of intelligent development

As software becomes more adaptive, predictive, and intelligent, Python is strongly positioned to retain its popularity.

Its abilities in areas like AI, ML, and data handling, as well as its mature libraries, make it a strong choice for systems that evolve over time.

Python’s popularity comes from its ability to easily scale across your projects and platforms. It continues to be a great choice for developers of all experience levels and across projects of all sizes, from casual automation scripts to enterprise AI platforms.

And when working with PyCharm, Python is an intelligent, fast, and clean option.

For a deeper dive, check out The State of Python 2025 by Michael Kennedy, Python expert and host of the Talk Python to Me podcast.

Michael analyzed over 30,000 responses from our Python Developers Survey 2024, uncovering fascinating insights and identifying the latest trends.

Whether you’re a beginner or seasoned developer, The State of Python 2025 will give you the inside track on where the language is now and where it’s headed.

As tools like Astral’s uv show, Python’s evolution is far from over, despite its relative maturity. With a growing ecosystem and proven staying power, it’s well-positioned to remain a popular choice for developers for years to come.

Whether you’re building APIs, dashboards, or machine learning pipelines, choosing the right framework can make or break your project.

Every year, we survey thousands of Python developers to help you understand how the ecosystem is evolving, from tooling and languages to frameworks and libraries. Our insights from the State of Python 2025 offer a snapshot of what frameworks developers are using in 2025.

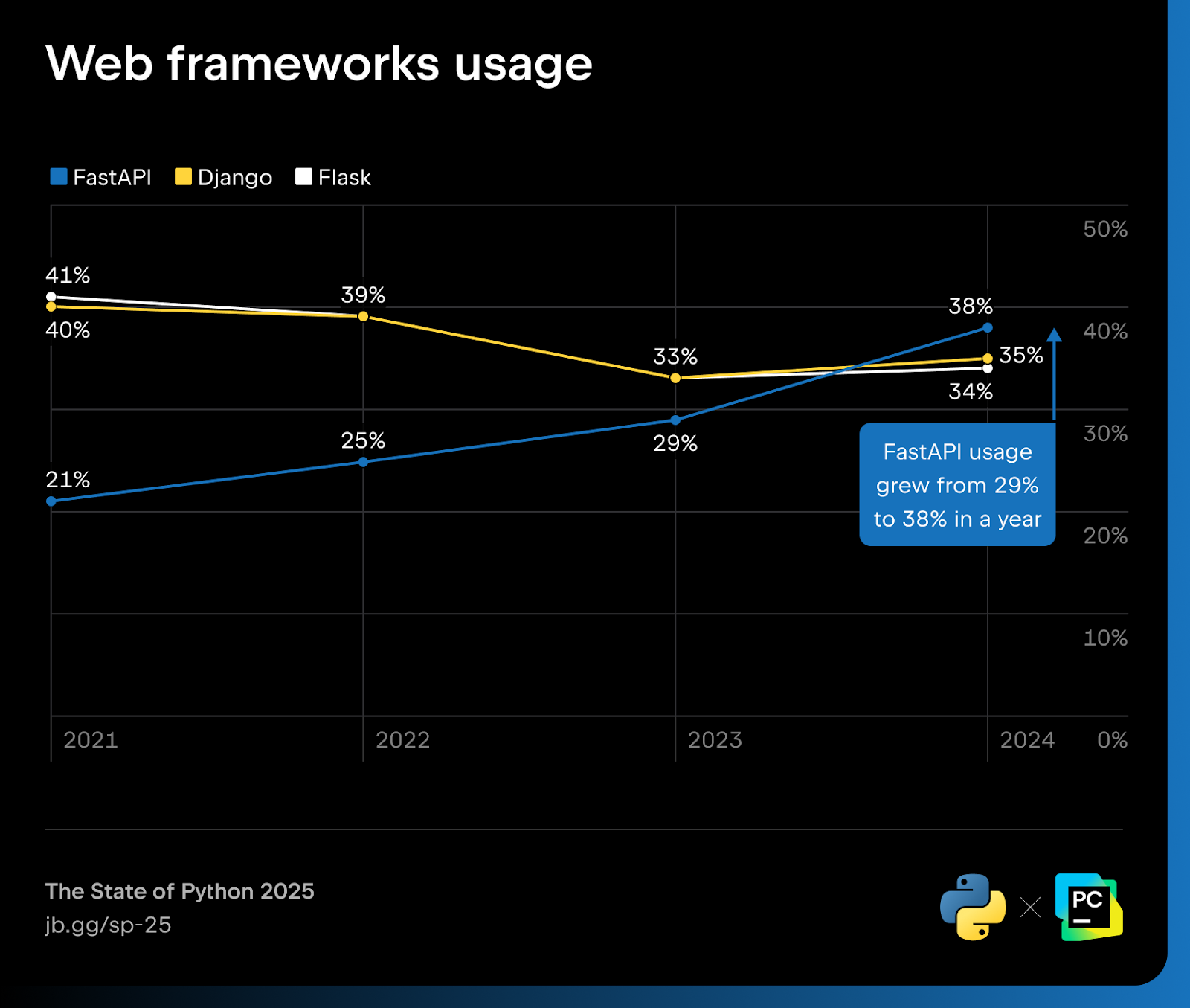

In this article, we’ll look at the most popular Python frameworks and libraries. While some long-standing favorites like Django and Flask remain strong, newer contenders like FastAPI are rapidly gaining ground in areas like AI, ML, and data science.

1. FastAPI

2024 usage: 38% (+9% from 2023)

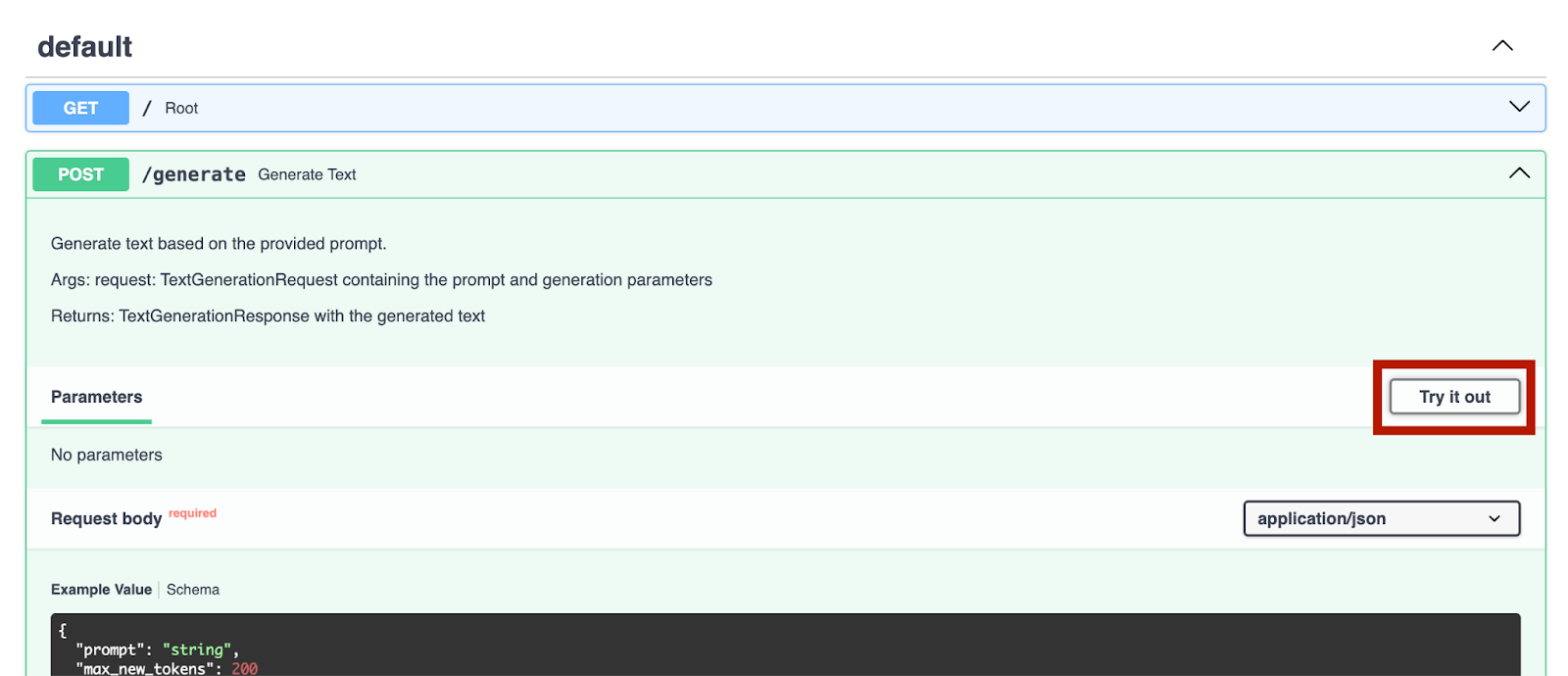

Top of the table is FastAPI, a modern, high-performance web framework for building APIs with Python 3.8+. It was designed to combine Python’s type hinting, asynchronous programming, and OpenAPI standards into a single, developer-friendly package.

Built on top of Starlette (for the web layer) and Pydantic (for data validation), FastAPI offers automatic request validation, serialization, and interactive documentation, all with minimal boilerplate.

FastAPI is ideal for teams prioritizing speed, simplicity, and standards. It’s especially popular among both web developers and data scientists.

FastAPI advantages

- Great for AI/ML: FastAPI is widely used to deploy machine learning models in production. It integrates well with libraries like TensorFlow, PyTorch, and Hugging Face, and supports async model inference pipelines for maximum throughput.

- Asynchronous by default: Built on ASGI, FastAPI supports native async/await, making it ideal for real-time apps, streaming endpoints, and low-latency ML services.

- Type-safe and modern: FastAPI uses Python’s type hints to auto-validate requests and generate clean, editor-friendly code, reducing runtime errors and boosting team productivity.

- Auto-generated docs: FastAPI creates interactive documentation via Swagger UI and ReDoc, making it easy for teams to explore and test endpoints without writing any extra docs.

- Strong community momentum: Though it’s relatively young, FastAPI has built a large and active community and has a growing ecosystem of extensions, tutorials, and integrations.

FastAPI disadvantages

- Steeper learning curve for asynchronous work: async/await unlocks performance, but debugging, testing, and concurrency management can challenge developers new to asynchronous programming.

- Batteries not included: FastAPI lacks built-in tools for authentication, admin, and database management. You’ll need to choose and integrate these manually.

- Smaller ecosystem: FastAPI’s growing plugin landscape still trails Django’s, with fewer ready-made tools for tasks like CMS integration or role-based access control.

2. Django

2024 usage: 35% (+2% from 2023)

Django once again ranks among the most popular Python frameworks for developers.

Originally built for rapid development with built-in security and structure, Django has since evolved into a full-stack toolkit. It’s trusted for everything from content-heavy websites to data science dashboards and ML-powered services.

It follows the model-template-view (MTV) pattern and comes with built-in tools for routing, data access, and user management. This allows teams to move from idea to deployment with minimal setup.

Django advantages

- Batteries included: Django has a comprehensive set of built-in tools, including an ORM, a user authenticator, an admin panel, and a templating engine. This makes it ideal for teams that want to move quickly without assembling their own stack.

- Secure by default: It includes built-in protections against CSRF, SQL injection, XSS, and other common vulnerabilities. Django’s security-first approach is one reason it’s trusted by banks, governments, and large enterprises.

- Scalable and production-ready: Django supports horizontal scaling, caching, and asynchronous views. It’s been used to power high-traffic platforms like Instagram, Pinterest, and Disqus.

- Excellent documentation: Django’s official docs are widely praised for their clarity and completeness, making it accessible to developers at all levels.

- Mature ecosystem: Thousands of third-party packages are available for everything from CMS platforms and REST APIs to payments and search.

- Long-term support: Backed by the Django Software Foundation, Django receives regular updates, security patches, and LTS releases, making it a safe choice for long-term projects.

Django disadvantages

- Heavyweight for small apps: For simple APIs or microservices, Django’s full-stack approach can feel excessive and slow to configure.

- Tightly coupled components: Swapping out parts of the stack, such as the ORM or templating engine, often requires workarounds or deep customization.

- Steeper learning curve: Django’s conventions and depth can be intimidating for beginners or teams used to more minimal frameworks.

3. Flask

2024 usage: 34% (+1% from 2023)

Flask is one of the most popular Python frameworks for small apps, APIs, and data science dashboards.

It is a lightweight, unopinionated web framework that gives you full control over application architecture. Flask is classified as a “microframework” because it doesn’t enforce any particular project structure or include built-in tools like an ORM or form validation.

Instead, it provides a simple core and lets you add only what you need. Flask is built on top of Werkzeug (a WSGI utility library) and Jinja2 (a templating engine). It’s known for its clean syntax, intuitive routing, and flexibility.

It scales well when paired with extensions like SQLAlchemy, Flask-Login, or Flask-RESTful.

Flask advantages

- Lightweight and flexible: Flask doesn’t impose structure or dependencies, making it ideal for microservices, APIs, and teams that want to build a stack from the ground up.

- Popular for data science and ML workflows: Flask is frequently used for experimentation like building dashboards, serving models, or turning notebooks into lightweight web apps.

- Beginner-friendly: With minimal setup and a gentle learning curve, Flask is often recommended as a first web framework for Python developers.

- Extensible: A rich ecosystem of extensions allows you to add features like database integration, form validation, and authentication only when needed.

- Modular architecture: Flask’s design makes it easy to break your app into blueprints or integrate with other services, which is perfect for teams working on distributed systems.

- Readable codebase: Flask’s source code is compact and approachable, making it easier to debug, customize, or fork for internal tooling.

Flask disadvantages

- Bring-your-own everything: Unlike Django, Flask doesn’t include an ORM, admin panel, or user management. You’ll need to choose and integrate these yourself.

- DIY security: Flask provides minimal built-in protections, so you have to implement CSRF protection, input validation, and other best practices yourself or through extensions.

- Potential to become messy: Without conventions or structure, large Flask apps can become difficult to maintain unless you enforce your own architecture and patterns.

4. Requests

2024 usage: 33% (+3% from 2023)

Requests isn’t a web framework; it’s a Python library for making HTTP requests. Even so, its influence on the Python ecosystem is hard to overstate. It’s one of the most downloaded packages on PyPI and is used in everything from web scraping scripts to production-grade microservices.

Requests is often paired with frameworks like Flask or FastAPI to handle outbound HTTP calls. It abstracts away the complexity of raw sockets and urllib, offering a clean, Pythonic interface for sending and receiving data over the web.

Requests advantages

- Simple and intuitive: Requests makes HTTP feel like a native part of Python. Its syntax is clean and readable – requests.get(url) is all it takes to fetch a resource.

- Mature and stable: With over a decade of development, Requests is battle-tested and widely trusted. It’s used by millions of developers and is a default dependency in many Python projects.

- Great for REST clients: Requests is ideal for consuming APIs, integrating with SaaS platforms, or building internal tools that rely on external data sources.

- Excellent documentation and community: The official docs are clear and concise, and the library is well-supported by tutorials, Stack Overflow answers, and GitHub issues.

- Broad compatibility: Requests works seamlessly across Python versions and platforms, with built-in support for sessions, cookies, headers, and timeouts.

Requests disadvantages

- Not async: Requests is synchronous and blocking by design. For high-concurrency workloads or async-native frameworks, alternatives like HTTPX or AIOHTTP are better.

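- No built-in retry logic: While it supports connection pooling and timeouts, retries are not enabled by default. They must be implemented manually or configured explicitly, for example by mounting an HTTPAdapter that uses the Retry class from urllib3, the library Requests is built on.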

- Limited low-level control: Requests simplifies HTTP calls but abstracts networking details, making advanced tuning (e.g. sockets, DNS, and connection reuse) difficult.
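Retrying by hand can be as small as the sketch below. It is a generic helper and not part of Requests; the function name, its parameters, and the exponential backoff schedule are assumptions chosen for illustration.

```python
import time


def call_with_retries(func, attempts=3, base_delay=0.5, retry_on=(Exception,)):
    """Call func(); on failure wait base_delay * 2**n seconds and try again.

    Re-raises the last exception once `attempts` calls have failed.
    """
    for attempt in range(attempts):
        try:
            return func()
        except retry_on:
            if attempt == attempts - 1:
                raise
            time.sleep(base_delay * 2 ** attempt)


# Usage with Requests (assumes `requests` is installed and `url` is defined):
# import requests
# response = call_with_retries(
#     lambda: requests.get(url, timeout=5),
#     retry_on=(requests.ConnectionError, requests.Timeout),
# )
```

Restricting `retry_on` to connection and timeout errors keeps the helper from retrying failures that a second attempt cannot fix.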

5. Asyncio

2024 usage: 23% (+3% from 2023)

Asyncio is Python’s native library for asynchronous programming. It underpins many modern async frameworks and enables developers to write non-blocking code using coroutines, event loops, and async/await syntax.

While not a web framework itself, Asyncio excels at handling I/O-bound tasks such as network requests and subprocesses. It’s often used behind the scenes, but remains a powerful tool for building custom async workflows or integrating with low-level protocols.

Asyncio advantages

- Native async support: Asyncio is part of the Python standard library and provides first-class support for asynchronous I/O using async/await syntax.

- Foundation for modern frameworks: It powers many of today’s most popular async web frameworks, including FastAPI, Starlette, and AIOHTTP.

- Fine-grained control: Developers can manage event loops, schedule coroutines, and coordinate concurrent tasks with precision, which is ideal for building custom async systems.

- Efficient for I/O-bound workloads: Asyncio excels at handling large volumes of concurrent I/O operations, such as API calls, socket connections, or file reads.
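To make the coroutine and event-loop vocabulary concrete, here is a minimal sketch using only the standard library. The `fetch` coroutine is hypothetical and simulates I/O with `asyncio.sleep()` rather than making real network calls.

```python
import asyncio


async def fetch(name, delay):
    """Pretend to do I/O: sleep without blocking the event loop."""
    await asyncio.sleep(delay)
    return f"{name} done"


async def main():
    # gather() runs the coroutines concurrently and returns their
    # results in the order the awaitables were passed in.
    return await asyncio.gather(
        fetch("a", 0.02),
        fetch("b", 0.01),
        fetch("c", 0.03),
    )


results = asyncio.run(main())
print(results)  # ['a done', 'b done', 'c done']
```

Because the three waits overlap, the whole run takes roughly as long as the slowest task rather than the sum of all three.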

Asyncio disadvantages

- Steep learning curve: Concepts like coroutines, event loops, and task scheduling can be difficult for developers new to asynchronous programming.

- Not a full framework: Asyncio doesn’t provide routing, templating, or request handling. It’s a low-level tool that requires additional libraries for web development.

- Debugging complexity: Async code can be harder to trace and debug, especially when dealing with race conditions or nested coroutines.

6. Django REST Framework

2024 usage: 20% (+2% from 2023)

Django REST Framework (DRF) is the most widely used extension for building APIs on top of Django. It provides a powerful, flexible toolkit for serializing data, managing permissions, and exposing RESTful endpoints – all while staying tightly integrated with Django’s core components.

DRF is especially popular in enterprise and backend-heavy applications where teams are already using Django and want to expose a clean, scalable API without switching stacks. It’s also known for its browsable API interface, which makes testing and debugging endpoints much easier during development.

Django REST Framework advantages

- Deep Django integration: DRF builds directly on Django’s models, views, and authentication system, making it a natural fit for teams already using Django.

- Browsable API interface: One of DRF’s key features is its interactive web-based API explorer, which helps developers and testers inspect endpoints without needing external tools.

- Flexible serialization: DRF’s serializers can handle everything from simple fields to deeply nested relationships, and they support both ORM and non-ORM data sources.

- Robust permissions system: DRF includes built-in support for role-based access control, object-level permissions, and custom authorization logic.

- Extensive documentation: DRF is well-documented and widely taught, with a large community and plenty of tutorials, examples, and third-party packages.

Django REST Framework disadvantages

- Django-dependent with heavier setup: DRF is tightly tied to Django and requires more configuration than lightweight frameworks like FastAPI, especially when customizing behavior.

- Verbose serializer customization: DRF’s serializers work well for common cases, but customizing them for complex or non-standard data often demands verbose overrides.

Best of the rest: Frameworks 7–10

While the most popular Python frameworks dominate usage across the ecosystem, several others continue to thrive in more specialized domains. These tools may not rank as high overall, but they play important roles in backend services, data pipelines, and async systems.

| Framework | Overview | Advantages | Disadvantages |
| --- | --- | --- | --- |
| httpx 2024 usage: 15% (+3% from 2023) | Modern HTTP client for sync and async workflows | Async support, HTTP/2, retries, and type hints | Not a web framework, no routing or server-side features |
| aiohttp 2024 usage: 13% (+1% from 2023) | Async toolkit for HTTP servers and clients | ASGI-ready, native WebSocket handling, and flexible middleware | Lower-level than FastAPI, less structured for large apps |
| Streamlit 2024 usage: 12% (+4% from 2023) | Dashboard and data app builder for data workflows | Fast UI prototyping, with zero front-end knowledge required | Limited control over layout, less suited for complex UIs |
| Starlette 2024 usage: 8% (+2% from 2023) | Lightweight ASGI framework used by FastAPI | Exceptional performance, composable design, fine-grained routing | Requires manual integration, fewer built-in conveniences |

Choosing the right framework and tools

Whether you’re building a blazing-fast API with FastAPI, a full-stack CMS with Django, or a lightweight dashboard with Flask, the most popular Python web frameworks offer solutions for every use case and developer style.

Insights from the State of Python 2025 show that while Django and Flask remain strong, FastAPI is leading a new wave of async-native, type-safe development. Meanwhile, tools like Requests, Asyncio, and Django REST Framework continue to shape how Python developers build and scale modern web services.

But frameworks are only part of the equation. The right development environment can make all the difference, from faster debugging to smarter code completion and seamless framework integration.

That’s where PyCharm comes in. Whether you’re working with Django, FastAPI, Flask, or all three, PyCharm offers deep support for Python web development. This includes async debugging, REST client tools, and rich integration with popular libraries and frameworks.

Ready to build something great? Try PyCharm and see how much faster and smoother Python web development can be.

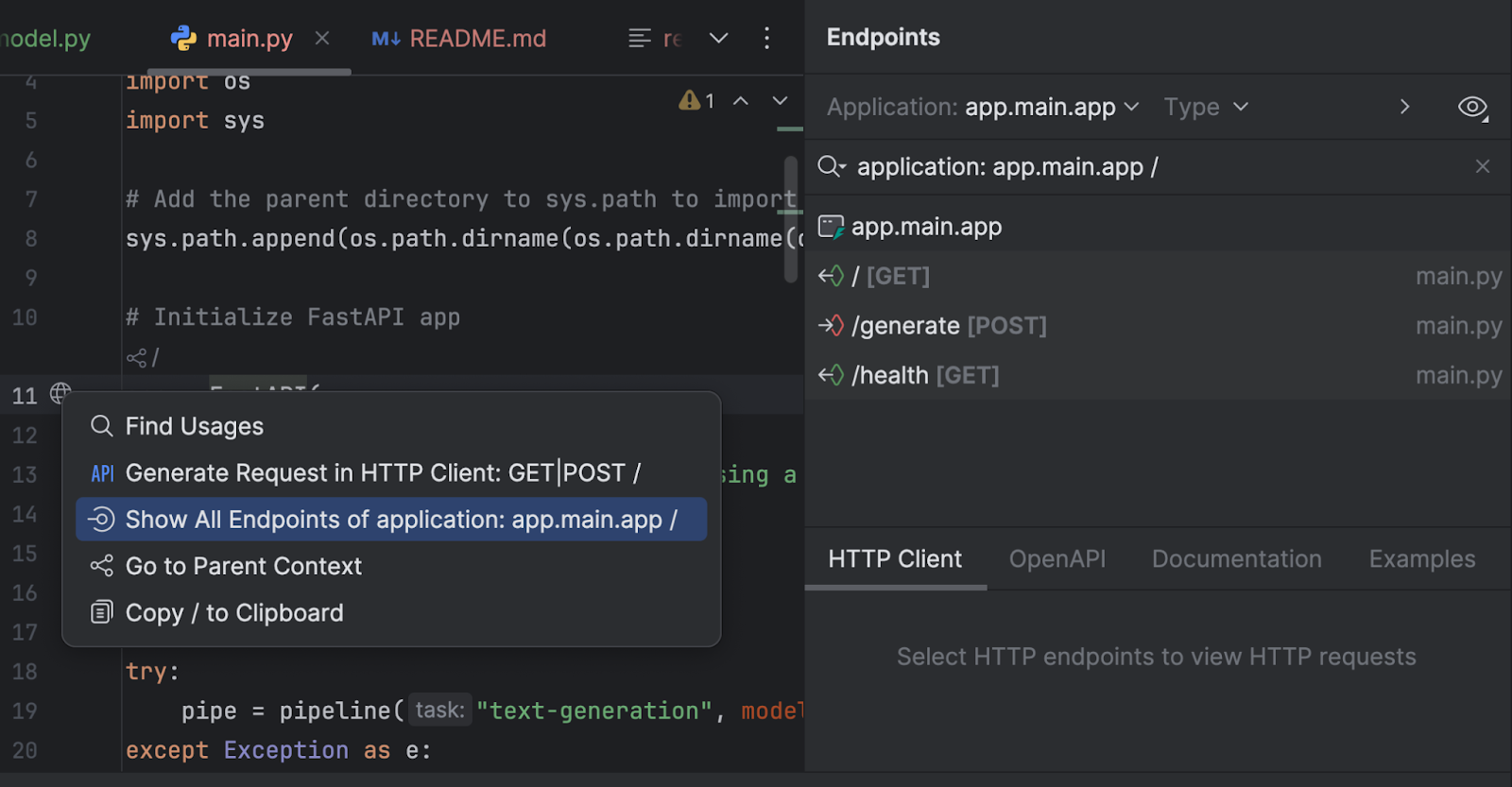

Hugging Face is currently a household name for machine learning researchers and enthusiasts. One of their biggest successes is Transformers, a model-definition framework for machine learning models in text, computer vision, audio, and video. Because of the vast repository of state-of-the-art machine learning models available on the Hugging Face Hub and the compatibility of Transformers with the majority of training frameworks, it is widely used for inference and model training.

Why do we want to fine-tune an AI model?

Fine-tuning AI models is crucial for tailoring their performance to specific tasks and datasets, enabling them to achieve higher accuracy and efficiency compared to using a general-purpose model. By adapting a pre-trained model, fine-tuning reduces the need for training from scratch, saving time and resources. It also allows for better handling of specific formats, nuances, and edge cases within a particular domain, leading to more reliable and tailored outputs.

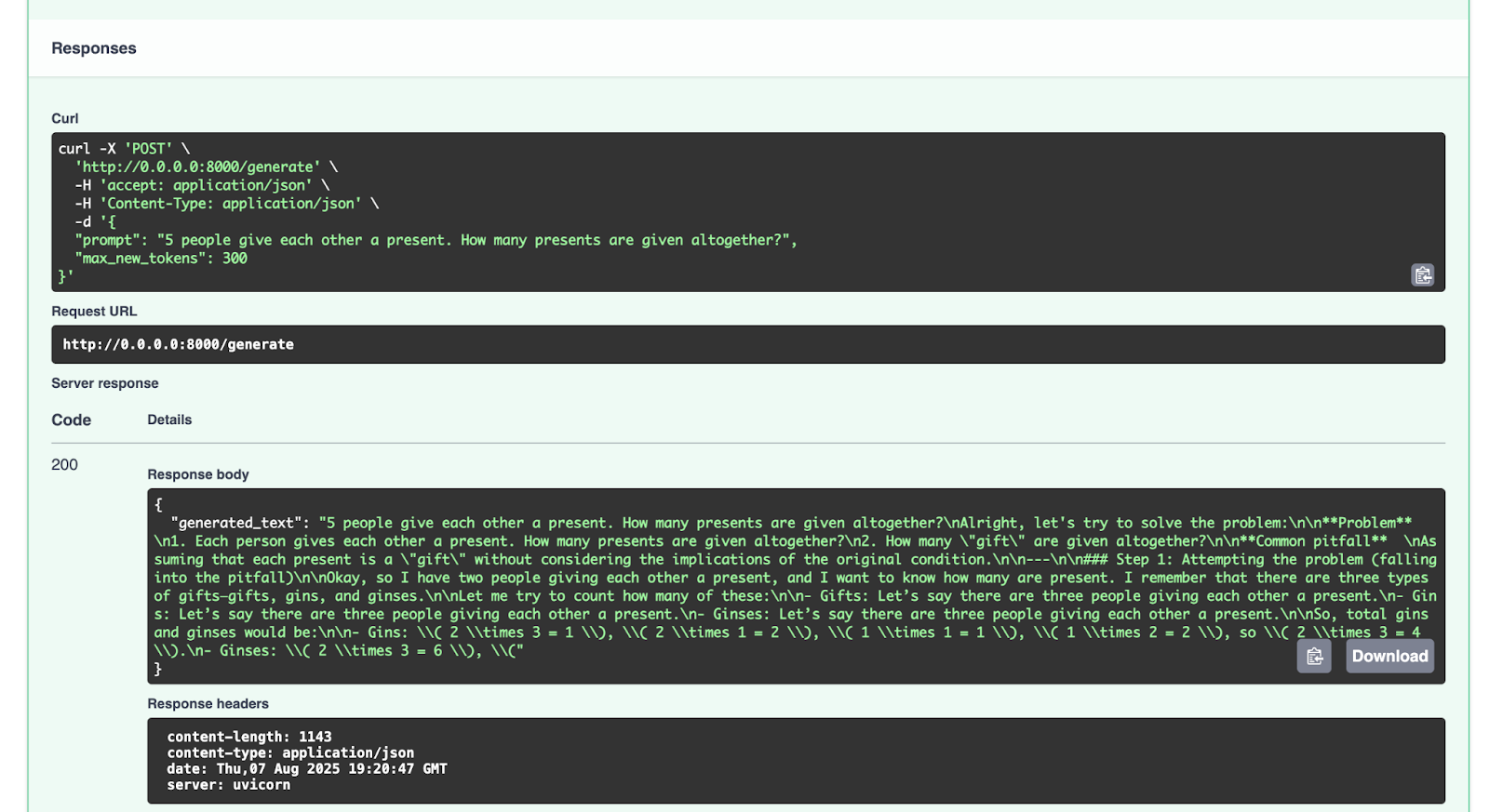

In this blog post, we will fine-tune a GPT model with mathematical reasoning so it better handles math questions.

Using models from Hugging Face

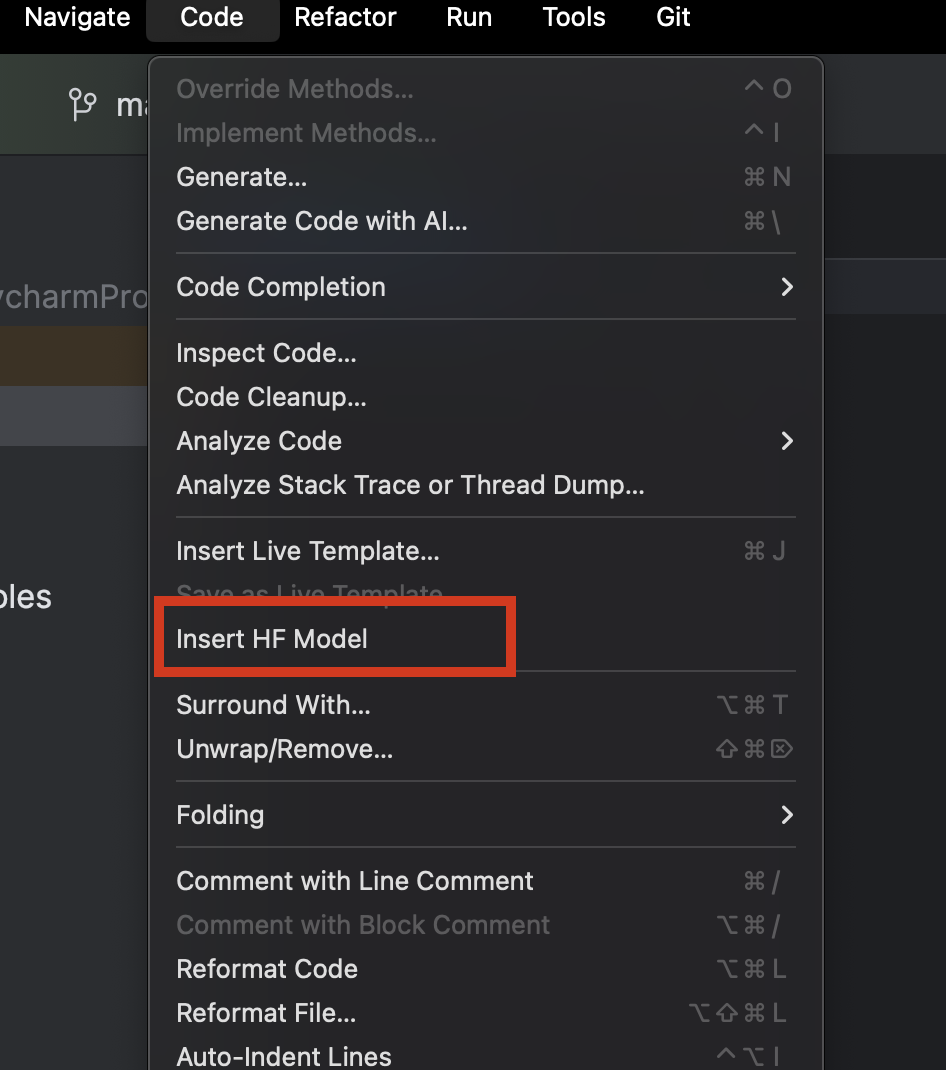

After downloading PyCharm, we can easily browse and add any models from Hugging Face. In a new Python file, from the Code menu at the top, select Insert HF Model.

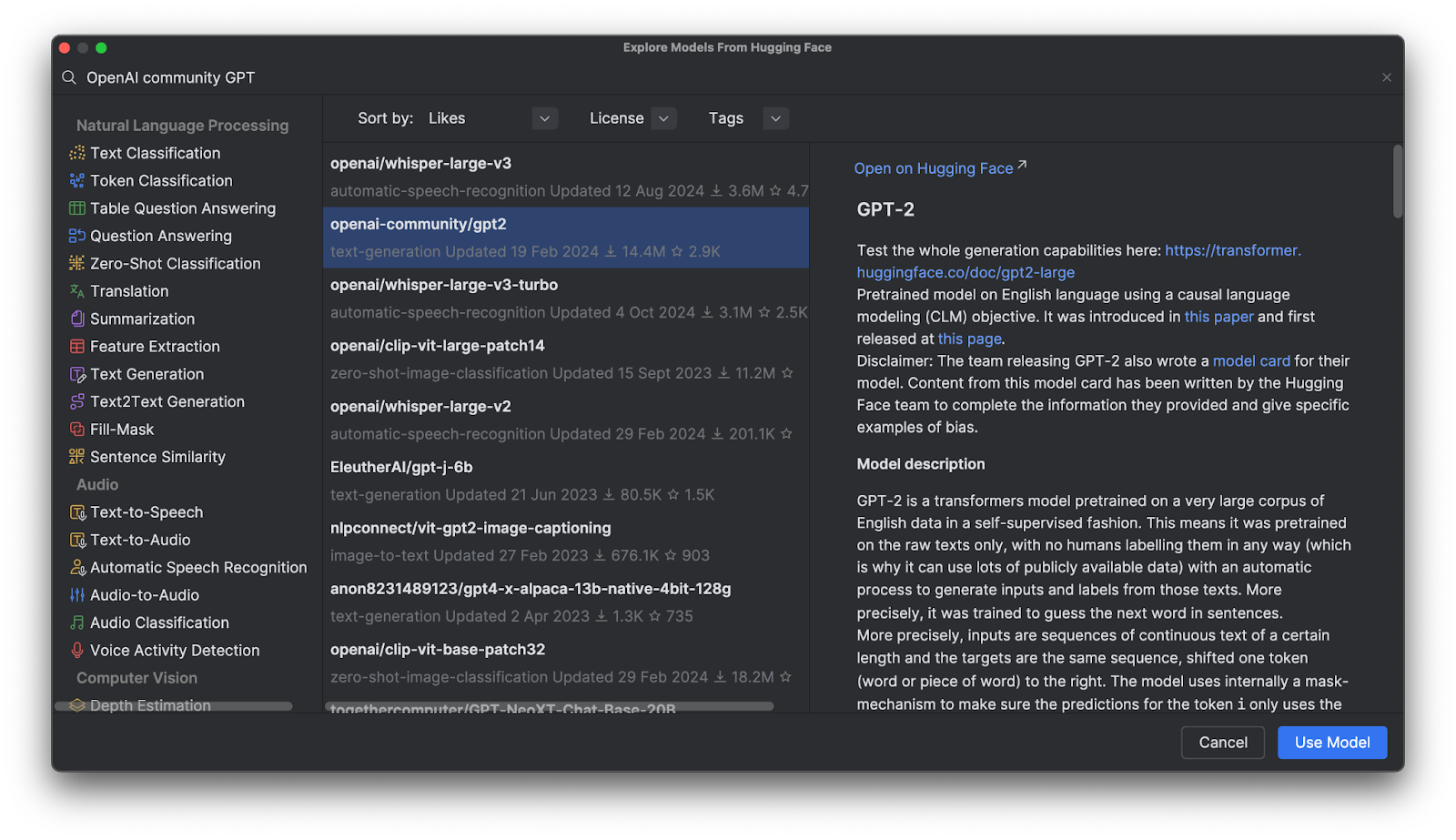

In the menu that opens, you can browse models by category or start typing in the search bar at the top. When you select a model, you can see its description on the right.

When you click Use Model, you will see a code snippet added to your file. And that’s it – you’re ready to start using your Hugging Face model.

GPT (Generative Pre-Trained Transformer) models

GPT models are very popular on the Hugging Face Hub, but what are they? GPTs are trained models that understand natural language and generate high-quality text. They are mainly used in tasks related to textual entailment, question answering, semantic similarity, and document classification. The most famous example is ChatGPT, created by OpenAI.

A lot of OpenAI GPT models are available on the Hugging Face Hub, and we will learn how to use these models with Transformers, fine-tune them with our own data, and deploy them in an application.

Benefits of using Transformers

Transformers, together with other tools provided by Hugging Face, provides high-level tools for fine-tuning any sophisticated deep learning model. Instead of requiring you to fully understand a given model’s architecture and tokenization method, these tools help make models “plug and play” with any compatible training data, while also providing a large amount of customization in tokenization and training.

Transformers in action

To get a closer look at Transformers in action, let’s see how we can use it to interact with a GPT model.

Inference using a pretrained model with a pipeline

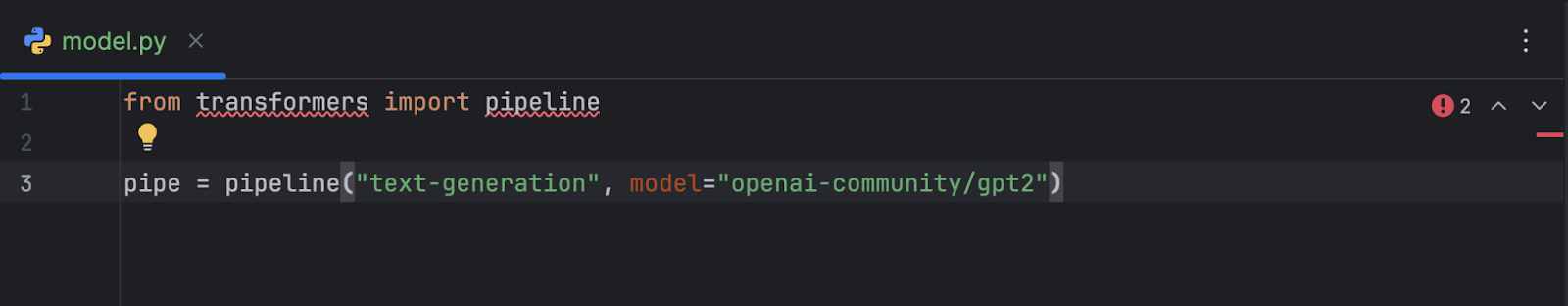

After selecting and adding the OpenAI GPT-2 model to the code, this is what we’ve got:

from transformers import pipeline

pipe = pipeline("text-generation", model="openai-community/gpt2")

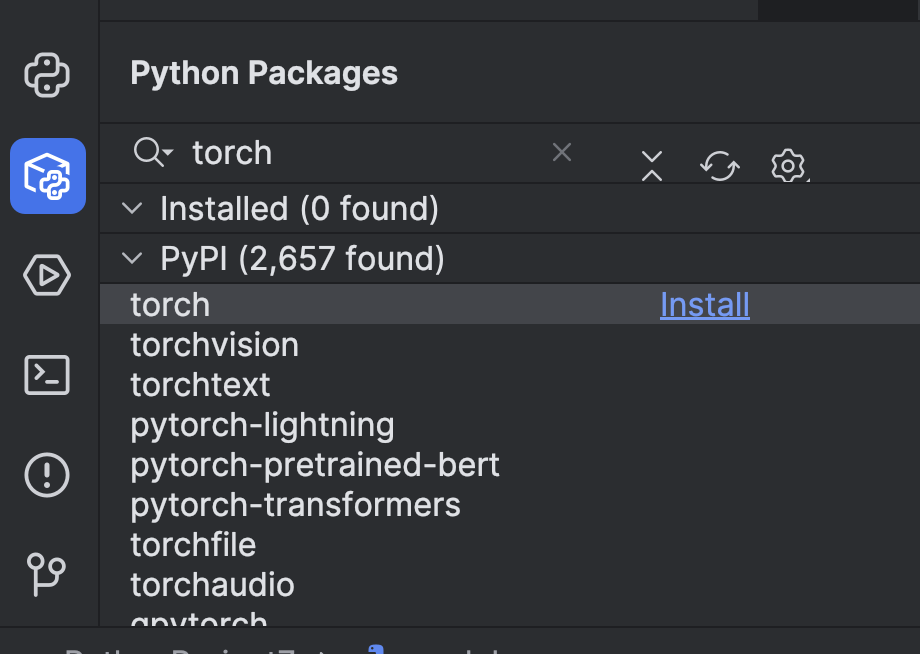

Before we can use it, we need to make a few preparations. First, we need to install a machine learning framework. In this example, we chose PyTorch. You can install it easily via the Python Packages window in PyCharm.

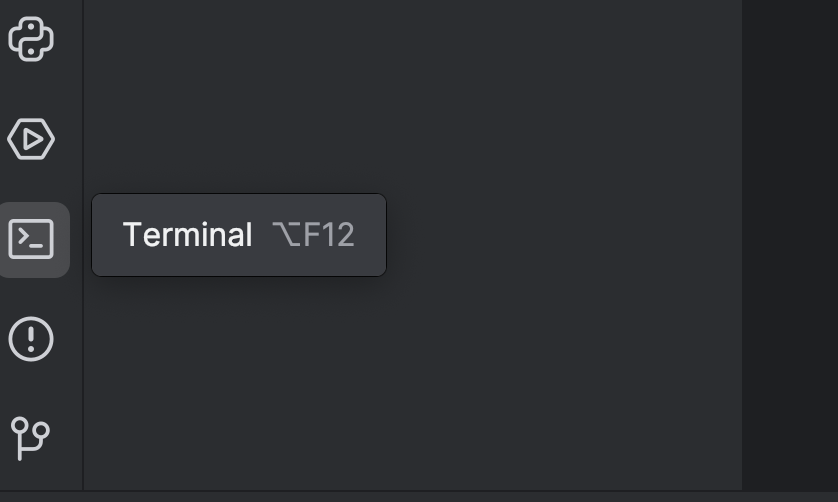

Then we need to install Transformers using the `torch` option. You can do that by using the terminal – open it using the button on the left or use the ⌥ F12 (macOS) or Alt + F12 (Windows) hotkey.

In the terminal, since we are using uv, we use the following commands to add it as a dependency and install it:

uv add "transformers[torch]"

uv sync

If you are using pip:

pip install "transformers[torch]"

We will also install a couple more libraries that we will need later, including python-dotenv, datasets, notebook, and ipywidgets. You can use either of the methods above to install them.

After that, it may be best to add a GPU device to speed up the model. Depending on what you have on your machine, you can add it by setting the device parameter in pipeline. Since I am using a Mac M2 machine, I can set device="mps" like this:

pipe = pipeline("text-generation", model="openai-community/gpt2", device="mps")

If you have CUDA GPUs you can also set device="cuda".
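If the same script has to run on different machines, the device can be chosen at runtime instead of being hard-coded. This is a minimal sketch, not something the PyCharm snippet generates. The helper name `pick_device` is hypothetical, and falling back to `"cpu"` when PyTorch is not installed is an assumption made to keep the example runnable anywhere.

```python
def pick_device():
    """Return "cuda", "mps", or "cpu" depending on what PyTorch reports."""
    try:
        import torch
    except ImportError:
        return "cpu"  # assumption: fall back to CPU when PyTorch is missing

    if torch.cuda.is_available():
        return "cuda"
    # torch.backends.mps only exists in newer PyTorch builds, so look it up defensively.
    mps = getattr(torch.backends, "mps", None)
    if mps is not None and mps.is_available():
        return "mps"
    return "cpu"


device = pick_device()
print(device)
```

The result can then be passed along as `device=device` when creating the pipeline.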

Now that we’ve set up our pipeline, let’s try it out with a simple prompt:

from transformers import pipeline

pipe = pipeline("text-generation", model="openai-community/gpt2", device="mps")

print(pipe("A rectangle has a perimeter of 20 cm. If the length is 6 cm, what is the width?", max_new_tokens=200))

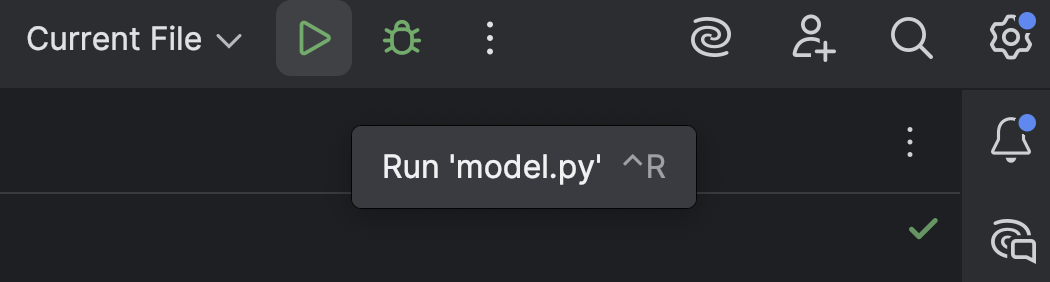

Run the script with the Run button at the top:

The result will look something like this:

[{'generated_text': 'A rectangle has a perimeter of 20 cm. If the length is 6 cm, what is the width?\n\nA rectangle has a perimeter of 20 cm. If the length is 6 cm, what is the width? A rectangle has a perimeter of 20 cm. If the width is 6 cm, what is the width? A rectangle has a perimeter of 20 cm. If the width is 6 cm, what is the width? A rectangle has a perimeter of 20 cm. If the width is 6 cm, what is the width?\n\nA rectangle has a perimeter of 20 cm. If the width is 6 cm, what is the width? A rectangle has a perimeter of 20 cm. If the width is 6 cm, what is the width? A rectangle has a perimeter of 20 cm. If the width is 6 cm, what is the width? A rectangle has a perimeter of 20 cm. If the width is 6 cm, what is the width?\n\nA rectangle has a perimeter of 20 cm. If the width is 6 cm, what is the width? A rectangle has a perimeter'}]

There isn’t much reasoning in this at all, only a bunch of nonsense.

You may also see this warning:

Setting `pad_token_id` to `eos_token_id`:50256 for open-end generation.

This is the default setting. You can also manually add it as below, so this warning disappears, but we don’t have to worry about it too much at this stage.

print(pipe("A rectangle has a perimeter of 20 cm. If the length is 6 cm, what is the width?", max_new_tokens=200, pad_token_id=pipe.tokenizer.eos_token_id))

Now that we’ve seen how GPT-2 behaves out of the box, let’s see if we can make it better at math reasoning with some fine-tuning.

Load and prepare a dataset from the Hugging Face Hub

Before we work on the GPT model, we first need training data. Let’s see how to get a dataset from the Hugging Face Hub.

If you haven’t already, sign up for a Hugging Face account and create an access token. We only need a `read` token for now. Store your token in a `.env` file, like so:

HF_TOKEN=your-hugging-face-access-token
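One thing to keep in mind is that `os.getenv` quietly returns `None` when a variable is missing or misspelled, so a typo in the `.env` file may go unnoticed. A small fail-fast check can make that visible. The sketch below uses only the standard library. The helper name `require_env` and the `DEMO_TOKEN` variable are hypothetical and exist only for this illustration.

```python
import os


def require_env(name):
    """Return the value of an environment variable or raise a clear error."""
    value = os.getenv(name)
    if not value:
        raise RuntimeError(f"{name} is not set; check your .env file")
    return value


# Demo with a throwaway variable; in the notebook, load_dotenv() would
# populate HF_TOKEN from the .env file before a check like this runs.
os.environ["DEMO_TOKEN"] = "placeholder-value"
token = require_env("DEMO_TOKEN")
print("token loaded:", bool(token))  # token loaded: True
```

In the notebook, the equivalent call would be `require_env("HF_TOKEN")` right after `load_dotenv()`.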

We will use this Math Reasoning Dataset, which has text describing some math reasoning. We will fine-tune our GPT model with this dataset so it can solve math problems more effectively.

Let’s create a new Jupyter notebook, which we’ll use for fine-tuning because it lets us run different code snippets one by one and monitor the progress.

In the first cell, we use this script to load the dataset from the Hugging Face Hub:

from datasets import load_dataset

from dotenv import load_dotenv

import os

load_dotenv()

dataset = load_dataset("Cheukting/math-meta-reasoning-cleaned", token=os.getenv("HF_TOKEN"))

dataset

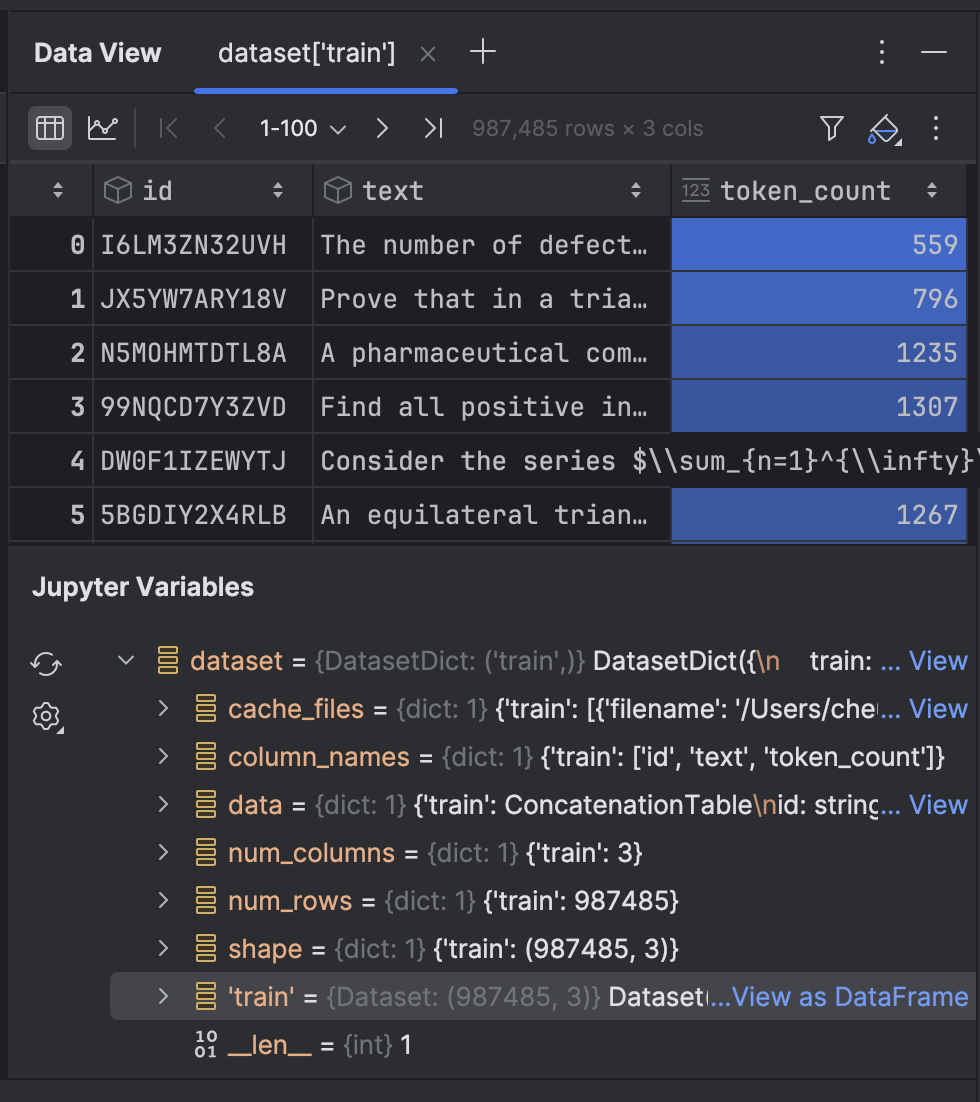

Run this cell (it may take a while, depending on your internet speed), which will download the dataset. When it’s done, we can have a look at the result:

DatasetDict({
    train: Dataset({
        features: ['id', 'text', 'token_count'],
        num_rows: 987485
    })
})

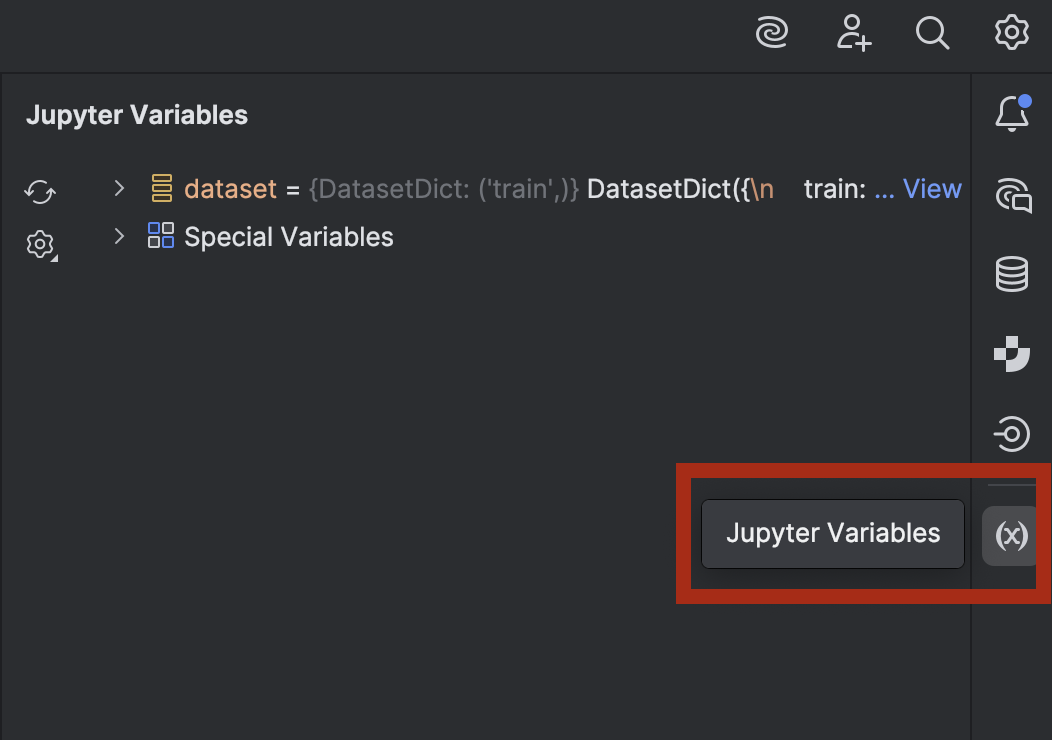

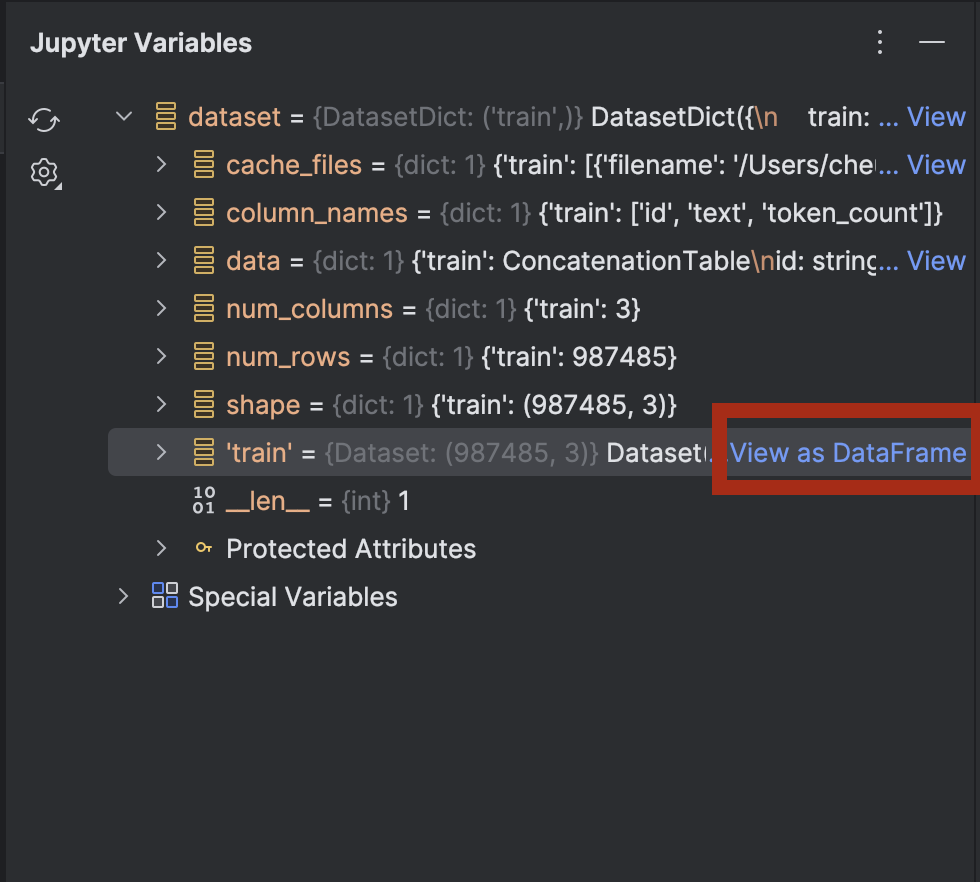

If you are curious and want to have a peek at the data, you can do so in PyCharm. Open the Jupyter Variables window using the button on the right:

Expand dataset and you will see the View as DataFrame option next to dataset['train']:

Click on it to take a look at the data in the Data View tool window:

Next, we will tokenize the text in the dataset:

from transformers import GPT2Tokenizer

tokenizer = GPT2Tokenizer.from_pretrained("openai-community/gpt2")

tokenizer.pad_token = tokenizer.eos_token

def tokenize_function(examples):
    return tokenizer(examples['text'], truncation=True, padding='max_length', max_length=512)

tokenized_datasets = dataset.map(tokenize_function, batched=True)

Here we use the GPT-2 tokenizer and set the pad_token to be the eos_token, which is the token indicating the end of a sequence. GPT-2 does not define a padding token of its own, so we reuse this one. After that, we tokenize the text with a function. It may take a while the first time you run it, but after that it will be cached and will be faster if you have to run the cell again.
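To see what truncation and `max_length` padding do to a single example, here is a toy sketch in plain Python. It is not the GPT-2 tokenizer: the token IDs are made up, `pad_or_truncate` is a hypothetical helper, and padding on the right is an assumption. The pad ID 50256 is the eos_token_id shown in the warning earlier.

```python
PAD_ID = 50256  # GPT-2's eos_token_id, reused here as the pad token


def pad_or_truncate(token_ids, max_length, pad_id=PAD_ID):
    """Mimic truncation=True with padding='max_length' on a list of token IDs."""
    ids = token_ids[:max_length]  # truncation: drop anything past max_length
    attention_mask = [1] * len(ids)  # 1 marks real tokens
    padding = max_length - len(ids)
    ids = ids + [pad_id] * padding  # pad on the right up to max_length
    attention_mask = attention_mask + [0] * padding  # 0 marks padding
    return {"input_ids": ids, "attention_mask": attention_mask}


short = pad_or_truncate([10, 11, 12], max_length=5)
long = pad_or_truncate([1, 2, 3, 4, 5, 6, 7], max_length=5)
print(short)  # {'input_ids': [10, 11, 12, 50256, 50256], 'attention_mask': [1, 1, 1, 0, 0]}
print(long)   # {'input_ids': [1, 2, 3, 4, 5], 'attention_mask': [1, 1, 1, 1, 1]}
```

Every example comes out with the same length, which is what lets the rows be batched together, and the attention mask marks which positions hold real tokens and which hold padding.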

The dataset has almost 1 million rows for training. If you have enough computing power to process all of them, you can use them all. However, in this demonstration we’re training locally on a laptop, so I’d better only use a small portion!

tokenized_datasets_split = tokenized_datasets["train"].shard(num_shards=100, index=0).train_test_split(test_size=0.2, shuffle=True)

tokenized_datasets_split

Here I take only 1% of the data, and then perform train_test_split to split the dataset into two:

DatasetDict({

train: Dataset({

features: ['id', 'text', 'token_count', 'input_ids', 'attention_mask'],

num_rows: 7900

})

test: Dataset({