
Real security for AI-fueled deception and malicious digital media.

Prevent deepfake and impersonation attacks from compromising the integrity of your digital communications and business processes.

Why we matter

Restore trust to digital communications. Go beyond just authenticating the ‘who’ to verifying the ‘what.’ Get advanced image, audio, and video content verification from the world’s leading authorities in synthetic media forensics and deepfake detection.

Our Approach to Synthetic Media Detection

Explore why our multi-dimensional, digital forensics approach is a must for today’s and tomorrow’s threats.

Content Credential Analysis

We scan the content to verify authenticity, origin, and edits by identifying embedded signatures, watermarks, or C2PA credentials.

Pixel Analysis

We examine the content for pixel-level signals, compression artifacts, and inconsistencies from editing software.

Physical Analysis

We analyze the image’s physical environment for inconsistencies with the real world.

Provenance Analysis

We examine the recorded journey and packaging of content for additional context.

Semantic Analysis

We analyze the content for contextual meaning and coherence.

Human Signals Analysis

We inspect content for faces and other human attributes to run more targeted analysis.

Biometric Analysis

We conduct identity-specific analysis through face and voice modeling.

Behavioral Analysis

We compare patterns in human behavior and interactions to detect inconsistencies.

Environmental Analysis

We assess physical surroundings for context and 3D authenticity.

A brief history of deepfakes

July 2025

A deepfake of Marco Rubio exposed the alarming ease of AI voice scams.

March 2025

A viral audio clip purporting to capture Vice President JD Vance criticizing Elon Musk is revealed to be fake.

March 2025

A deepfake phishing video of YouTube CEO Neal Mohan highlights the rise in impersonation attacks against business executives.

February 2025

Fake AI-generated audio of Donald Trump Jr. expressing support for Russia over Ukraine stokes geopolitical tensions.

January 2025

AI-generated image of burning Hollywood sign during LA wildfires prompts concern over misinformation’s impact on emergency response.

December 2024

Nation-state threat actors behind Salt Typhoon steal a database of voicemails for future weaponization against public figures.

November 2024

A newly released documentary featuring GetReal examines the deepfake voice attack against London Mayor Sadiq Khan, highlighting generative AI’s ability to disrupt society.

November 2024

The U.S. Department of the Treasury’s Financial Crimes Enforcement Network (FinCEN) issues an alert on fraud schemes involving deepfake media.

October 2024

Cyber attackers impersonate the voice of Wiz CEO Assaf Rappaport to target employees for credential theft.

September 2024

U.S. Sen. Ben Cardin is targeted by a deepfake video call impersonating former Ukrainian Foreign Affairs Minister Dmytro Kuleba.

July 2024

KnowBe4 reveals that it hired and onboarded a North Korean operative who deceived HR with AI-manipulated images and video.

March 2024

AP flags a photo of Princess Kate for manipulation, underscoring the need to verify the authenticity of images, video, and audio.

February 2024

A successful real-time video conferencing attack leads to a $25 million loss, the largest deepfake-enabled financial fraud reported to date.

May 2023

A fake image of an explosion at the Pentagon briefly sends the U.S. stock market lower, highlighting the susceptibility of market indexes to deepfakes.

March 2023

Ridiculous AI-generated video of Will Smith eating spaghetti spreads online, showcasing both the potential and shortfalls of generative AI tools.

March 2023

Viral photo of Pope Francis in a puffer jacket highlights the increasing difficulty for the average person to detect manipulated images.

September 2022

Generative AI tool DALL·E 2 becomes widely available – a milestone for synthetic image creation at scale.

July 2020

MIT Center for Advanced Virtuality releases deepfake video of former President Richard Nixon giving an alternate moon landing speech.

June 2019

A deepfake video of a news interview with Mark Zuckerberg is posted to Instagram to test Facebook’s (now Meta’s) misinformation removal policy.

December 2017

Motherboard’s Sam Cole reports that anonymous Reddit user “deepfakes” used AI tools to superimpose celebrity faces onto pornographic material.

Our Platform

The GetReal Security platform delivers layered deepfake protection against malicious generative AI threats, integrating GetReal products and services into a cohesive user experience.

Prepare

Don’t be caught flat-footed by deepfake attacks. Get ready with deepfake awareness training, policy development, and solution deployment services.

Protect

Prevent deepfakes and impersonation attacks in real time with advanced content verification for video conferencing and collaboration platforms.

Inspect

Dig deeper into the authenticity of an image, audio, or video file for high-assurance and complex cases with our digital forensics software and world-renowned researchers.

Respond

Don’t let deepfake AI incidents damage your brand or your C-suite’s reputation. World-class digital forensics experts provide evidence-based assessments for the boardroom, newsroom, and courtroom.

Enterprise-grade detection and mitigation of malicious generative AI threats

“We’ve reached a pivotal moment. The need for solutions that can quickly and accurately verify and authenticate digital media has never been more critical.”

Ted Schlein

Co-Founder and General Partner

“With the rise of GenAI and synthetic media, businesses and governments have become prime targets for the manipulation and exploitation of digital content. With GetReal, organizations can now defend against this new attack vector.”

Alberto Yépez

Co-Founder and Managing Director

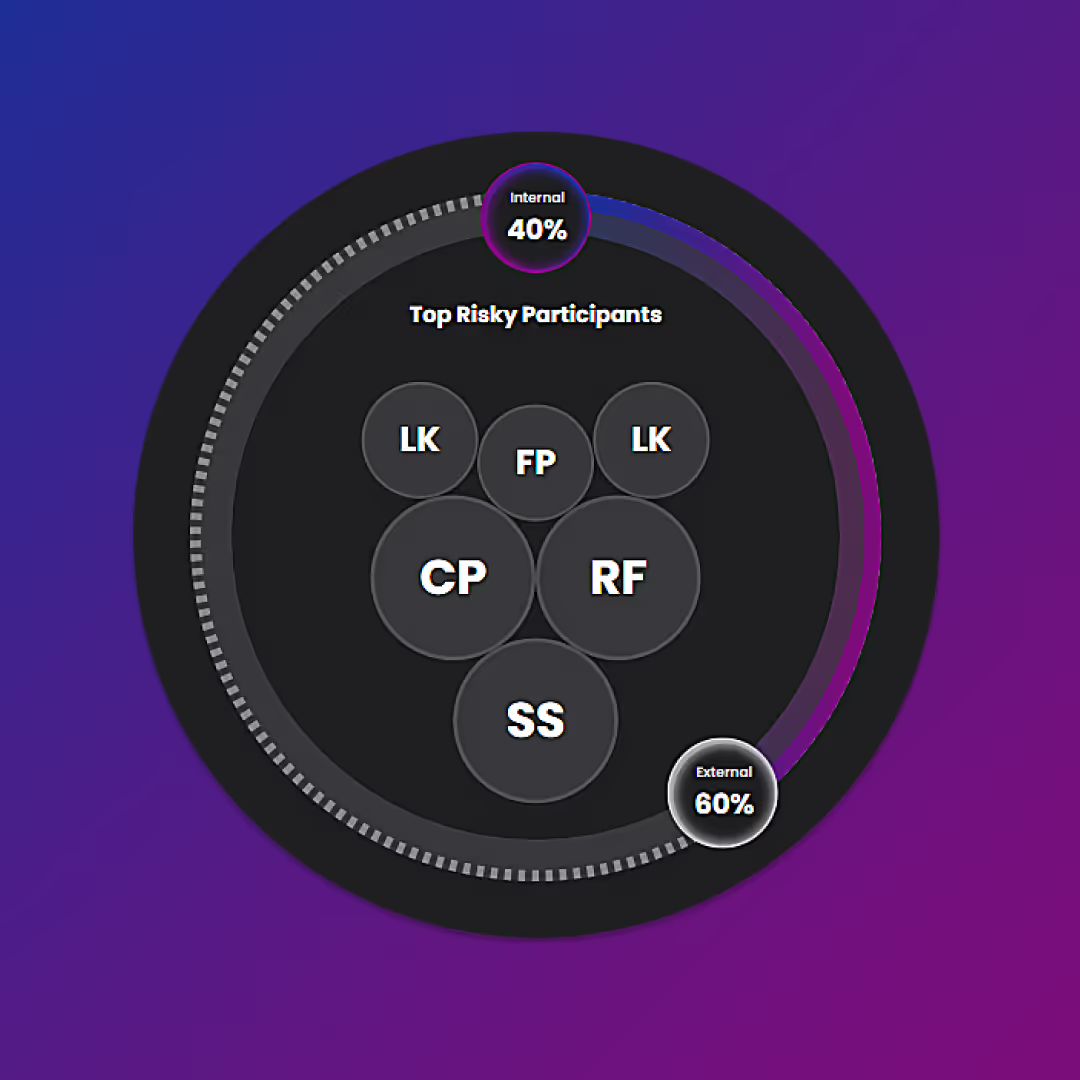

Adaptive threat intelligence

Benefit from real-time forensic insights to stop zero-day impersonation attacks

Our forensic experts continuously monitor the latest research, tools, and techniques that threat actors use to create malicious digital media. Our algorithms extract the traces adversaries leave behind when they synthetically generate or manipulate digital media, and publish them to our multi-dimensional digital integrity platform.

Latest from GetReal Security

Video

December 2025

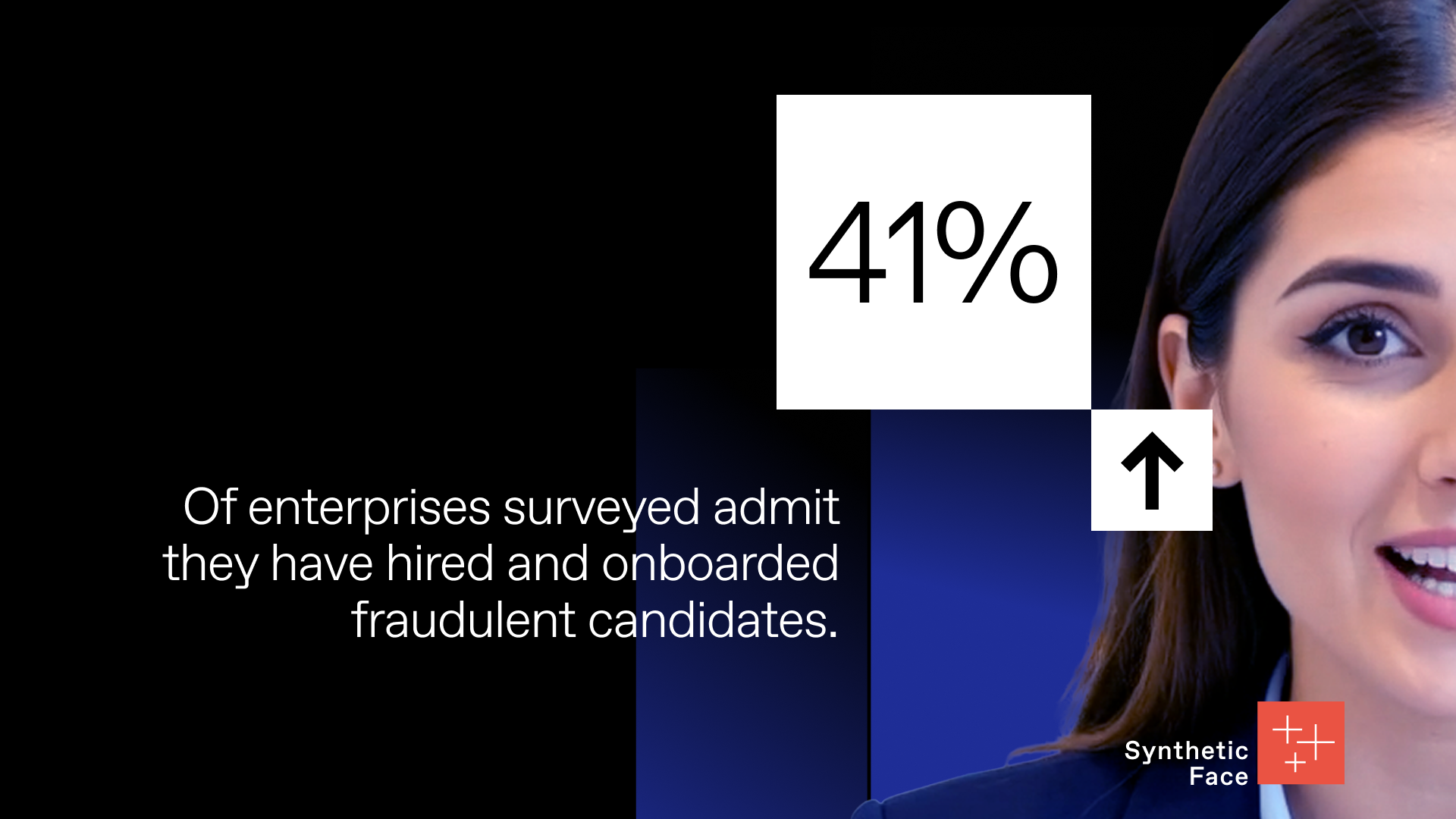

As recruiting moved online, a new class of adversary followed – using AI to fabricate identities, pass interviews, and infiltrate companies at scale. In some cases, these aren’t just scammers. They’re nation-state operatives. In this investigation, we look at how deepfake candidates are already moving through enterprise hiring pipelines – and why traditional checks no longer work.

Visit our resources page for more news on what is happening at GetReal.