
A Tale of Two Lambdas: A Haskeller’s Journey Into OCaml

November 6, 2025

Jane Street, 250 Vesey St, New York, NY 10007

https://www.meetup.com/ny-haskell/events/311160463

NOTE: Please RSVP if you plan to attend. If you arrive unannounced, we’ll do our best to get you a visitor badge so you can attend, but it’s a last-minute scramble for the security staff.

Schedule

6:00–6:30: Meet and Greet

6:30–8:30: Presentation

8:30–10:00: Optional Social Gathering @ a nearby bar

Speaker: Richard Eisenberg

Richard Eisenberg is a Principal Researcher at Jane Street and a leading figure in the Haskell community. His work focuses on programming language design and implementation, with major contributions to GHC, including dependent types and type system extensions. He is widely recognized for advancing the expressiveness and power of Haskell’s type system while making these ideas accessible to the broader functional programming community.

Abstract

After spending a decade focusing mostly on Haskell, I have spent the last three years looking deeply at OCaml. This talk will capture some lessons learned from my work in the two languages and their communities: how they are similar, how they differ, and how each might usefully grow to become more like the other. I will compare Haskell’s purity against OCaml’s support for mutation, type classes against modules as abstraction paradigms, and laziness against strictness, along with some general thoughts about language philosophy. We’ll also touch on some of the challenges both languages face as open-source products, in need of both volunteers and funding. While some functional programming experience will definitely be helpful, I’ll explain syntax as we go. No Haskell or OCaml knowledge is required, as I want this talk to be equally accessible to the two communities.

I have written previously about how approval and range voting methods are intrinsically tactical. This doesn’t mean that they are more tactical than other election systems. Gibbard’s Theorem shows that nearly all election systems are sometimes susceptible to tactical voting when there are three or more options. Rather, it means that tactical voting is unavoidable. Voting in such a system requires answering the question of where to set your approval threshold or how to map your preferences to a ranged voting scale. These questions don’t have more or less “honest” answers. They are always tactical choices.

But I haven’t dug deeper into what these tactics look like. Here, I’ll do the mathematical analysis to show what effective voting looks like in these systems, and make some surprising observations along the way.

Mathematical formalism for approval voting

We’ll start by assuming an approval election, so the question is where to put your threshold. At what level of approval do you switch from voting not to approve a candidate to approving them?

We’ll keep the notation minimal:

- As is standard in probability, I’ll write ℙ[X] for the probability of an event X, and 𝔼[X] for the expected value of a (numerical) random variable X.

- I will use B to refer to a random collection (multiset) of ballots, drawn from some probability distribution reflecting what we know from polling and other information sources on other voters. B will usually not include the approval vote that you’re considering casting, and to include that approval, we’ll write B ∪ {c}, where c is the candidate you contemplate approving.

- I’ll write W(·) to indicate the winner of an election with a given set of ballots. This is the candidate with the most approvals. We’ll assume some tiebreaker is in place that’s independent of individual voting decisions. For instance, candidates could be shuffled into a random order before votes are cast, and in the event of a tie for number of approvals, we’ll pick the candidate who comes first in that shuffled order.

- U(·) will be your utility function, so U(c) is the utility (i.e., happiness, satisfaction, or perceived social welfare) that you personally will get from candidate c winning the election. This doesn’t mean you have to be selfish, per se, as accomplishing some altruistic goal is still a form of utility, but we evaluate that utility from your point of view even though other voters may disagree.

With this notation established, we can clearly state, almost tautologically, when you should approve of a candidate c. You should approve of c whenever:

𝔼[U(W(B ∪ {c}))] > 𝔼[U(W(B))]

That’s just saying you should approve of c if your expected utility from the election with your approval of c is more than your expected utility without it.

The role of pivotal votes and exact strategy

This inequality can be made more useful by isolating the circumstances in which your vote makes a difference in the outcome, that is, when W(B ∪ {c}) ≠ W(B). Non-pivotal votes contribute zero to the net expectation, and can be ignored.

In approval voting, approving a candidate can only change the outcome by making that candidate the winner. This means a pivotal vote is equivalent to both of:

- W(B ∪ {c}) = c

- W(B) ≠ c

It’s useful to have notation for this, so we’ll define V(B, c) to mean that W(B ∪ {c}) ≠ W(B), or equivalently, that W(B ∪ {c}) = c and W(B) ≠ c. To remember this notation, recall that V is the pivotal letter in the word “pivot”, and also visually resembles a pivot.

With this in mind, the expected gain in utility from approving c is:

- 𝔼[U(W(B ∪ {c}))] - 𝔼[U(W(B))]. But since the utility gain is zero except for pivotal votes, this is the same as

- ℙ[V(B, c)] · (𝔼[U(W(B ∪ {c})) | V(B, c)] - 𝔼[U(W(B)) | V(B, c)]). But since V(B, c) implies that W(B ∪ {c}) = c, this simplifies to

- ℙ[V(B, c)] · (U(c) - 𝔼[U(W(B)) | V(B, c)])

Therefore, you ought to approve of a candidate c whenever

U(c) > 𝔼[U(W(B)) | V(B, c)]

This is much easier to interpret. You should approve of a candidate c precisely when the utility you obtain from c winning is greater than the expected utility in cases where c is right on the verge of winning (but someone else wins instead).
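To make the exact rule concrete, here is a minimal Haskell sketch that evaluates both forms on a tiny, made-up election. It makes two assumptions:

- Beliefs about the other voters’ ballots B can be written as an explicit list of (probability, approval tally) scenarios.
- Ties go to whoever comes first in a fixed order.

The names (`Tally`, `winner`, `approvalGain`, `pivotalThreshold`) and all the numbers are hypothetical and chosen only for illustration.

```haskell
import qualified Data.Map.Strict as Map

type Candidate = Char

-- Approval counts for one possible collection B of other voters' ballots.
type Tally = Map.Map Candidate Int

-- W(·): the most approvals wins; ties go to whoever comes first in a fixed
-- order that does not depend on anyone's ballot.
winner :: [Candidate] -> Tally -> Candidate
winner order tally = foldl1 pick order
  where
    count c = Map.findWithDefault 0 c tally
    pick best c = if count c > count best then c else best

-- B ∪ {c}: add your approval of c.
withApproval :: Candidate -> Tally -> Tally
withApproval c = Map.insertWith (+) c 1

-- 𝔼[U(W(B ∪ {c}))] - 𝔼[U(W(B))] over an explicit list of (probability, B).
approvalGain :: [Candidate] -> (Candidate -> Double) -> [(Double, Tally)] -> Candidate -> Double
approvalGain order u scenarios c =
  sum [p * (u (winner order (withApproval c b)) - u (winner order b)) | (p, b) <- scenarios]

-- 𝔼[U(W(B)) | V(B, c)], or Nothing when approving c is never pivotal.
pivotalThreshold :: [Candidate] -> (Candidate -> Double) -> [(Double, Tally)] -> Candidate -> Maybe Double
pivotalThreshold order u scenarios c
  | total == 0 = Nothing
  | otherwise = Just (sum [p * u (winner order b) | (p, b) <- pivotal] / total)
  where
    pivotal = [(p, b) | (p, b) <- scenarios, winner order (withApproval c b) /= winner order b]
    total = sum (map fst pivotal)

-- A made-up three-candidate example.
exampleOrder :: [Candidate]
exampleOrder = "ABC"

exampleUtility :: Candidate -> Double
exampleUtility 'A' = 10
exampleUtility 'B' = 6
exampleUtility _ = 0

exampleScenarios :: [(Double, Tally)]
exampleScenarios =
  [ (0.5, Map.fromList [('A', 4), ('B', 5), ('C', 3)])
  , (0.5, Map.fromList [('A', 3), ('B', 4), ('C', 5)])
  ]
```

With these numbers, approving A gains 2 units of expected utility and approving B gains 3. Approving C gains nothing because C is never pivotal. Each gain equals ℙ[V(B, c)] · (U(c) - threshold). For A, that is 0.5 · (10 - 6) = 2, since A is pivotal only in the first scenario, where B would otherwise win.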

There are a few observations worth making about this:

- The expectation clarifies why the threshold setting part of approval voting is intrinsically tactical. It involves evaluating how likely each other candidate is to win, and using that information to compute an expectation. That means advice to vote only based on internal feelings like whether you consider a candidate acceptable is always wrong. An effective vote takes into account external information about how others are likely to vote, including polling and understanding of public opinion and mood.

- The conditional expectation, assuming V(B, c), tells us that the optimal strategy for whether to approve of some candidate c depends on the very specific situation where c is right on the verge of winning the election. If c is a front-runner in the election, this scenario isn’t likely to be too different from the general case, and the conditional expectation doesn’t change much. However, if c is a long-shot candidate from some minor party who somehow nearly ties for a win, we’re in a strange situation indeed: perhaps a major last-minute scandal, a drastic polling error, or a fundamental misunderstanding of the public mood. Here, the conditional expected utility of an alternate winner might be quite different from your unconditional expectation. If, say, voters prove to have an unexpected appetite for extremism, this can affect the runners-up, as well.

- Counter-intuitively, an optimal strategy might even involve approving some candidates that you like less than some that you don’t approve! This can happen because different candidates are evaluated against different thresholds. Therefore, a single voter’s best approval ballot isn’t necessarily monotonic in their utility rankings. This adds a level of strategic complexity I hadn’t anticipated in my earlier writings on strategy in approval voting.

Approximate strategy

The strategy described above is rigorously optimal, but not at all easy to apply. Imagining the bizarre scenarios in which each candidate, no matter how minor, might tie for a win, is challenging to do well. We’re fortunate, then, that there’s a good approximation. Remember that the utility gain from approving a candidate was equal to

ℙ[V(B, c)] · (U(c) - 𝔼[U(W(B)) | V(B, c)])

In precisely the cases where V(B, c) is a bizarre assumption that’s difficult to imagine, we’re also multiplying by ℙ[V(B, c)], which is vanishingly small, so this vote is very unlikely to make a difference in the outcome. For front-runners, who are relatively much more likely to be in a tie for the win, the conditional expectation changes a lot less: scenarios that end in a near-tie are not too different from the baseline expectation.

This happens because ℙ[V(B, c)] falls off quite quickly indeed as the popularity of c decreases, especially for large numbers of voters. For a national scale election (say, about 10 million voters), if c expects around 45% of approvals, then ℙ[V(B, c)] is around one in a million. That’s a small number, telling us that very large elections aren’t likely to be decided by a one-vote margin anyway. But it’s gargantuan compared to the number if c expects only 5% of approvals. Then ℙ[V(B, c)] is around one in 10^70. That’s about one in a quadrillion-vigintillion, if you want to know, and near the scale of possibly picking one atom at random from the entire universe! The probability of casting a pivotal vote drops off exponentially, and by this point it’s effectively zero.

With that in mind, we can drop the conditioning on V(B, c) in the expectation, giving us a new rule: Approve of a candidate c any time that:

U(c) > 𝔼[U(W(B))]

That is, approve of any candidate whose win you would like better than you expect to like the outcome of the election. In other words, imagine you have no other information on election night, and hear that this candidate has won. If this would be good news, approve of the candidate on your ballot. If it would be bad news, don’t.
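The approximate rule needs only a single threshold. Here is a sketch that assumes your beliefs are summarized as a list of (candidate, probability of winning) pairs. That input format is hypothetical, not something the analysis prescribes. The sketch computes the threshold and the resulting ballot like this:

```haskell
-- Expected utility of the election outcome, 𝔼[U(W(B))], under your beliefs.
expectedOutcome :: (a -> Double) -> [(a, Double)] -> Double
expectedOutcome u beliefs = sum [p * u c | (c, p) <- beliefs]

-- Approve exactly the candidates whose win beats that single threshold.
approvals :: (a -> Double) -> [(a, Double)] -> [a] -> [a]
approvals u beliefs = filter (\c -> u c > threshold)
  where
    threshold = expectedOutcome u beliefs

-- Made-up example: your utilities, and your beliefs about who will win.
exampleUtility :: Char -> Double
exampleUtility 'A' = 10
exampleUtility 'B' = 6
exampleUtility 'C' = 3
exampleUtility _ = 0

exampleBeliefs :: [(Char, Double)]
exampleBeliefs = [('A', 0.25), ('B', 0.5), ('D', 0.25)]
```

With these made-up numbers the threshold is 5.5, so `approvals exampleUtility exampleBeliefs "ABCD"` approves A and B but not C or D. Because every candidate is compared against the same threshold, the ballot is monotonic in your utilities.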

- This rule is still tactical. To determine how much you expect to like the outcome of the election, you need to have beliefs about who else is likely to win, which still requires an understanding of polling and public opinion and mood.

- However, there is one threshold, derived from real polling data in realistic scenarios, and you can cast your approval ballot monotonically based on that single threshold.

This is no longer a true optimal strategy, but with enough voters, the exponential falloff in ℙ[V(B, c)] as c becomes less popular is a pretty good assurance that the incorrect votes you might cast by using this strategy instead of the optimal ones are extremely unlikely to matter. In practice, this is probably the best rule to communicate to voters in an approval election with moderate to large numbers of voters.

We can get closer to the exact rule with the following hypothetical: Imagine that on election night, you have no information on the results except for a headline that proclaims: Election Too Close To Call. With that as your prior, you ask of each candidate: is it good or bad news to hear that this candidate has won? If it would be good news, then you approve of them. This still leaves one threshold, but we’re no longer making the leap that the pivotal condition for front-runners is unnecessary. Instead, we’re imagining a world in which at least some candidates, almost surely the front-runners, are tied. If this changes your decision (which it likely would only in very marginal cases), you can use this more accurate approximation.

Reducing range to approval voting

I promised to look at strategy for range voting, as well. Armed with an appreciation of approval strategy, it’s easy to extend this to an optimal range strategy for large-scale elections.

The key is to recognize that a range voting election with options 0, 1, 2, …, n is mathematically equivalent to an approval election where everyone is just allowed to vote n times. The number you mark on the range ballot can be interpreted as saying how many of your approval ballots you want to mark as approving that candidate.

Looking at it this way presents the obvious question: why would you vote differently on some ballots than others? In what situation could that possibly be the right choice?

- For small elections, say if you’re voting on places to go out and eat with your friends or coworkers, it’s possible that adding in a handful of approvals materially changes the election so that the optimal vote is different. Then it may well be optimal to cast a range ballot using some intermediate number.

- For large elections, though, you’re presented with pretty much exactly the same question each time, and you may as well give the same answer. Therefore, in large-scale elections, the optimal way to vote with a range ballot is always to rate everyone either the minimum or maximum possible score. This reduces a range election exactly to an approval election. The additional expressiveness of a range ballot is a siren call: by using it, you always vote less effectively than you would have by ignoring it and using only the two extreme choices.

Since we’re discussing political elections, which have relatively large numbers of voters, this answers the question for range elections, as well: Rate a candidate the maximum score if you like them better than you expect to like the outcome of the election. Otherwise, rate them the minimum score.
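The equivalence and the resulting all-or-nothing strategy are easy to state in code. This is a sketch with hypothetical names:

- `asApprovalBallots` reads a score s on a 0 to n scale as s approvals among n approval ballots.
- `allOrNothing` applies a single threshold, computed as in the approval case.

```haskell
-- A range score s on a 0..n scale, read as s approvals out of n approval ballots.
asApprovalBallots :: Int -> Int -> [Bool]
asApprovalBallots n s = replicate s True ++ replicate (n - s) False

-- Counting approvals across those n ballots recovers the total range score.
rangeTotal :: Int -> [Int] -> Int
rangeTotal n scores = length (filter id (concatMap (asApprovalBallots n) scores))

-- Large-electorate range strategy: maximum score above the threshold, minimum otherwise.
allOrNothing :: Int -> (a -> Double) -> Double -> [a] -> [Int]
allOrNothing n u threshold = map (\c -> if u c > threshold then n else 0)
```

For example, with a 0 to 10 scale and a threshold of 5.5, candidates with utilities 10, 6, 3 and 0 would be scored 10, 10, 0 and 0. Every score is the same as marking all n approval ballots identically.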

Summing it up

What we’ve learned, then, is that optimal voting in approval or range systems boils down to two nested rules.

- Exact rule (for the mathematically fearless): approve c iff U(c) > 𝔼[ U(W(B)) | your extra vote for c is pivotal ]. This Bayesian test weighs each candidate against the expected utility in the razor-thin worlds where they tie for first.

- Large-electorate shortcut (for everyone else): because the pivotal worlds for less popular candidates become astronomically rare as the electorate grows, the condition shrinks to a single cutoff. Approve (or give a maximum score to) every candidate whose victory you would enjoy more than you expect to enjoy the actual result. (If you can, imagine only cases where you know the election is close.)

We’ve seen why the first rule is the gold standard, but the second captures virtually all of its benefit when millions are voting. Either way, strategy is inseparable from sincerity: you must translate beliefs about polling into a utility threshold, and then measure every candidate against it. We’ve also seen, by a clear mathematical equivalence, why range ballots add no real leverage in large-scale elections. They only offer false choices that are always wrong.

The entire playbook fits on a sticky note: compute the threshold, vote all-or-nothing, and let the math do the rest.

Seeing Tom’s article reminded me of a CodeWorld feature which was implemented long ago, but I’m excited to share again in this brief note.

CodeWorld Recap

If you’re not familiar with CodeWorld, it’s a web-based programming environment I created mainly to teach mathematics and computational thinking to U.S. middle school students, ages roughly 11 to 14. The programming language is based on Haskell. Well, it is technically Haskell, but with a lot of preprocessing and tricks aimed at smoothing out the rough edges. There’s also a pure Haskell mode, giving you the full power of the idiomatic Haskell language.

In CodeWorld, the standard library includes primitives for putting pictures on the screen. This includes:

- A few primitive pictures: circles, rectangles, and the like

- Transformations to rotate, translate, scale, clip, and recolor an image

- Compositions to overlay and combine multiple pictures into a more complex picture.

Because the environment is functional and declarative — and this will be important — there isn’t a primitive to draw a circle. There is a primitive that represents the concept of a circle. You can include a circle in your drawing, of course, but you compose a picture by combining simpler pictures declaratively, and then draw the whole thing only at the very end.

Debugging in CodeWorld

CodeWorld’s declarative interface enables a number of really fun kinds of interactivity. Programmers might call them “debugging”, but for my younger audience, I view them as exploratory tools: ways they can pry open the lid of their program and explore what it’s doing.

There are a few of these that are pretty awesome. Lest I seem to be claiming the credit, the implementation of these features is due to two students in Summer of Haskell and then in Google Summer of Code: Eric Roberts and Krystal Maughan.

- Not the point here, but there are some neat features for rewinding and replaying programs, zooming in, etc.

- There’s also an “inspect” mode, in which you not only see the final result, but the whole structure of the resulting picture. For example, maybe it’s an overlay of three other pictures (a background and two characters), each of those is transformed in some way, and the base picture for each transformation is some other overlay of multiple parts. This is possible because pictures are represented not as bitmaps, but as data structures that remember how the picture was built from its individual parts.

Krystal’s recap blog post contains demonstrations of not only her own contributions, but the inspect window as well. Here’s a section showing what I’ll talk about now.

The inspect window is linked to the code editor! Hover over a structural part of the picture, and you can see which expression in your own code produced that part of the picture.

This is another application of the technique from Tom’s post. The data type representing pictures in CodeWorld stores a call stack captured at each part of the picture, so that when you inspect the picture and hover over some part, the environment knows where in your code you described that part, and it highlights the code for you, and jumps there when clicked.
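For readers who haven’t used this GHC feature, here is a minimal sketch of the idea. It assumes a toy `Picture` type rather than CodeWorld’s real one, and the constructors and helper names are hypothetical. A function with a `HasCallStack` constraint receives the source location of its caller, so each smart constructor can store where in the user’s code it was called.

```haskell
import GHC.Stack (CallStack, HasCallStack, SrcLoc (..), callStack, getCallStack)

-- A toy picture type in which every node remembers where it was built.
-- (Illustrative only; this is not CodeWorld's actual definition.)
data Picture
  = Circle CallStack Double
  | Translated CallStack Double Double Picture
  | Pictures CallStack [Picture]

-- The HasCallStack constraint makes GHC pass in the caller's source location.
circle :: HasCallStack => Double -> Picture
circle r = Circle callStack r

translated :: HasCallStack => Double -> Double -> Picture -> Picture
translated x y p = Translated callStack x y p

pictures :: HasCallStack => [Picture] -> Picture
pictures ps = Pictures callStack ps

stackOf :: Picture -> CallStack
stackOf (Circle cs _) = cs
stackOf (Translated cs _ _ _) = cs
stackOf (Pictures cs _) = cs

-- The innermost frame: which function built this node, and where it was called.
builtAt :: Picture -> Maybe (String, SrcLoc)
builtAt p = case getCallStack (stackOf p) of
  (frame : _) -> Just frame
  [] -> Nothing

-- A location an editor could use to highlight the corresponding code.
describe :: Picture -> String
describe p = case builtAt p of
  Just (fn, loc) ->
    fn ++ " at line " ++ show (srcLocStartLine loc) ++ ", column " ++ show (srcLocStartCol loc)
  Nothing -> "unknown location"
```

An inspector built this way would call something like `builtAt` on whichever node is under the mouse, then highlight that line and column in the editor.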

While it’s the same technique, I really like this example because it’s not at all like an exception. We aren’t reporting errors or anything of the sort. We’re just using this nice feature of GHC to make the connection between code and declarative data observable, so our users can learn things about their own code.

I got nerd-sniped, so I’ll share my investigation.

For me, since the solution to the general problem wasn’t obvious, it made sense to specialize. Let’s say there are n players, and just to make the game finite, let’s say that instead of choosing any natural number, you choose a number from 1 to m. Choosing very large numbers is surely a bad strategy anyway, so intuitively I expect any reasonably large choice of m to give very similar results.

n = 2

Let’s start with the case where n = 2. This one turns out to be easy: you should always pick 1, daring your opponent to pick 1, as well. We can induct on m to prove this. If m = 1, then you are required to pick 1 by the rules. But if m > 1, suppose you pick m. Either your opponent also picks m and you both lose, or your opponent picks a number smaller than m and you still lose. Clearly, this is a bad strategy, and you always do at least as well choosing one of the first m - 1 options instead. This reduces the game to one where we already know the best strategy is to pick 1.

That wasn’t very interesting, so let’s try more players.

n = 3, m = 2

Suppose there are three players, each choosing either 1 or 2. It’s impossible for all three players to choose different numbers! If you do manage to pick a unique number, then, you will be the only player to do so. It will always be the least unique number simply because it’s the only one!

If you don’t think your opponents will have figured this out, you might be tempted to pick 2. You’d be hoping that your opponents go for 1 to try to get the least number, leaving you as the only one choosing 2. This makes you predictable, though, so the other players can try to take advantage. And if one of the other players reasons the same way, you are both guaranteed to lose! What we want here is a Nash equilibrium: a strategy for all players such that no single player can do better by deviating from that strategy.

It’s not hard to see that all players should flip a coin, choosing either 1 or 2 with equal probability. There’s a 25% chance each that a player picks the unique number and wins, and there’s a 25% chance that they all choose the same number and all lose. Regrettable, but anything you do to try to avoid that outcome just makes your play more predictable so that the other players could exploit that.

It’s interesting to look at the actual computation. When computing a Nash equilibrium, we generally rely on the indifference principle: a player should always be indifferent between any choice that they make at random, since otherwise, they would take the one with the better outcome and always play that instead.

This is a bit counter-intuitive! Naively, you might think that the optimal strategy is the one that gives the best expected result. But when a Nash equilibrium involves a random choice (known as a mixed strategy), any single player actually does equally well against other optimal players no matter which mix of those random choices they make! In this game, though, predictability is a weakness. Just as a poker player tries to avoid ‘tells’ that give away the strength of their hand, players in this number-choosing game need to be unpredictable. The reason for playing the Nash equilibrium isn’t that it gives the best expected result against optimal opponents, but rather that it can’t be exploited by an opponent.

Let’s apply this indifference principle. This game is completely symmetric (there’s no order of turns, and all players have the same choices and payoffs available), so an optimal strategy ought to be the same for any player. So let’s say p is the probability that any single player will choose 1. If you choose 1, you will win with probability (1 - p)², while if you choose 2, you’ll win with probability p². If you set these equal to each other as per the indifference principle and solve the equation, you get p = 0.5, as we reasoned above.

n = 3, m = 3

Things get more interesting if each player can choose 1, 2, or 3. Now it’s possible for each player to choose uniquely, so it starts to matter which unique number you pick. Let’s say each player chooses 1, 2, and 3 with the probabilities p, q, and r respectively. We can analyze the probability of winning with each choice.

- If you pick 1, then you always win unless someone else also picks a 1. Your chance of winning, then, is (q + r)².

- If you pick 2, then for you to win, either both other players need to pick 1 (eliminating each other because of uniqueness and leaving you to win by default), or both other players need to pick 3, so that you’ve picked the least number. Your chance of winning is p² + r².

- If you pick 3, then you need both opponents to pick the same number as each other, either 1 or 2. Your chance of winning is p² + q².

Setting these equal to each other immediately shows us that since p² + q² = p² + r², we must conclude that q = r. Then p² + q² = (q + r)² = 4q², so p² = 3q² = 3r². Together with p + q + r = 1, we can conclude that p = 2√3 - 3 ≈ 0.464, while q = r = 2 - √3 ≈ 0.268.
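As a quick numeric sanity check of this solution, the following sketch evaluates the three win probabilities at p = 2√3 - 3 and q = r = 2 - √3. All three should agree, each equal to 4q² = 28 - 16√3 ≈ 0.287.

```haskell
-- Probability of winning with each choice (1, 2, 3) when both opponents
-- independently choose 1, 2, 3 with probabilities p, q, r.
winProbabilities :: (Double, Double, Double) -> [Double]
winProbabilities (p, q, r) =
  [ (q + r) ^ 2 -- pick 1: nobody else picks 1
  , p ^ 2 + r ^ 2 -- pick 2: both others pick 1, or both pick 3
  , p ^ 2 + q ^ 2 -- pick 3: both others pick 1, or both pick 2
  ]

-- The equilibrium derived above: p = 2√3 - 3, q = r = 2 - √3.
equilibrium :: (Double, Double, Double)
equilibrium = (2 * sqrt 3 - 3, 2 - sqrt 3, 2 - sqrt 3)
```

Perturbing p slightly (and rebalancing q and r so they still sum with it to 1) makes the three values diverge. That gap is what an opponent could exploit.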

This is our first really interesting result. Can we generalize?

n = 3, in general

The reasoning above generalizes well. If there are three players, and you pick a number k, you are betting that either the other two players will pick the same number less than k, or they will each pick numbers greater than k (regardless of whether they are the same one).

I’ll switch notation here for convenience. Let X be a random variable representing a choice by a player from the Nash equilibrium strategy. Then if you choose k, your probability of winning is P(X=1)² + … + P(X=k-1)² + P(X>k)². The indifference principle tells us that this should be equal for any choice of k. Equivalently, for any k from 1 to m - 1, the probability of winning when choosing k is the same as the probability when choosing k + 1. So:

- P(X=1)² + … + P(X=k-1)² + P(X>k)² = P(X=1)² + … + P(X=k)² + P(X>k+1)²

- Cancelling the common terms: P(X>k)² = P(X=k)² + P(X>k+1)²

- Rearranging: P(X=k) = √(P(X≥k+1)² - P(X>k+1)²)

This gives us a recursive formula that we can use (in reverse) to compute P(X=k), if only we knew P(X=m) to get started. If we just pick something arbitrary, though, it turns out that all the results are just multiples of that choice. We can then divide by the sum of them all to normalize the probabilities to sum to 1.

Here I can write some code (in Haskell):

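```haskell
import Probability.Distribution (Distribution, categorical, probabilities)

-- Three-player Nash equilibrium when choices range from 1 to m.
nashEquilibriumTo :: Integer -> Distribution Double Integer
nashEquilibriumTo m = categorical (zip allPs [1 ..])
  where
    allPs = go m 1 0 []

    -- Walk down from k = m, tracking unnormalized pEqual = P(X=k) and
    -- pGreater = P(X>k), starting from the arbitrary choice P(X=m) = 1.
    -- At k = 1, pEqual + pGreater is the total mass, so dividing normalizes.
    go 1 pEqual pGreater ps = (/ (pEqual + pGreater)) <$> (pEqual : ps)
    go k pEqual pGreater ps =
      let pGreaterEqual = pEqual + pGreater
       in go
            (k - 1)
            (sqrt (pGreaterEqual * pGreaterEqual - pGreater * pGreater))
            pGreaterEqual
            (pEqual : ps)

main :: IO ()
main = print (probabilities (nashEquilibriumTo 100))
```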

I’ve used a probability library from https://github.com/cdsmith/prob that I wrote with Shae Erisson during a fun hacking session a few years ago. It doesn’t help yet, but we’ll play around with some of its further features below.
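If you don’t have that library handy, the same recursion can be run on a plain list of probabilities. This is a hypothetical, dependency-free variant: `equilibriumProbs` is not part of the library. It normalizes by the sum of the weights rather than by the final pEqual + pGreater, which amounts to the same thing.

```haskell
-- Dependency-free variant of nashEquilibriumTo (a sketch):
-- returns [P(X=1), ..., P(X=m)] for the three-player equilibrium.
equilibriumProbs :: Int -> [Double]
equilibriumProbs m = map (/ total) weights
  where
    total = sum weights

    -- Unnormalized weights, built from k = m down to 1, starting from the
    -- arbitrary choice P(X=m) = 1 (and P(X>m) = 0).
    weights = go m 1 0 []

    go :: Int -> Double -> Double -> [Double] -> [Double]
    go k pEqual pGreater ps
      | k <= 1 = pEqual : ps
      | otherwise =
          let pGreaterEqual = pEqual + pGreater
           in go
                (k - 1)
                (sqrt (pGreaterEqual ^ 2 - pGreater ^ 2)) -- P(X=k-1) via the recursion
                pGreaterEqual -- P(X>k-1) = P(X≥k)
                (pEqual : ps)
```

Evaluating `take 7 (equilibriumProbs 100)` should give the same leading probabilities as the library version, up to floating-point rounding.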

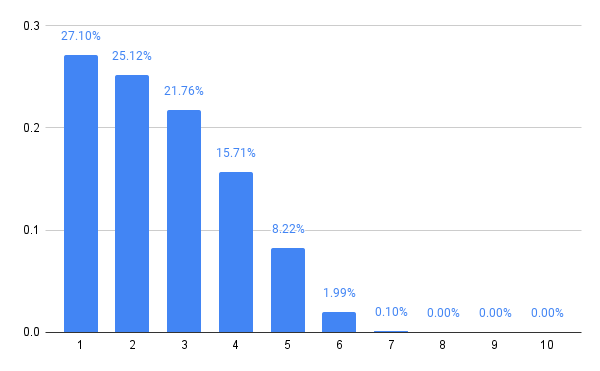

Trying a few large values for m confirms my suspicion that any reasonably large choice of m gives effectively the same result.

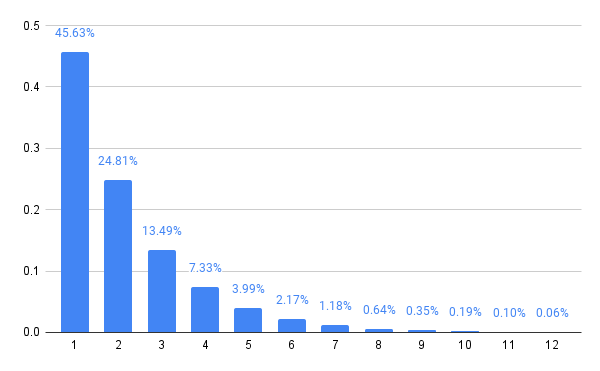

```
1 -> 0.4563109873079237
2 -> 0.24809127016999155
3 -> 0.1348844977362459
4 -> 7.333521940168612e-2
5 -> 3.987155303205954e-2
6 -> 2.1677725302500214e-2
7 -> 1.1785941067126387e-2
```

By inspection, this appears to be a geometric distribution, parameterized by the probability 0.4563109873079237. We can check that the distribution is geometric, which just means that for all k < m - 1, the ratio P(X > k) / P(X ≥ k) is the same as P(X > k + 1) / P(X ≥ k + 1). This is the defining property of a geometric distribution, and some simple algebra confirms that it holds in this case.

But what is this bizarre number? A few Google queries get us to an answer of sorts. A 2002 Ph.D. dissertation by Joseph Myers seems to arrive at the same number in the solution to a question about graph theory, where it’s identified as the real root of the polynomial x³ - 4x² + 6x - 2. We can check that this is right for a geometric distribution.

Starting with P(X=k) = √(P(X≥k+1)² - P(X>k+1)²) where k = 1, we get P(X=1) = √(P(X≥2)² - P(X>2)²). If P(X=1) = p, then P(X≥2) = 1 - p, and P(X>2) = (1 - p)². So we have p = √((1 - p)² - ((1 - p)²)²), which, after squaring both sides, expands to p⁴ - 4p³ + 6p² - 2p = 0. Either p = 0 (which is impossible for a geometric distribution), or p³ - 4p² + 6p - 2 = 0, giving the probability seen above.

(Whether and how this is connected to the graph theory question investigated in that dissertation, though, is certainly beyond my comprehension.)
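The root itself is easy to confirm numerically. This sketch uses Newton’s method, which is my own choice of method, not something taken from the dissertation. The derivative 3x² - 8x + 6 has a negative discriminant, so it is always positive. That means the cubic has exactly one real root, and Newton’s method never divides by zero here.

```haskell
-- f(x) = x³ - 4x² + 6x - 2 and its derivative.
cubic, cubic' :: Double -> Double
cubic x = x ^ 3 - 4 * x ^ 2 + 6 * x - 2
cubic' x = 3 * x ^ 2 - 8 * x + 6

-- A fixed number of Newton steps from a starting guess.
newton :: Int -> Double -> Double
newton 0 x = x
newton n x = newton (n - 1) (x - cubic x / cubic' x)

-- How far p is from satisfying p = √((1 - p)² - ((1 - p)²)²),
-- the k = 1 step of the recursion for a geometric distribution.
recursionResidual :: Double -> Double
recursionResidual p = p - sqrt ((1 - p) ^ 2 - ((1 - p) ^ 2) ^ 2)
```

Starting from 0.5, `newton 50 0.5` lands on approximately 0.4563109873, matching the first probability in the table above. The residual of the recursion at that point is zero up to rounding.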

You may wonder, in these large limiting cases, how often it turns out that no one wins, or that we see wins with each number. Answering questions like this is why I chose to use my probability library. We can first define a function to implement the game’s basic rule:

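```haskell
import Control.Monad (replicateM) -- used by gameTo and expectedOutcomesTo
import Data.List (group, sort)
import Data.Maybe (listToMaybe)

-- The least number that appears exactly once, if any.
leastUnique :: (Ord a) => [a] -> Maybe a
leastUnique xs = listToMaybe [x | [x] <- group (sort xs)]
```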

And then we can define the whole game using the strategy above for each player:

```haskell
gameTo :: Integer -> Distribution Double (Maybe Integer)
gameTo m = do
  ns <- replicateM 3 (nashEquilibriumTo m)
  return (leastUnique ns)
```

Then we can update main to tell us the distribution of game outcomes, rather than plays:

```haskell
main :: IO ()
main = print (probabilities (gameTo 100))
```

And get these probabilities:

```
Nothing -> 0.11320677243374572
Just 1 -> 0.40465349320873445
Just 2 -> 0.22000565820506113
Just 3 -> 0.11961465909617276
Just 4 -> 6.503317590749513e-2
Just 5 -> 3.535782320137907e-2
Just 6 -> 1.9223659987298684e-2
Just 7 -> 1.0451692718822408e-2
```

An 11% probability of no winner for large m is an improvement over the 25% we computed for m = 2. Once again, a least unique number greater than 7 has less than 1% probability, and the probabilities drop even more rapidly from there.
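The same numbers can be reproduced without the probability library by enumerating every triple of plays directly. This sketch makes two assumptions:

- It uses the geometric form found above, truncated at 30 choices, with the hypothetical helpers `geometricProbs` and `outcomes`.
- It relies on the fact that, with three players, there is no winner exactly when all three pick the same number. So P(no winner) is also Σ P(X=k)³.

```haskell
import Data.List (group, sort)
import qualified Data.Map.Strict as Map
import Data.Maybe (listToMaybe)

-- The least number chosen by exactly one player, if any.
leastUnique :: Ord a => [a] -> Maybe a
leastUnique xs = listToMaybe [x | [x] <- group (sort xs)]

-- Hypothetical helper: p(1 - p)^(k - 1) for k = 1..m (a truncated geometric).
geometricProbs :: Double -> Int -> [Double]
geometricProbs p m = [p * (1 - p) ^ (k - 1) | k <- [1 .. m]]

-- Exact outcome distribution for three players who each play probs
-- independently, found by enumerating every triple of choices.
outcomes :: [Double] -> Map.Map (Maybe Int) Double
outcomes probs =
  Map.fromListWith
    (+)
    [ (leastUnique [a, b, c], pa * pb * pc)
    | (a, pa) <- choices
    , (b, pb) <- choices
    , (c, pc) <- choices
    ]
  where
    choices = zip [1 ..] probs
```

Calling `outcomes [0.5, 0.5]` reproduces the m = 2 coin-flip case from earlier, with no winner 25% of the time.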

More than three players?

With an arbitrary number of players, the expressions for the probability of winning grow rather more involved, since you must consider the possibility that some other players have chosen numbers greater than yours, while others have chosen smaller numbers that are duplicated, possibly in twos or in threes.

For the four-player case, this isn’t too bad. The three winning possibilities are:

- All three other players choose the same smaller number. This has probability P(X=1)³ + … + P(X=k-1)³

- All three other players choose larger numbers, though not necessarily the same one. This has probability P(X > k)³

- Two of the three other players choose the same smaller number, and the third chooses a larger number. This has probability 3 P(X > k) (P(X=1)² + … + P(X=k-1)²)

You could possibly work out how to compute this one without too much difficulty. The algebra gets harder, though, and I dug deep enough to determine that the Nash equilibrium is no longer a geometric distribution. If you assume the Nash equilibrium is geometric, then numerically, the probability of choosing 1 that gives 1 and 2 equal rewards would need to be about 0.350788, but this choice gives too small a reward for choosing 3 or more, implying they ought to be chosen less often.
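The three cases translate directly into a formula. Here is a sketch, with hypothetical helper names, that finds by bisection the geometric p that makes choices 1 and 2 equally good. That lets you check the 0.350788 figure and the shortfall for choosing 3. It truncates the geometric distribution at 200 choices, which drops a negligible amount of probability.

```haskell
-- Win probability when you choose k and three opponents independently play
-- probs, where probs !! (j - 1) = P(X = j).
winProb4 :: [Double] -> Int -> Double
winProb4 probs k = allSameSmaller + allLarger + pairSmallerOneLarger
  where
    smaller = take (k - 1) probs
    larger = sum (drop k probs) -- P(X > k)
    allSameSmaller = sum (map (^ 3) smaller)
    allLarger = larger ^ 3
    pairSmallerOneLarger = 3 * larger * sum (map (^ 2) smaller)

-- Geometric probabilities p(1 - p)^(j - 1), truncated at 200 choices.
truncatedGeometric :: Double -> [Double]
truncatedGeometric p = [p * (1 - p) ^ (j - 1) | j <- [1 .. 200 :: Int]]

-- Bisection for the geometric p at which choosing 1 and choosing 2 pay equally.
-- Small p favors choosing 1 (positive gap), so a positive gap means p is too low.
balancingP :: Double
balancingP = go 0.01 0.99 (60 :: Int)
  where
    gap p = winProb4 (truncatedGeometric p) 1 - winProb4 (truncatedGeometric p) 2
    go lo hi 0 = (lo + hi) / 2
    go lo hi n
      | gap mid > 0 = go mid hi (n - 1)
      | otherwise = go lo mid (n - 1)
      where
        mid = (lo + hi) / 2
```

At that p, `winProb4` for choosing 3 comes out below the common value for choosing 1 or 2. That is the imbalance that rules out a geometric equilibrium.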

For larger n, even stating the equations turns into a nontrivial problem of accurately counting the possible ways to win. I’d certainly be interested if there’s a nice-looking result here, but I do not yet know what it is.

Numerical solutions

We can solve this numerically, though. Using the probability library mentioned above, one can easily compute, for any finite game and any strategy (as a probability distribution of moves), the expected benefit for each choice.

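```haskell
-- For each choice i, the probability that i is the least unique number
-- when the other n - 1 players each play dist.
expectedOutcomesTo :: Int -> Int -> Distribution Double Int -> [Double]
expectedOutcomesTo n m dist =
  [ probability (== Just i) $ leastUnique . (i :) <$> replicateM (n - 1) dist
  | i <- [1 .. m]
  ]
```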
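For experimenting without the library, the same quantity can be computed by brute force over plain lists. This is a hypothetical list-based analogue of `expectedOutcomesTo`, where `probs !! (k - 1)` is P(X = k). Like the original, it enumerates every combination of the other n - 1 players’ choices, so it is only practical for small n and m.

```haskell
import Control.Monad (replicateM)
import Data.List (group, sort)
import Data.Maybe (listToMaybe)

-- The least number that appears exactly once, if any.
leastUnique :: Ord a => [a] -> Maybe a
leastUnique xs = listToMaybe [x | [x] <- group (sort xs)]

-- For each choice i, the probability that i wins when the other n - 1
-- players independently play probs.
winProbabilities :: Int -> [Double] -> [Double]
winProbabilities n probs =
  [ sum
      [ product (map snd others)
      | others <- replicateM (n - 1) choices
      , leastUnique (i : map fst others) == Just i
      ]
  | (i, _) <- choices
  ]
  where
    choices = zip [1 :: Int ..] probs
```

For example, `winProbabilities 3 [0.5, 0.5]` gives `[0.25, 0.25]`, matching the coin-flip analysis for three players.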

We can then iteratively adjust the probability of each choice slightly based on how its expected outcome compares to other expected outcomes in the distribution. It turns out to be good enough to compare with an immediate neighbor. Just so that all of our distributions remain valid, instead of working with the global probabilities P(X=k), we’ll do the computation with conditional probabilities P(X = k | X ≥ k). That way any sequence of probabilities is valid, without worrying about whether they sum to 1. Given this list of conditional probabilities, we can produce a probability distribution like this.

```haskell
distFromConditionalStrategy :: [Double] -> Distribution Double Int
distFromConditionalStrategy = go 1
  where
    go i [] = pure i
    go i (q : qs) = do
      choice <- bernoulli q
      if choice then pure i else go (i + 1) qs
```
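The conditional parameterization can also be converted to plain probabilities directly. Doing so shows why it is always valid: each q_k takes a share of whatever probability remains, and the last choice receives the leftover. Here is a small sketch; the name `fromConditional` is hypothetical.

```haskell
-- Convert conditional probabilities q_k = P(X = k | X ≥ k), for k = 1..m-1,
-- into plain probabilities P(X = k) for k = 1..m. Whatever mass remains
-- after the last q goes to m, so any list of values in [0, 1] is valid.
fromConditional :: [Double] -> [Double]
fromConditional = go 1
  where
    go remaining [] = [remaining]
    go remaining (q : qs) = remaining * q : go (remaining * (1 - q)) qs
```

For instance, `fromConditional [0.5, 0.5]` is `[0.5, 0.25, 0.25]`. That is the same distribution `distFromConditionalStrategy [0.5, 0.5]` describes.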

Then we can optimize numerically, using the difference of each choice’s win probability from its neighbor as a diff to add to the conditional probability of that choice.

```haskell
refine :: Int -> Int -> [Double] -> Distribution Double Int
refine n iters strategy
  | iters == 0 = equilibrium
  | otherwise =
      let ps = expectedOutcomesTo n m equilibrium
          delta = zipWith subtract (drop 1 ps) ps
          adjs = zipWith (+) strategy delta
       in refine n (iters - 1) adjs
  where
    m = length strategy + 1
    equilibrium = distFromConditionalStrategy strategy
```

It works well enough to run this for 10,000 iterations at n = 4, m = 10.

```haskell
main :: IO ()
main = do
  let n = 4
      m = 10
      d = refine n 10000 (replicate (m - 1) 0.3)
  print $ probabilities d
  print $ expectedOutcomesTo n m d
```

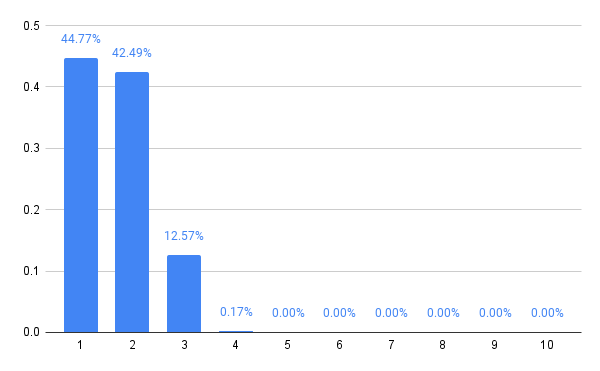

The resulting probability distribution is, to me, at least, quite surprising! I would have expected that more players would incentivize you to choose a higher number, since the additional players make collisions on low numbers more likely. But it seems the opposite is true. While three players at least occasionally (with 1% or more probability) should choose numbers up to 7, four players should apparently stop at 3.

Huh. I’m not sure why this is true, but I’ve checked the computation in a few ways, and it seems to be a real phenomenon. Please leave a comment if you have a better intuition for why it ought to be so!

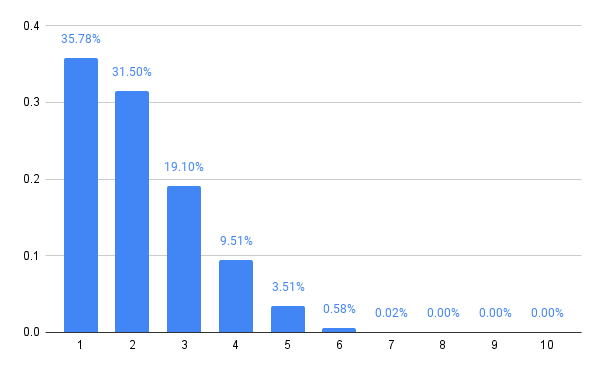

With five players, at least, we see some larger numbers again in the Nash equilibrium, lending support to the idea that there was something unusual going on with the four-player case. Here’s the strategy for five players:

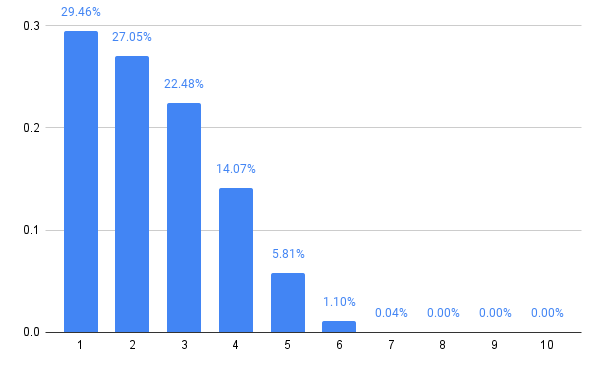

The six-player variant retracts the distribution a little, reducing the probabilities of choosing 5 or 6, but then seven players expand the choices a bit. A pattern is starting to emerge: even numbers of players lend themselves to a tighter style of play, while odd numbers open up the strategy.

In general, it looks like this is converging to something. The computations are also getting progressively slower, so let’s stop there.

Game variants

There is plenty of room for variation in the game, which would change the analysis. If you’re looking for a variant to explore on your own, in addition to expanding the game to more players, you might try these:

- What if a tie awards each player an equal fraction of the reward for a full win, instead of nothing at all? (This actually simplifies the analysis a bit!)

- What if, instead of all wins being equal, we found the least unique number, and paid that player an amount equal to the number itself? Now there’s somewhat less of an incentive for players to choose small numbers, since a larger number gives a larger payoff! This gives the problem something like a prisoner’s dilemma flavor, where players could coordinate to make more money, but leave themselves open to being undercut by someone willing to make a small profit by betraying the coordinated strategy.

What other variants might be interesting?

Addendum (Sep 26): Making it faster

As is often the case, the naive code I originally wrote can be significantly improved. In this case, the code was evaluating probabilities by enumerating all the ways players might choose numbers, and then computing the winner for each one. For large values of m and n this is a lot: there are on the order of mⁿ combinations, which grows exponentially in the number of players.

There’s a better way. We don’t need to remember each individual choice to determine the outcome of the game in the presence of further choices. Instead, we need only determine which numbers have been chosen once, and which have been chosen more than once.

```haskell
import Data.Set (Set)
import qualified Data.Set as Set

data GameState = GameState
  { dups :: Set Int,
    uniqs :: Set Int
  }
  deriving (Eq, Ord)
```

To add a new choice to a GameState requires checking whether it’s one of the existing unique or duplicate choices:

```haskell
addToState :: Int -> GameState -> GameState
addToState n gs@(GameState dups uniqs)
  | Set.member n dups = gs
  | Set.member n uniqs = GameState (Set.insert n dups) (Set.delete n uniqs)
  | otherwise = GameState dups (Set.insert n uniqs)
```

We can now directly compute the distribution of GameState corresponding to a set of n players playing moves with a given distribution. The use of simplify from the probability library here is crucial: it combines all the different paths that lead to the same outcome into a single case, avoiding the exponential explosion.

```haskell
stateDist :: Int -> Distribution Double Int -> Distribution Double GameState
stateDist n moves = go n (pure (GameState mempty mempty))
  where
    go 0 states = states
    go i states = go (i - 1) (simplify $ addToState <$> moves <*> states)
```

Now it remains to determine whether a certain move can win, given the game state resulting from the remaining moves.

```haskell
win :: Int -> GameState -> Bool
win n (GameState dups uniqs) =
  not (Set.member n dups) && maybe True (> n) (Set.lookupMin uniqs)
```

Finally, we update the function that computes win probabilities to use this new code.

```haskell
expectedOutcomesTo :: Int -> Int -> Distribution Double Int -> [Double]
expectedOutcomesTo n m dist = [probability (win i) states | i <- [1 .. m]]
  where
    states = stateDist (n - 1) dist
```
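The collapsing trick doesn’t depend on the probability library. Here is a minimal, self-contained sketch that assumes a distribution is just a `Data.Map` from outcomes to probabilities; `Dist`, `addPlayer`, and `winProbs` are hypothetical names, not the library’s API. Merging equal game states with `Map.fromListWith (+)` plays the role that `simplify` plays above.

```haskell
import Data.Map.Strict (Map)
import qualified Data.Map.Strict as Map
import Data.Set (Set)
import qualified Data.Set as Set

-- Hypothetical stand-in for a probability distribution: outcome -> probability.
type Dist a = Map a Double

data GameState = GameState {dups :: Set Int, uniqs :: Set Int}
  deriving (Eq, Ord, Show)

addToState :: Int -> GameState -> GameState
addToState k gs@(GameState d u)
  | Set.member k d = gs
  | Set.member k u = GameState (Set.insert k d) (Set.delete k u)
  | otherwise = GameState d (Set.insert k u)

-- Add one more player's move. fromListWith (+) merges every path that
-- reaches the same GameState, which keeps the number of states small.
addPlayer :: Dist Int -> Dist GameState -> Dist GameState
addPlayer moves states =
  Map.fromListWith
    (+)
    [ (addToState k s, p * q)
    | (k, p) <- Map.toList moves,
      (s, q) <- Map.toList states
    ]

-- Distribution of game states produced by n players all playing 'moves'.
stateDist :: Int -> Dist Int -> Dist GameState
stateDist n moves =
  iterate (addPlayer moves) (Map.singleton (GameState Set.empty Set.empty) 1) !! n

win :: Int -> GameState -> Bool
win k (GameState d u) = not (Set.member k d) && maybe True (> k) (Set.lookupMin u)

-- Win probability of each choice 1..m against n - 1 opponents playing 'moves'.
winProbs :: Int -> Int -> Dist Int -> [Double]
winProbs n m moves =
  [sum [p | (s, p) <- Map.toList states, win k s] | k <- [1 .. m]]
  where
    states = stateDist (n - 1) moves
```

With two players choosing 1 or 2 uniformly, `winProbs 2 2 (Map.fromList [(1, 0.5), (2, 0.5)])` gives `[0.5, 0.0]`: choosing 1 wins exactly when the opponent picks 2, and choosing 2 never wins.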

The result is that while I previously had to leave the code running overnight to compute the n = 8 case, I can now easily compute cases up to 15 players with enough patience. This would involve computing the winner for about a quadrillion games in the naive code, making it hopeless, but the simplification reduces that to something feasible.

It seems that once you leave behind small numbers of players where odd combinatorial things happen, the equilibrium eventually follows a smooth pattern. I suppose with enough players, the probability for every number would peak and then decline, just as we see for 4 and 5 here, as it becomes worthwhile to spread your choices even further to avoid duplicates. That’s a nice confirmation of my intuition.

Some of this I covered in earlier posts, but I’m going to construct things a little differently, so I’ll start from scratch. The Collatz conjecture is about the function f(n) defined to be n/2 if n is even, or 3n+1 if n is odd. Starting with some number (say, 7) we can apply this function repeatedly to get 7, then 22, then 11, then 34, 17, 52, 26, 13, 40, 20, 10, 5, 16, 8, 4, 2, 1, and then we’ll repeat 4, 2, 1, 4, 2, 1, and so on forever. The conjecture is that no matter which positive integer you start with, you’ll always end up in that same loop of 4, 2, and 1.
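A direct transcription of f reproduces the sequence for 7. This is a minimal sketch; `collatz` and `trajectory` are names chosen here for illustration.

```haskell
-- The Collatz function f: halve even numbers, send odd n to 3n + 1.
collatz :: Integer -> Integer
collatz n
  | even n = n `div` 2
  | otherwise = 3 * n + 1

-- Apply f repeatedly up to the first 1 (never finishes if 1 is never reached).
trajectory :: Integer -> [Integer]
trajectory n = takeWhile (/= 1) (iterate collatz n) ++ [1]
```

Evaluating `trajectory 7` yields the 17 values listed above, ending in 1.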

For reference, it’s going to be more convenient for us to work with something called the shortcut Collatz map. The idea here is that when n is odd, we already know that 3n+1 will be even. So we can shortcut one iteration by jumping straight to (3n+1)/2, just avoiding a separate pass for the division by two that we already know will be necessary.
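In code, the shortcut only changes the odd case. A minimal sketch (the name `shortcut` is illustrative):

```haskell
-- The shortcut Collatz map: for odd n, fold the guaranteed halving
-- into the same step as 3n + 1.
shortcut :: Integer -> Integer
shortcut n
  | even n = n `div` 2
  | otherwise = (3 * n + 1) `div` 2
```

Starting from 7, `take 12 (iterate shortcut 7)` gives 7, 11, 17, 26, 13, 20, 10, 5, 8, 4, 2, 1: the same trajectory as before, minus the even values 22, 34, 52, 40 and 16 that immediately follow an odd step.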

As a society, we tend to work in base 10, but the question I asked in an article a couple of weeks ago is what happens if you perform this computation in base 2 or 3 instead.

- In base 2, it’s trivial to decide if a number is even or odd, and if it’s even, to divide by two. You just look at the least significant bit, and drop it if it’s a zero!

- In base 3, it’s trivial to compute 3n+1. You just add a 1 digit to the end of the number!
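Both shortcuts are easy to check with digit lists. In this sketch, `toDigits` and `fromDigits` are illustrative helpers that store digits least significant first, so the right end of the written number is the head of the list.

```haskell
-- Digits of a non-negative integer in base b, least significant digit first.
toDigits :: Integer -> Integer -> [Integer]
toDigits _ 0 = []
toDigits b n = n `mod` b : toDigits b (n `div` b)

fromDigits :: Integer -> [Integer] -> Integer
fromDigits b = foldr (\d acc -> d + b * acc) 0

-- Base 2: parity is the least significant bit ...
isEvenBase2 :: Integer -> Bool
isEvenBase2 n = take 1 (toDigits 2 n) /= [1]

-- ... and halving an even number just drops that bit.
halveBase2 :: Integer -> Integer
halveBase2 n = fromDigits 2 (drop 1 (toDigits 2 n))

-- Base 3: 3n + 1 is n with a 1 digit appended on the right.
threeNPlusOneBase3 :: Integer -> Integer
threeNPlusOneBase3 n = fromDigits 3 (1 : toDigits 3 n)
```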

We could go either way, really, and in my original article I explored both computations to see what they looked like. This time, we’ll first head deep into the base 2 side, and see where it leads us.

Collatz in Base 2

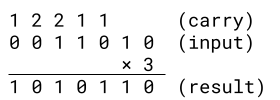

When computing the Collatz function in base 2, the computationally significant part is to multiply a base 2 number by 3. We can work this out in the standard algorithm we all learned in elementary school, working from right to left, and keeping track of a carry at each step.

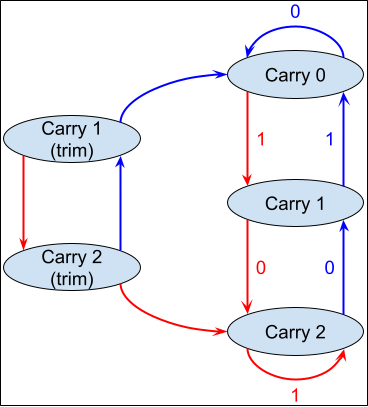

We can even enumerate the rules:

- If the next bit is a 0 and the carry is a 0, then output a 0 and carry a 0.

- If the next bit is a 1 and the carry is a 0, then output a 1 and carry a 1.

- If the next bit is a 0 and the carry is a 1, then output a 1 and carry a 0.

- If the next bit is a 1 and the carry is a 1, then output a 0 and carry a 2.

- If the next bit is a 0 and the carry is a 2, then output a 0 and carry a 1.

- If the next bit is a 1 and the carry is a 2, then output a 1 and carry a 2.

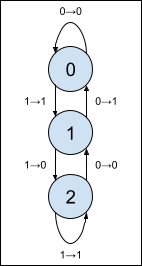

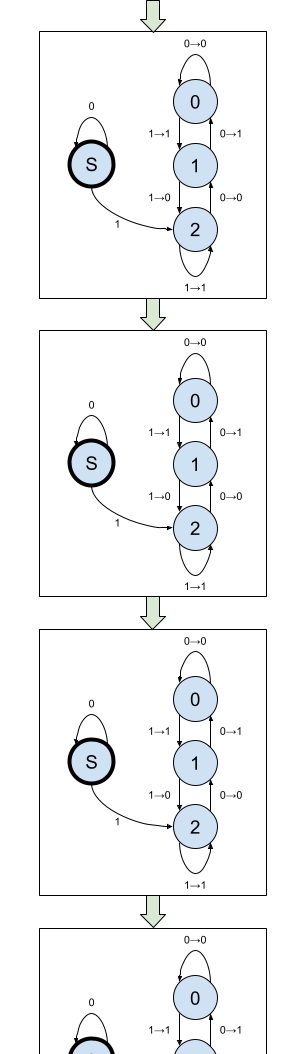

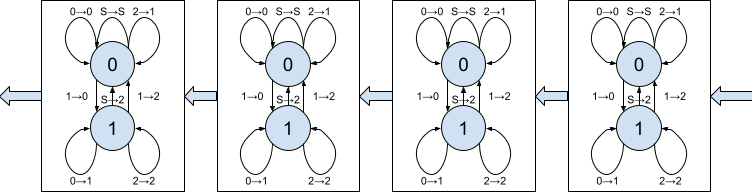

We can then represent these rules using a finite state machine with a transition diagram.

This machine isn’t too hard to understand, really. When you see a 0, move up one state; when you see a 1, move down one state. When the carry is 1 (before moving), output the opposite bit; otherwise, output the same bit. That’s all there is to it.
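The six rules fit into one line of arithmetic: with input bit b and carry c, the output bit is (3b + c) mod 2 and the new carry is (3b + c) div 2. Here is a minimal sketch of the machine built on that observation (function names are illustrative); any carry left over at the end is flushed as extra bits.

```haskell
-- One step of multiplying by 3, bits least significant first.
-- Given the carry (0, 1 or 2) and the next input bit, return (output bit, new carry).
times3Step :: Int -> Int -> (Int, Int)
times3Step carry bit = (total `mod` 2, total `div` 2)
  where
    total = 3 * bit + carry

-- Run the machine from a given starting carry; the final carry is flushed as extra bits.
times3Bits :: Int -> [Int] -> [Int]
times3Bits carry [] = flush carry
  where
    flush 0 = []
    flush c = c `mod` 2 : flush (c `div` 2)
times3Bits carry (b : bs) = out : times3Bits carry' bs
  where
    (out, carry') = times3Step carry b

toBits :: Int -> [Int]
toBits 0 = []
toBits n = n `mod` 2 : toBits (n `div` 2)

fromBits :: [Int] -> Int
fromBits = foldr (\b acc -> b + 2 * acc) 0
```

Starting with carry 0 computes 3n; starting with carry 1 computes 3n + 1, which is exactly the first of the modifications described next.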

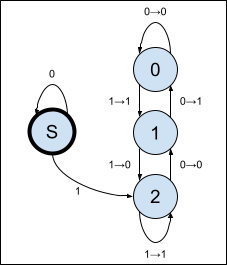

We will make three small modifications to this simple state machine:

- In the Collatz map, we want to compute 3n+1. That just amounts to starting with a carry of 1, rather than 0.

- Before computing 3n+1, we should divide by two until the number is odd. That amounts to adding a new “Start” state, or S for short, that ignores zeros on the right, and then acts like carry 1 when it encounters the first 1 bit. (Recall that we’re working from right to left!)

- Finally, let’s compute the shortcut map as well: as discussed above, when we compute 3n+1, it will always be even. (The start state, acting like carry 1 on the first 1 bit, always moves into carry 2, and that transition emits a 0 as the least significant bit.) We do not emit that zero when moving from the start state to carry 2, so the bits that come out represent (3n+1)/2.

The resulting machine looks like this.

When fed the right-to-left bits of a non-zero number, this machine will compute what we might call a compressed Collatz map: dividing by 2 as long as the number remains even, and then computing (3n+1)/2 just like the shortcut Collatz map does.
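Here is a minimal sketch of that machine (state and function names are illustrative). The `Start` state swallows low zero bits, and on the first 1 it moves to carry 2 without emitting the 0 that 3n + 1 would produce. Two extra zero bits at the end are enough to flush a carry of at most 2.

```haskell
data MachineState = Start | Carry Int
  deriving (Eq, Show)

-- One transition: the new state plus an optional output bit (Start emits nothing).
step :: MachineState -> Int -> (MachineState, Maybe Int)
step Start 0 = (Start, Nothing)
step Start _ = (Carry 2, Nothing) -- 3*1 + 1 = 4: the low 0 is dropped, carry 2
step (Carry c) b = (Carry (t `div` 2), Just (t `mod` 2))
  where
    t = 3 * b + c

-- Feed bits (least significant first) and collect the emitted bits.
runMachine :: [Int] -> [Int]
runMachine = go Start
  where
    go _ [] = []
    go s (b : bs) = case step s b of
      (s', Nothing) -> go s' bs
      (s', Just o) -> o : go s' bs

toBits :: Integer -> [Int]
toBits 0 = []
toBits n = fromIntegral (n `mod` 2) : toBits (n `div` 2)

fromBits :: [Int] -> Integer
fromBits = foldr (\b acc -> fromIntegral b + 2 * acc) 0

-- The compressed Collatz map, for n > 0.
compressed :: Integer -> Integer
compressed n = fromBits (runMachine (toBits n ++ [0, 0]))
```

For example, `map compressed [7, 11, 17, 26, 20, 8, 2]` gives `[11, 17, 26, 20, 8, 2, 2]`.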

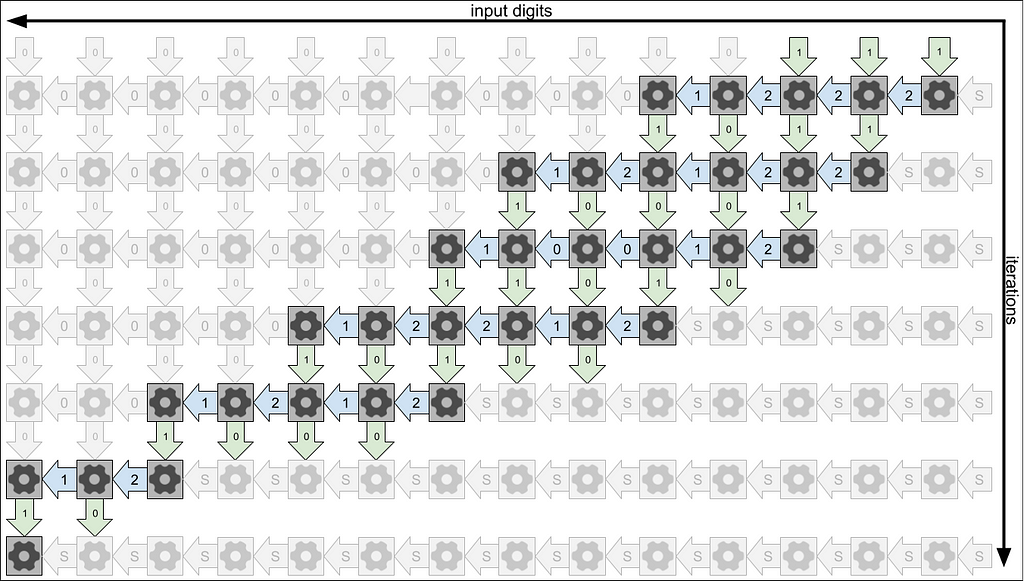

Iterating the Map

The Collatz conjecture isn’t about a single application of this map, though, but rather about the trajectory of a number when the map is applied many times in succession. To simulate this, we’ll want a whole infinite array of these machines connected end to end, so the bits that leave each one arrive at the one after. Something like this:

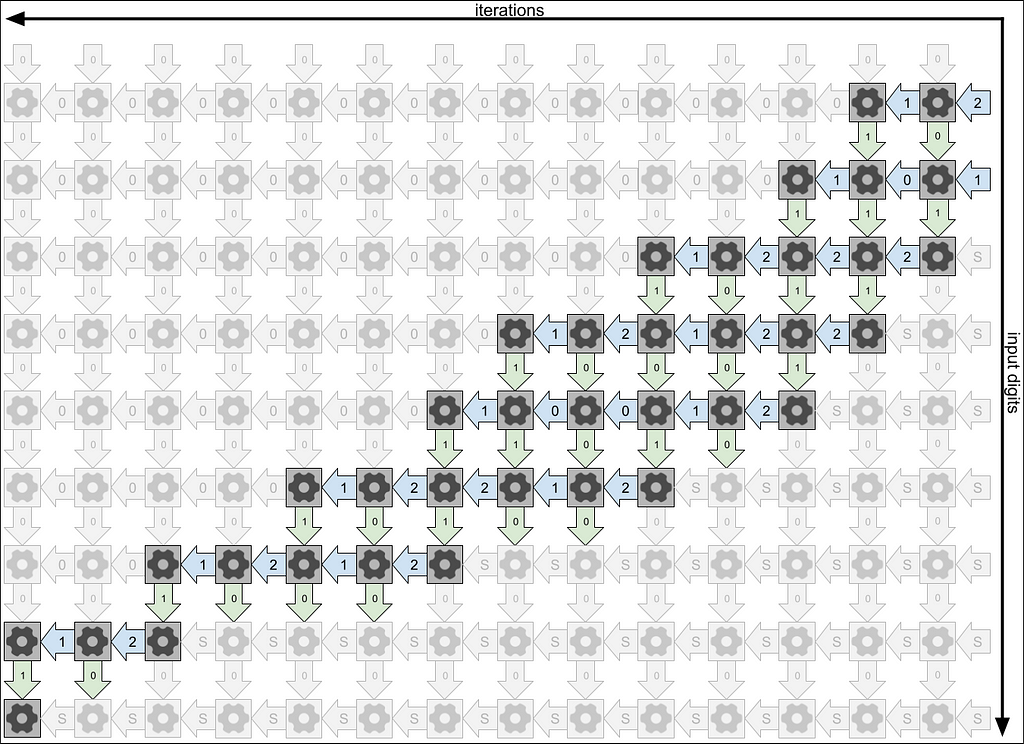

This is starting to get out of hand! So let’s simplify. Three things:

- Because their state transition diagrams are all the same, the only information we need about each machine is what state it’s in.

- The S state never emits any bits, and you can never get back to S once you leave it, so we know that as soon as we see an S, the entire rest of the machines, the whole infinite tail, is still sitting in the S state waiting for bits. We need not worry about these states at all.

- Once we’re done feeding in the non-zero digits of the input number, any machines in state 0 also become uninteresting. The rest of the inputs will all be zero, they will remain in state 0, and they will pass on that 0 bit of input unchanged. Again, we need not worry about these machines.

With that in mind, we can trace what happens when we feed this array of cascading machines the bits of a number. Let’s try 7, since we saw its sequence already earlier on.

The output of each machine feeds into the next machine below it, and I’ve drawn this propagation of outputs to inputs of the next machine using green arrows. We’ll draw the digits of input from right to left, matching the conventional order of writing binary numbers, so in a sense, time flows from right to left here. Each state machine remembers its state as time progresses to the left, and I’ve drawn this memory of previous states using blue arrows. Notice that to play out the full dynamics, we need to feed in quite a few of the leading zeros on the input.

In the rows of green arrows, you can read off the outputs of each state machine in the sequence in binary:

- binary 111 = decimal 7

- binary 1011 = decimal 11

- binary 10001 = decimal 17

- binary 11010 = decimal 26

- binary 10100 = decimal 20

- binary 1000 = decimal 8

- binary 10 = decimal 2

If we were to continue, the next rows would just repeat binary 10 (decimal 2) forever. This makes sense, because the way we defined the compressed Collatz map stabilizes at 2, rather than 1.
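Because Haskell lists are lazy, the infinite cascade can be modeled directly, under the assumption that each machine is a function from an infinite input bit stream to an infinite output bit stream; `iterate` then chains them end to end. In this sketch (names are illustrative), `cascade k n` reads the first k bits of each machine’s output, which is enough as long as every value stays below 2ᵏ.

```haskell
data MachineState = Start | Carry Int

-- One machine as a lazy transformer of infinite bit streams, least significant
-- bit first. Start ignores zeros and, on the first 1, moves to carry 2 without
-- emitting the low 0 bit of 3n + 1.
machine :: [Int] -> [Int]
machine = go Start
  where
    go Start (0 : bs) = go Start bs
    go Start (_ : bs) = go (Carry 2) bs
    go (Carry c) (b : bs) = t `mod` 2 : go (Carry (t `div` 2)) bs
      where
        t = 3 * b + c
    go _ [] = []

toBits :: Integer -> [Int]
toBits 0 = []
toBits n = fromIntegral (n `mod` 2) : toBits (n `div` 2)

fromBits :: [Int] -> Integer
fromBits = foldr (\b acc -> fromIntegral b + 2 * acc) 0

-- The input value followed by the value leaving each successive machine.
cascade :: Int -> Integer -> [Integer]
cascade k n = map (fromBits . take k) (iterate machine (toBits n ++ repeat 0))
```

Here `take 7 (cascade 16 7)` gives 7, 11, 17, 26, 20, 8, 2, matching the rows above, and later entries stay at 2.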

A second thing we can read from this map is the so-called parity sequence of the shortcut Collatz map. This is just the sequence of evens and odds that occur when the map is iterated on the starting number. When a column ends by emitting a 1 bit, bumping a new machine out of the S state, that indicates that the next value of the shortcut Collatz map will be odd. When it ends in a 0 bit, then the next value will be even.

There’s quite a lot of interesting theory about the parity sequences of the shortcut Collatz map! It turns out that every possible parity sequence is generated by a unique 2-adic integer, which I defined in my previous article, so the 2-adic integers are in one-to-one correspondence with parity sequences. We can, in fact, compute the reverse direction of this one-to-one correspondence as well using state arrays like this one. Every eventually repeating parity sequence comes from a rational number, via the canonical embedding of the rationals into the 2-adic numbers. (The converse, though, that acyclic parity sequences only come from irrational 2-adic integers, is conjectured but not known!)
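A finite-precision version of this correspondence is easy to test: the first k parities depend only on n mod 2ᵏ, and different residues give different parity prefixes, so residues mod 2ᵏ and length-k parity prefixes match up one to one. The sketch below (illustrative names) checks this by brute force for small k; it illustrates the claim rather than proving it.

```haskell
import Data.List (nub)

shortcut :: Integer -> Integer
shortcut n
  | even n = n `div` 2
  | otherwise = (3 * n + 1) `div` 2

-- The first k entries of the parity sequence: 1 for odd values, 0 for even.
parityPrefix :: Int -> Integer -> [Integer]
parityPrefix k n = take k (map (`mod` 2) (iterate shortcut n))

-- The 2^k residues have 2^k distinct prefixes, so the map is a bijection
-- onto the 2^k possible parity prefixes of length k.
prefixesDistinct :: Int -> Bool
prefixesDistinct k = length (nub prefixes) == length prefixes
  where
    prefixes = map (parityPrefix k) [0 .. 2 ^ k - 1]

-- The first k parities depend only on n mod 2^k.
dependsOnlyOnResidue :: Int -> Integer -> Bool
dependsOnlyOnResidue k n = parityPrefix k n == parityPrefix k (n `mod` 2 ^ k)
```

For the running example, `parityPrefix 12 7` is `[1, 1, 1, 0, 1, 0, 0, 1, 0, 0, 0, 1]`, and the sequence alternates 1, 0 from then on.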

Because every parity sequence comes from a unique 2-adic integer, if we could show that every positive integer eventually leads to alternating even and odd numbers in its parity sequence, this would prove that the Collatz conjecture is true. Now we have a new way of looking at that question. Among the 2-adic integers, the non-negative integers are exactly those that eventually have an infinite run of leading 0s. So we can ask instead, from any starting state sequence of this array of machines, when feeding zeros into the sequence forever, do we eventually (ignoring machines at the beginning that have reached the 0 state) reach only a single machine alternating through states 1, 2, 1, 2, etc.?

This isn’t an easy question, though. Certainly, feeding zeros into the array on the left will bump the state of the top-most machines down to zero. However, the bits continue to propagate through the machine, possibly pushing new machines out of their starting states and so appending them on the bottom! There is something of a race between these two processes of pruning machines on the top and adding them on the bottom, and we would need to show that the pruning wins that race.

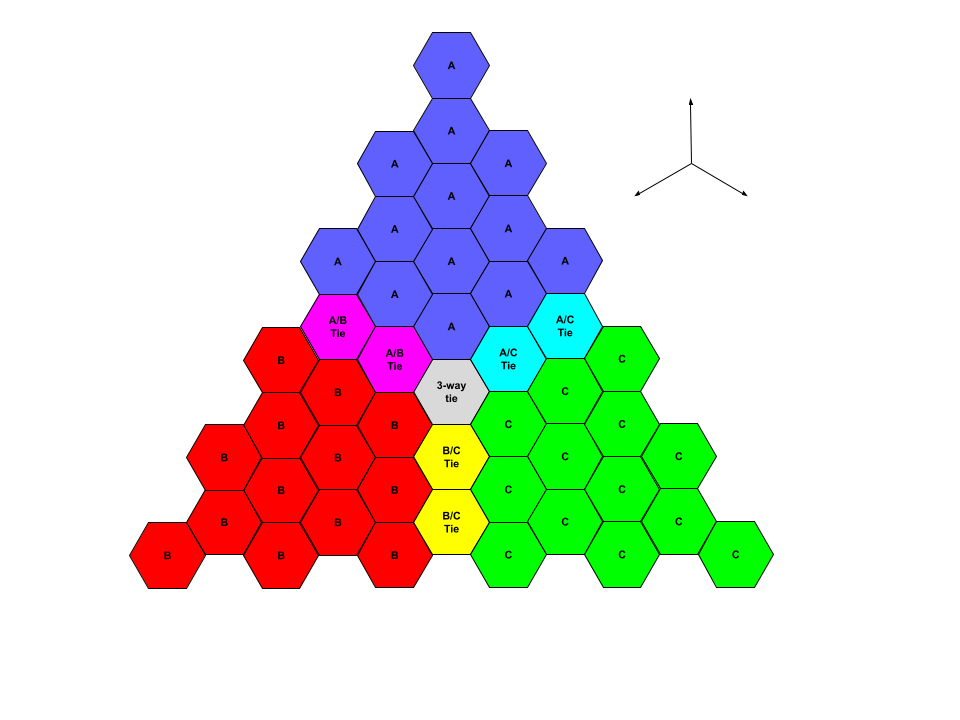

From Base 2 to Base 3

As we investigate this race, we discover something surprising about the behavior of the state sequences when leading zeros are fed into the top machine. If you read the machine states in the blue arrows, starting at the third column from the right after all the non-zero bits of input have been fed in, we can interpret those state sequences as a ternary (base 3) number! And we get quite a familiar progression:

- ternary 222 = decimal 26

- ternary 111 = decimal 13

- ternary 202 = decimal 20

- ternary 101 = decimal 10

- ternary 12 = decimal 5

- ternary 22 = decimal 8

- ternary 11 = decimal 4

- ternary 2 = decimal 2

- ternary 1 = decimal 1

That’s the shortcut Collatz sequence again! Rather than starting at 7, we jumped three numbers ahead because it took those three steps to feed in the non-zero bits of 7, so we missed 7, 11 and 17 and went straight to 26. Then we continue according to the same dynamics.
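We can check this by simulating the array. In this sketch (names are illustrative), only the machines that have left the Start state are stored, top machine first, as their carries 0, 1, or 2. Feeding a bit pushes it through each machine in turn, and a 1 that reaches the first Start machine wakes it up in carry 2. Reading the carries top to bottom as ternary digits, most significant first, gives the values listed above.

```haskell
-- Carries of the machines that have left Start, top machine first.
type Cascade = [Int]

-- Feed one input bit into the top machine and propagate the outputs downward.
feed :: Int -> Cascade -> Cascade
feed 0 [] = [] -- the first Start machine ignores a 0
feed _ [] = [2] -- a 1 wakes it up in carry 2, emitting nothing
feed b (c : cs) = t `div` 2 : feed (t `mod` 2) cs
  where
    t = 3 * b + c

-- Read the carries as a ternary number, top machine most significant.
ternaryValue :: Cascade -> Integer
ternaryValue = foldl (\acc d -> 3 * acc + fromIntegral d) 0

toBits :: Integer -> [Int]
toBits 0 = []
toBits n = fromIntegral (n `mod` 2) : toBits (n `div` 2)

-- Ternary readings once all bits of n have been fed, then after each of j more zeros.
readings :: Integer -> Int -> [Integer]
readings n j = map ternaryValue (drop (length bits) (scanl (flip feed) [] inputs))
  where
    bits = toBits n
    inputs = bits ++ replicate j 0
```

Here `readings 7 8` gives 26, 13, 20, 10, 5, 8, 4, 2, 1. The same agreement with the shortcut map holds for every starting value we tried.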

This coincidence where state sequences can be interpreted as ternary numbers is surprising, but is it useful? It can be a revealing way to think about Collatz sequences. Here’s an example.

Numbers of the form 2ⁿ-1 have a binary representation consisting of n consecutive 1s. If we trace what happens to the state sequence, we find that each 1 we feed to this state sequence propagates all the way to the end to append another 2 to the sequence, leaving us with a state sequence consisting of n consecutive 2s. As a ternary number, that is 3ⁿ-1. If the above is correct, then, we can conclude that iterating the shortcut Collatz map n times starting with 2ⁿ-1 should yield 3ⁿ-1 as a result.

In fact, this isn’t hard to prove. We can prove the more general statement that for all i ≤ n, iterating the shortcut Collatz map i times on 2ⁿ-1 gives a result of 3ⁱ2ⁿ⁻ⁱ-1. A simple induction suffices. If i = 0, then the result is immediate. Assuming it’s true for i, and that i + 1 ≤ n, we know that 3ⁱ2ⁿ⁻ⁱ-1 is odd (since n - i ≥ 1, the product 3ⁱ2ⁿ⁻ⁱ is even), so applying the shortcut Collatz map one more time yields (3(3ⁱ2ⁿ⁻ⁱ-1)+1)/2 = (3ⁱ⁺¹2ⁿ⁻ⁱ-2)/2 = 3ⁱ⁺¹2ⁿ⁻ⁱ⁻¹-1, which establishes the property for i + 1 as well, completing the induction. Now let i = n to recover the original statement.
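The closed form is also easy to spot-check numerically. This sketch (with an illustrative `shortcut` definition) confirms that applying the shortcut map i times to 2ⁿ-1 gives 3ⁱ2ⁿ⁻ⁱ-1 for every i ≤ n.

```haskell
shortcut :: Integer -> Integer
shortcut n
  | even n = n `div` 2
  | otherwise = (3 * n + 1) `div` 2

-- Does iterating the shortcut map on 2^n - 1 match 3^i * 2^(n-i) - 1 for every i <= n?
closedFormHolds :: Int -> Bool
closedFormHolds n =
  and
    [ iterate shortcut (2 ^ n - 1) !! i == 3 ^ i * 2 ^ (n - i) - 1
    | i <- [0 .. n]
    ]
```

For example, `all closedFormHolds [0 .. 40]` evaluates to `True`, and ten steps from 1023 land on 59048 = 3¹⁰-1.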

The proof was simple, but the idea came from observing the behavior of this state machine. And it’s an interesting observation: 3ⁿ-1 grows asymptotically faster than 2ⁿ-1, so there is no bound on the factor by which a number might grow in the Collatz sequence. For every n, the starting value 2ⁿ-1 grows to 3ⁿ-1, which is roughly 1.5ⁿ times its original value.

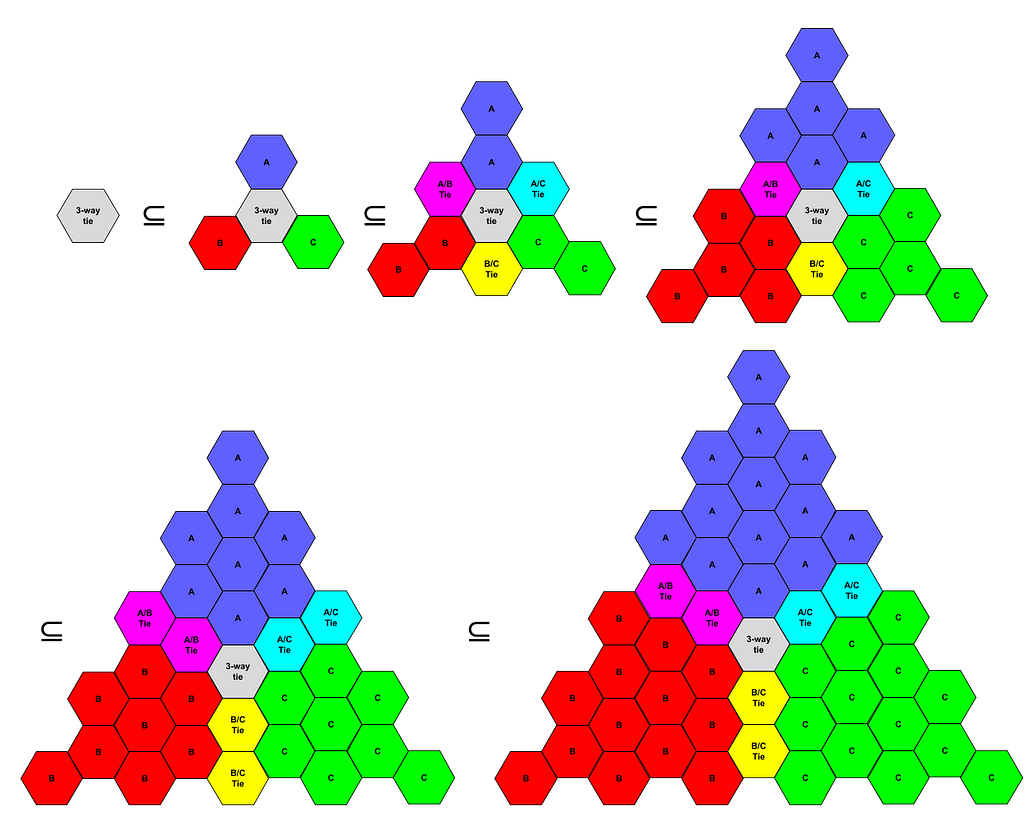

From Base 3 to Base 2

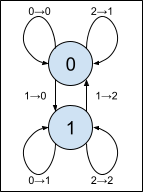

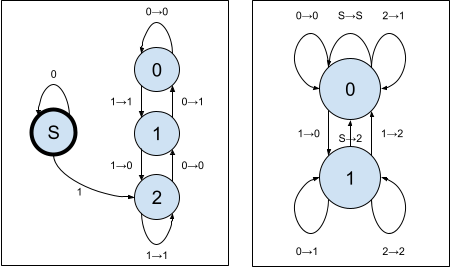

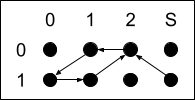

Recall that in base 3, computing 3n+1 is easy, but it’s dividing by two that becomes a non-trivial computation. Back in elementary school again, we learned about long division, an algorithm for dividing numbers digit by digit, this time left to right. To do this, we divide each digit in turn, but keep track of a remainder that propagates from digit to digit. We can also draw this as a state machine.

To extend this to the shortcut Collatz map, we need to look for a remainder when the division completes. This means that we’ll need to feed our state machine not just the ternary digits 0, 1, and 2, but an extra “Stop” signal (S for short) indicating the number is complete. Since the result may be longer than the input, it will be convenient to send an infinite sequence of these S signals, giving the machine time to output all of the digits of the result before it begins to produce S signals as well. Upon receiving this S signal, if there is a remainder, then the input was odd, so our state machine needs to add another 2 onto the end of the sequence of ternary digits to complete the computation of (3n+1)/2 before emitting its own S signals. (If n = 2q + 1, then (3n+1)/2 = 3q + 2, which in base 3 is the digits of q followed by a 2.)
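Here is a minimal sketch of the combined machine (names are illustrative). A digit d arriving with remainder r produces quotient digit (3r + d) div 2 and new remainder (3r + d) mod 2. For brevity, the stream of Stop signals is modeled simply as the end of a finite digit list, which is an assumption of this sketch.

```haskell
-- Shortcut Collatz map on base-3 digits, most significant first.
-- The state is the remainder of long division by 2; end of input acts as Stop.
shortcutTernary :: [Int] -> [Int]
shortcutTernary = go 0
  where
    go 0 [] = [] -- even: the quotient is complete
    go _ [] = [2] -- odd: n = 2q + 1, so (3n + 1) / 2 = 3q + 2, i.e. append a 2
    go r (d : ds) = v `div` 2 : go (v `mod` 2) ds
      where
        v = 3 * r + d

toTernary :: Integer -> [Int]
toTernary = reverse . go
  where
    go 0 = []
    go k = fromIntegral (k `mod` 3) : go (k `div` 3)

fromTernary :: [Int] -> Integer
fromTernary = foldl (\acc d -> 3 * acc + fromIntegral d) 0
```

For 7 (ternary 21), `shortcutTernary [2, 1]` gives `[1, 0, 2]`, which is ternary 102 = 11. The leading 0 digits that division can produce are harmless.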

Just as before, we’re interested not in a single application of this state machine, but the behavior of numbers under many different iterations, so we will again chain together an infinite number of these machines, feeding the ternary digits (or S signals) that leave each one into the next one as inputs. This time I’ll draw the ternary digits flowing from right to left.

Let’s try to simplify this picture.

- Again, all the state machines share the same transition diagram, so we need only note which state each machine is in.

- Once a machine (or the input) starts emitting S signals, it will never stop, so we need not concern ourselves with these machines.

- Because the machines start in state 0, and a machine in state 0 always passes on a smaller ternary digit than it receives (2 becomes 1, and 1 becomes 0), no single digit can change more than two of these machines into non-zero states before it becomes zero itself. So we’ll always encounter an infinite tail of state-0 machines to the left, which are similarly uninteresting.

With those simplifications in mind, we can work through the behavior of these machines starting with the input 7, which is 21 in base 3. This time, input digits (time, essentially) flow from top to bottom, while the iterations of the state machine are oriented from right to left. The green arrows represent the memory of state over time, and the blue arrows represent input and output digits.

Following the flow from right to left and reading down the blue arrows representing ternary digits, we can see the ternary values from the shortcut Collatz map computed by the state machines, read from top to bottom. We might ask a question similar to the earlier one: can we show that, starting from any state and throwing S signals at these state machines from the right, it somehow simplifies to the sequence 10 (a 0, followed by a 1 to its left), which indicates we’ve reached the cyclic orbit at 1, 2 in the shortcut Collatz sequence?

In looking at this, as you likely guessed, we find that the state sequences when read from left to right from green arrows (starting from the second row down, after all the input digits have been fed in) give the binary form of the compressed Collatz map. That’s the one that even further shortens the shortcut Collatz map by folding all the divisions by two so they happen implicitly before each odd value is processed. Starting with base 3, then, we end up back in base 2!
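This, too, can be checked by simulation. In the sketch below (names are illustrative), each machine is either waiting with remainder 0 or 1, or stopped. The list holds the machines from right to left, and the implicit infinite tail of machines in state 0 is only materialized when a nonzero digit reaches it. The remainders of the non-stopped machines are read as binary, rightmost machine as the least significant bit.

```haskell
data Signal = Digit Int | Stop

data Machine = Rem Int | Stopped

-- One machine: its new state and the signal it passes on to the left.
stepM :: Machine -> Signal -> (Machine, Signal)
stepM Stopped _ = (Stopped, Stop)
stepM (Rem 0) Stop = (Stopped, Stop)
stepM (Rem _) Stop = (Stopped, Digit 2) -- odd: append the digit 2
stepM (Rem r) (Digit d) = (Rem (v `mod` 2), Digit (v `div` 2))
  where
    v = 3 * r + d

-- Feed one signal into the rightmost machine and propagate it leftward.
feed :: Signal -> [Machine] -> [Machine]
feed (Digit 0) [] = [] -- the tail of state-0 machines passes 0s through unchanged
feed (Digit d) [] = feed (Digit d) [Rem 0] -- a nonzero digit reaches the tail
feed Stop [] = []
feed s (m : ms) = m' : feed s' ms
  where
    (m', s') = stepM m s

-- Remainders of the non-stopped machines as binary, rightmost machine lowest.
binaryValue :: [Machine] -> Integer
binaryValue = foldr (\m acc -> bitOf m + 2 * acc) 0 . dropWhile isStopped
  where
    isStopped Stopped = True
    isStopped _ = False
    bitOf (Rem r) = fromIntegral r
    bitOf Stopped = 0

toTernary :: Integer -> [Int]
toTernary = reverse . go
  where
    go 0 = []
    go k = fromIntegral (k `mod` 3) : go (k `div` 3)

-- Binary readings once all ternary digits of n are fed, then after each of j Stops.
readings :: Integer -> Int -> [Integer]
readings n j = map binaryValue (drop (length digits) (scanl (flip feed) [] inputs))
  where
    digits = toTernary n
    inputs = map Digit digits ++ replicate j Stop
```

Here `readings 7 6` gives 7, 11, 17, 26, 20, 8, 2: the starting value once its digits are in, then successive values of the compressed map.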

What’s going on? It’s easy to see that the diagram above is the same as the one from binary earlier, except for the addition of two rows at the top where we’re still feeding in the ternary digits, and some uninteresting state propagation from machines that are already emitting S signals, and swapping the interpretation of the axes. But why?

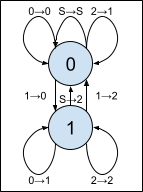

Let’s compare the state machines:

They look quite different… but this is an illusion created by a biased presentation. These diagrams emphasize the state structure, but relegate the input structure to text labels on the arrows. We can instead draw both diagrams at once in this way:

In the base 2 case, we can interpret the rows as representing bits of input, and the columns as states: three carries, and the Start state S. In the base 3 case, we can interpret the columns as inputs: three ternary digits, and the Stop signal S, and the rows as states. With either interpretation, though, the rule is the same: we are exchanging a presentation of a number from 0 through 5 as 3b + t for a presentation as 2t + b, where t takes values 0, 1, or 2, while b takes values 0 or 1, and with the same rules for the special S token on the side of the least significant digits.
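The shared rule can be written down directly. The sketch below (illustrative names) transcribes each transition function from its own machine’s description, multiplication by 3 for base 2 and long division by 2 for base 3. It then checks that both rewrite 3b + t as 2t′ + b′ in all six numeric cases; the S token is left out.

```haskell
-- Base 2 (multiply by 3): state is the carry t in {0,1,2}, input is the bit b.
-- Returns (output bit b', new carry t').
base2Step :: Int -> Int -> (Int, Int)
base2Step t b = (total `mod` 2, total `div` 2)
  where
    total = 3 * b + t

-- Base 3 (divide by 2): state is the remainder b in {0,1}, input is the digit t.
-- Returns (new remainder b', output digit t').
base3Step :: Int -> Int -> (Int, Int)
base3Step b t = (v `mod` 2, v `div` 2)
  where
    v = 3 * b + t

-- Both machines exchange 3b + t for 2t' + b' in every case.
sameRule :: Bool
sameRule =
  and
    [ base2Step t b == base3Step b t && 3 * b + t == 2 * t' + b'
    | b <- [0, 1],
      t <- [0, 1, 2],
      let (b', t') = base2Step t b
    ]
```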

So in some deep sense, computing the Collatz trajectory in base 2 or base 3 is performing the same computation. This is true even though in base 2, we’re computing the compressed Collatz map, which has fewer iterations (but more digits to compute with), while in base 3, we’re computing the shortcut Collatz map, which has more iterations (but fewer digits to compute with). Somehow these differences are all dual to each other so the same thing happens in each.

Frankly, I find that very pretty.

Brief introduction to 2-adic integers

A standard binary integer is a finite sequence of bits, either 0 or 1, with each bit having a value equal to some power of two. Because any non-negative integer can be written as a sum of powers of two, it can be written in this way.

But a finite sequence isn’t exactly right. We can always make that sequence longer, incorporating greater powers of two, by adding zeros on the left side. For this reason, if we think of a binary number as a finite sequence, we get non-unique representations: one with a 1 as the largest digit, but others that add leading zeros on the left. This is messy, so in general we tend to think of a binary integer as having infinitely many bits, but with the constraint that only finitely many of them can be non-zero. We don’t usually write the leading zeros, but that’s just a matter of notation. They are still there.

This leads to the obvious question: what happens if you remove the restriction that all but finitely many digits must be zero? The answer is the 2-adic integers. It turns out that we can write a lot of rational numbers as 2-adic integers. For example:

- Even without a negative sign, we can write -1 as …111, the 2-adic integer all of whose bits are 1. Why? Try adding 1: the carry propagates forever, leaving every bit of the result 0, so this is the additive inverse of 1.

- What happens when you multiply 3 (binary 11) by the 2-adic integer …01010101011? If you work it out, you’ll get 1. So that 2-adic integer is the multiplicative inverse of 3, making it effectively 1/3.

In fact, it turns out that the 2-adic integers include all rational numbers with odd denominators! Not only that, but all of them ultimately end up with digits in a repeating pattern to the left, similar to how rational numbers in traditional decimal representations end up with digits in a repeating pattern to the right. (There are even irrational 2-adic integers that don’t repeat their digits; they don’t correspond to the traditional irrational numbers, but rather to some completely new concept that doesn’t happen in traditional numbers!)
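These expansions can be computed to any finite precision. The lowest k bits of a 2-adic integer are its residue mod 2ᵏ, and for a rational a/b with b odd, that residue is a times the inverse of b mod 2ᵏ. The sketch below uses illustrative names and finds the inverse by brute-force search, which is only sensible for small k; it lists bits least significant first.

```haskell
-- Inverse of an odd number b modulo 2^k, by brute-force search (small k only).
inverseMod2k :: Int -> Integer -> Integer
inverseMod2k k b = head [x | x <- [1, 3 .. m - 1], (b * x) `mod` m == 1]
  where
    m = 2 ^ k

-- Lowest k bits of the 2-adic expansion of a / b (b odd), least significant first.
twoAdicBits :: Int -> Integer -> Integer -> [Integer]
twoAdicBits k a b = take k (bits ((a * inverseMod2k k b) `mod` 2 ^ k))
  where
    bits n = n `mod` 2 : bits (n `div` 2)
```

Here `twoAdicBits 8 (-1) 1` is all 1s, `twoAdicBits 8 1 3` is `[1, 1, 0, 1, 0, 1, 0, 1]` (…01010101011 read from the right), and `twoAdicBits 13 1 5` shows 1/5 repeating the block 0110 above its lowest 1.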

The Collatz map on 2-adics

The Collatz map on 2-adic integers can be defined in precisely the same way as it is on integers: even numbers are halved, while odd numbers are mapped to 3n+1. But hold on… if we can represent numbers like 1/3, what does it mean to be even or odd?

For arbitrary rationals, this would be a tricky question to answer, but in the 2-adic integers, there’s an easy answer: just look at the 1s place. If it’s 0, the number is even; if it’s 1, the number is odd. This is equivalent to saying that a rational number is even iff its numerator is even. And this notion is well-defined because we’ve already constrained the denominator to be odd.
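Since parity depends only on the numerator, the Collatz map extends to rationals with odd denominators with no other changes. A minimal sketch using `Data.Ratio` (the name `collatzQ` is illustrative, and it assumes the input’s denominator is odd):

```haskell
import Data.Ratio (numerator, (%))

-- Collatz map on rationals with odd denominators.
-- Parity is read from the numerator, as described above.
collatzQ :: Rational -> Rational
collatzQ x
  | even (numerator x) = x / 2
  | otherwise = 3 * x + 1
```

On integers it agrees with the usual map, and starting from 1/5 it gives 1/5, 8/5, 4/5, 2/5, 1/5, so 1/5 lies on a cycle.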

I’m now going to redefine the Collatz step function on binary numbers from my previous post, but with one difference: I’ll assume that the numbers are odd. Because every number therefore ends with a 1, we won’t represent the 1 explicitly, but rather let it be implied. This implied 1 is expressed by the OddBits newtype.

```haskell
data Bit = B0 | B1

-- An odd number: the bits above its implied trailing 1, least significant first.
newtype OddBits = XXX1 [Bit]

-- Carry states for multiplying by 3. The _Trim states are still discarding
-- the low-order bits of 3n+1, up to and including the lowest 1 bit, which
-- becomes the implied 1 of the result.
data State = C0 | C1 | C1_Trim | C2 | C2_Trim

threeNPlusOneDiv2s :: OddBits -> OddBits
threeNPlusOneDiv2s (XXX1 bits) = XXX1 (go C2_Trim bits)
  where
    go C1_Trim [] = []
    go C1_Trim (B0 : bs) = go C0 bs
    go C1_Trim (B1 : bs) = go C2_Trim bs
    go C2_Trim [] = []
    go C2_Trim (B0 : bs) = go C1_Trim bs
    go C2_Trim (B1 : bs) = go C2 bs
    go C0 [] = []
    go C0 (B0 : bs) = B0 : go C0 bs
    go C0 (B1 : bs) = B1 : go C1 bs
    go C1 [] = [B1]
    go C1 (B0 : bs) = B1 : go C0 bs
    go C1 (B1 : bs) = B0 : go C2 bs
    go C2 [] = [B0, B1]
    go C2 (B0 : bs) = B0 : go C1 bs
    go C2 (B1 : bs) = B1 : go C2 bs
```

This function, in a single pass, multiplies an odd number by 3, adds 1, then divides by 2 as many times as needed to make the result odd. Therefore, this is a map from the odd numbers to other odd numbers. The states represent:
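For checking the bit-level function, a plain arithmetic version of the same map is handy. This sketch (`oddStep` is an illustrative name) works on ordinary integers and, through `Rational`, on rationals with odd denominators. It assumes the input is odd and that 3n + 1 ≠ 0, since otherwise the halving loop never ends.

```haskell
import Data.Ratio (numerator, (%))

-- 3n + 1, then halve until the numerator is odd.
-- Assumes n is odd (odd numerator, odd denominator) and 3n + 1 /= 0.
oddStep :: Rational -> Rational
oddStep n = until (odd . numerator) (/ 2) (3 * n + 1)
```

For example, `map oddStep [1, 3, 5, 7]` gives `[1, 5, 1, 11]`, and -5 and -7 map to each other.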

- The amount carried from the previous bit when multiplying by 3.

- Whether the lower-order bits are all zeros, in which case we should continue to trim zeros instead of emit them.

This function still handles finite lists, but you can generally ignore those equations, since they are equivalent to extending with 0 bits to the left. And as Olaf suggests, the function extends to the 2-adic numbers by operating on infinite lists. (That is, except for one specific input: …010101, on which the function hangs non-productively. That’s because this 2-adic integer corresponds to the rational -1/3, and 3(-1/3) + 1 = 0, which can never be halved long enough to yield another odd number!)

Fixed points

The Collatz conjecture amounts to finding the orbits of the Collatz map, which fall into two categories: periodic orbits, which repeat infinitely, and divergent orbits, which grow larger indefinitely without repeating. Among positive integers, the conjecture is that the only orbit is the periodic one that ends in 4, 2, 1, 4, 2, 1, 4, 2, 1…

Since we’re skipping the even numbers, our step function has the property that f(1) = 1, making 1 a fixed point. Not all periodic orbits are fixed points, but it’s natural to ask whether there are any other fixed points of this map. Let’s explore this question!

We start by looking only at the non-terminating equations for the recursive definition. (Recall that the terminating equations are really just duplicates of these, since leading zeros are equivalent to termination.)

```haskell
go C1_Trim (B0 : bs) = go C0 bs
go C1_Trim (B1 : bs) = go C2_Trim bs
go C2_Trim (B0 : bs) = go C1_Trim bs
go C2_Trim (B1 : bs) = go C2 bs
go C0 (B0 : bs) = B0 : go C0 bs
go C0 (B1 : bs) = B1 : go C1 bs
go C1 (B0 : bs) = B1 : go C0 bs
go C1 (B1 : bs) = B0 : go C2 bs
go C2 (B0 : bs) = B0 : go C1 bs
go C2 (B1 : bs) = B1 : go C2 bs
```

These observations will be relevant:

- We start in the state C2_Trim

- The Trim states do not emit bits to the result, only consuming them. Therefore, the output will lag behind the input by some number of bits depending on how long evaluation lingers in these Trim states.

- Once we leave the Trim states, we can never re-enter them. Inputs and outputs will then match exactly, so the lag stays the same forever.

- If we’re searching for inputs that evaluate in a certain way, the bits of the input are completely determined by whether we want to stay in Trim states or leave them, and then whether we want the next output to be a 0 or 1.

Because of this, when searching for a fixed point of this function, the input value is entirely determined by one choice: for how many input bits do we choose to remain in the Trim states. Once that single choice is made, the rest of the input is entirely determined by that plus the desire for the input to be a fixed point.

Let’s work some out.

Lag = 1. Here, we want to stay in the Trim states for only one bit of input. Then that bit must be a 1, since that’s what gets us out of the Trim state. From that point, we will stay in state C2, and in order to produce the 1 output bits to match the inputs, we’ll need to keep seeing 1s in the input! Then the fixed point here is XXX1 (repeat B1), which corresponds to the 2-adic integer …111.

We noted earlier that this 2-adic integer corresponds to -1. We can double-check that -1 is indeed a fixed point of the function that computes 3n+1 and then divides by 2 until the result is odd. To compute f(-1), we first compute 3(-1)+1 = -2, then divide by 2 to get -1, which is odd. So it is indeed a fixed point.

Lag = 2. Here, we want to stay in the Trim states for two bits of input. That means we expect the first bit to be 0 so that we’ll switch to state C1_Trim, and then the second bit to be 0 again to transition us to the C0 state. At this point, we’re producing output, which must match the input bits already chosen, and the input bit we need will always be a 0 so as to produce the 0 that matches the input. Then the fixed point is XXX1 (repeat B0), and keeping in mind that there’s an implied 1 on the end, this corresponds to the 2-adic integer …0001, which is just 1.

This is the standard periodic orbit mentioned up above: 4, 2, 1, 4, 2, 1, which is just 1s when we skip the even numbers.

Now things start to get interesting:

Lag = 3. To stay in the Trim states for exactly three bits of input, we need those bits to be 0, 1, and 1. This ends up in state C2, with the input sequence 011 left to match. The next input must therefore be 0, yielding a 0 as output, and leaving us in state C1 with 110 left to match. The next input must be 0 again, leaving us in C0 with 100 left to match. We need a 1 next, leaving us in C1 with 001 left to match. Then we need to see a 1 again to leave us in C2 with 011 left to match. That’s the same state and pending bits as we were in when we left the Trim states, so we’ve finally found a loop.

The fixed point that produces this behavior is XXX1 (cycle [B0, B1, B1, B0]), and including the implied 1 on the end, this corresponds to the 2-adic integer …(0110)(0110)(0110)1. This turns out to correspond to the rational number 1/5. We can check that 3(1/5) + 1 = 8/5, and halving that three times yields 1/5 again, so this is indeed a fixed point of the map, even though it’s not an integer.

Observations about fixed points

A few observations can be made about the fixed points of this map:

- There are an infinite number of them. Every possible choice of lag, starting with one but increasing without bound, yields a fixed point, and they all must be different since they produce different behaviors.

- There are only a countably infinite number of them. This is the only way to produce a fixed point, so the list of fixed points we compute in this way is complete. There are no others.

- The 2-adic fixed points are all rational. Once we leave the Trim states, there’s only a finite amount of state involved in determining what happens from there: the state of the function implementation, together with the pending input bits remaining to match, which keep the same length. We progress through this finite number of states indefinitely, so we must eventually reach the same state twice, and from that point, the bits will follow a repeating pattern. Therefore, interpreted as a 2-adic number, they will correspond to a rational value.

- The only integer fixed points are 1 and -1. You can see this for non-negative integers by looking at the terminating equations in the original code: the longest terminating case produces two bits at the end before ending in trailing zeros, so the lag can be no greater than 2. Similar logic applies to negative integers, which have 1s extending infinitely to the left.

In fact, if we work out what's going on here, we find that the fixed points of this function are precisely 1 / (2ⁿ - 3) for n > 0. (Incidentally, n = 0 yields -1/2, which is also a fixed point as a rational, but is not a 2-adic integer, so it didn't occur in our list.)
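The closed form comes from one line of algebra. This assumes, as above, that lag n means the step divides 3x + 1 by exactly 2ⁿ:

```latex
\frac{3x + 1}{2^n} = x
\quad\Longrightarrow\quad 3x + 1 = 2^n x
\quad\Longrightarrow\quad x = \frac{1}{2^n - 3}
```

Plugging in n = 1, 2, and 3 gives -1, 1, and 1/5. Plugging in n = 0 gives -1/2.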

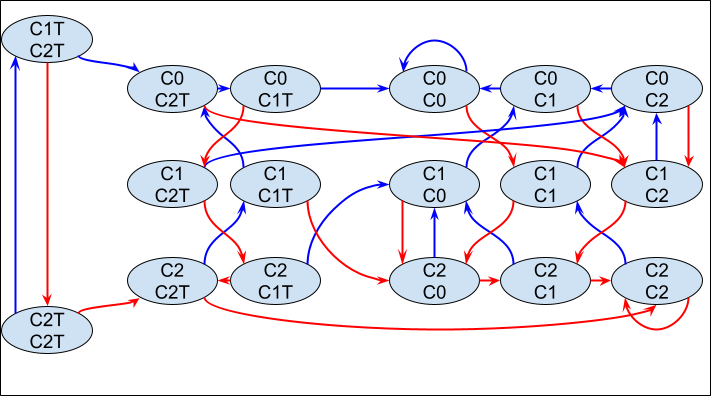

Periodic points

We can press further on this, and consider periodic points with period greater than one, by composing the function with itself and writing down the state machine that results. This grows more complex, as every additional iteration of the function adds a new choice about lag, yielding a larger-dimensional array of periodic points. The general form of the computation seems to remain the same, but the state diagrams grow increasingly daunting.

The diagram above gives the state transitions for the composition of two Collatz steps. The left two states are those where the first of the two steps has yet to produce a bit. The next six are states where the first is producing bits but the second is not. The final nine, then, represent the situation where both of the two composed steps are productive.

I have not labeled the outputs, but the general rule is that the Trim states have no output, the non-Trim states with an even number of occurrences of C1 will produce outputs that are the same as their inputs, and the states with an odd number of occurrences of C1 produce outputs the opposite of their inputs.

Because there are now two kinds of trimming that happen, we can choose any combination of the two lags, giving a 2D array of points that repeat with period 2. The computations are similar to the above, so I’ll just give the results for lag 1, 2, and 3 in each dimension.

The diagonal elements are not new. This is expected, though, because a periodic point of period 1 is also periodic with period 2. The off-diagonal elements yield three new period-2 orbits:

- -5, -7, -5, …

- 5/7, 11/7, 5/7, …

- 7/23, 11/23, 7/23, …
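The orbits above can be checked mechanically. This Haskell sketch assumes the map sends x to (3x + 1)/2^v, where v is the number of factors of 2 in 3x + 1. For a rational with an odd denominator, v is the number of factors of 2 in its numerator. The names `oddStep`, `twoAdicValuation`, and `hasPeriod` are hypothetical.

```haskell
import Data.Ratio (numerator)

-- | Number of times 2 divides a nonzero integer.
twoAdicValuation :: Integer -> Int
twoAdicValuation 0 = error "twoAdicValuation: zero"
twoAdicValuation n
  | odd n = 0
  | otherwise = 1 + twoAdicValuation (n `div` 2)

-- | Apply 3x + 1, then divide out every factor of 2 (hypothetical name).
-- Only meaningful for rationals with odd denominators; errors at x = -1/3.
oddStep :: Rational -> Rational
oddStep x = y / 2 ^ twoAdicValuation (numerator y)
  where
    y = 3 * x + 1

-- | True if x returns to itself after k steps (fixed points count too).
hasPeriod :: Int -> Rational -> Bool
hasPeriod k x = iterate oddStep x !! k == x
```

`hasPeriod 2` also holds for the fixed points 1, -1, and 1/5, matching the remark that the diagonal elements are not new.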

Just as with fixed points, we can work out a closed form for the period-2 points. This time, we get (2^m + 3) / (2^(m+n) - 9). As we noticed above, this simplifies to the earlier formula for fixed points when m = n. (Hint: the denominator factors as a difference of squares.)
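The closed form follows by writing the two steps out. Here x is the point before the step with lag m, and y is the point after it:

```latex
y = \frac{3x + 1}{2^m}, \qquad x = \frac{3y + 1}{2^n}
\quad\Longrightarrow\quad 2^{m+n} x = 9x + 3 + 2^m
\quad\Longrightarrow\quad x = \frac{2^m + 3}{2^{m+n} - 9}

m = n:\quad x = \frac{2^m + 3}{(2^m - 3)(2^m + 3)} = \frac{1}{2^m - 3}
```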

You might wonder if there’s a pattern here that continues to all periodic points, and indeed there is! On the Wikipedia page about the Collatz Conjecture, a formula is given for the unique rational number that generates any periodic sequence of even and odd numbers from the “shortcut” Collatz map. (The shortcut map is defined there as the map that divides by 2 once after each 3n+1 step.)

To translate this into our terms:

- m is the period, which is also the number of lag values.

- k₀ = 0, because the function defined here can only be evaluated on odd numbers.

- Each additional kᵢ is the sum of the first i lag values.

- n is the sum of all the lag values.
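Here is that recipe as a small Haskell sketch. It assumes the Wikipedia formula has the shape (Σ 3^(m-1-i) · 2^(kᵢ)) / (2ⁿ - 3^m), summed over i = 0 … m-1, which reproduces both closed forms above. The names `periodicPoint` and `applyLags` are hypothetical.

```haskell
-- | The periodic point determined by a non-empty list of lag values.
-- m = number of lags, n = sum of lags, k_0 = 0, k_i = sum of first i lags.
periodicPoint :: [Int] -> Rational
periodicPoint [] = error "periodicPoint: need at least one lag"
periodicPoint lags = fromInteger num / fromInteger den
  where
    m = length lags
    n = sum lags
    ks = init (scanl (+) 0 lags) -- k_0 .. k_(m-1)
    num = sum [3 ^ (m - 1 - i) * 2 ^ k | (i, k) <- zip [0 ..] ks] :: Integer
    den = 2 ^ n - 3 ^ m :: Integer

-- | Apply 3x + 1 followed by division by 2^lag, once per lag, in order.
applyLags :: [Int] -> Rational -> Rational
applyLags lags x0 = foldl (\x lag -> (3 * x + 1) / 2 ^ lag) x0 lags
```

For example, `periodicPoint [1, 2]` is -5 and `periodicPoint [3, 2]` is 11/23. For any non-empty list, `applyLags lags (periodicPoint lags) == periodicPoint lags` holds, because the formula is the unique fixed point of the composed affine map.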

We can make the interesting observation that the sign of the number is determined by the denominator, since the numerator is a sum of positive terms: if n > m log₂(3), or about 1.585 m, then the result will be positive. But n/m is also the average of the lag values. So looking for positive periodic points amounts to choosing lag values with an average greater than about 1.585. But perhaps not too much greater, if we want them to be integers, because a positive integer result requires the denominator to be no larger than the numerator. (Indeed, we saw in the fixed point case above that there was a bound on how large the lag could grow because the output needs to catch up!) Working out more precise upper bounds on lag would be an interesting step toward a search for periodic points.
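To make the sign argument concrete, the sketch below restates the formula as a hypothetical `periodicPoint` helper. It checks that positivity matches 2ⁿ > 3^m. It also runs a brute-force search for positive integer periodic points, with every lag capped at an arbitrary `maxLag`. The helper names and the cap are assumptions for illustration. A finite search like this says nothing about lags beyond the cap.

```haskell
import Control.Monad (replicateM)
import Data.Ratio (denominator)

-- | Periodic point for a non-empty list of lags (hypothetical helper).
periodicPoint :: [Int] -> Rational
periodicPoint [] = error "periodicPoint: need at least one lag"
periodicPoint lags = fromInteger num / fromInteger den
  where
    m = length lags
    ks = init (scanl (+) 0 lags)
    num = sum [3 ^ (m - 1 - i) * 2 ^ k | (i, k) <- zip [0 ..] ks] :: Integer
    den = 2 ^ sum lags - 3 ^ m :: Integer

-- | Sign rule: the numerator is always positive, so the point is positive
-- exactly when 2^n > 3^m, i.e. when the average lag exceeds log2 3.
positiveByLagAverage :: [Int] -> Bool
positiveByLagAverage lags = (2 :: Integer) ^ sum lags > 3 ^ length lags

-- | Lag lists of length 1..maxPeriod, each lag in [1 .. maxLag],
-- whose periodic point is a positive integer.
positiveIntegerCycles :: Int -> Int -> [[Int]]
positiveIntegerCycles maxPeriod maxLag =
  [ lags
  | m <- [1 .. maxPeriod]
  , lags <- replicateM m [1 .. maxLag]
  , let x = periodicPoint lags
  , x > 0
  , denominator x == 1
  ]
```

`positiveIntegerCycles 2 4` finds only `[[2],[2,2]]`. Both of those are the familiar fixed point 1.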

The fact that this rational number is unique, left somewhat mysterious in the Wikipedia statement, comes down to the way that fixed points of these composed state machines are always determined by how long we linger in each of the trim states. The result on Wikipedia already implies that these are the only periodic points among the rationals with odd denominators. The analysis here also makes it clear that these are the only periodic points in the 2-adic integers, as well, so there are no irrational 2-adic periodic points of the Collatz map.

Of course, the trick would be to show that none of these rational values except for 1 are positive integers, and then that there are also no orbits that increase aperiodically. Actually solving the Collatz Conjecture is left as an exercise for the reader. :)

The Problem

A Collatz sequence starts with some positive integer x₀ and develops the sequence inductively as xₙ₊₁ = xₙ / 2 if xₙ is even, or 3xₙ + 1 if xₙ is odd. For instance, starting with 13, we get:

- x₀ = 13

- x₁ = 3(13) + 1 = 40

- x₂ = 40 / 2 = 20

- x₃ = 20 / 2 = 10

- x₄ = 10 / 2 = 5

- x₅ = 3(5) + 1 = 16

- x₆ = 16 / 2 = 8

- x₇ = 8 / 2 = 4

- x₈ = 4 / 2 = 2

- x₉ = 2 / 2 = 1

- x₁₀ = 3(1) + 1 = 4

- x₁₁ = 4 / 2 = 2

- x₁₂ = 2 / 2 = 1
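For reference, the sequence above is easy to reproduce in Haskell. This is a minimal sketch. The names `collatz` and `collatzSequence` are illustrative and do not come from any library.

```haskell
-- | One step of the Collatz map on positive integers.
collatz :: Integer -> Integer
collatz n
  | even n = n `div` 2
  | otherwise = 3 * n + 1

-- | The sequence x0, x1, x2, ... (infinite, since 1 -> 4 -> 2 -> 1 repeats).
collatzSequence :: Integer -> [Integer]
collatzSequence = iterate collatz
```

`take 13 (collatzSequence 13)` yields `[13,40,20,10,5,16,8,4,2,1,4,2,1]`, matching x₀ through x₁₂.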

From there, it's apparent that the sequence repeats 1, 4, 2, 1, 4, 2, … forever. That is one way for a Collatz sequence to end up. The famous question here, known as the Collatz Conjecture, is whether it's the only way any such sequence can end up. Not necessarily! There could be other cycles besides 1, 4, 2. Or there could be a sequence that keeps increasing forever without repeating a number. Or maybe that never happens. No one knows!

We do know a few things. First, if these things happen, they only happen with astronomically large numbers that even powerful computers haven't been able to check. We know that if even a single number is repeated, then that part of the sequence will repeat forever, since the whole tail of the sequence is determined by any single number in it. And we know that such a sequence cannot decrease forever, since Collatz sequences remain positive integers, so it would eventually reach numbers that we know end up in the 1, 4, 2 loop. We also know that there are other loops in Collatz sequences that begin with negative integers, so the fact that none have been found so far in the positive integers is at least a little surprising.

The Collatz Conjecture is famous because it's probably one of the easiest unsolved math problems for mathematical novices to understand. There's no Riemann zeta function to define. Just even and odd numbers, division by two, multiplication by three, and adding one. That doesn't mean it's easy to solve, though! Many mathematicians and countless novices have spent decades working on the problem, and there's no promising road to a solution. The mathematician Erdős suggested that it's not simply that no one has found the solution, but that mathematics is lacking even the basic tools needed to work on this problem.

Collatz and Alternate Bases

There are many, many ways to think about the Collatz conjecture, but one of them is to look at the computation in different bases. We’re not really attempting to find a more efficient way to compute Collatz sequences. If we cared about that, it would be far more efficient to use whatever representation our computing hardware is designed for! Rather, what we’re looking for here is the possibility of some kind of pattern in the computation that reveals something analytical about the problem.

Addition works essentially the same way regardless of base, but the computations involved in multiplication and division depend heavily on the choice of base! Since the definition of the Collatz sequence involves dividing by two and multiplying by three, two natural choices for computing Collatz sequences are base 2 (binary) and base 3 (ternary).

- In base 2, it’s trivial to decide whether a number is even or odd, and to divide by two. On the other hand, computing 3n+1 is less trivial, requiring a pass over potentially every digit in the number.

- In base 3, the opposite happens. Computing 3n+1 is now trivial. But recognizing that a number is even and dividing by two now require a pass over every digit.

Let’s jump into the details and see what happens.

Base 3 in Detail

Base 3 representations are appealing for the Collatz sequence because it's trivial to compute 3n+1. It amounts to simply adding a 1 to the end of the representation, shifting everything else left (i.e., multiplying it by 3) to make room. If you have n = 1201 (decimal 46), for example, then 3n+1 = 12011 (decimal 139).
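To see the shift concretely, here is a small Haskell sketch using plain `Int` digit lists, most significant digit first. The helper names are hypothetical, and `toTernary` assumes a positive input.

```haskell
-- | Value of a base 3 digit list, most significant digit first.
fromTernary :: [Int] -> Integer
fromTernary = foldl (\acc d -> 3 * acc + fromIntegral d) 0

-- | Base 3 digits of a positive integer, most significant first.
toTernary :: Integer -> [Int]
toTernary 0 = []
toTernary n = toTernary (n `div` 3) ++ [fromIntegral (n `mod` 3)]

-- | 3n + 1 in base 3: shift everything left and append a 1 digit.
threeNPlusOne :: [Int] -> [Int]
threeNPlusOne ds = ds ++ [1]
```

`fromTernary [1,2,0,1]` is 46, and `fromTernary (threeNPlusOne [1,2,0,1])` is 139.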

The more difficult tasks are:

- Determining whether the number is even or odd. Unlike decimal, we cannot simply look at the last digit. Instead, a number in base 3 is even if and only if it has an even number of 1s in its representation. Every power of 3 is odd, so each digit contributes its own parity to the total, and the 2s contribute nothing. That's not hard to count, but it does require looking at the entire sequence of digits.

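- Dividing by two. Given a sequence of base 3 digits, we can express the long division algorithm as a state machine that reads the digits from left to right (most significant first), with remainders as states (starting with zero), using the following division table.

| Remainder | Digit | Output digit | Next remainder |
|---|---|---|---|
| 0 | 0 | 0 | 0 |
| 0 | 1 | 0 | 1 |
| 0 | 2 | 1 | 0 |
| 1 | 0 | 1 | 1 |
| 1 | 1 | 2 | 0 |
| 1 | 2 | 2 | 1 |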

Let’s see how this table works with an example. Starting again with 1201 (decimal 46):

- We always start with a remainder of 0. The first digit is 1. That’s the second line of the table. The output digit is, therefore, 0, and the next remainder is 1.

- A remainder of 1 and a digit of 2 is the last line of the table. It tells us to add a 2 to the output, and proceed with a remainder of 1.

- A remainder of 1 and a digit of 0 is the fourth line. We add a 1 to the output, and proceed with a remainder of 1.

- A remainder of 1 and a digit of 1 is the fifth line. We add a 2 to the output and proceed with a remainder of 0.

- We’re now out of digits. The quotient is 0212 (decimal 23, but note that leading zero which we’ll talk about later!) and the remainder is 0.
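Both tasks can be sketched in a few lines of Haskell on plain `Int` digits, most significant first. The names are hypothetical. `halveTernary` follows the division table, carrying the remainder r as its state and emitting (3r + d) div 2 for each digit d.

```haskell
-- | Even iff the number of 1 digits is even (every power of 3 is odd).
isEvenTernary :: [Int] -> Bool
isEvenTernary ds = even (length (filter (== 1) ds))

-- | Long division by 2, most significant digit first.
-- Returns the quotient digits (possibly with a leading zero) and the remainder.
halveTernary :: [Int] -> ([Int], Int)
halveTernary = go 0
  where
    go r [] = ([], r)
    go r (d : ds) =
      let v = 3 * r + d -- value of the remainder and current digit
          (qs, rFinal) = go (v `mod` 2) ds
       in (v `div` 2 : qs, rFinal)
```

`halveTernary [1,2,0,1]` returns `([0,2,1,2],0)`. That is the same quotient (with its leading zero) and remainder as the walk-through.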

Naively, we would have to make two passes over the current number: one to determine whether it’s even or odd, and then again, if it’s even, to divide by two. We can avoid this, though, by remembering that if a number is odd, we intend to compute 3n+1, which will always be even (because the product of two odd numbers is odd, so adding one makes it even), so we’ll then divide that by two. A little algebra reveals that (3n+1)/2 = 3(n/2 - 1/2) + 2 = 3⌊n/2⌋ + 2 if n is odd.

What this means is that we can go ahead and halve n regardless of whether it’s even or odd. At the end, we’ll know whether there’s a remainder or not, and if so, we will already be in position to append a 2 (rather than a 1 as discussed earlier) to the halved number and rejoin the original sequence. This skips one step of the Collatz sequence, but that’s okay. If our goal is only to determine whether the sequence eventually reaches 1, it doesn’t change the answer if we take this shortcut.
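The shortcut is easy to check with ordinary integers. This is a minimal sketch (the name `shortcut` is hypothetical) that applies the combined rule directly to positive integers.

```haskell
-- | Halve even numbers; for odd n, jump straight to (3n + 1) / 2,
-- computed as 3 * floor(n / 2) + 2.
shortcut :: Integer -> Integer
shortcut n
  | even n = n `div` 2
  | otherwise = 3 * (n `div` 2) + 2
```

`take 15 (iterate shortcut 46)` gives `[46,23,35,53,80,40,20,10,5,8,4,2,1,2,1]`.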

Appending that 2 to the end of the number changes the meaning of our state transition table a little bit. Instead of automatically quitting when we reach the end of the current number, we’ll need a chance to append another digit at the end. We’ll add rows to the table for what to do after all the digits have been seen, and be explicit about when to terminate (i.e., finish processing).

There's one more detail we can handle as we go: as we saw earlier, dividing by two can produce a leading zero at the beginning of the result, which is unnecessary. We can arrange to never produce that leading zero at all, so we don't need to ignore or remove it later. We just need to remember when we're just starting and therefore don't need to write leading zeros. In that case, the remainder is always zero, so there's only one state to add.

We get the following state transition table.

Since there are no leading zeros in the representations, we need not concern ourselves with the case where the first digit encountered is a zero. If we want to handle it anyway, we can produce no output and remain in the Just Starting state, since it ought to change nothing. I've done so in the code below.

We can iterate this state machine on ternary numbers, and get consecutive values from the Collatz sequence, though slightly abbreviated because we combined the 3n+1 step with the following division by 2. The Collatz conjecture is now equivalent to the proposition that this iterated state machine will eventually produce only a single 1 digit.

I’ve implemented this in the Haskell programming language as follows:

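```haskell
import Data.Foldable (traverse_)
import System.Environment (getArgs)

data Ternary = T0 | T1 | T2 deriving (Eq, Read, Show)

-- One step of the state machine; digits are read most significant first.
-- si: just starting (leading zeros suppressed); s0, s1: current remainder.
step3 :: [Ternary] -> [Ternary]
step3 = si
  where
    si (T0 : xs) = si xs
    si (T1 : xs) = s1 xs
    si (T2 : xs) = T1 : s0 xs
    si [] = []

    s0 (T0 : xs) = T0 : s0 xs
    s0 (T1 : xs) = T0 : s1 xs
    s0 (T2 : xs) = T1 : s0 xs
    s0 [] = [] -- even: the halved number is complete

    s1 (T0 : xs) = T1 : s1 xs
    s1 (T1 : xs) = T2 : s0 xs
    s1 (T2 : xs) = T2 : s1 xs
    s1 [] = [T2] -- odd: append 2, giving 3 * floor(n/2) + 2 = (3n+1)/2

main :: IO ()
main = do
  -- Expects a single argument such as "[T1, T2, T0, T1]".
  [n] <- fmap read <$> getArgs
  traverse_ print (iterate step3 n)
```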

And here’s a sample result:

```
$ cabal run exe:collatz3 '[T1, T2, T0, T1]' | head -15
[T1,T2,T0,T1]
[T2,T1,T2]
[T1,T0,T2,T2]
[T1,T2,T2,T2]
[T2,T2,T2,T2]
[T1,T1,T1,T1]
[T2,T0,T2]
[T1,T0,T1]
[T1,T2]
[T2,T2]
[T1,T1]
[T2]
[T1]
[T2]
[T1]
```

Starting with 1201 (decimal 46), we get 212 (decimal 23), 1022 (decimal 35), 1222 (decimal 53), 2222 (decimal 80), 1111 (decimal 40), 202 (decimal 20), 101 (decimal 10), 12 (decimal 5), 22 (decimal 8), 11 (decimal 4), 2, 1, 2, 1, … As predicted, that’s the Collatz sequence, except for the omission of 3n+1 terms since their computation is merged into the following division by two.

Base 2 in Detail

So what happens in base 2 (binary)? It’s a curiously related but different story!

- Determining whether a number is even or odd is trivial: just look at the last bit and observe whether it is 0 or 1.