Yun Liu 刘昀

PhD candidate at IIIS, Tsinghua University.

- Beijing, China

- Tsinghua University

- Google Scholar

- GitHub

Publications

Unleashing Humanoid Reaching Potential via Real-world-Ready Skill Space

Zhikai Zhang* , Chao Chen* , Han Xue* , Jilong Wang , Sikai Liang , Yun Liu , Zongzhang Zhang , He Wang , Li Yi

Robotics and Automation Letters (RA-L), 2025, with a presentation at ICRA 2026

Project Page | arXiv | Code

A large-range humanoid-reaching policy with a learned skill space encoding various real-world-ready motor skills.

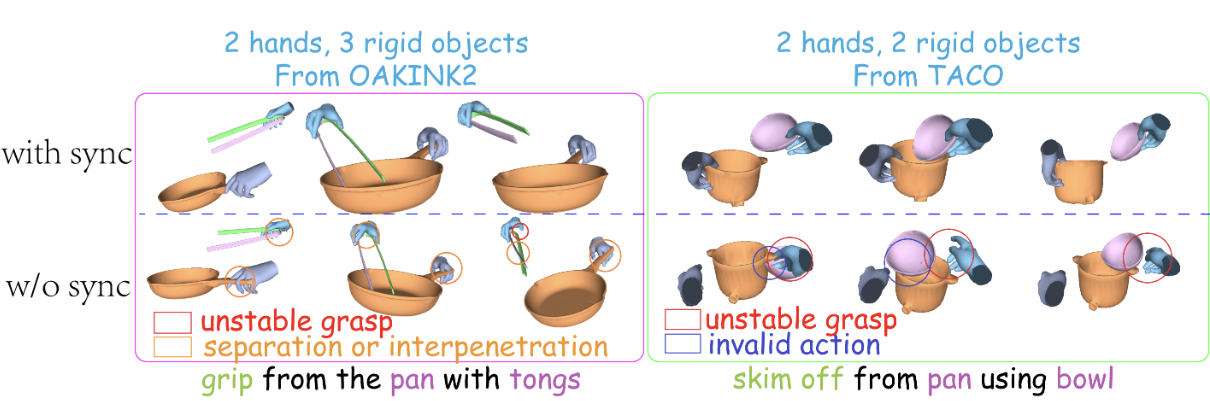

SyncDiff: Synchronized Motion Diffusion for Multi-Body Human-Object Interaction Synthesis

Wenkun He , Yun Liu , Ruitao Liu , Li Yi

International Conference on Computer Vision (ICCV), 2025

Project Page | Paper | arXiv

A motion diffusion model for synchronized multi-body human-object interaction motion synthesis, built around two core designs: synchronization prior injection and frequency decomposition.

Yun Liu* , Bowen Yang* , Licheng Zhong* , He Wang , Li Yi

CVPR Workshop on Humanoid Agents, 2025, Spotlight

The first comprehensive benchmark for generalizable humanoid-scene interaction learning via human mimicking. It integrates a large-scale, diverse human skill reference dataset covering both synthetic and real-world human-scene interactions, develops a general skill-learning paradigm, and supports both pipeline-wise and modular evaluation.

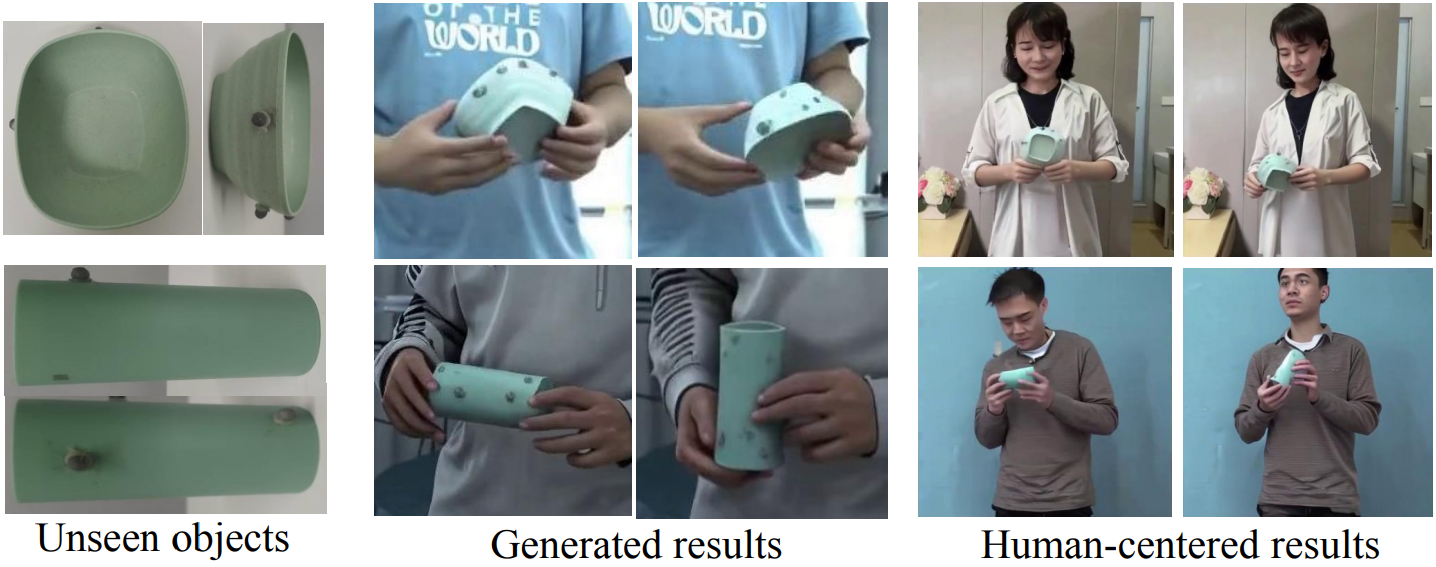

ManiVideo: Generating Hand-Object Manipulation Video with Dexterous and Generalizable Grasping

Youxin Pang , Ruizhi Shao , Jiajun Zhang , Hanzhang Tu , Yun Liu , Boyao Zhou , Hongwen Zhang , Yebin Liu

Computer Vision and Pattern Recognition (CVPR), 2025, Highlight

Project Page | Paper | arXiv

A method for generalizable hand-object manipulation video generation.

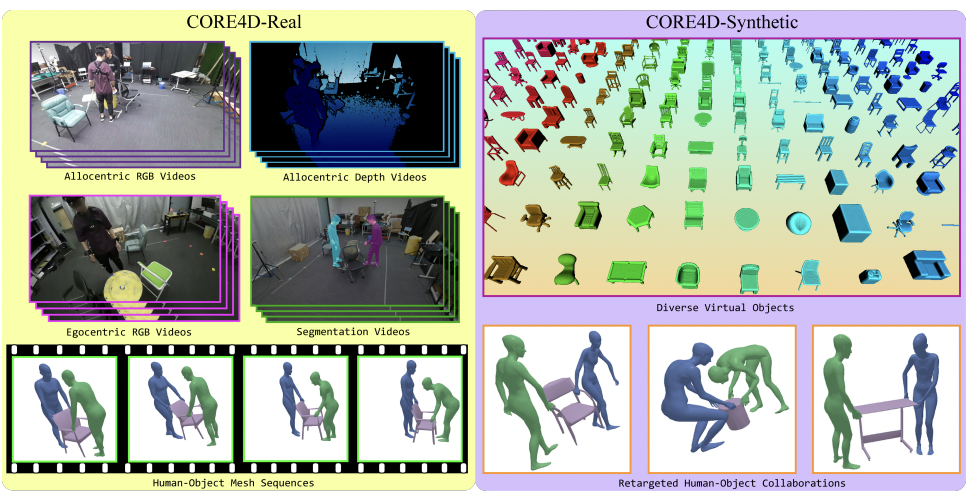

CORE4D: A 4D Human-Object-Human Interaction Dataset for Collaborative Object REarrangement

Yun Liu* , Chengwen Zhang* , Ruofan Xing , Bingda Tang , Bowen Yang , Li Yi

Computer Vision and Pattern Recognition (CVPR), 2025

Project Page | Paper | arXiv | Code | Data

A large-scale 4D human-object-human interaction dataset for collaborative object rearrangement, integrating real-world and synthetic data.

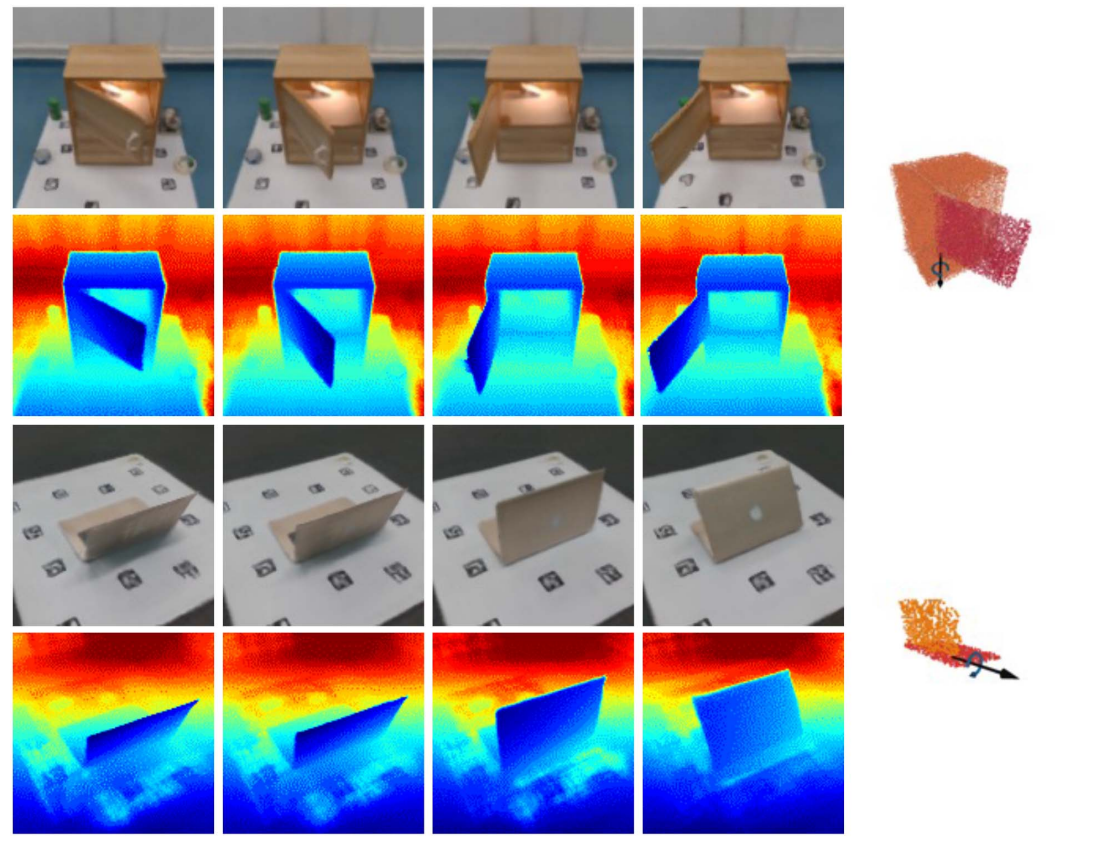

Yahao Shi , Ye Tao , Mingjia Yang , Yun Liu , Li Yi , Bin Zhou

IEEE Transactions on Visualization and Computer Graphics (TVCG), 2024

A 4D geometry and motion reconstruction method for articulated objects.

TACO: Benchmarking Generalizable Bimanual Tool-ACtion-Object Understanding

Yun Liu , Haolin Yang , Xu Si , Ling Liu , Zipeng Li , Yuxiang Zhang , Yebin Liu , Li Yi

Computer Vision and Pattern Recognition (CVPR), 2024

Project Page | Paper | arXiv | Code | Data

A large-scale real-world bimanual hand-object manipulation dataset covering extensive tool-action-object combinations.

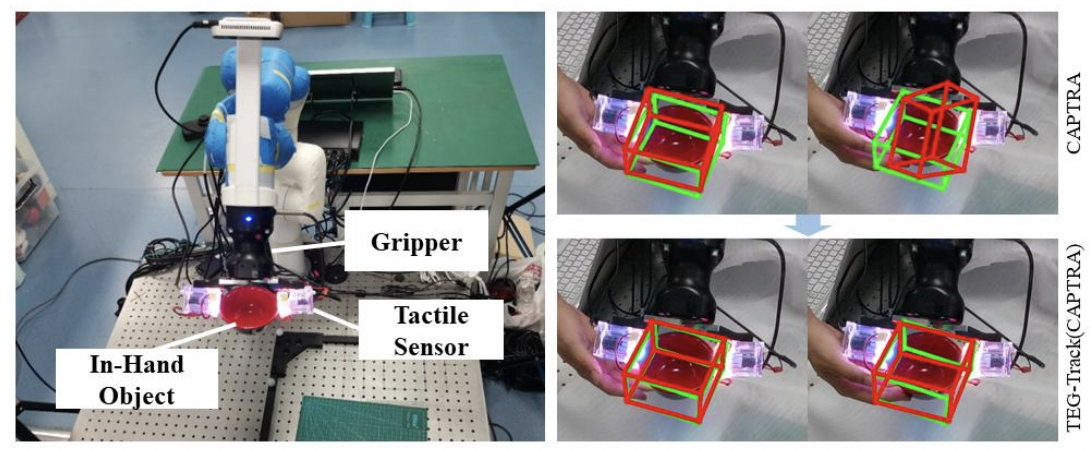

Enhancing Generalizable 6D Pose Tracking of an In-Hand Object with Tactile Sensing

Yun Liu* , Xiaomeng Xu* , Weihang Chen , Haocheng Yuan , He Wang , Jing Xu , Rui Chen , Li Yi

Robotics and Automation Letters (RA-L), 2023, with an oral presentation at ICRA 2024

TEG-Track, a visual-tactile 6D pose tracking method for in-hand objects that generalizes to new objects.

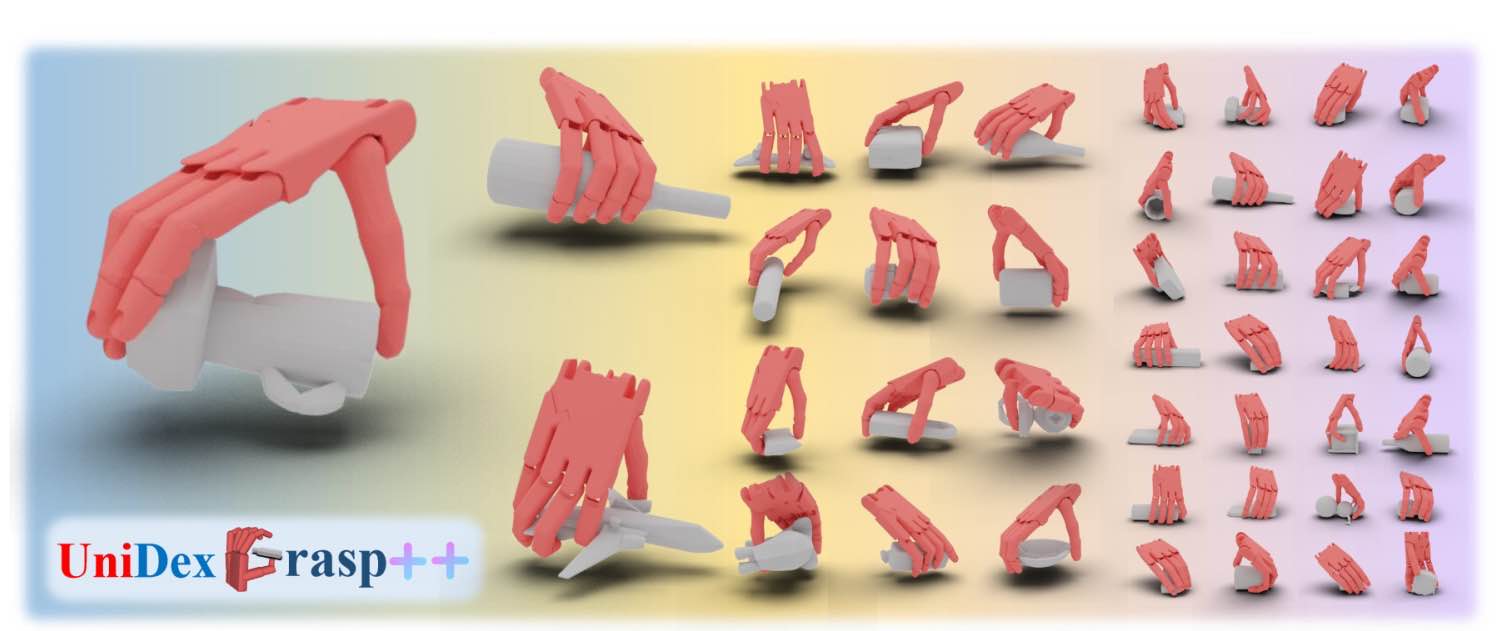

Weikang Wan* , Haoran Geng* , Yun Liu , Zikang Shan , Yaodong Yang , Li Yi , He Wang

Robotics and Automation Letters (RA-L), 2023, Best Paper Finalist

Project Page | Paper | arXiv | Code | Data

An object-agnostic method to learn a universal policy for dexterous object grasping from realistic point cloud observations and proprioception.

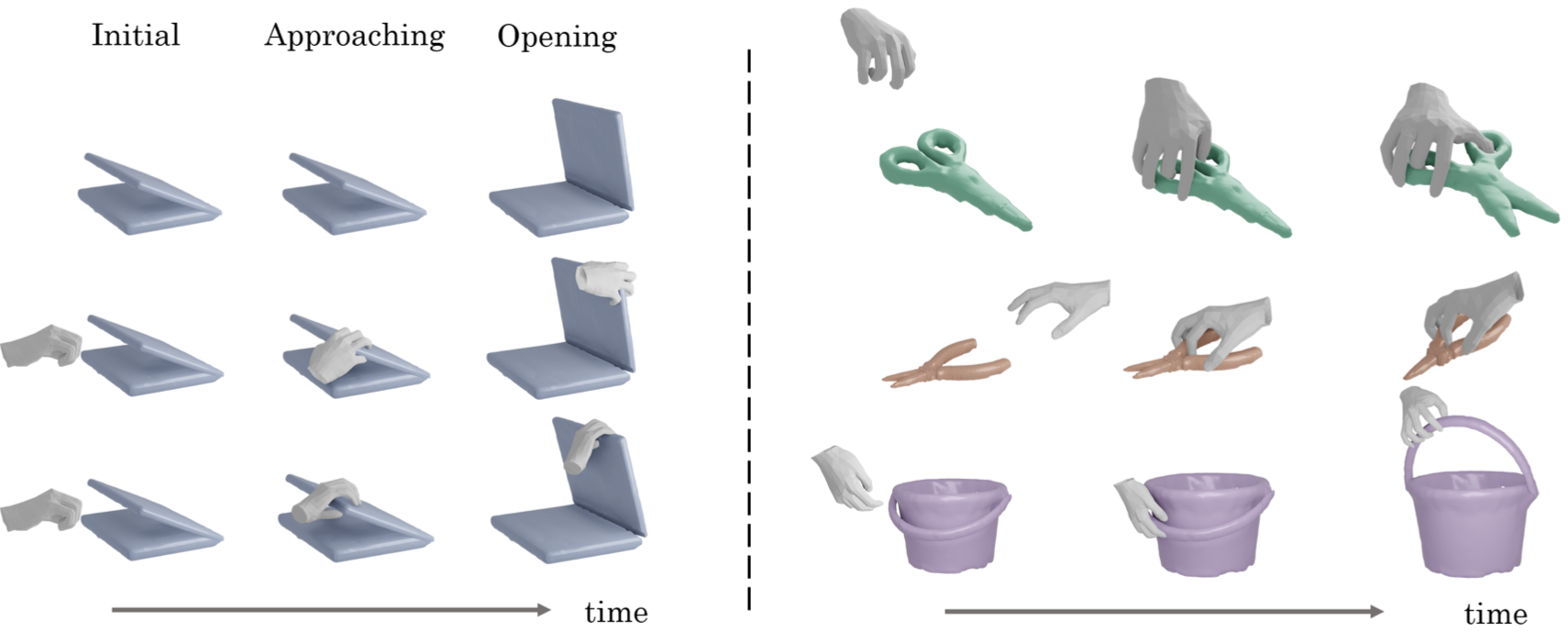

Juntian Zheng* , Lixing Fang* , Qingyuan Zheng* , Yun Liu , Li Yi

Computer Vision and Pattern Recognition (CVPR), 2023

Project Page | Paper | arXiv | Code | Data

A motion synthesis method for category-level functional hand-object manipulation.

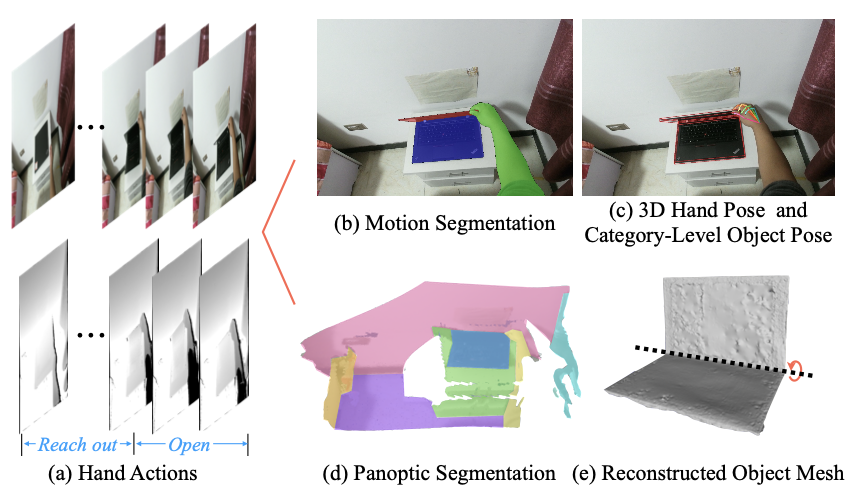

HOI4D: A 4D Egocentric Dataset for Category-Level Human-Object Interaction

Yunze Liu* , Yun Liu* , Che Jiang , Kangbo Lyu , Weikang Wan , Hao Shen , Boqiang Liang , Zhoujie Fu , He Wang , Li Yi

Computer Vision and Pattern Recognition (CVPR), 2022

Project Page | Paper | arXiv | Code | Data

The first dataset for 4D egocentric category-level human-object interaction.