
New research published online in Cell Reports on December 12, 2013 (open access) with the nematode C. elegans suggests that combining mutants can lead to radical lifespan extension.

Scientists at the Buck Institute combined mutations in two pathways well known for lifespan extension and report a synergistic five-fold extension of longevity — these worms lived to the human equivalent of 400 to 500 years — introducing the possibility of combination therapy for aging and the maladies associated with it.

See on www.kurzweilai.net


A hand-held spectrometer pioneered by Toronto-based TellSpec can determine exactly what is in the user’s food and display it on his or her smartphone.

See on singularityhub.com


My end of 2013 post, fresh from the oven: Of course but Maybe, (a 2013 take in ten points)

See on spacecollective.org


Researchers have discovered a cause of aging in mammals that may be reversible: a series of molecular events that enable communication inside cells between the nucleus and mitochondria.

As communication breaks down, aging accelerates. By administering a molecule naturally produced by the human body, scientists restored the communication network in older mice. Subsequent tissue samples showed key biological hallmarks that were comparable to those of much younger animals.

“The aging process we discovered is like a married couple — when they are young, they communicate well, but over time, living in close quarters for many years, communication breaks down,” said Harvard Medical School Professor of Genetics David Sinclair, senior author on the study. “And just like with a couple, restoring communication solved the problem.”

See on www.kurzweilai.net


Researchers in Germany have hijacked a natural mini-motor to do their minuscule medical work: the sperm.

–

Building a motor small enough to move a single cell can be terribly tricky. That’s why researchers in Germany have decided to instead hijack a natural mini-motor: the sperm.

Why sperm, you ask? As described in New Scientist,

Sperm cells are an attractive option because they are harmless to the human body, do not require an external power source, and can swim through viscous liquids.

But sperm don’t inherently go where you want them to go. Thus the scientists used some clever nano-engineering to rein them in.

Scientists put bull sperm cells in a petri dish along with a couple dozen iron-titanium nanotubes. The tubes act like those woven fingertraps—sperm can swim into them but can’t back themselves out. Using magnets, scientists can then steer the swimmers in the direction of their choosing. It’s like a remote-control robot where the sperm start the engines and the researchers provide the navigation.

See on blogs.discovermagazine.com


The existence of the Higgs boson particle was confirmed, a strong case for human-caused climate change was released, and scientists analyzed the oldest-known human DNA

–

From the confirmation of long-sought elementary particles to the discovery of a lost lake on Mars, 2013 has been an exciting year in science. But did it live up to expectations?

Researchers confirmed the existence of the Higgs boson particle in March, made a strong case for human-caused climate change in September and analyzed the oldest-known human DNA in December. They explored the site of a former lake on planet Mars, and speculated that perhaps fresh water still flows on the Red Planet.

Last year, LiveScience reached out to scientists in different fields and asked them for their science wishes for 2013. To find out if any of the advances of this year made those wishes come true, we re-contacted those same researchers. Here’s what they had to say.

See on www.scientificamerican.com


The decline of religion and the rise of the “nones”

–

Since the early 20th century, with the rise of mass secular education and the diffusion of scientific knowledge through popular media, predictions of the deity’s demise have fallen short, and in some cases—such as in that of the U.S.—religiosity has actually increased. This ratio is changing. According to a 2013 survey of 14,000 people in 13 nations (Germany, France, Sweden, Spain, Switzerland, Turkey, Israel, Canada, Brazil, India, South Korea, the U.K. and the U.S.) that was conducted by the German Bertelsmann Foundation for its Religion Monitor, there is both widespread approval for the separation of church and state, as well as a decline in religiosity over time and across generations.

See on www.scientificamerican.com


Wolfram gave me a glimpse under the hood in an hour-long conversation. And I have to say, what I saw was amazing.

–

Google wants to understand objects and things and their relationships so it can give answers, not just results. But Wolfram wants to make the world computable, so that our computers can answer questions like “where is the International Space Station right now.” That requires a level of machine intelligence that knows what the ISS is, that it’s in space, that it is orbiting the Earth, what its speed is, and where in its orbit it is right now.

See on venturebeat.com

Microchips modeled on the brain may excel at tasks that baffle today’s computers.

–

Picture a person reading these words on a laptop in a coffee shop. The machine made of metal, plastic, and silicon consumes about 50 watts of power as it translates bits of information—a long string of 1s and 0s—into a pattern of dots on a screen. Meanwhile, inside that person’s skull, a gooey clump of proteins, salt, and water uses a fraction of that power not only to recognize those patterns as letters, words, and sentences but to recognize the song playing on the radio.

Computers are incredibly inefficient at lots of tasks that are easy for even the simplest brains, such as recognizing images and navigating in unfamiliar spaces. Machines found in research labs or vast data centers can perform such tasks, but they are huge and energy-hungry, and they need specialized programming. Google recently made headlines with software that can reliably recognize cats and human faces in video clips, but this achievement required no fewer than 16,000 powerful processors.

A new breed of computer chips that operate more like the brain may be about to narrow the gulf between artificial and natural computation—between circuits that crunch through logical operations at blistering speed and a mechanism honed by evolution to process and act on sensory input from the real world. Advances in neuroscience and chip technology have made it practical to build devices that, on a small scale at least, process data the way a mammalian brain does. These “neuromorphic” chips may be the missing piece of many promising but unfinished projects in artificial intelligence, such as cars that drive themselves reliably in all conditions, and smartphones that act as competent conversational assistants.

See on www.technologyreview.com

If you have ever sat in a doctor’s waiting room, next to someone with a hacking cough and with only a pile of out-of-date Reader’s Digests for company, then you may have asked whether the system was fit for 21st Century living.

The NHS seems under increasing pressure, from GP surgeries to accident and emergency rooms. It feels as if the healthcare system is in desperate need of CPR – the question is will technology be the thing that brings it back to life?

Daniel Kraft is a trained doctor who heads up the medicine school at the Singularity University, a Silicon Valley-based organisation that runs graduate and business courses on how technology is going to disrupt the status quo in a variety of industries.

When I interview him he is carrying a device that looks suspiciously like a Tricorder, the scanners that were standard issue in Star Trek.

“This is a mock-up of a medical tricorder that can scan you and get information. I can hold it to my forehead and it will pick up my heart rate, my oxygen saturation, my temperature, my blood pressure and communicate it to my smartphone,” he explains.

In future, Dr Kraft predicts, such devices will be linked to artificial intelligence agents on smartphones, which in turn will be connected to super-computers such as IBM’s Watson, to give people instant and accurate diagnoses.

See on www.bbc.co.uk


(Medical Xpress)—University of Queensland researchers have made a major leap forward in treating renal disease, today announcing they have grown a kidney using stem cells.


The breakthrough paves the way for improved treatments for patients with kidney disease and bodes well for the future of the wider field of bioengineering organs.

Professor Melissa Little from UQ’s Institute for Molecular Bioscience (IMB), who led the study, said new treatments for kidney disease were urgently needed.

“One in three Australians is at risk of developing chronic kidney disease and the only therapies currently available are kidney transplant and dialysis,” Professor Little said.

“Only one in four patients will receive a donated organ, and dialysis is an ongoing and restrictive treatment regime.

“We need to improve outcomes for patients with this debilitating condition, which costs Australia $1.8 billion a year.”

The team designed a protocol that prompts stem cells to form all the required cell types to ‘self-organise’ into a mini-kidney in a dish.

“During self-organisation, different types of cells arrange themselves with respect to each other to create the complex structures that exist within an organ, in this case, the kidney,” Professor Little said.

“The fact that such stem cell populations can undergo self-organisation in the laboratory bodes well for the future of tissue bioengineering to replace damaged and diseased organs and tissues.”

See on medicalxpress.com


Diagnoses have soared as makers of the drugs used to treat attention deficit hyperactivity disorder have found success with a two-decade marketing campaign.

After more than 50 years leading the fight to legitimize attention deficit hyperactivity disorder, Keith Conners could be celebrating.

Severely hyperactive and impulsive children, once shunned as bad seeds, are now recognized as having a real neurological problem. Doctors and parents have largely accepted drugs like Adderall and Concerta to temper the traits of classic A.D.H.D., helping youngsters succeed in school and beyond.

But Dr. Conners did not feel triumphant this fall as he addressed a group of fellow A.D.H.D. specialists in Washington. He noted that recent data from the Centers for Disease Control and Prevention show that the diagnosis had been made in 15 percent of high school-age children, and that the number of children on medication for the disorder had soared to 3.5 million from 600,000 in 1990. He questioned the rising rates of diagnosis and called them “a national disaster of dangerous proportions.”

“The numbers make it look like an epidemic. Well, it’s not. It’s preposterous,” Dr. Conners, a psychologist and professor emeritus at Duke University, said in a subsequent interview. “This is a concoction to justify the giving out of medication at unprecedented and unjustifiable levels.”

See on www.nytimes.com

Scientists are hoping a new technique will let them “see” pain in the bodies of hurting people, so doctors won’t have to rely solely on patients’ sometimes unclear accounts of their own pain.

Past research has shown a link between pain and a certain kind of molecule in the body, called a sodium channel—a protein that helps nerve cells transmit pain and other sensations to the brain. Certain types of sodium channel are over-produced at the site of an injury. So researchers set out to develop a way to make the resulting over-concentrations of sodium channels visible in scanning images.

Current ways to diagnose pain basically involve asking the patient if something hurts. This can lead doctors astray for a variety of reasons, including if a patient can’t communicate well or doesn’t want to talk about the pain. It can also be hard to tell how well a treatment is really working.

No existing method can measure pain intensity objectively or help physicians pinpoint where the pain is, said Sandip Biswal of Stanford University in California and colleagues, who described their new technique Nov. 21 online in the Journal of the American Chemical Society.

Biswal and colleagues tested the technique in rats.

They used an existing scanning method known as positron emission tomography (PET) scan, which uses a harmless radioactive substance called a tracer to look for disease in the body. They also turned to a small molecule called saxitoxin, produced naturally by certain types of microscopic marine creatures, and attached a signal to it so they could trace it by PET imaging.

When the researchers injected the molecule into rats, often a stand-in for humans in lab tests, they saw that the molecule accumulated where the rats had nerve damage. The rats didn’t show signs of toxic side effects, the scientists said, adding that the work is one of the first attempts to mark these sodium channels in a living animal.

See on www.world-science.net



Recent news coming from The New York Times reports that Google has confirmed the acquisition of Boston Dynamics. Google revealed sometime last week that it already had plenty of robot projects underway, and they’re headed up by Andy Rubin, the same man who made the company’s Android operating system. Boston Dynamics is the engineering company that built DARPA projects like WildCat/Cheetah, Atlas, Petman, and Big Dog. Adding Boston Dynamics to its arsenal of robotics acquisitions is an indication that Google wants in on the action of “robots-are-our-future.” At the moment there is no word on specific products to expect or on how much Google paid for the acquisition, but we all know that Google+Robotics = kickass consumer robots. Check out the video of the Petman robot from Boston Dynamics below.

See on techmymoney.com

In the coming decade, the internet will be about bringing people together in the real world. Highlight wants to be the social network that rings in this new era.

It’s something entirely different than the networks we know today, built not on distant friendships and disembodied interactions but instead on whoever happens to be around you at a given moment. The newest version of the app, available for iPhone and Android, uses every sensor, signal, and stream it can get its hands on to passively figure out what you’re doing, and it intelligently scans users nearby to figure out who you might be interested in. It’s not necessarily about people you know but people you could know. And that makes it both way cooler and way creepier than Facebook could ever dream of being.

See on www.wired.com


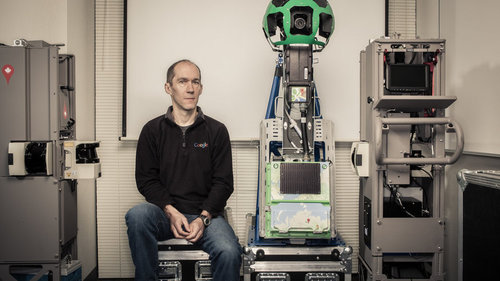

In the battle for digital dominance, victory depends on being the first to map every last place on the globe. It’s as hard as it sounds.

See on www.nytimes.com


(CNN) — When IBM’s Watson system defeated the human champion on “Jeopardy!” in February 2011, it surprised the world with its unprecedented command of a vast array of facts, puns, and clever questions.

But how will that feat change our lives over the next decade? What does it mean for the future of intelligent machines?

Watson accumulated its wide-ranging knowledge by “reading” the equivalent of millions of books, foreshadowing a revolution in how computers acquire, analyze, and create knowledge.

Given the explosive volume of text available to anyone today in the form of web pages, articles, tweets and more, automatic machine reading is a critical part of technology’s future.

Here are five ways we predict the Machine-Reading Revolution will change your life in the coming decade:

1. Being a scientific assistant
2. Answering questions
3. Being an online shopping concierge
4. Acting as an automated medical assistant
5. Delivering real-time statistics

See on edition.cnn.com


The world’s largest film industry—that’d be India’s—is largely barren of the superhero and spaceship films that dominate Hollywood. What, exactly, accounts for the difference?

–

Hollywood’s had a long love affair with sci-fi and fantasy, but the romance has never been stronger than it is today. A quick glance into bookstores, television lineups, and upcoming films shows that the futuristic and fantastical is everywhere in American pop culture. In fact, of Hollywood’s top earners since 1980, a mere eight have not featured wizardry, space or time travel, or apocalyptic destruction caused by aliens/zombies/Robert Downey Jr.’s acerbic wit. Now, with Man of Steel, it appears we will at last have an effective reboot of the most important superhero story of them all.

These tales of mystical worlds and improbable technological power appeal universally, right? Maybe not. Bollywood, not Hollywood, is the largest movie industry in the world. But only a handful of its top hits of the last four decades have dealt with science fiction themes, and even fewer are fantasy or horror. American films in those genres make much of their profits abroad, but they tend to underperform in front of Indian audiences.

This isn’t to say that there aren’t folk tales with magic and mythology in India. There are. That makes their absence in Bollywood and their overabundance in Hollywood all the more remarkable. Whereas Bollywood takes quotidian family dramas and imbues them with spectacular tales of love and wealth found-lost-regained amidst the pageantry of choreographed dance pieces, Hollywood goes to the supernatural and futurism. It’s a sign that longing for mystery is universal, but the taste for science fiction and fantasy is cultural.

See on www.theatlantic.com


Synthetic biology moves us from reading to writing DNA, allowing us to design biological systems from scratch for any number of applications. Its capabilities are becoming clearer, its first products and processes emerging. Synthetic biology’s reach already extends from reducing our dependence on oil to transforming how we develop medicines and food crops. It is being heralded as the next big thing; whether it fulfils that expectation remains to be seen. It will require collaboration and multi-disciplinary approaches to development, application and regulation. Interesting times ahead!

See on www.innovationmanagement.se


Google, in partnership with NASA and the Universities Space Research Association (USRA), has launched an initiative to investigate how quantum computing might lead to breakthroughs in machine learning, a branch of AI that focuses on the construction and study of systems that learn from data.

The new lab will use the D-Wave Two quantum computer. A recent study (see “Which is faster: conventional or quantum computer?”) confirmed the D-Wave One quantum computer was much faster than conventional machines at specific problems.

The machine will be installed at the NASA Advanced Supercomputing Facility at the NASA Ames Research Center in Mountain View, California.

“We hope it helps researchers construct more efficient and more accurate models for everything from speech recognition, to web search, to protein folding,” said Hartmut Neven, Google director of engineering.

Hybrid solutions

“Machine learning is highly difficult. It’s what mathematicians call an ‘NP-hard’ problem,” he said. “Classical computers aren’t well suited to these types of creative problems. Solving such problems can be imagined as trying to find the lowest point on a surface covered in hills and valleys.

“Classical computing might use what’s called a ‘gradient descent’: start at a random spot on the surface, look around for a lower spot to walk down to, and repeat until you can’t walk downhill anymore. But all too often that gets you stuck in a ‘local minimum’ — a valley that isn’t the very lowest point on the surface.

“That’s where quantum computing comes in. It lets you cheat a little, giving you some chance to ‘tunnel’ through a ridge to see if there’s a lower valley hidden beyond it. This gives you a much better shot at finding the true lowest point — the optimal solution.”
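The hills-and-valleys picture Neven describes can be tried out in a few lines of Python. This is only a toy sketch: the `SURFACE` heights are made up, `descend` is a plain greedy walk downhill, and `descend_with_hops` is a purely classical stand-in for the "tunnel through a ridge" idea (it simply peeks a few cells past the ridge). None of it models quantum annealing or how the D-Wave hardware actually works.

```python
# Hypothetical 1-D "surface": heights at integer positions (made-up values).
# The lowest point overall is index 6; index 1 is a shallower valley.
SURFACE = [5, 3, 4, 6, 2, 1, 0, 2, 7]


def descend(surface, start):
    """Greedy descent: keep stepping to the lower neighbour until none is lower."""
    pos = start
    while True:
        neighbours = [p for p in (pos - 1, pos + 1) if 0 <= p < len(surface)]
        best = min(neighbours, key=lambda p: surface[p])
        if surface[best] >= surface[pos]:
            return pos  # no downhill step left: a minimum, possibly only a local one
        pos = best


def descend_with_hops(surface, start, reach):
    """Classical stand-in for 'tunnelling' (an assumption for illustration):
    once stuck, look up to `reach` cells away for a strictly lower point,
    jump there, and resume the greedy descent."""
    pos = descend(surface, start)
    while True:
        lo = max(0, pos - reach)
        hi = min(len(surface), pos + reach + 1)
        candidate = min(range(lo, hi), key=lambda p: surface[p])
        if surface[candidate] >= surface[pos]:
            return pos  # nothing lower within reach
        pos = descend(surface, candidate)


stuck = descend(SURFACE, 0)               # walks 0 -> 1, then stops in the shallow valley
found = descend_with_hops(SURFACE, 0, 3)  # hops over the ridge at index 3, ends at index 6
print(stuck, SURFACE[stuck], found, SURFACE[found])
```

Starting from the left edge, the plain descent settles in the shallow valley at index 1, while the version that can look three cells past the ridge ends at the true lowest point, index 6. With a `reach` of 2 the ridge stays out of view and the result is the same as the plain descent.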

Google has already developed some quantum machine-learning algorithms, Neven said. “One produces very compact, efficient recognizers — very useful when you’re short on power, as on a mobile device. Another can handle highly polluted training data, where a high percentage of the examples are mislabeled, as they often are in the real world. And we’ve learned some useful principles: e.g., you get the best results not with pure quantum computing, but by mixing quantum and classical computing.”

See on www.kurzweilai.net


IN “SKYFALL”, the latest James Bond movie, 007 is given a gun that only he can fire. It works by recognising his palm print, rendering it impotent when it falls into a baddy’s hands. Like many of Q’s more fanciful inventions, the fiction is easier to conjure up than the fact. But there is a real-life biometric system that would have served Bond just as well: cardiac-rhythm recognition.

Anyone who has watched a medical drama can picture an electrocardiogram (ECG)—the five peaks and troughs, known as a PQRST pattern (see picture), that map each heartbeat. The shape of this pattern is affected by such things as the heart’s size, its shape and its position in the body. Cardiologists have known since 1964 that everyone’s heartbeat is thus unique, and researchers around the world have been trying to turn that knowledge into a viable biometric system. Until now, they have had little success. One group may, though, have cracked it.

Foteini Agrafioti of the University of Toronto and her colleagues have patented a system which constantly measures a person’s PQRST pattern, confirms this corresponds with the registered user’s pattern, and can thus verify to various devices that the user is who he says he is. Through a company called Bionym, which they have founded, they will unveil it to the world in June.

See on www.economist.com


When people create and modify their virtual reality avatars, the hardships faced by their alter egos can influence how they perceive virtual environments, according to researchers presenting their findings at 2013 Annual Conference on Human Factors…

–

When people create and modify their virtual reality avatars, the hardships faced by their alter egos can influence how they perceive virtual environments, according to researchers.

A group of students who saw that a backpack was attached to an avatar that they had created overestimated the heights of virtual hills, just as people in real life tend to overestimate heights and distances while carrying extra weight, according to Sangseok You, a doctoral student in the school of information, University of Michigan.

“You exert more of your agency through an avatar when you design it yourself,” said S. Shyam Sundar, Distinguished Professor of Communications and co-director of the Media Effects Research Laboratory, Penn State, who worked with You. “Your identity mixes in with the identity of that avatar and, as a result, your visual perception of the virtual environment is colored by the physical resources of your avatar.”

Researchers assigned random avatars to one group of participants, but allowed another group to customize their avatars. In each of these two groups, half of the participants saw that their avatar had a backpack, while the other half had avatars without backpacks, according to You.

When placed in a virtual environment with three hills of different heights and angles of incline, participants who customized their avatars perceived those hills as higher and steeper than participants who were assigned avatars by the researchers, Sundar said. They also overestimated the number of calories it would take to hike up the hill if their custom avatar had a backpack.

“If your avatar is carrying a backpack, you feel like you are going to have trouble climbing that hill, but this only happens when you customize the avatar,” said Sundar.

See on news.psu.edu


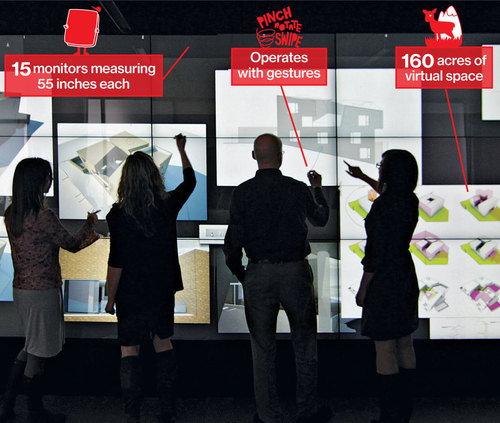

Haworth and Obscura Digital’s digital whiteboard can hold 160 acres of virtual space

Apple (AAPL) has rolled out smaller models of its iPad. Jeff Reuschel is thinking bigger. The global design director for office-furniture maker Haworth, in partnership with interactive display company Obscura Digital, has created a touchscreen that covers a conference-room wall. Like a supersize version of CNN’s (TWX) Magic Wall, Bluescape displays a unified image across 15 linked 55-inch flat-screen monitors, each equipped with 32 specialized sensors to read users’ hand movements. Unlike whiteboards or flip charts, it won’t require much erasing or page turning: When zoomed out as far as possible, the digital board’s virtual space totals 160 acres. Using Bluescape, corporate and university clients can store often scattershot brainstorming sessions in perpetuity. Co-workers or classmates can add digital sticky notes, either with a digital pen on the wall itself or by uploading documents from other devices, from which they can also browse the virtual space. “There are fewer and fewer people working in cubicles,” says Reuschel. “The old-fashioned vertical surfaces are going away.”

See on www.businessweek.com


Twitter, Facebook, Google… we know the internet is driving us to distraction. But could sitting at your computer actually calm you down? Oliver Burkeman investigates the slow web movement

–

Back in the summer of 2008 – a long time ago, in internet terms, two years before Instagram, and around the time of Twitter’s second birthday – the US writer Nicholas Carr published a now famous essay in the Atlantic magazine entitled Is Google Making Us Stupid? The more time he spent online, Carr reported, the more he experienced the sensation that something was eating away at his brain. “I’m not thinking the way I used to think,” he wrote. Increasingly, he’d sit down with a book, but then find himself unable to focus for more than two or three pages: “I get fidgety, lose the thread, begin looking for something else to do. I feel as if I’m always dragging my wayward brain back to the text.” Reading, he recalled, used to feel like scuba diving in a sea of words. But now “I zip along the surface like a guy on a jetski.”

In the half-decade since Carr’s essay appeared, we’ve endured countless scare stories about the life-destroying effects of the internet, and by and large they’ve been debunked. No, the web probably isn’t addictive in the sense that nicotine or heroin are; no, Facebook and Twitter aren’t guilty of “killing conversation” or corroding real-life friendship or making children autistic. Yes, the internet is “changing our brains”, but then so does everything – and, contrary to the claims of one especially panicky Newsweek cover story, it certainly isn’t “driving us mad”.

See on www.guardian.co.uk

Your data is your interface (via Pando Daily)

By Jarno Mikael Koponen on April 17, 2013

We all view the world differently and on our own terms. Each of us uses different words to describe the same book, movie, favorite food, person, work of art, or news article. We express our uniqueness by reviewing, tagging, commenting, liking, and rating things…

TEDxRotterdam – Igor Nikolic – Complex adaptive systems

On TEDxRotterdam Igor Nikolic left the audience in awe with his stunning presentation and visualizations, mapping complex systems.

Seth Lloyd is a Professor in the Department of Mechanical Engineering at the Massachusetts Institute of Technology (MIT). His talk, “Programming the Universe”, is about the computational power of atoms, electrons, and elementary particles.

A highly recommended watch.

How our modular brains lead us to deny and distort evidence

–

If you have pondered how intelligent and educated people can, in the face of overwhelming contradictory evidence, believe that evolution is a myth, that global warming is a hoax, that vaccines cause autism and asthma, that 9/11 was orchestrated by the Bush administration, conjecture no more. The explanation is in what I call logic-tight compartments—modules in the brain analogous to watertight compartments in a ship.

The concept of compartmentalized brain functions acting either in concert or in conflict has been a core idea of evolutionary psychology since the early 1990s. According to University of Pennsylvania evolutionary psychologist Robert Kurzban in Why Everyone (Else) Is a Hypocrite (Princeton University Press, 2010), the brain evolved as a modular, multitasking problem-solving organ—a Swiss Army knife of practical tools in the old metaphor or an app-loaded iPhone in Kurzban’s upgrade. There is no unified “self” that generates internally consistent and seamlessly coherent beliefs devoid of conflict. Instead we are a collection of distinct but interacting modules often at odds with one another. The module that leads us to crave sweet and fatty foods in the short term is in conflict with the module that monitors our body image and health in the long term. The module for cooperation is in conflict with the one for competition, as are the modules for altruism and avarice or the modules for truth telling and lying.

…

See on www.scientificamerican.com


Today, January 8, is Hawking’s birthday, yet on this day it’s worth examining just who and what we are really celebrating: the man, the mind, or … the machines?

–

Traditionally, assistants execute what the head directs or has thought of beforehand. But Hawking’s assistants – human and machine – complete his thoughts through their work; they classify, attribute meaning, translate, perform. Hawking’s example thus helps us rethink the dichotomy between humans and machines.

A highly recommended read.

See on www.wired.com
