
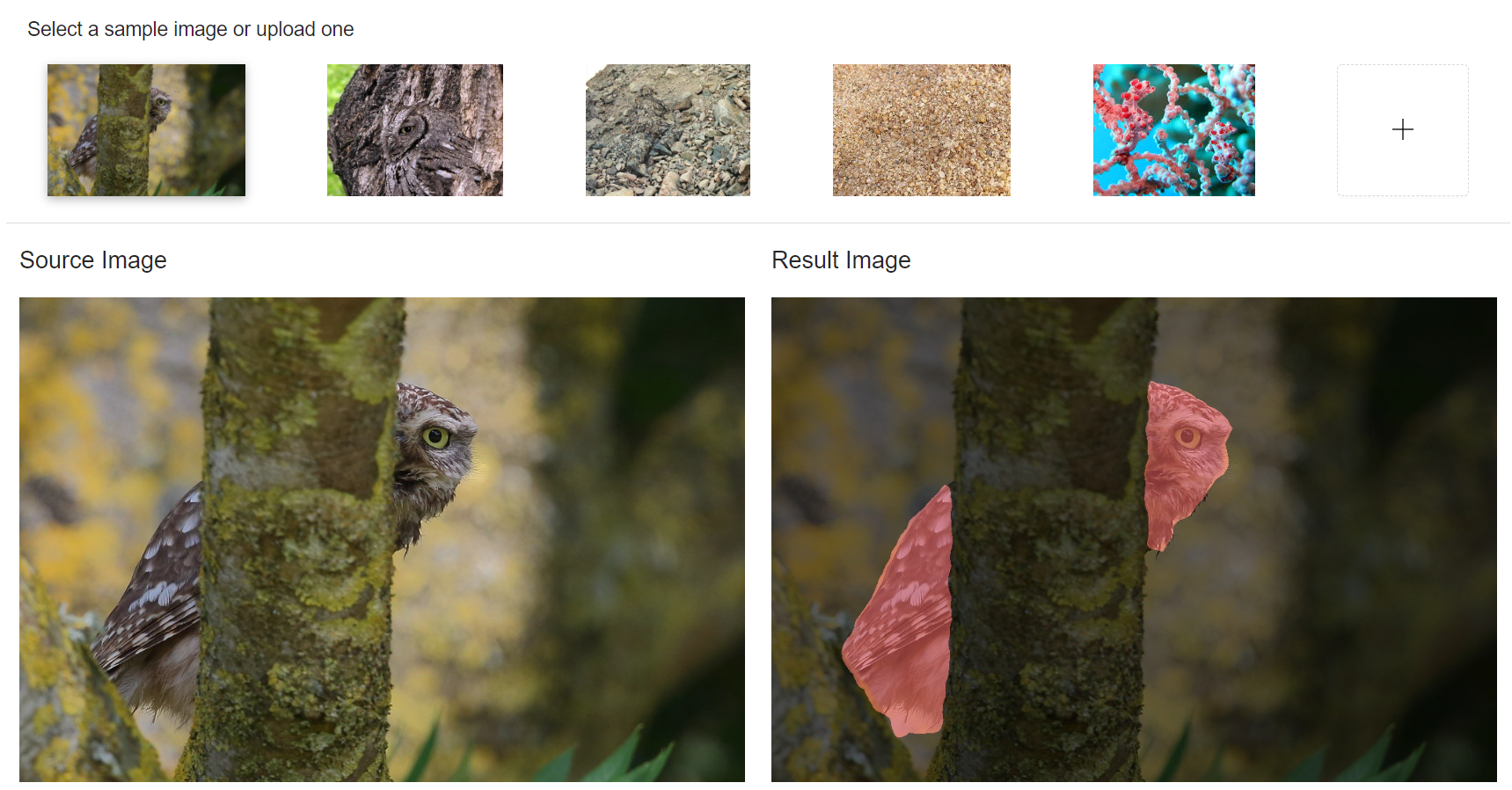

Application: Link

Overview

Sensory ecologists have found that this background-matching camouflage strategy works by deceiving the visual perceptual system of the observer. Naturally, addressing concealed object detection (COD) requires a significant amount of visual perception knowledge. Understanding COD has scientific value in itself and is also important for applications in many fields, such as computer vision (e.g., search-and-rescue work or rare species discovery), medicine (e.g., polyp segmentation, lung infection segmentation), agriculture (e.g., locust detection to prevent invasion), and art (e.g., recreational art). The high intrinsic similarity between targets and non-targets makes COD far more challenging than traditional object segmentation/detection. Although COD has gained increased attention recently, studies remain scarce, mainly because the field lacks a sufficiently large dataset and a standard benchmark comparable to Pascal-VOC, ImageNet, MS-COCO, ADE20K, and DAVIS.

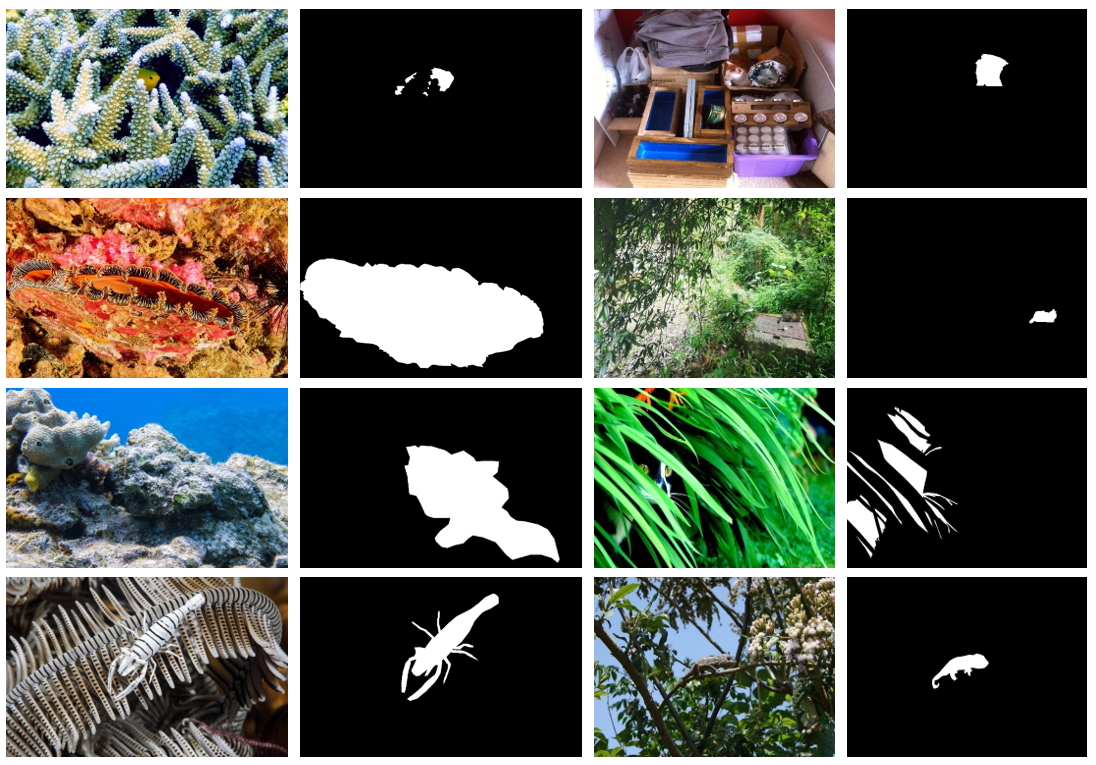

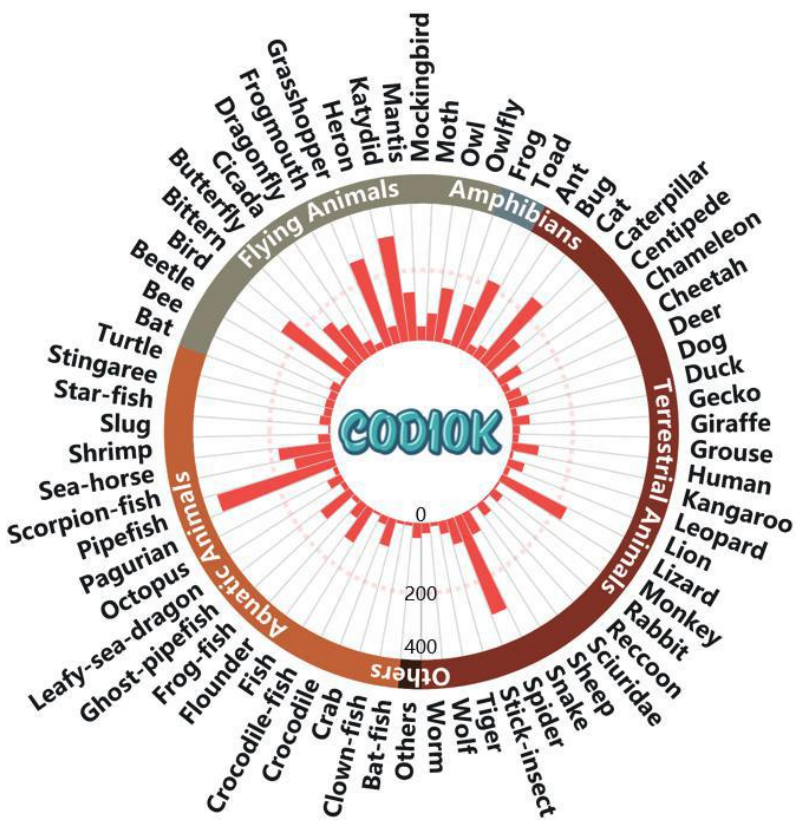

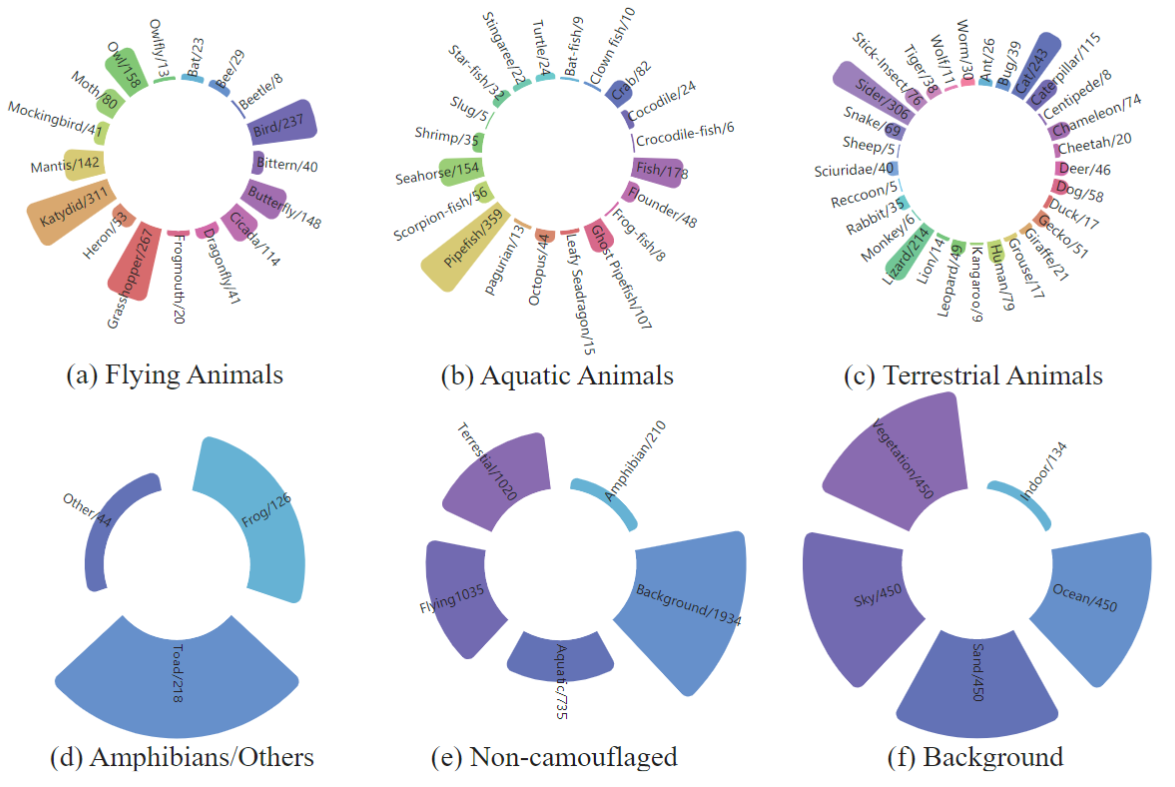

To provide a large-scale COD dataset, we built COD10K, which contains 10,000 images (5,066 camouflaged, 3,000 background, 1,934 non-camouflaged) collected from multiple photography websites and divided into 10 super-classes and 78 sub-classes (69 camouflaged, nine non-camouflaged).

Download

COD10K Dataset (10,000)

Training Set (6,000)

The training set (COD10K-Tr) contains 6,000 images including 3,040 camouflaged images and 2,960 non-camouflaged images.

Test Set (4,000)

The test set (COD10K-Te) contains 4,000 images including 2,026 camouflaged images and 1,974 non-camouflaged images.
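The split sizes quoted for COD10K-Tr and COD10K-Te can be cross-checked with simple arithmetic. The sketch below only re-adds the numbers stated on this page; the dictionary layout and variable names are illustrative and not part of any official COD10K tooling.

```python
# Image counts as stated on this page (keys and layout are illustrative).
splits = {
    "COD10K-Tr": {"camouflaged": 3040, "non_camouflaged": 2960},
    "COD10K-Te": {"camouflaged": 2026, "non_camouflaged": 1974},
}

# Total images per split, and totals across both splits.
split_totals = {name: sum(parts.values()) for name, parts in splits.items()}
camouflaged_total = sum(parts["camouflaged"] for parts in splits.values())
dataset_total = sum(split_totals.values())

print(split_totals)       # {'COD10K-Tr': 6000, 'COD10K-Te': 4000}
print(camouflaged_total)  # 5066
print(dataset_total)      # 10000
```

The camouflaged total of 5,066 matches the figure given in the overview.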

Non-Camouflaged Images (4,000)

The non-camouflaged set (COD10K-NonCAM) can be used for contrastive learning models.

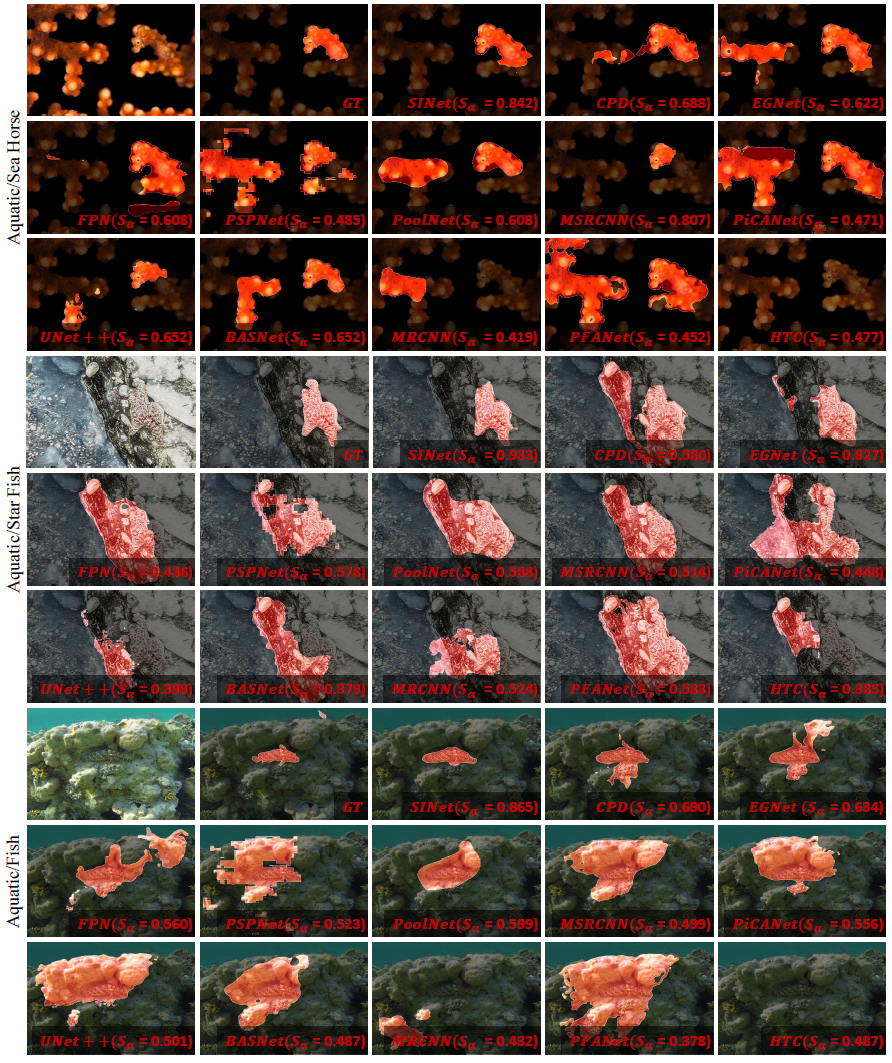

Benchmark

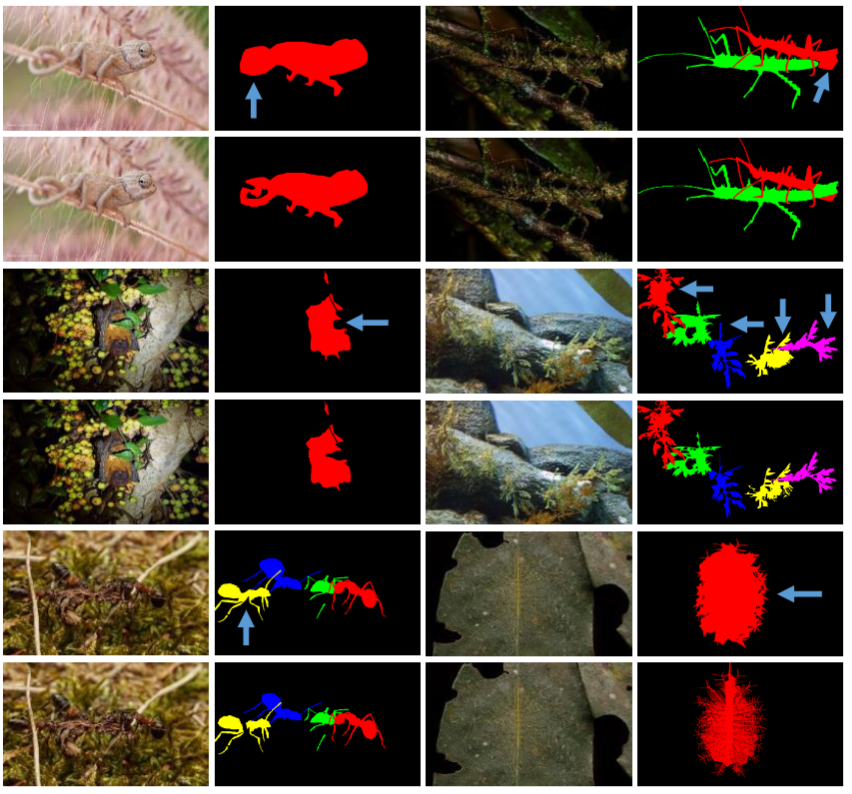

Qualitative Comparisons Against SOTAs.

Citation

Deng-Ping Fan, Ge-Peng Ji, Ming-Ming Cheng, Ling Shao. Concealed Object Detection. TPAMI, 2022. [PDF] [Chinese version] [Github] [COD10K Dataset]

Deng-Ping Fan, Ge-Peng Ji, Guolei Sun, Ming-Ming Cheng, Jianbing Shen, Ling Shao. Camouflaged Object Detection. CVPR, 2020. [PDF] [Chinese version] [Github]

Bibtex

@article{fan2022concealed,

author={Fan, Deng-Ping and Ji, Ge-Peng and Cheng, Ming-Ming and Shao, Ling},

title={Concealed object detection},

journal={IEEE Transactions on Pattern Analysis and Machine Intelligence},

year={2022}

}

@inproceedings{fan2020camouflaged,

author={Fan, Deng-Ping and Ji, Ge-Peng and Sun, Guolei and Cheng, Ming-Ming and Shen, Jianbing and Shao, Ling},

title={Camouflaged object detection},

booktitle={CVPR},

year={2020}

}


Polyp Segmentation

Polyp Segmentation.

Art Design

Art Design based on camouflaged images.

Search Engines

An Internet search engine without (top) and with (bottom) camouflaged object detection.

Defect Detection

Defect detection on different materials: fabric (first column), marble (second column), aluminum (third column), and wood (last column).

AR Application

Search and rescue system based on camouflaged human detection working in a disaster area.

Translation between camouflaged objects and salient objects

The translation between camouflaged and salient objects helps generate more training data for both fields.

Groups and Categories

Our camouflaged object detection dataset (COD10K) contains 10,000 images of 78 groups (69 concealed, 9 non-concealed) and 10 categories (5 camouflaged: terrestrial, atmobios, aquatic, amphibian, and other; 5 non-camouflaged: sky, vegetation, indoor, ocean, and sand), manually collected from the Internet based on our pre-designed keywords.

- Number of Images: 10,000

- Number of Groups: 78

- Number of Categories: 10

Dataset Statistics

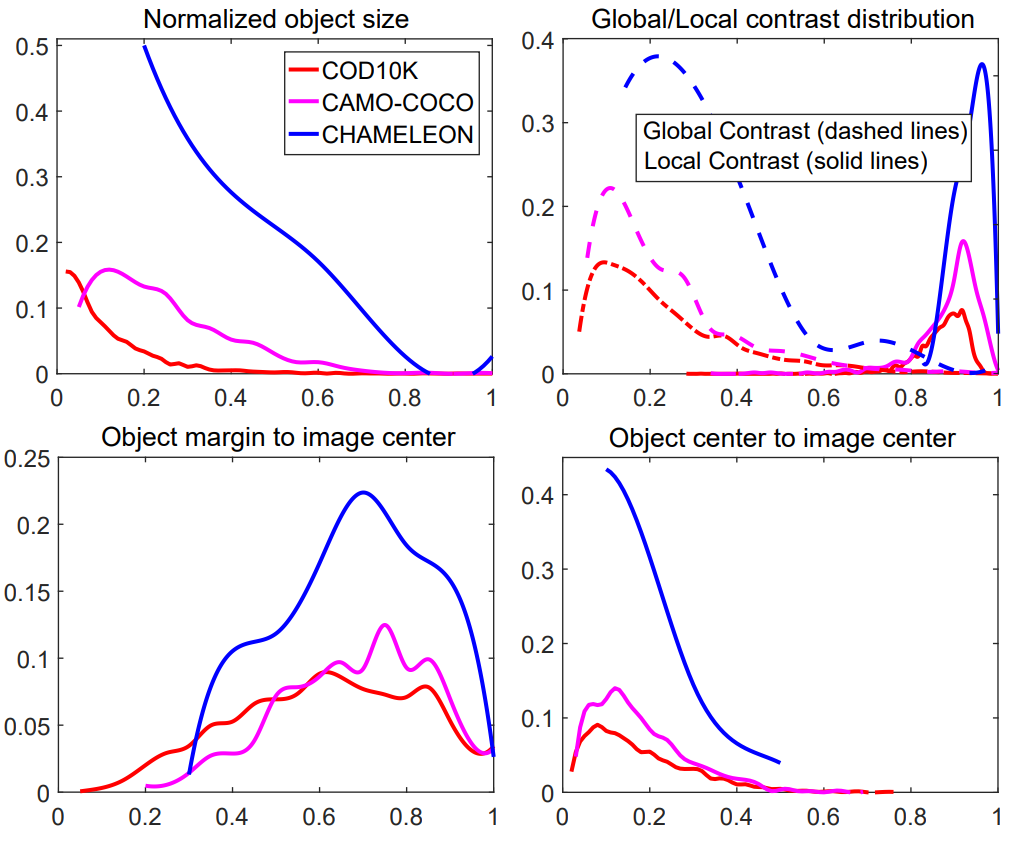

Comparison between the proposed COD10K and existing datasets. COD10K has smaller objects (top-left), contains more difficult concealed objects (top-right), and suffers from less center bias (bottom-left/right).
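Object size and center bias can both be measured from a binary object mask. The exact definitions behind the plots are not given on this page, so the sketch below is one plausible formulation under stated assumptions: size is the fraction of image pixels covered by the object, and center bias is the distance from the object centroid to the image center, normalized by half the image diagonal. The function name `object_stats` and the toy mask are hypothetical.

```python
import math

def object_stats(mask):
    """Return (area_ratio, center_offset) for a binary mask given as rows of 0/1.

    area_ratio: object pixels divided by all pixels.
    center_offset: centroid-to-image-center distance divided by half the
    image diagonal (0 means perfectly centered). Assumed definitions.
    """
    height, width = len(mask), len(mask[0])
    pixels = [(y, x) for y, row in enumerate(mask) for x, v in enumerate(row) if v]
    if not pixels:
        raise ValueError("mask contains no object pixels")
    area_ratio = len(pixels) / (height * width)
    cy = sum(y for y, _ in pixels) / len(pixels)
    cx = sum(x for _, x in pixels) / len(pixels)
    # Offsets from the image center, in pixel-index coordinates.
    dy = cy - (height - 1) / 2
    dx = cx - (width - 1) / 2
    half_diag = math.hypot(height - 1, width - 1) / 2
    center_offset = math.hypot(dy, dx) / half_diag if half_diag else 0.0
    return area_ratio, center_offset

# Toy 4x4 mask with a centered 2x2 object.
toy_mask = [
    [0, 0, 0, 0],
    [0, 1, 1, 0],
    [0, 1, 1, 0],
    [0, 0, 0, 0],
]
ratio, offset = object_stats(toy_mask)
print(ratio, offset)  # 0.25 0.0
```

Collecting these two values over every mask in a dataset would give the kind of distributions that such a comparison relies on.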

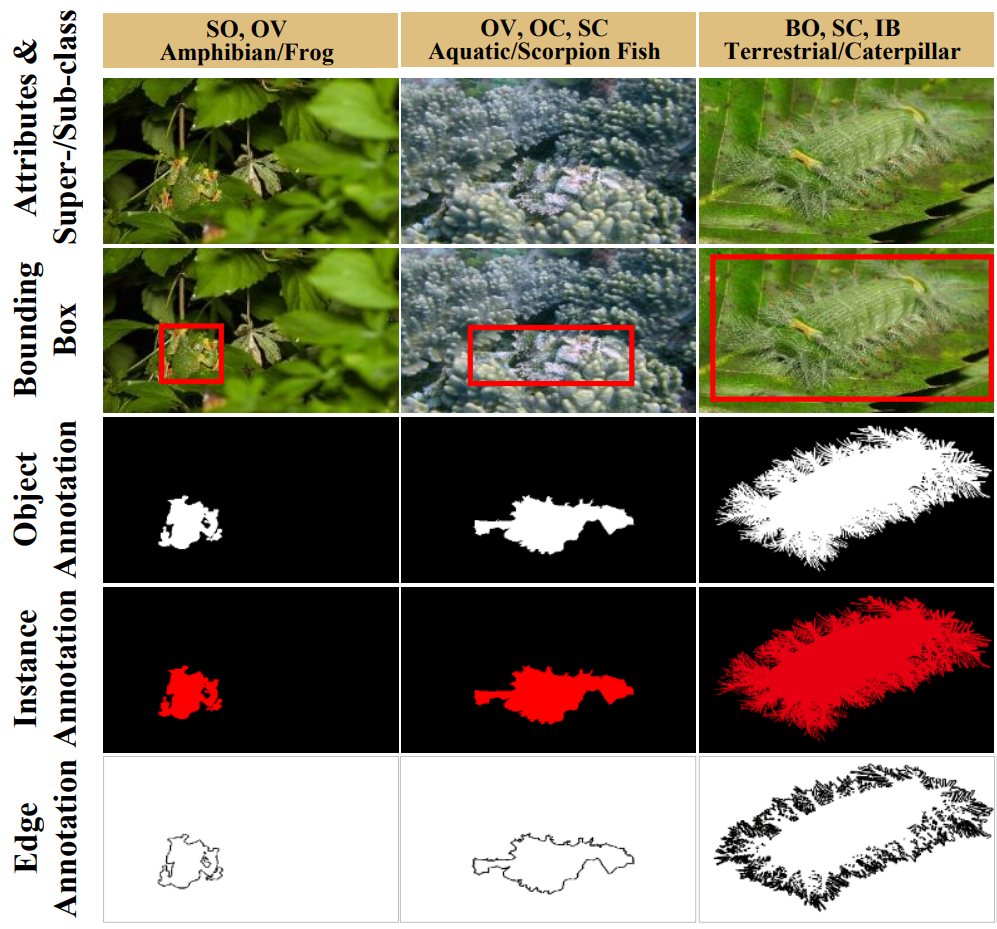

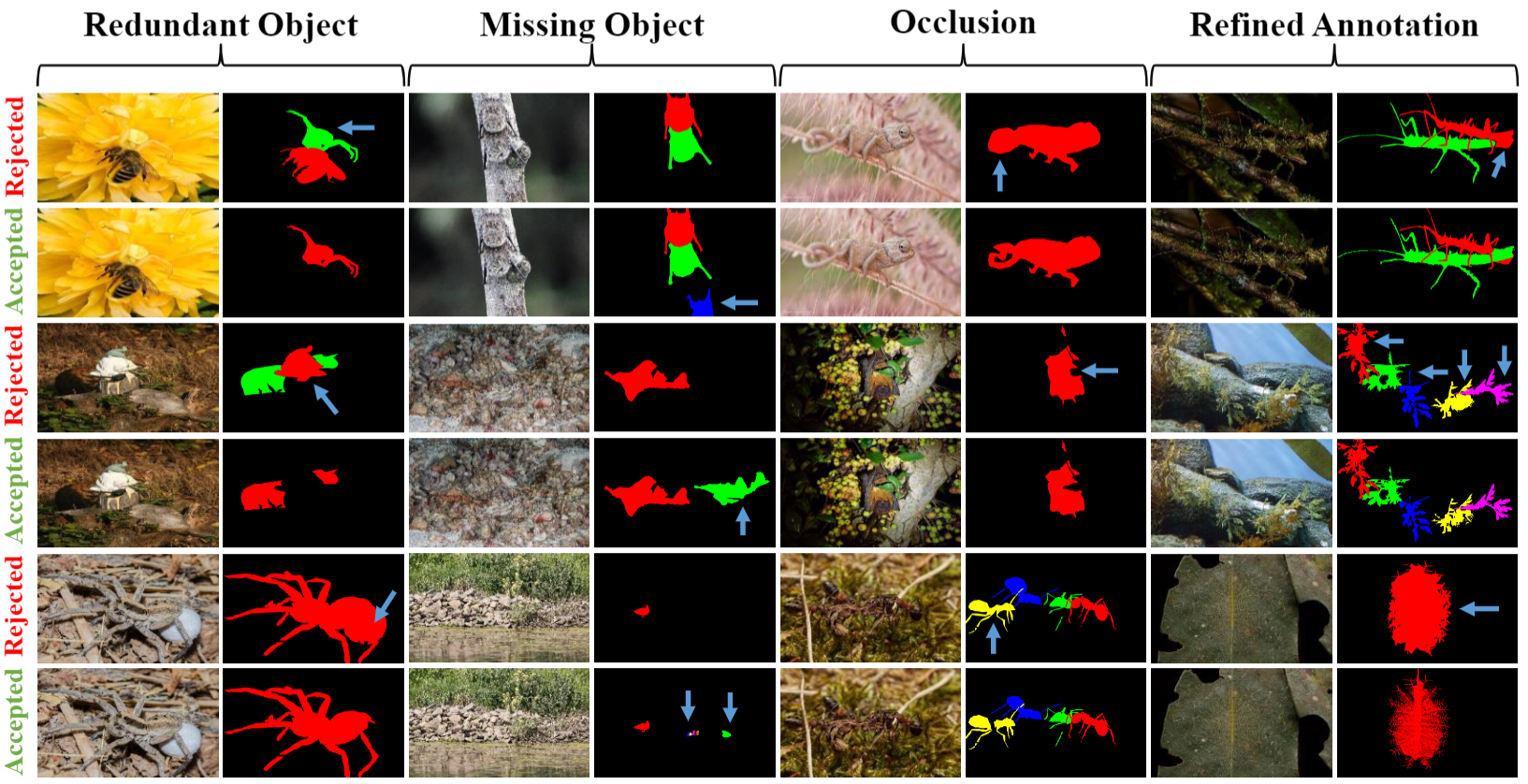

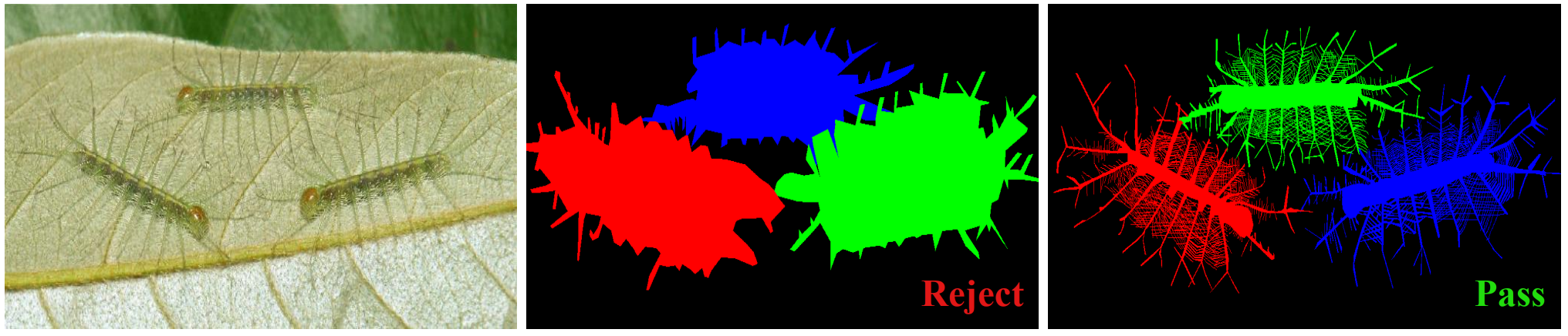

Annotation Quality

Regularized quality control during our labeling re-verification stage. We strictly adhere to four major criteria (i.e., redundant object, missing object, occlusion, and refined annotation) for rejection or acceptance, nearing the ceiling of annotation accuracy.

High-quality annotation. The annotation quality is close to the existing matting-level annotation.

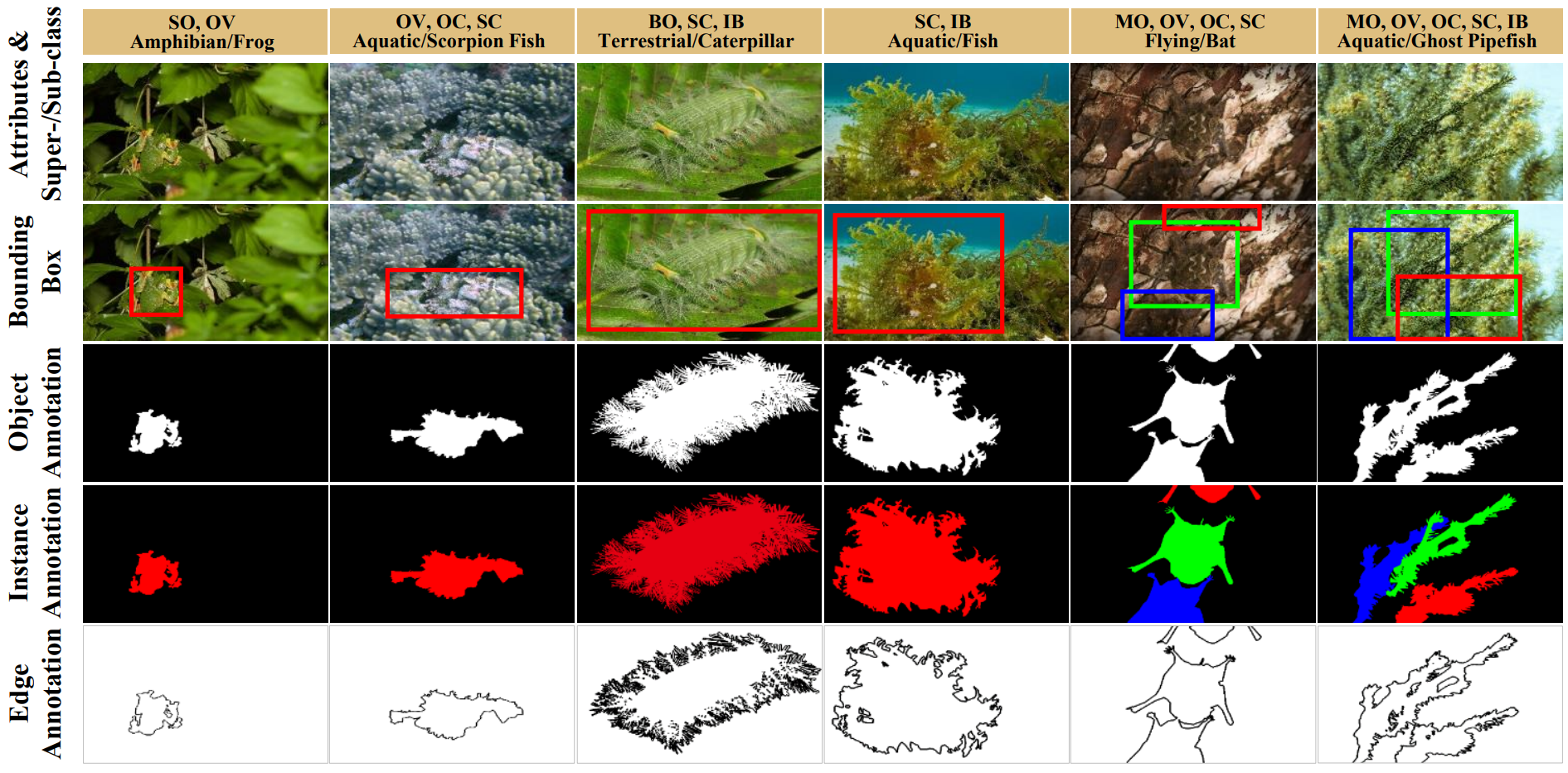

Label Diversity

Annotation diversity in the proposed COD10K dataset. Instead of only providing coarse-grained object-level annotations like in previous works, we offer six different annotations for each image, which include attributes and categories (1st row), bounding boxes (2nd row), object annotation (3rd row), instance annotation (4th row), and edge annotation (5th row).
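Several of these annotation types are related geometrically: given an instance-level mask, per-instance binary masks, bounding boxes, and an edge map can all be derived from it. The sketch below illustrates that relationship on a toy grid. It is not the authors' annotation pipeline, and the function names and the instance-ID encoding (0 for background, positive integers for instances) are assumptions made for illustration.

```python
def split_instances(instance_mask):
    """Turn an instance-ID mask (0 = background) into one binary mask per ID."""
    ids = sorted({v for row in instance_mask for v in row if v})
    return {i: [[1 if v == i else 0 for v in row] for row in instance_mask] for i in ids}

def bounding_box(mask):
    """Tight inclusive box (x_min, y_min, x_max, y_max) around nonzero pixels."""
    ys = [y for y, row in enumerate(mask) if any(row)]
    xs = [x for row in mask for x, v in enumerate(row) if v]
    if not ys:
        return None
    return min(xs), min(ys), max(xs), max(ys)

def edge_map(mask):
    """Mark object pixels that touch background or the image border (4-neighbourhood)."""
    h, w = len(mask), len(mask[0])
    edges = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            if not mask[y][x]:
                continue
            for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                if not (0 <= ny < h and 0 <= nx < w) or not mask[ny][nx]:
                    edges[y][x] = 1
                    break
    return edges

# Toy instance mask with two instances (IDs 1 and 2).
instance_mask = [
    [0, 0, 0, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 1, 1, 1, 0],
    [0, 1, 1, 1, 2],
    [0, 0, 0, 2, 2],
]
instances = split_instances(instance_mask)
boxes = {i: bounding_box(m) for i, m in instances.items()}
object_box = bounding_box(instance_mask)  # any nonzero pixel counts as object
edges_1 = edge_map(instances[1])

print(boxes)       # {1: (1, 1, 3, 3), 2: (3, 3, 4, 4)}
print(object_box)  # (1, 1, 4, 4)
```

In `edges_1`, only the center pixel of the 3x3 instance is not marked, since it is the only pixel fully surrounded by the same instance.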

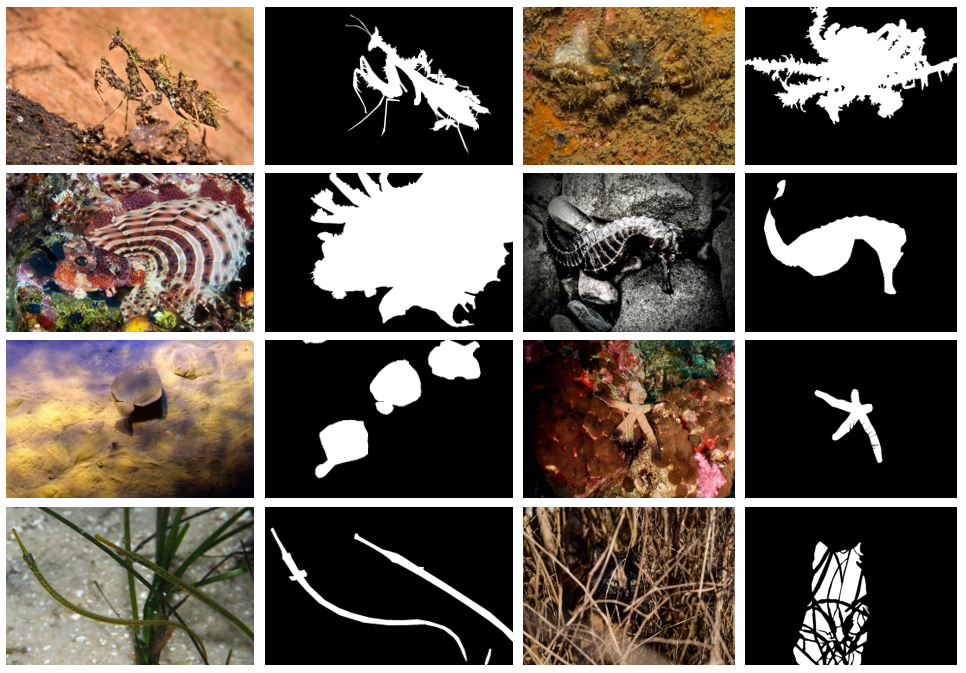

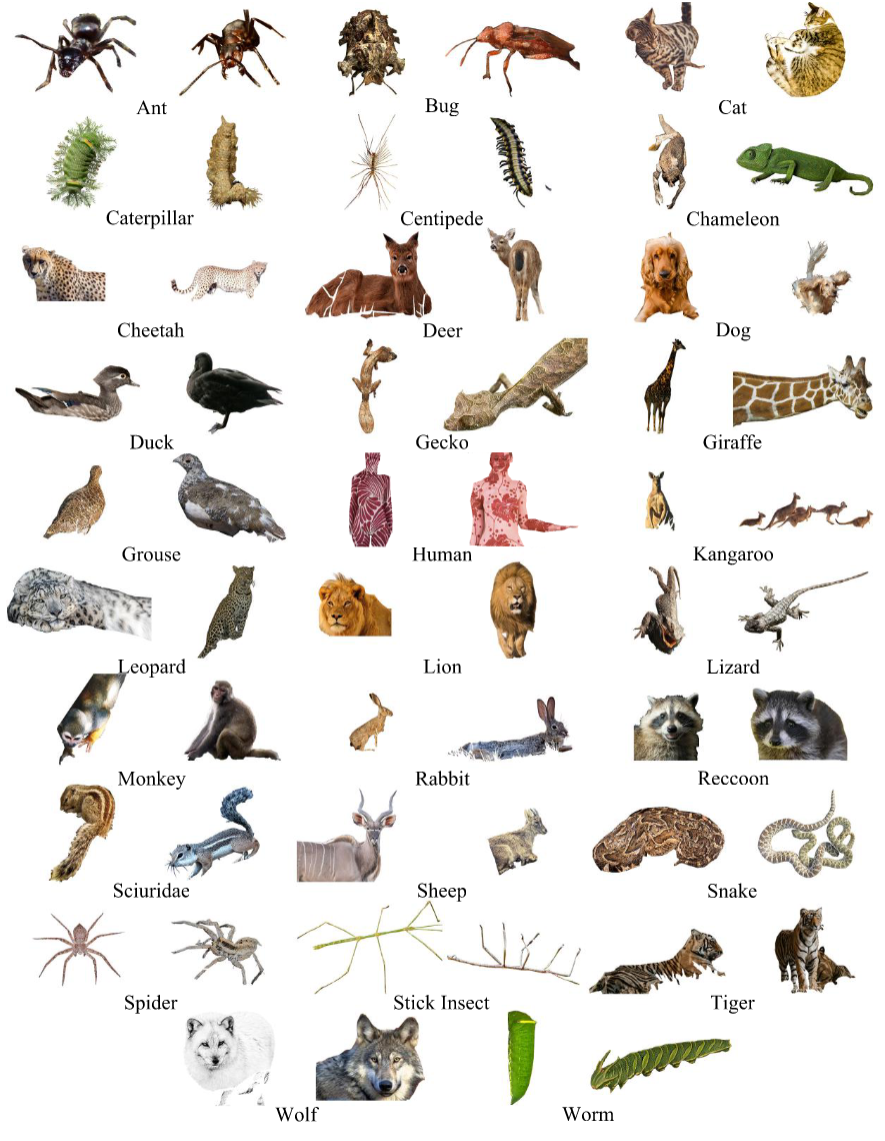

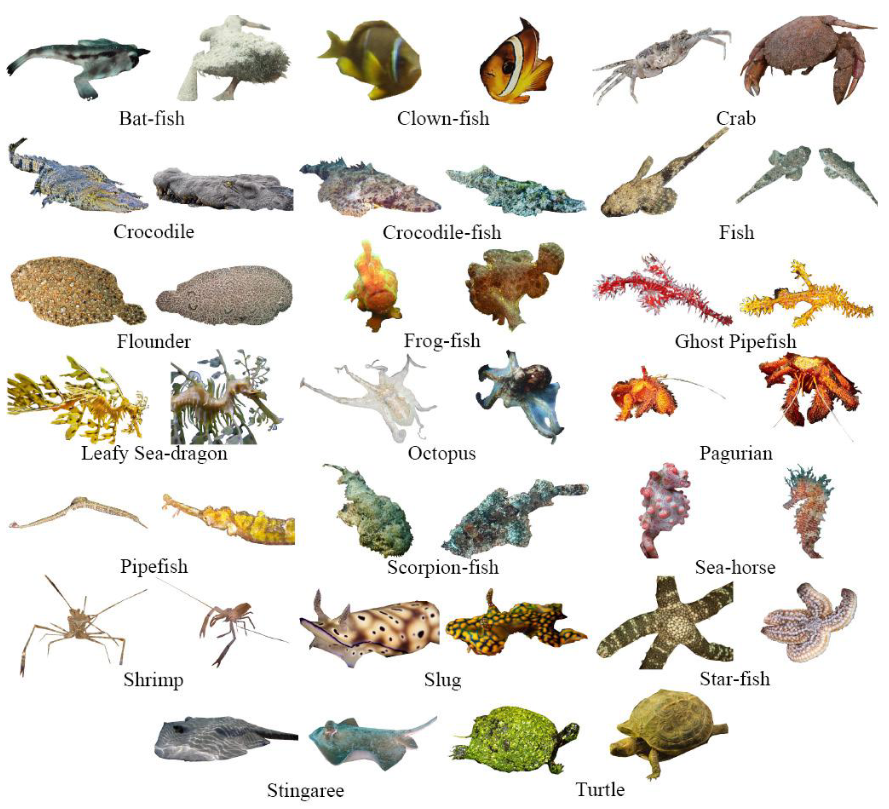

Object Diversity

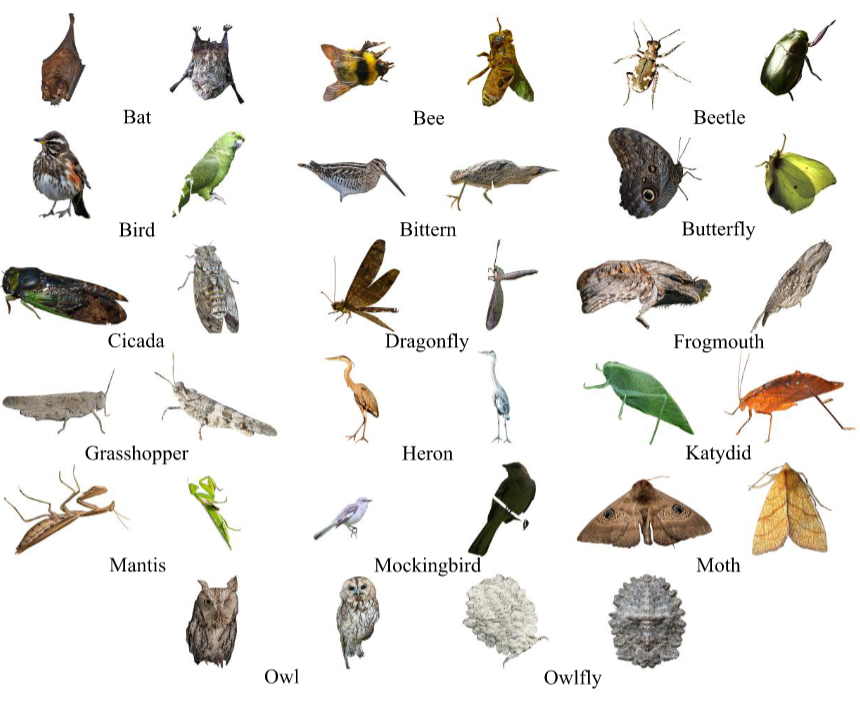

Extraction of individual samples from 29 sub-classes of our COD10K (1/5)–Terrestrial animals.

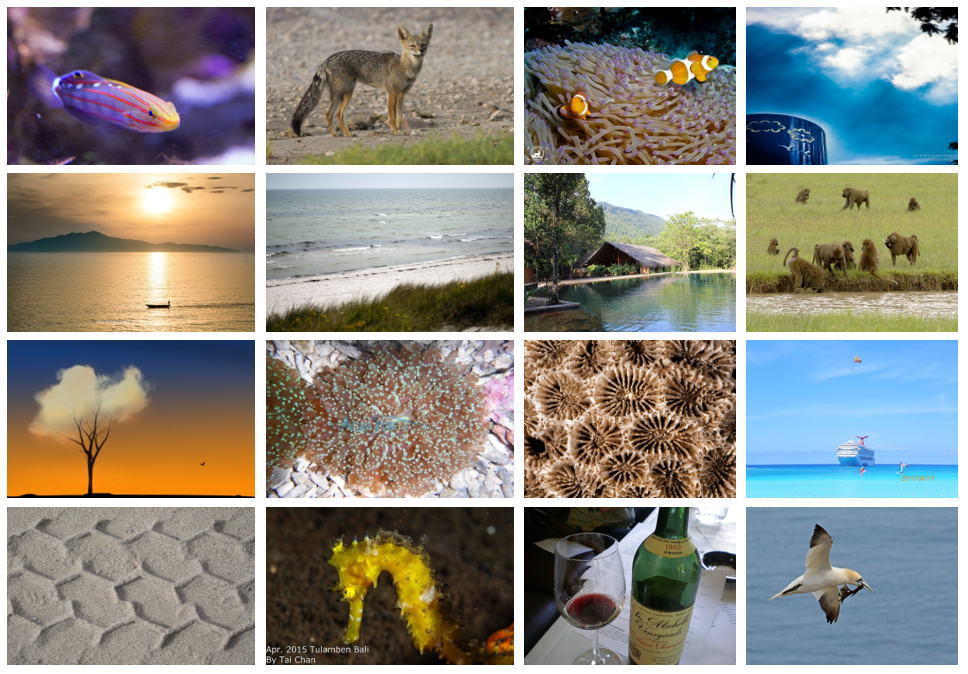

Extraction of individual samples from 20 sub-classes of our COD10K (2/5)–Aquatic animals.

Extraction of individual samples from two sub-classes of our COD10K (3/5)–Amphibians.

Extraction of individual samples from two sub-classes of our COD10K (4/5)–Flying animals.

Extraction of individual samples from two sub-classes of our COD10K (5/5)–Other animals. Note that we merge 21 classes (e.g., bear, elephant, fox, mouse, shark, sea lion, etc.) into a single sub-class because they do not have sufficient images (fewer than 5).

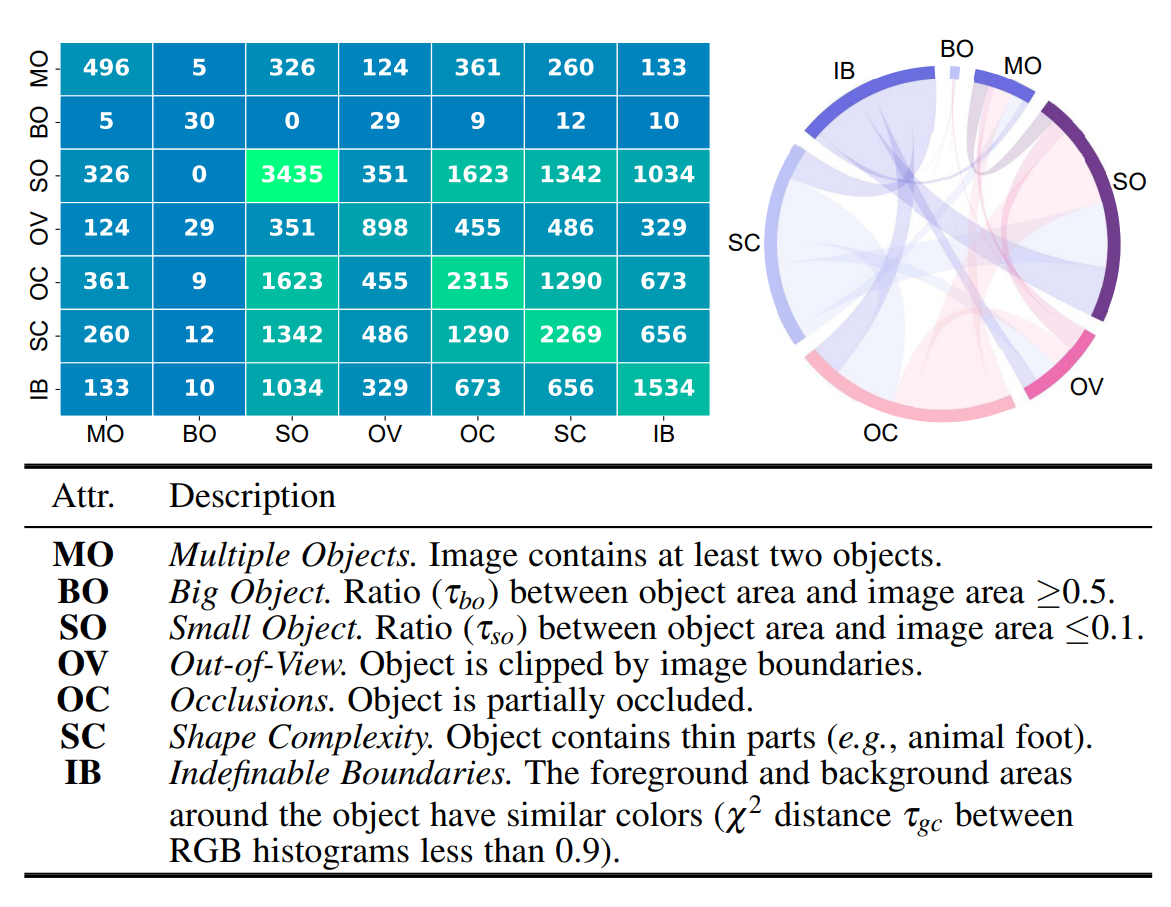

Data Attributes

Attribute distribution. Top-left: Co-attributes distribution over COD10K. The number in each grid indicates the total number of images. Top-right: Multi-dependencies among these attributes. A larger arc length indicates a higher probability of one attribute correlating to another. Bottom: attribute descriptions.
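A co-attribute grid of this kind can be built by counting, for every pair of attributes, how many images carry both. The sketch below shows the counting on made-up data: the image names and the attribute tags `A`, `B`, and `C` are placeholders, not the dataset's real attribute labels. The conditional share computed by `conditional` is only one assumed way to express how strongly one attribute goes with another.

```python
from collections import Counter
from itertools import combinations

# Hypothetical per-image attribute sets; tags are placeholders.
image_attributes = {
    "img_001": {"A", "B"},
    "img_002": {"A"},
    "img_003": {"A", "B", "C"},
    "img_004": {"C"},
}

single = Counter()  # images per attribute (diagonal of the grid)
pairs = Counter()   # images per unordered attribute pair (off-diagonal)
for attrs in image_attributes.values():
    single.update(attrs)
    pairs.update(combinations(sorted(attrs), 2))

def co_count(a, b):
    """Number of images that carry both attributes a and b."""
    if a == b:
        return single[a]
    return pairs[tuple(sorted((a, b)))]

def conditional(a, b):
    """Share of images with attribute a that also carry attribute b."""
    return co_count(a, b) / single[a]

print(co_count("A", "B"))     # 2
print(co_count("B", "C"))     # 1
print(conditional("B", "A"))  # 1.0
```

Sorting each attribute set before forming pairs keeps the pair keys order-independent, so `co_count("A", "B")` and `co_count("B", "A")` return the same value.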