NeurIPS 2025: The Science of Benchmarking Tutorial

The Science of Benchmarking

What's Measured, What's Missing, What's Next

News: slides available at benchmarking.science/slides.pdf

NeurIPS 2025 Tutorial

Tuesday, December 2, 2025, 1:30pm to 4:00pm

NeurIPS 2025, San Diego Convention Center, Exhibit Hall G, H

Martin Ziqiao Ma

University of Michigan

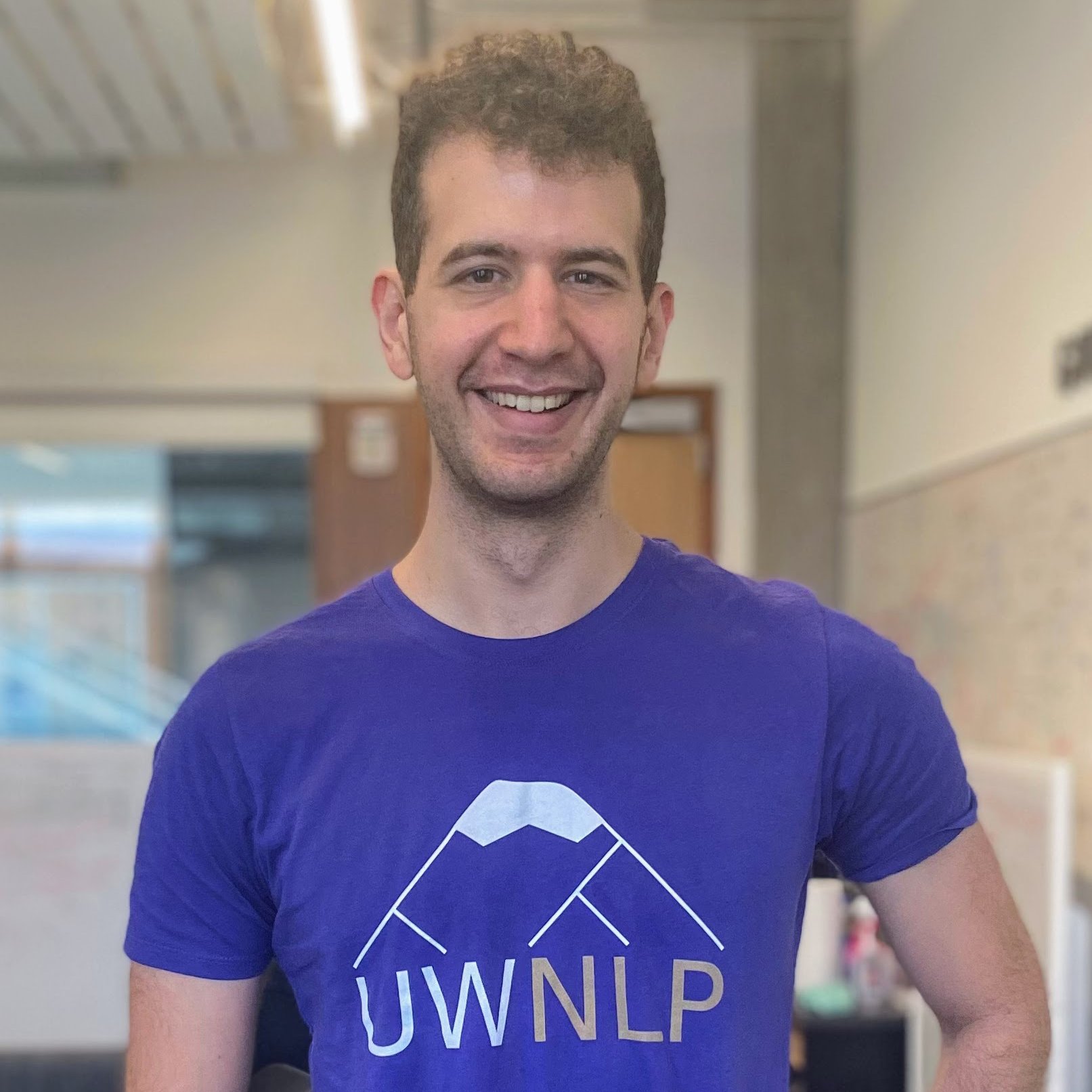

Michael Saxon

University of Washington

Xiang Yue

Carnegie Mellon University (Now @ Meta)

https://benchmarking.science

Outline

1. Epistemology, Design & Practice

What should we measure? What makes a good benchmark?

Video coming soon

2. Limitations

What are the main current issues in benchmarking? How is the changing landscape of models making benchmarks worse? How are people approaching these issues? What should attendees who want to get into evaluation know?

3. Emerging Paradigms

How are people addressing these problems? This part touches on adversarial methods, dynamic benchmarks, arenas and scaled human evals, simulators and sandboxes, and applied interpretability. What can attendees work on?

Video coming soon

4. Panel Conversation

Panelists from diverse areas will share their perspectives.