| CARVIEW |

The post AppLink Fundamentals III: Building with AppLink – Development Flow and Language Choices appeared first on Heroku.

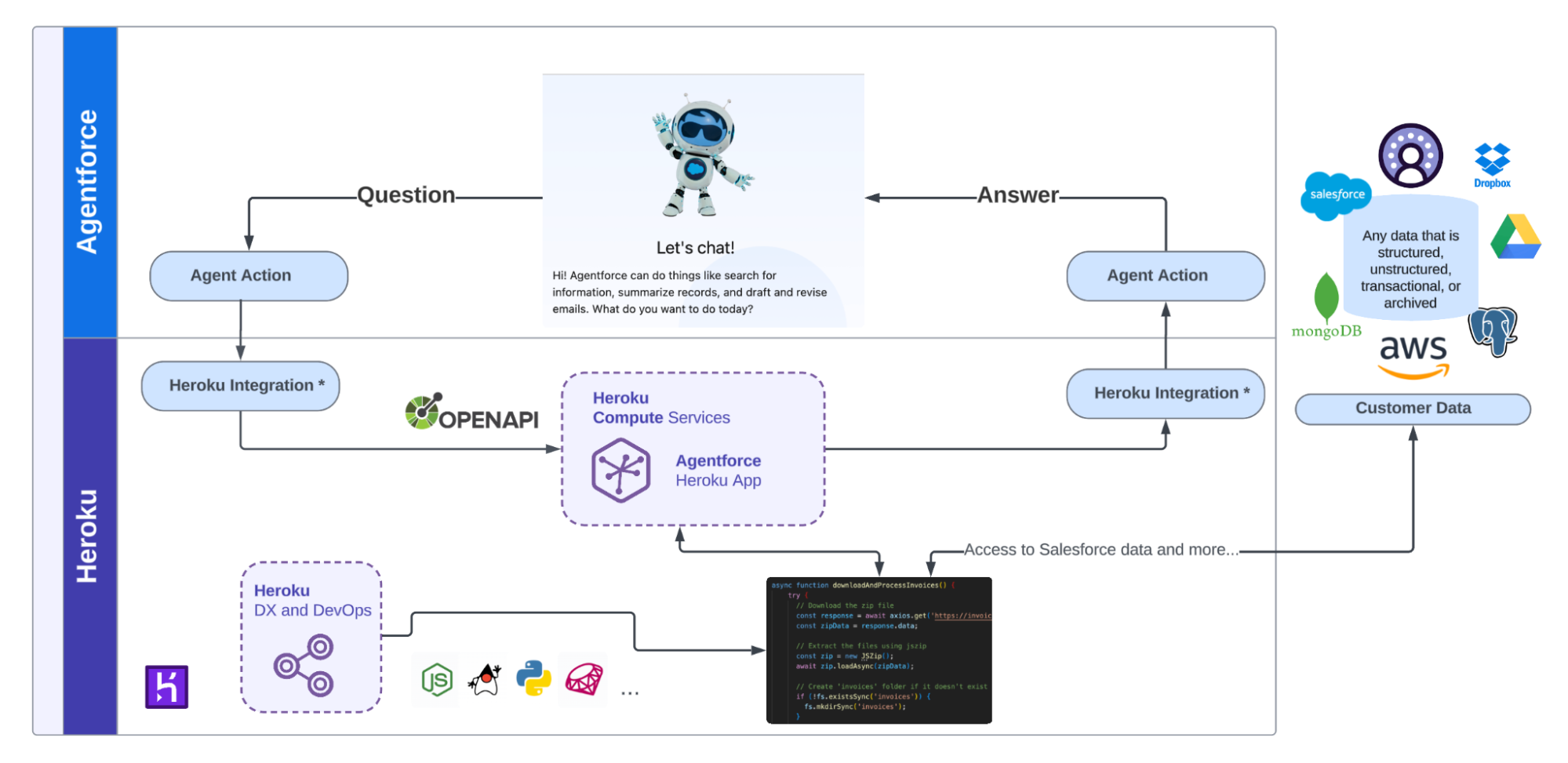

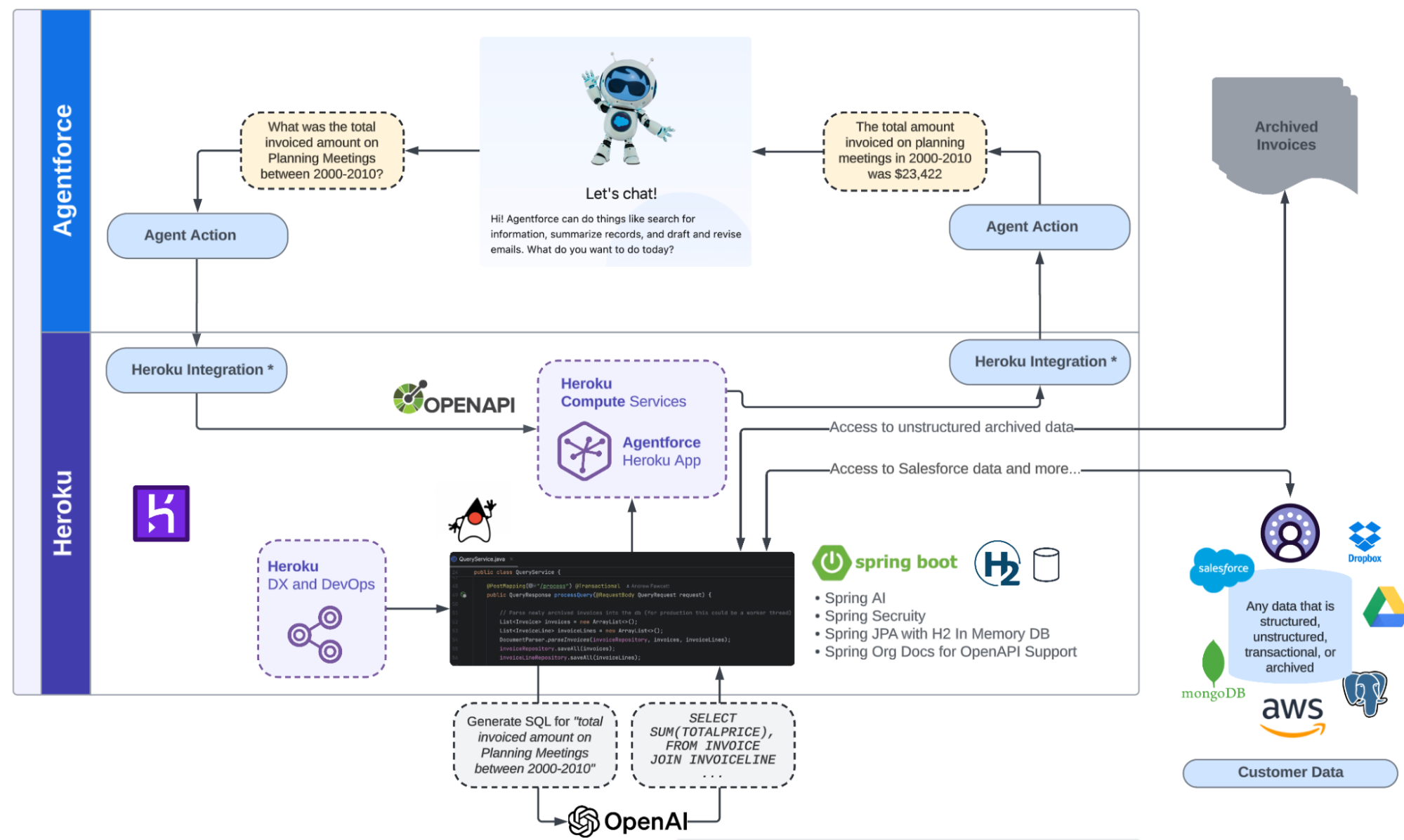

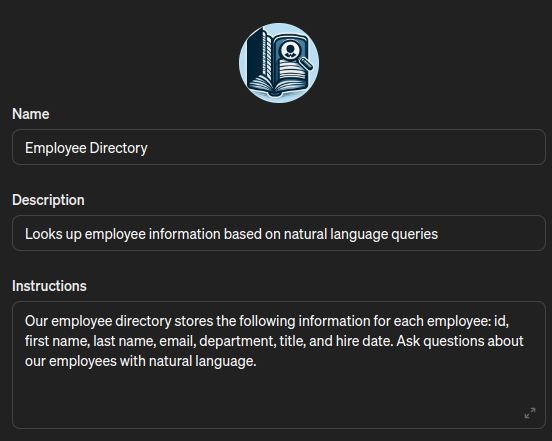

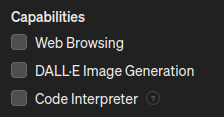

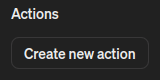

]]>Tell me more about integrating with Agentforce?

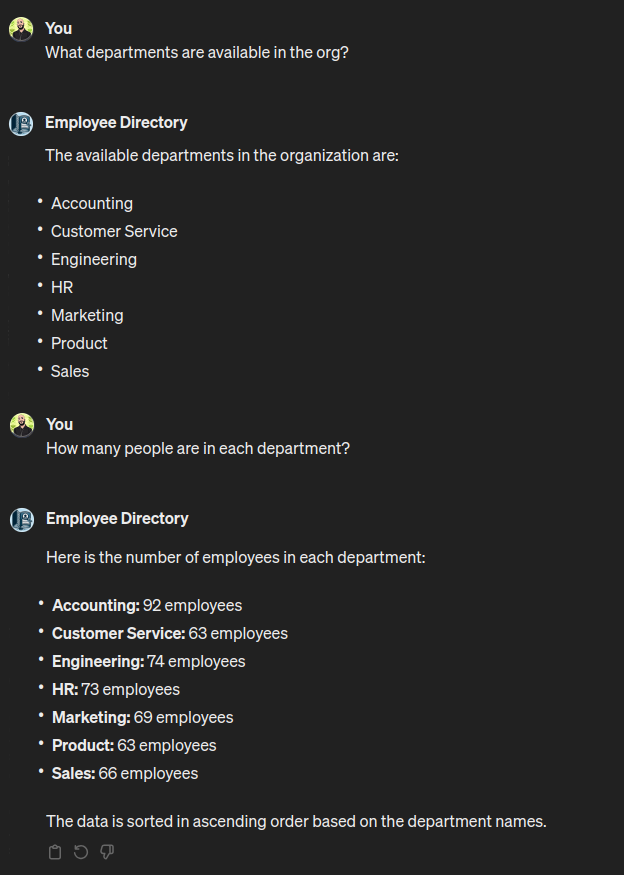

Agentforce integration leverages AppLink’s full computational flexibility to extend agent capabilities far beyond native Salesforce functionality. Due to the compute power and framework availability at developers’ disposal, agents can return rich content – not just text data – including generated images, PDFs, complex calculations, and processed data from external APIs.

The Heroku Agentforce Tutorial provides comprehensive step-by-step guidance for creating custom Agent Actions, from initial setup through production deployment.

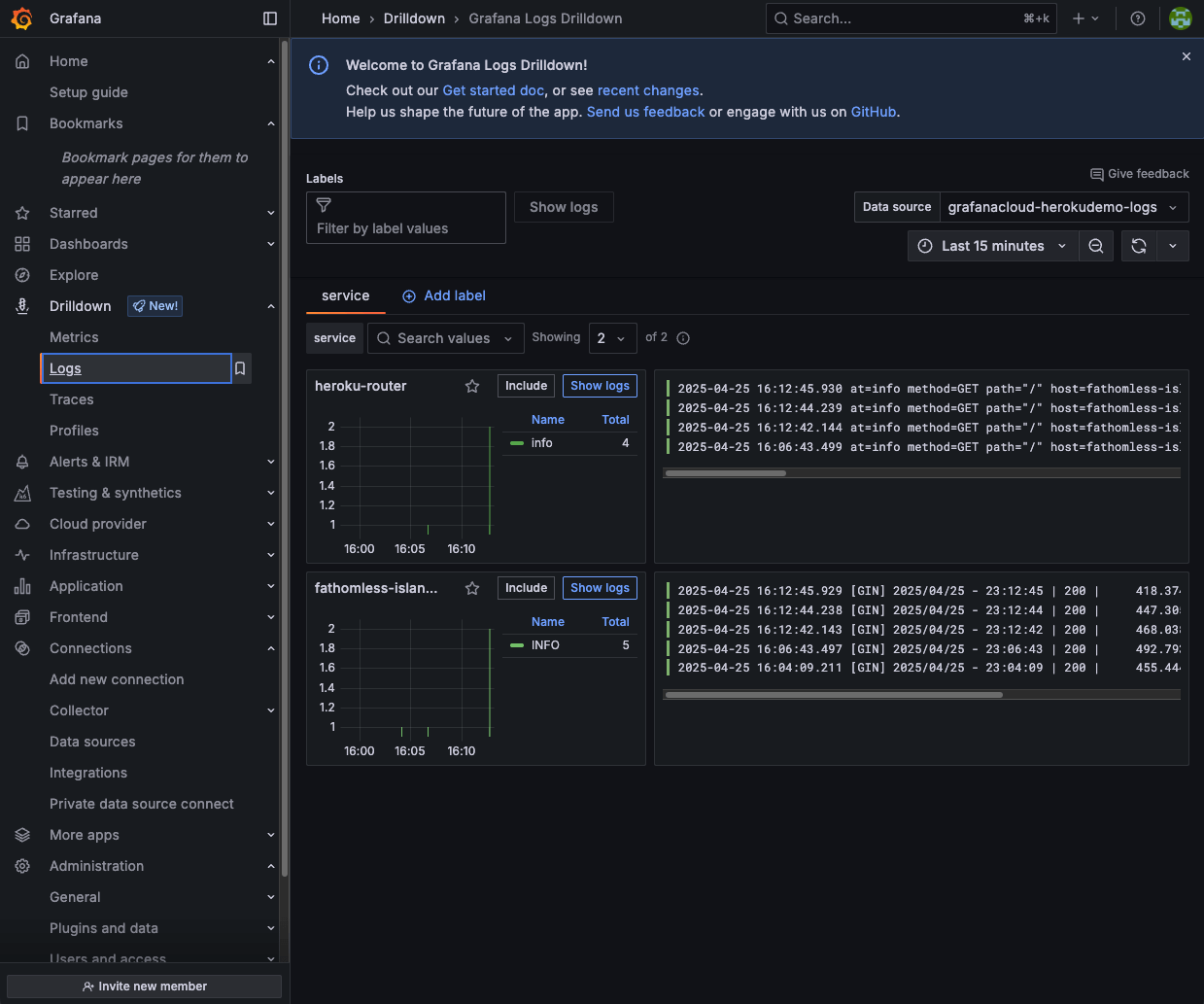

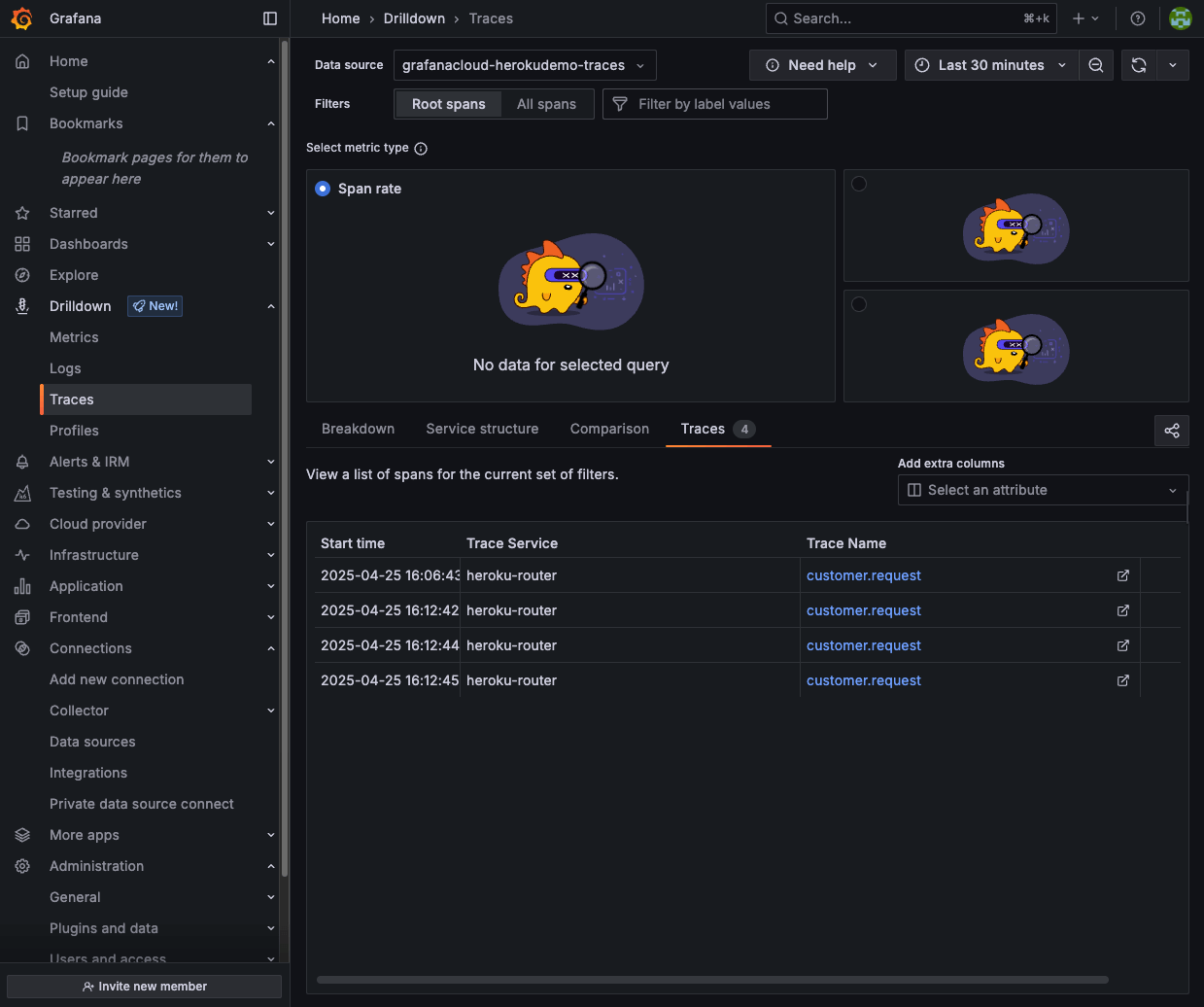

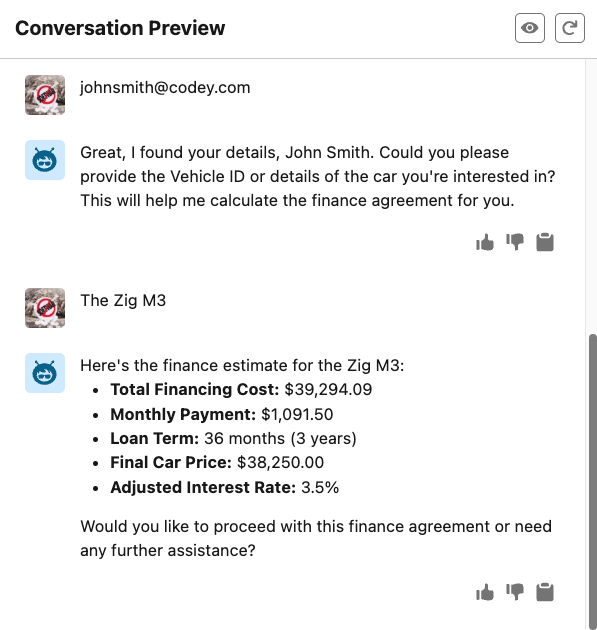

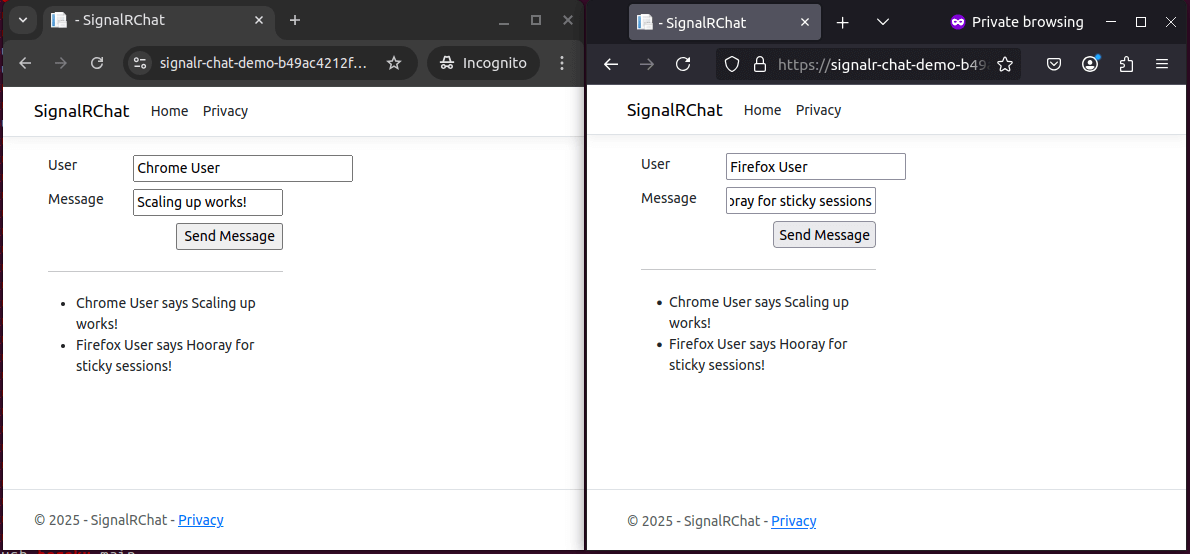

Real-world example: Automotive finance agent

Car dealership agents need to provide instant, competitive finance estimates that consider complex pricing rules, customer credit profiles, and generate professional documentation. Here’s how a Koa Cars Finance Agent performs real-time credit assessments, applies complex pricing rules, and generates professional PDF agreements:

Customer: Finance estimate request

↓

Agent: "What's your contact email?"

↓

Customer: "johnsmith@codey.com"

↓

Agent: "Which car model interests you?"

↓

Customer: "I'm interested in the Zig M3 car"

↓

Agent: Calls Heroku Finance Service

The service also automatically generates a professional PDF finance agreement:

This demonstrates AppLink’s capability to handle complex business logic including multi-tier interest rate calculations, real-time credit assessments, dynamic pricing with dealer incentives, and automatic PDF generation – all while seamlessly integrating with Salesforce CRM data.

Enhanced OpenAPI configuration for agent actions

Agentforce requires additional OpenAPI YAML attributes beyond standard external service configuration. AppLink automatically handles these specialized requirements when you include the appropriate x-sfdc agent extensions:

x-sfdc:

agent:

topic:

classificationDescription: "This API allows agents to calculate automotive finance estimates, assess credit profiles, and generate professional documentation."

scope: "Your job is to assist customers with vehicle financing by providing instant competitive estimates, applying complex pricing rules, and generating finance agreements."

instructions:

- "If the customer asks for finance estimates, collect contact email and vehicle model information."

- "Use real-time credit assessment and dealer-specific pricing rules for accurate calculations."

- "Generate professional PDF agreements and attach them to Contact records automatically."

name: "automotive_finance_topic"

action:

publishAsAgentAction: true

isUserInput: true

isDisplayable: true

privacy:

isPii: true

These extensions enable:

- Agent Topic Generation: Creates logical groupings of related actions for agent organization – agent topics help agents understand when and how to use your services

- Action Publishing: Automatically makes your endpoints available as Agent Actions within the defined topic

- User Input Configuration: Controls whether fields require additional user input

- Display Configuration: Determines which response fields are shown to users

- Privacy Controls: Enables PII handling for sensitive operations

For complete details on OpenAPI configuration for Agentforce, see Configuring OpenAPI Specification for Heroku AppLink.

Development flow

The AppLink development workflow supports rapid iteration across Salesforce environments, from scratch orgs to production deployments. Understanding the development tools and processes ensures smooth implementation and reliable deployments.

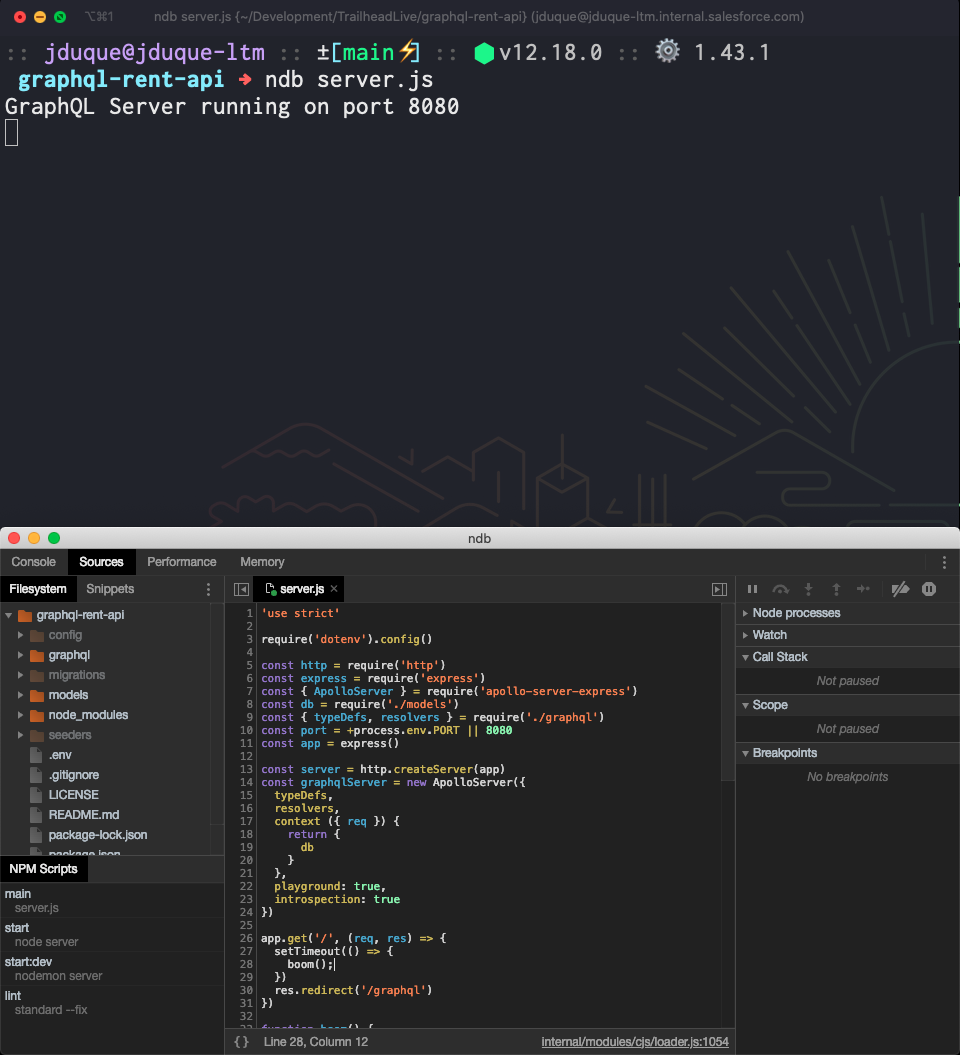

Local development

Local development with AppLink focuses on testing Pattern 2 applications (Extending Salesforce) that receive requests from Salesforce. Pattern 1 applications (API access) don’t require special local testing since they make outbound calls to Salesforce APIs directly.

The invoke.sh script (found in /bin folders of sample applications) simulates requests from Salesforce with the correct headers, enabling local development and testing before deployment. For example, see the Pattern 3 invoke.sh script for testing batch operations locally.

Usage: ./bin/invoke.sh [session-based-permission-set]

The script provides several key features for development workflow:

- User Authentication Simulation: The script uses the Salesforce CLI to extract org details including access tokens, API versions, and org identifiers. It constructs the required

x-client-contextheader with base64-encoded JSON containing authentication and context information that your application would receive from Salesforce in production. - Permission Set Testing: For applications requiring elevated permissions (user mode plus), the script supports session-based permission set activation through a third parameter. It automatically creates and removes

SessionPermSetActivationrecords, allowing developers to test permission-dependent functionality locally before deploying to environments where these permissions would be granted through Flows, Apex, or Agentforce configurations. - Request Payload Simulation: The script accepts JSON payloads as parameters, enabling testing of various request scenarios and edge cases. This capability is essential for validating business logic and error handling before moving to integration testing.

To use the invoke.sh script for local testing, authenticate with your target org using the Salesforce CLI, then execute the script with your org alias and request payload:

# Authenticate with your Salesforce org

sf org login web --alias my-org

# Install dependencies and start local development server

npm install

npm run dev # or npm start depending on your package.json scripts

# In a separate terminal, test locally with simulated Salesforce headers

./bin/invoke.sh my-org 'https://localhost:8080/api/generatequote' '{"opportunityId": "006am000006pS6P"}'

This local development workflow integrates seamlessly with your existing Node.js development tools – use nodemon for auto-reloading, your preferred debugger, and standard logging libraries. The invoke.sh script is language and framework agnostic, working with any technology stack you choose for your Heroku application.

Managing changes to the OpenAPI specification

Managing changes in the interface between your Heroku application and Salesforce requires careful attention to the OpenAPI specification file that defines your service contract. This specification serves as the single source of truth for both your application’s API endpoints and the Salesforce components that consume them.

When developing new features or modifying existing endpoints, maintaining specification alignment prevents breaking changes that could disrupt dependent Salesforce components. The OpenAPI specification defines not only the request and response schemas but also the HTTP methods, status codes, and error formats that your consuming Flows, Apex classes, or Agentforce Actions expect.

Salesforce enforces this alignment through validation during the publish process. If you attempt to publish an updated application with breaking changes to an existing specification, and there are active Apex classes, Flows, or Agentforce Actions referencing those endpoints, the publish command will fail with validation errors. This protection mechanism prevents accidental service disruptions in production environments.

For development environments where you need to iterate rapidly on service interfaces, scratch orgs provide the flexibility to start fresh when needed. However, if you’re working with persistent sandboxes or production environments, you have two options when breaking changes are necessary: either remove all references to the modified endpoints from your Flows, Apex classes, and Agentforce Actions before publishing, or use a different client name parameter in the CLI publish command to create a parallel service definition, for example --client-name MyService_v2.

Using scratch orgs

Scratch orgs represent the optimal development environment for AppLink applications, particularly when your service interfaces change frequently during development. Unlike traditional sandboxes, scratch orgs provide a clean, disposable environment that can be recreated as needed when breaking changes occur or when you need to test deployment scenarios from ground zero.

The key advantage of scratch orgs for AppLink development lies in their ability to start fresh without the complexity of cleaning up existing references, published applications, or permission configurations. When your service evolves significantly, you can create a new scratch org, configure the necessary features, and test your complete deployment pipeline without worrying about conflicts from previous iterations.

To configure a scratch org for AppLink development, you must enable the required features in your scratch org definition file. For standard Salesforce integration, include the HerokuAppLink feature in your project’s config/project-scratch-def.json:

{

"orgName": "AppLink Development",

"edition": "Developer",

"features": ["HerokuAppLink"],

"settings": {

"lightningExperienceSettings": {

"enableS1DesktopEnabled": true

}

}

}

For Data Cloud integration scenarios, also include the CustomerDataPlatform feature alongside HerokuAppLink. Once configured, create and authenticate with your scratch org using standard Salesforce CLI commands, then proceed with your AppLink connection and deployment workflow.

Scratch orgs excel in scenarios where you’re developing new integration patterns, testing permission model changes, or validating deployment automation. They provide the confidence that your deployment process works correctly from a clean state, which is essential for production readiness. While traditional sandboxes remain valuable for longer-term testing scenarios and stakeholder demonstrations, scratch orgs offer the rapid iteration cycle that modern development practices require.

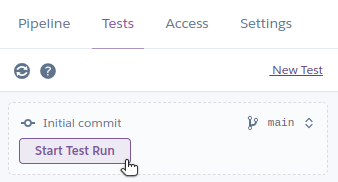

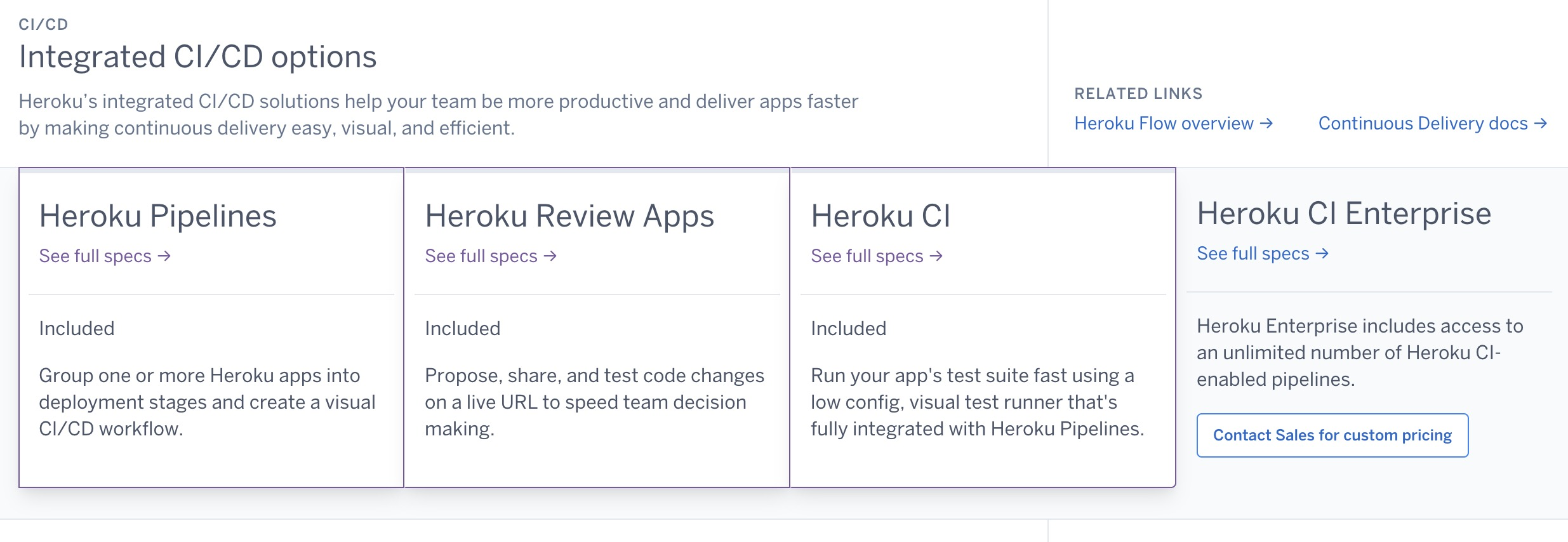

CI/CD integration

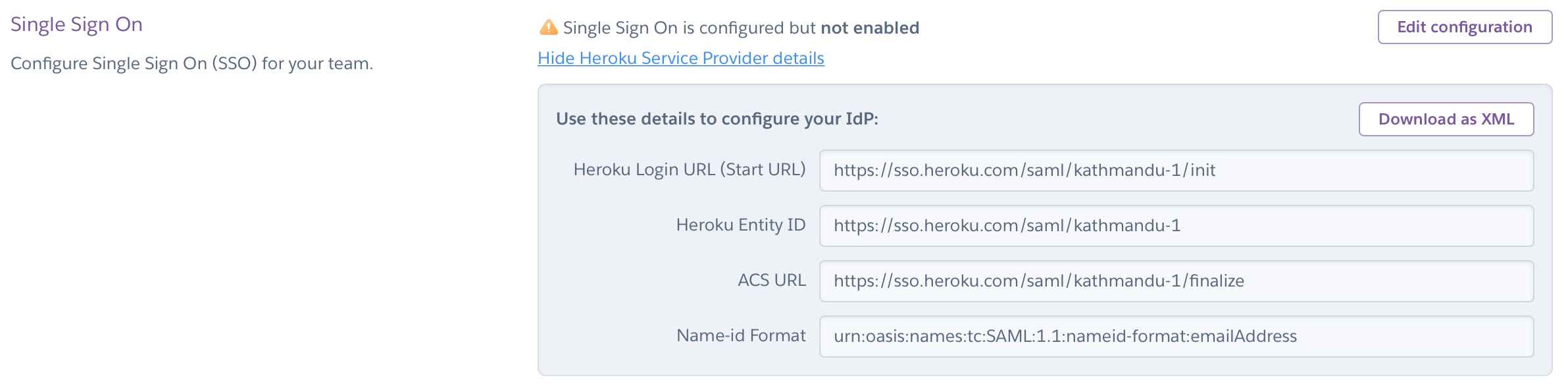

The JWT-based authentication flow (heroku salesforce:connect:jwt) integrates seamlessly with scratch org workflows, enabling automated connection setup as part of your CI/CD pipeline. This capability allows you to script complete environment provisioning, from scratch org creation through application deployment and testing, providing reproducible development environments for your entire team.

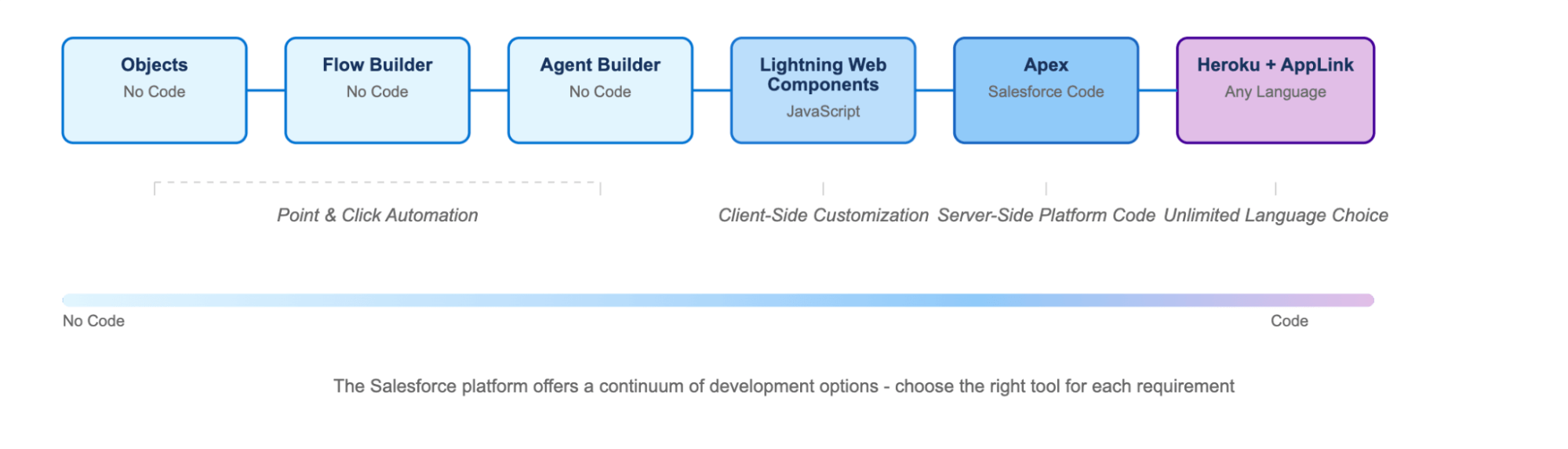

Coding language choices

When extending Salesforce functionality, choosing the right programming language depends on your specific requirements, team expertise, and operational constraints. While Apex remains a powerful option for many scenarios, AppLink opens up the entire spectrum of modern programming languages, each bringing unique capabilities and development ecosystems to your Salesforce solutions.

The following comparison helps you understand the tradeoffs between Apex and other programming languages when building Salesforce extensions, highlighting where each approach excels and the specific capabilities that become available when hosting code on Heroku using the AppLink add-on. Consider using Apex for transaction-critical operations requiring database triggers and system-level access, while leveraging AppLink and modern programming languages for computationally intensive tasks, external integrations, and scenarios where existing code investments can be preserved and extended.

| Capability | Apex | Node.js, Python, Java…* |

|---|---|---|

| Fully Managed Trusted Infrastructure |  |

|

| Extend Apex, Flow and Agentforce |  |

|

| Record Update Logic in Transaction |  |

Triggers not supported |

| Secure by Default | With Annotations |  |

| Run as User | With Annotations |  |

| Run as System | Default | Principle of Least Privilege** |

| Limits Handling | Fixed CPU Timeout, Heap and Concurrency Limits | Elastic Horizontal and Vertical Scale*** |

| Extend Existing Code Investment | N/A |  |

* Capabilities only available when hosting code on Heroku using the Heroku AppLink Add-on

** Heroku logic can leverage Session-based Permission Sets to elevate beyond user permissions

*** Salesforce API limits still apply; use Unit of Work patterns to make optimal use of updates

AppLink also enables developers with skills in your wider organization or hiring pool to contribute to Salesforce programs using languages they’re already proficient in, expanding your team’s ability to deliver sophisticated Salesforce extensions without requiring specialized Apex training.

Summary

AppLink represents a fundamental shift in how developers can extend Salesforce, breaking through traditional platform limitations to bring unlimited computational flexibility to the Salesforce ecosystem. With enterprise-grade security through User Mode authentication, seamless integration where Heroku applications appear natively within Salesforce through generated Apex classes, Flow actions, and Agentforce capabilities, and support for unlimited language choice in Node.js, Python, Java, and other languages, AppLink bridges the gap between Salesforce’s declarative power and unlimited programming flexibility.

Whether you’re extending core CRM functionality, building sophisticated agent actions, or integrating with external systems, AppLink provides the foundation for enterprise-grade Salesforce extensions using the languages and frameworks you know best.

Read More of the AppLink Fundamentals series

- AppLink Fundamentals I: AppLink Integration Patterns – Connecting Salesforce to Heroku Applications

- AppLink Fundamentals II: Advanced AppLink Integrations – Automation & AI

- AppLink Fundamentals III: Building with AppLink – Development Flow and Language Choices

The post AppLink Fundamentals III: Building with AppLink – Development Flow and Language Choices appeared first on Heroku.

]]>Extending Salesforce automation, code, and AI

Once your Heroku application is deployed and connected using AppLink, it becomes available for invocation from Apex, Flow, and Agentforce. The key to this integration is the OpenAPI specification that…

The post AppLink Fundamentals II: Advanced AppLink Integrations – Automation & AI appeared first on Heroku.

]]>

Extending Salesforce automation, code, and AI

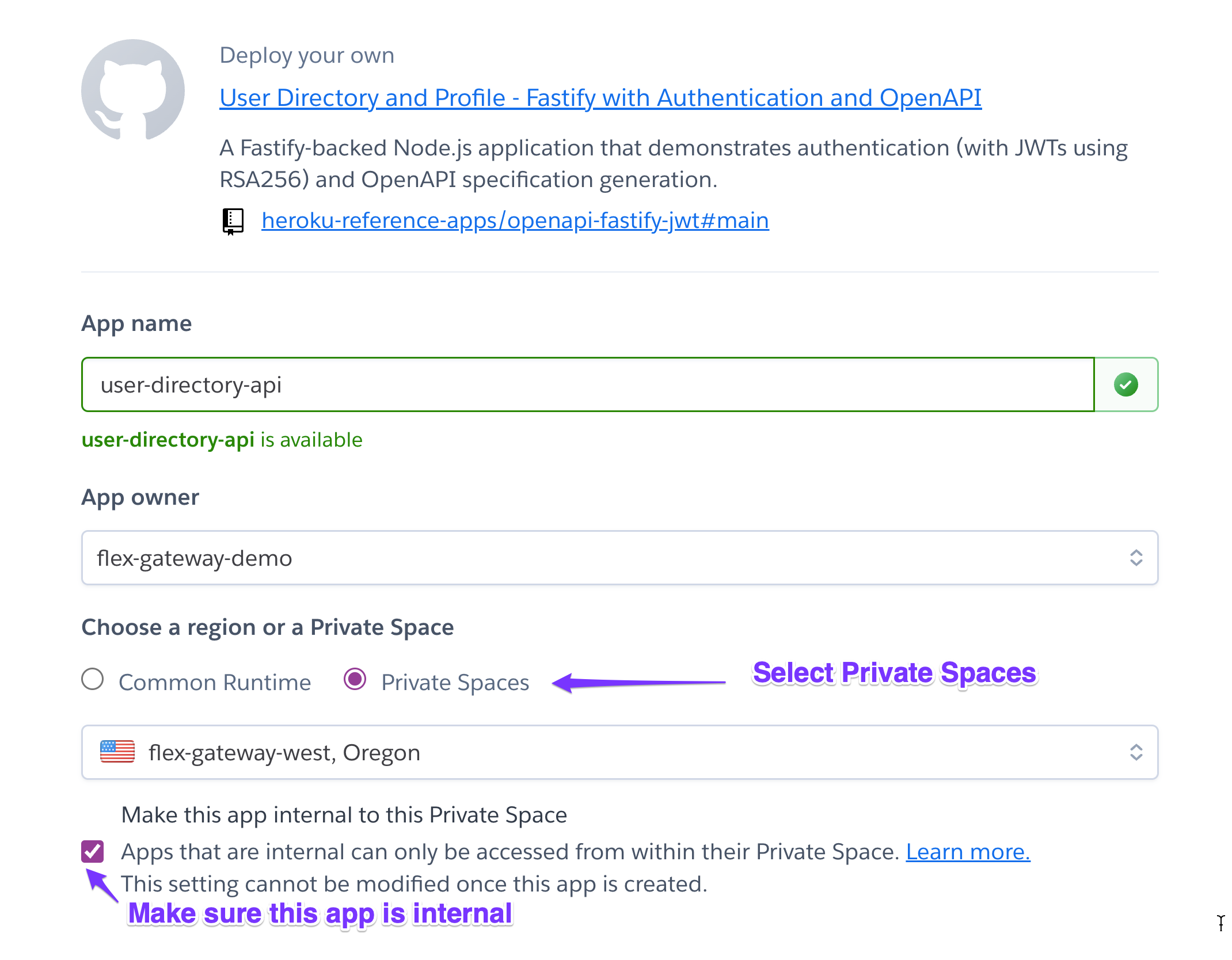

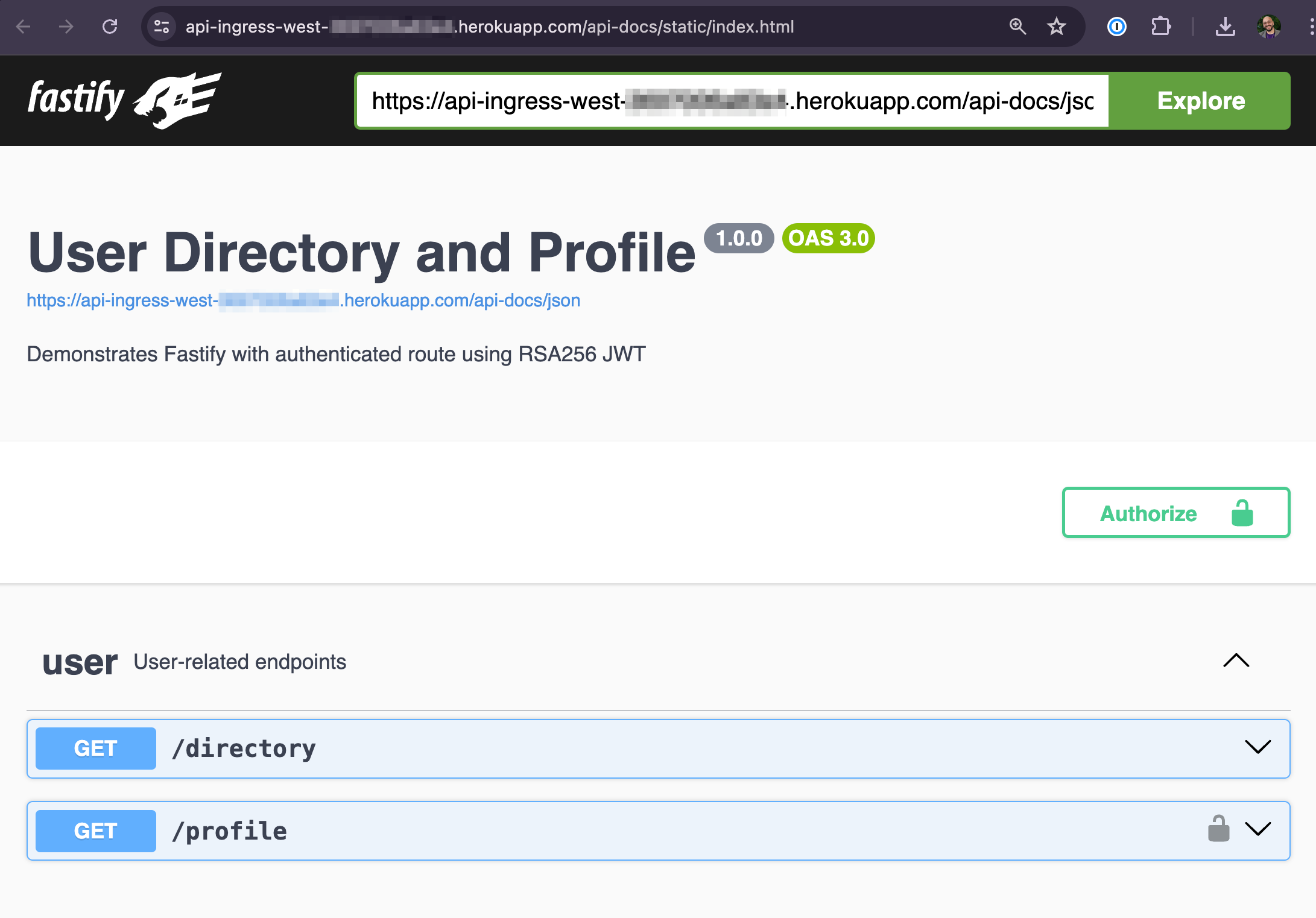

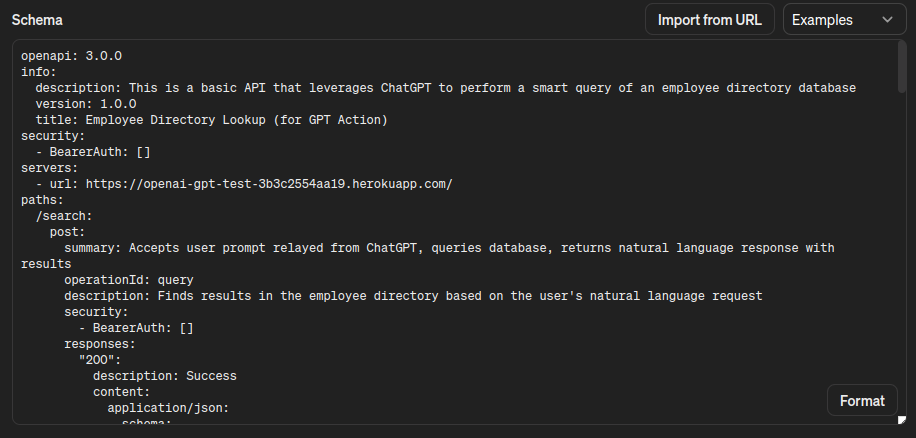

Once your Heroku application is deployed and connected using AppLink, it becomes available for invocation from Apex, Flow, and Agentforce. The key to this integration is the OpenAPI specification that describes your endpoints, enabling automatic service discovery and registration in Salesforce.

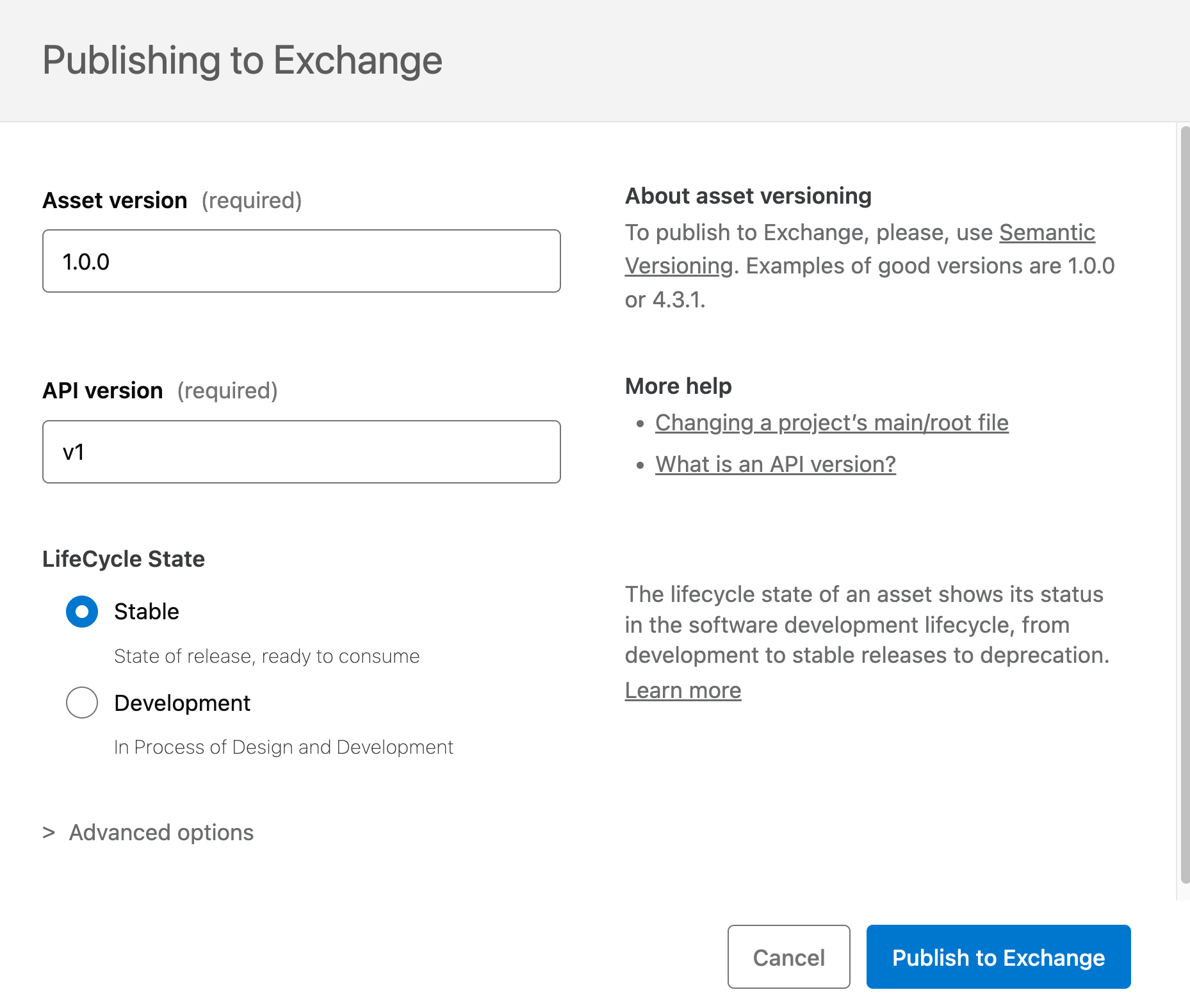

OpenAPI specification integration

AppLink uses your OpenAPI (YAML or JSON) specification to understand your service capabilities and generate the appropriate Salesforce integration artifacts. Here’s an example from the Pattern 2 sample showing how the generateQuote operation is defined:

components:

schemas:

QuoteGenerationRequest:

type: object

required:

- opportunityId

description: Request to generate a quote, includes the opportunity ID to extract product information

properties:

opportunityId:

type: string

description: A record Id for the opportunity

paths:

/api/generatequote:

post:

operationId: generateQuote

summary: Generate a Quote for a given Opportunity

description: Calculate pricing and generate an associated Quote.

requestBody:

content:

application/json:

schema:

$ref: "#/components/schemas/QuoteGenerationRequest"

x-sfdc:

heroku:

authorization:

connectedApp: GenerateQuoteConnectedApp

permissionSet: GenerateQuotePermissions

responses:

"200":

description: OK

content:

application/json:

schema:

$ref: "#/components/schemas/QuoteGenerationResponse"

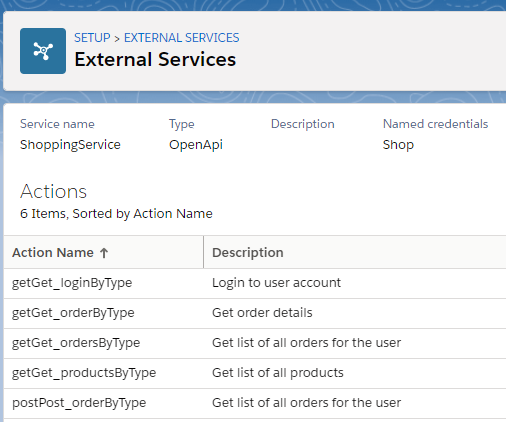

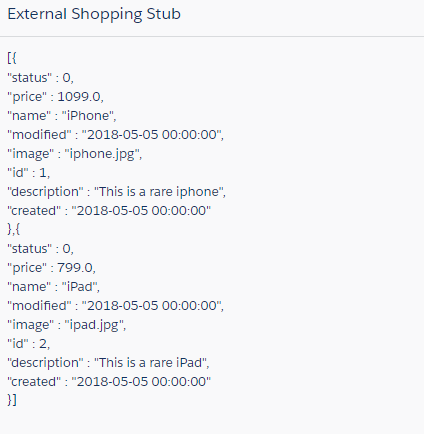

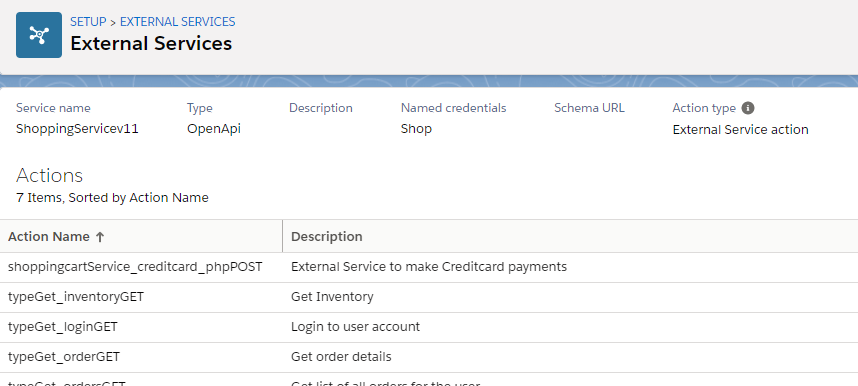

The x-sfdc section contains Salesforce-specific metadata that AppLink uses to configure authentication and permissions. When you run heroku salesforce:publish, this specification becomes:

- Generated Apex classes with strongly-typed request/response objects

- External Service definitions visible in Flow Builder’s Action palette

- Agent Actions available for Agentforce configuration

- Connected App and Permission Set configurations for secure access

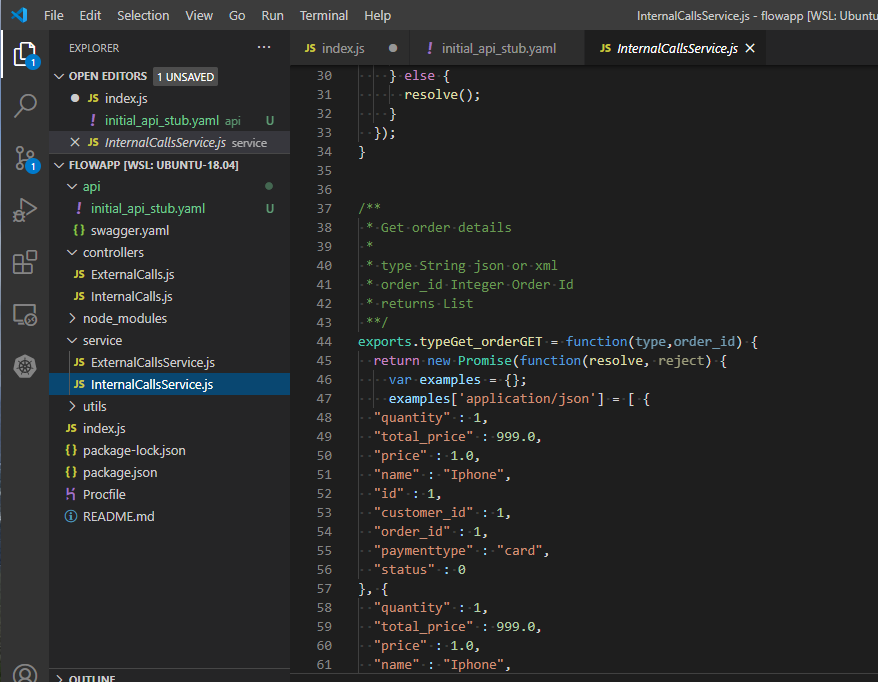

Rather than writing OpenAPI specifications manually (which can be tedious and error-prone), most AppLink samples leverage the schema definition features already built into popular Node.js frameworks. The Pattern 2 sample uses Fastify’s schema system to automatically generate the specification, but similar approaches work with Express.js using libraries like swagger-jsdoc or express-openapi:

// From heroku-applink-pattern-org-action-nodejs/src/server/routes/api.js

const quoteGenerationSchema = {

operationId: 'generateQuote',

summary: 'Generate a Quote for a given Opportunity',

'x-sfdc': {

heroku: {

authorization: {

connectedApp: 'GenerateQuoteConnectedApp',

permissionSet: 'GenerateQuotePermissions'

}

}

},

body: { $ref: 'QuoteGenerationRequest#' },

response: {

200: { schema: { $ref: 'QuoteGenerationResponse#' } }

}

};

This approach ensures your API documentation stays synchronized with your implementation while providing the metadata Salesforce needs for seamless integration.

Invoking from Apex

AppLink enables both synchronous and asynchronous invocation from Apex:

Synchronous Invocation: Your Heroku service appears as a generated Apex class that you can invoke directly:

// From heroku-applink-pattern-org-action-nodejs sample

HerokuAppLink.GenerateQuote service = new HerokuAppLink.GenerateQuote();

HerokuAppLink.GenerateQuote.generateQuote_Request request = new HerokuAppLink.GenerateQuote.generateQuote_Request();

HerokuAppLink.GenerateQuote_QuoteGenerationRequest body = new HerokuAppLink.GenerateQuote_QuoteGenerationRequest();

body.opportunityId = '006SB00000DItEfYAL';

request.body = body;

System.debug('Quote Id: ' + service.generateQuote(request).Code200.quoteId);

The generated classes handle authentication, serialization, and HTTP communication automatically. Synchronous calls are subject to Apex callout limits and timeout constraints.

Asynchronous Invocation with Callbacks: For long-running operations beyond Apex governor limits, AppLink supports asynchronous processing with callback handling. This requires additional OpenAPI specification using the standard callbacks definition to define the callback endpoint that Salesforce will invoke when processing completes.

This pattern enables background processing workflows where your Heroku application can perform extensive calculations or external API integrations without blocking the Salesforce user interface. For detailed callback configuration examples, see Getting Started with AppLink and Pattern 3: Scaling Batch Jobs.

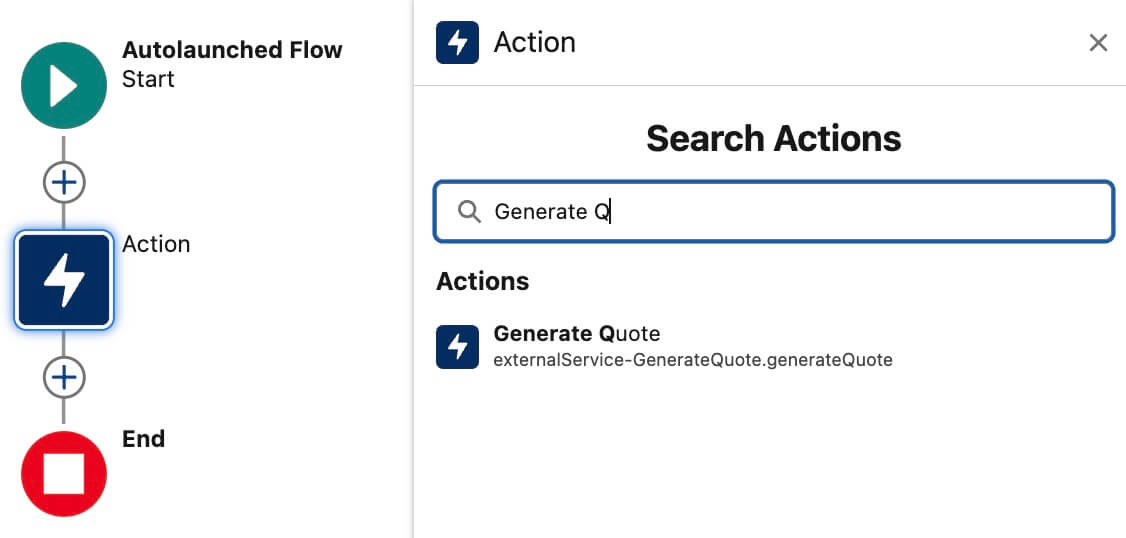

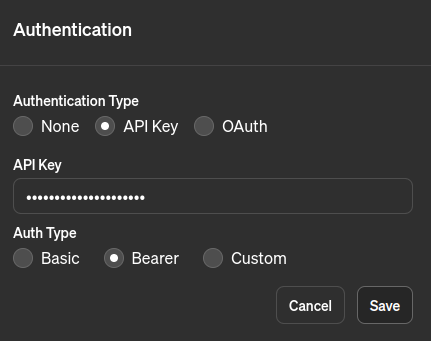

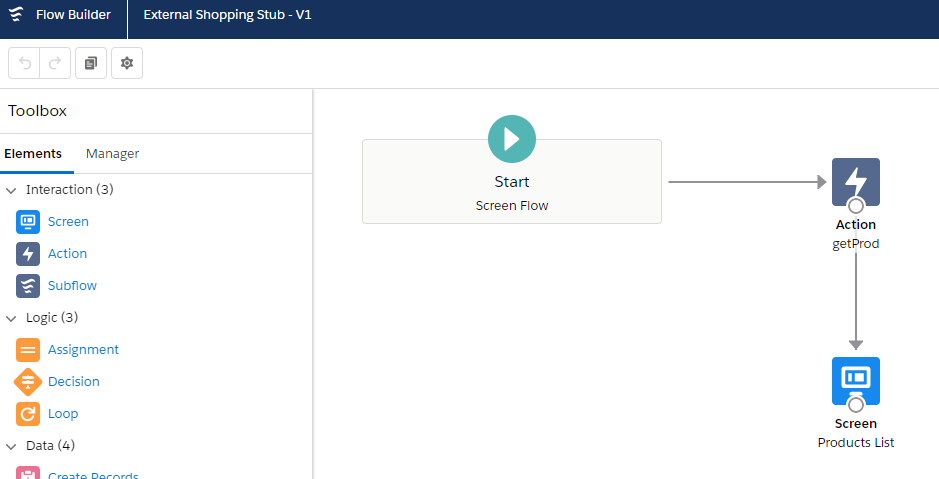

Invoking from Flow

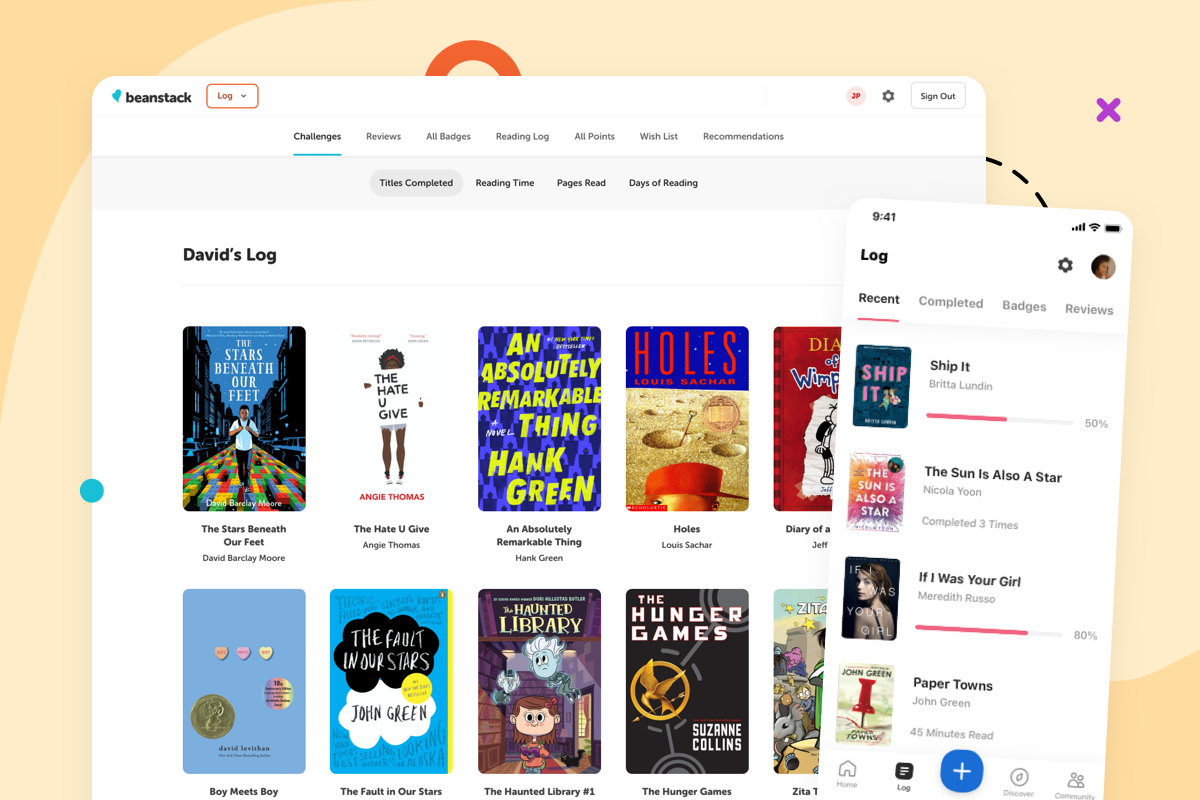

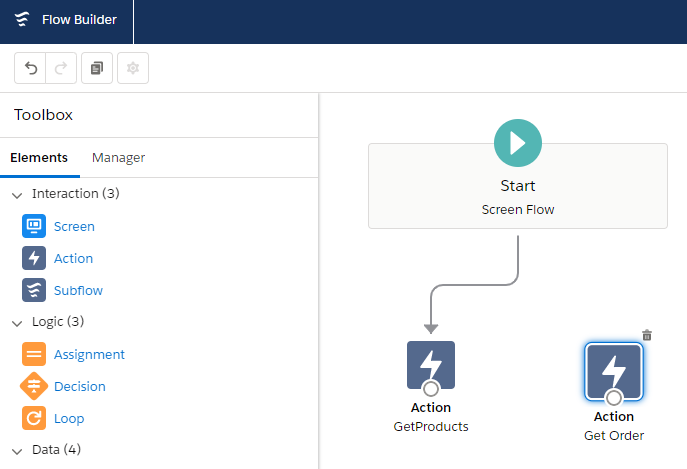

Flow Builder provides no-code access to your Heroku applications through External Service Actions. After publishing your service, it appears automatically in the Action palette:

Flow Builder integration

Flow developers can drag your Heroku operation onto the canvas, configure input variables, and capture output data just like any other Flow action. This enables sophisticated business automation combining Salesforce’s declarative tools with your custom processing logic.

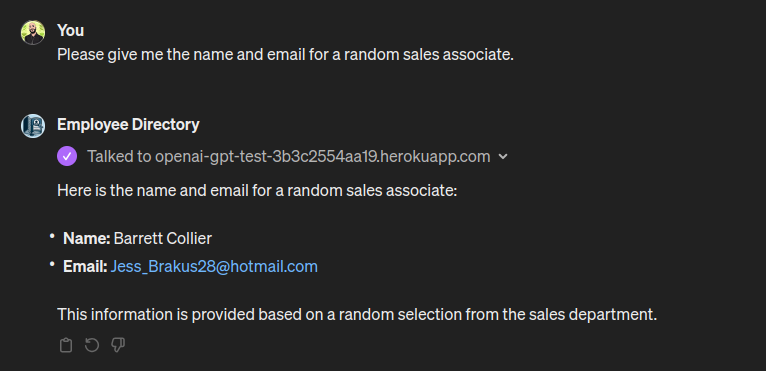

Invoking from Agentforce

Agentforce leverages your Heroku applications as Agent Actions organized within Agent Topics through the enhanced OpenAPI configuration detailed in the dedicated Agentforce section below. Once configured with the appropriate x-sfdc agent extensions, agents can automatically invoke your Heroku endpoints to fulfill user requests requiring specialized processing, external API calls, or complex calculations beyond native Salesforce capabilities.

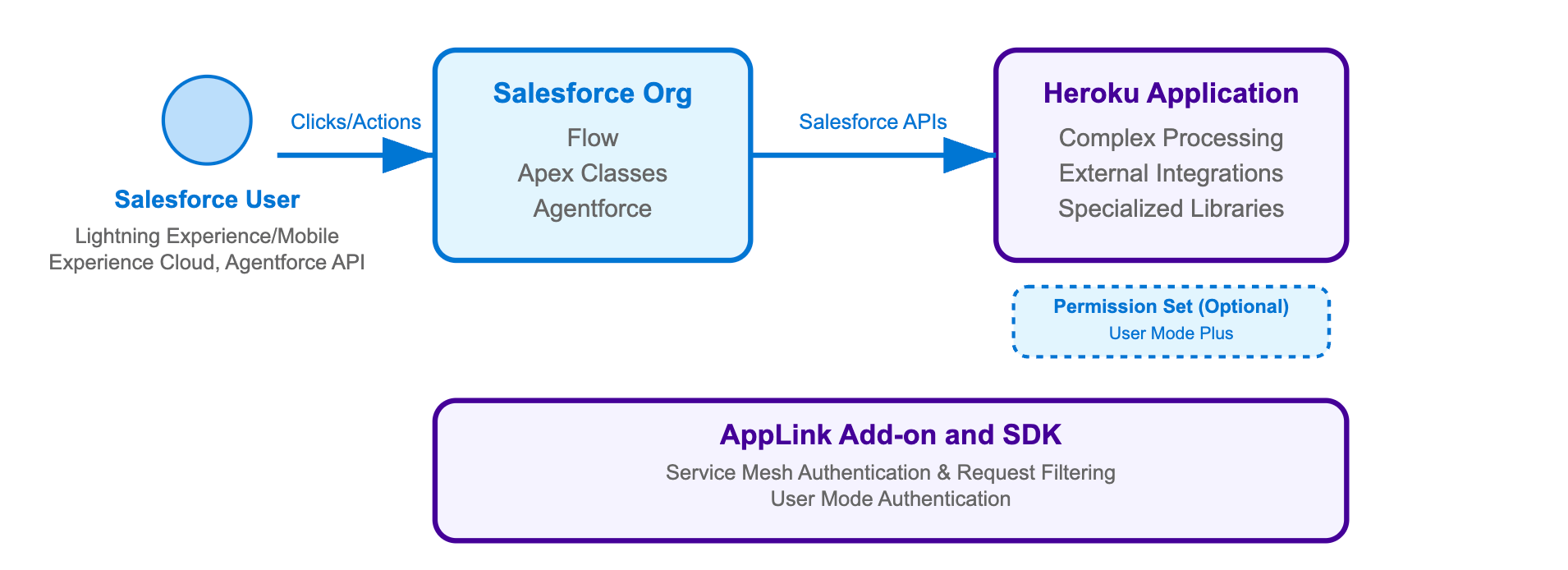

Permission elevation and security

AppLink operates using User Mode authentication, meaning your code inherits the exact permissions of the Salesforce user who triggers the operation. This provides the most secure integration by following the principle of least privilege.

However, for scenarios where your application needs to access data or perform operations beyond the triggering user’s permissions, AppLink supports elevated permissions (known as “user mode plus” in the main documentation) through Permission Sets. This optional advanced feature allows administrators to grant specific additional permissions that are activated exclusively during code execution.

For example, your Heroku application might need to access sensitive discount override fields that regular users cannot see, or create records in objects where users have read-only access. The Permission Set approach ensures these elevated permissions are:

- Explicitly defined and administrator-controlled

- Only active during Heroku application execution

- Visible through standard Salesforce Permission Set management

- Applied temporarily without changing the user’s permanent permissions

For detailed implementation guidance including permission set configuration and testing approaches, see the Pattern 2 sample documentation.

What’s next?

This blog has explored how AppLink facilitates advanced integrations with Salesforce, extending capabilities across Data Cloud, automation with Flow and Apex, and intelligent interactions with Agentforce. We’ve seen how OpenAPI specifications streamline service discovery and how AppLink’s permission model offers granular control over elevated access.

In our final blog, we’ll shift focus to the practical aspects of the development workflow, including local testing, managing OpenAPI changes, and crucial considerations when choosing between Apex and other programming languages for your Salesforce extensions. Stay tuned for insights into building and deploying your AppLink solutions with confidence.

Read More of the AppLink Fundamentals series

- AppLink Fundamentals I: AppLink Integration Patterns – Connecting Salesforce to Heroku Applications

- AppLink Fundamentals II: Advanced AppLink Integrations – Automation & AI

- AppLink Fundamentals III: Building with AppLink – Development Flow and Language Choices

The post AppLink Fundamentals II: Advanced AppLink Integrations – Automation & AI appeared first on Heroku.

]]>Usage patterns

AppLink supports four proven integration patterns , but we'll focus on the two primary patterns that represent the main integration approaches - the other two patterns are variations of…

The post AppLink Fundamentals I: AppLink Integration Patterns – Connecting Salesforce to Heroku Applications appeared first on Heroku.

]]>

Usage patterns

AppLink supports four proven integration patterns, but we’ll focus on the two primary patterns that represent the main integration approaches – the other two patterns are variations of these same foundational concepts. Note that these patterns align with AppLink’s three official user modes: Pattern 1 corresponds to “run-as-user mode”, while Pattern 2 uses both “user mode” and “user mode plus” (for elevated permissions). Let’s explore both core patterns with their specific architectures and implementation approaches.

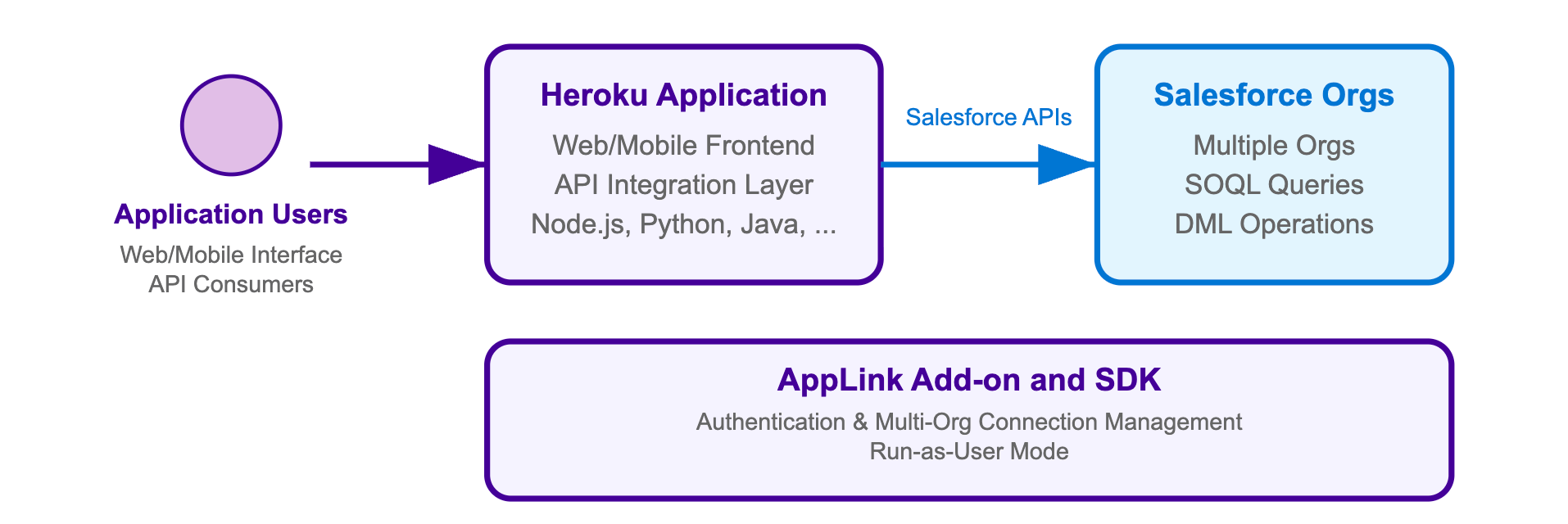

Pattern 1: Salesforce API access

This pattern uses run-as-user mode authentication, which enables system-level operations with consistent, predictable permissions by using a specific designated user’s context. This approach is ideal for automated processes and customer-facing applications that require stable permission sets and don’t depend on the triggering user’s access level. Run-as-user authorizations allow your Heroku applications to access Salesforce data across multiple orgs with the permissions of the designated user.

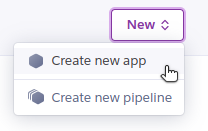

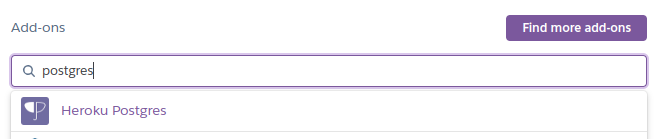

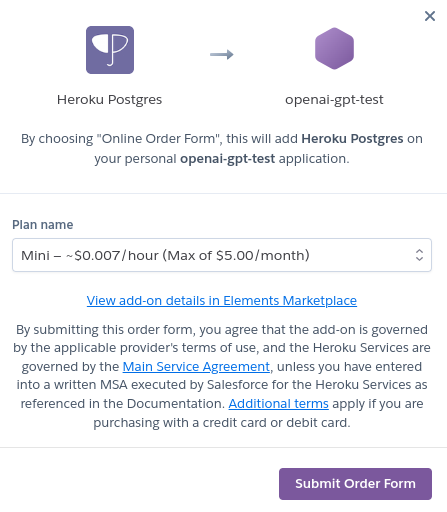

For a Heroku application that accesses Salesforce APIs, you need:

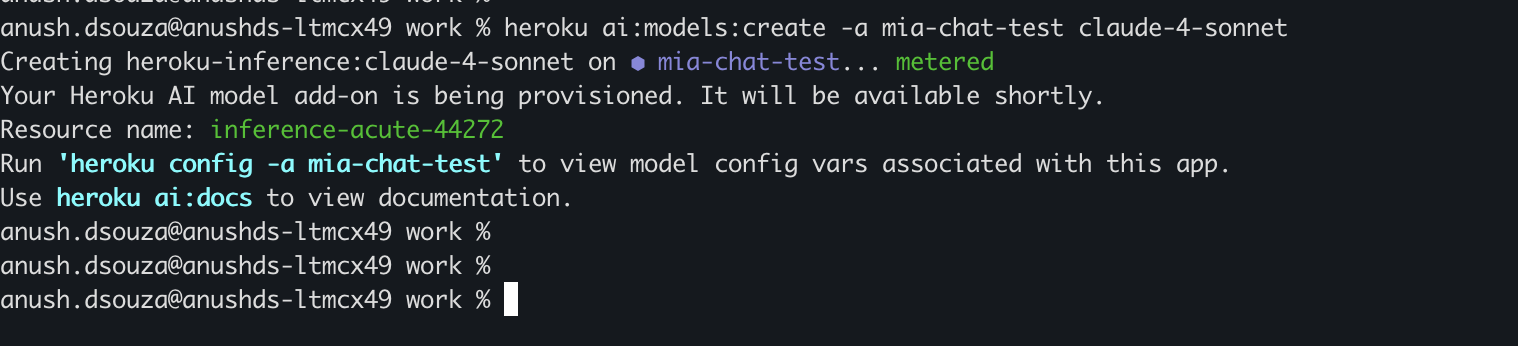

- AppLink Add-on:

heroku addons:create heroku-applink - AppLink CLI Plugin: Install the Heroku CLI plugin for deployment commands

- Org Login: An org login for a user with the ‘Manage Heroku Apps’ permission

- AppLink SDK (optional): For your chosen language to simplify integration

Here’s the complete command sequence for deploying and connecting a Node.js application with Salesforce API access, adapted from our Pattern 1 sample:

# Create and configure Heroku app

heroku create

heroku addons:create heroku-applink --wait

heroku buildpacks:add heroku/nodejs

heroku config:set HEROKU_APP_ID="$(heroku apps:info --json | jq -r '.app.id')"

# Connect to Salesforce org(s) using run-as-user mode

heroku salesforce:authorizations:add my-org

heroku config:set CONNECTION_NAMES=my-org

# Deploy application

git push heroku main

heroku open

Your application retrieves named authorizations and performs SOQL queries across multiple Salesforce orgs. The SDK simplifies multi-org connectivity through the AppLink add-on, which manages authentication and connection pooling automatically.

The first step in your Node.js application is initializing the AppLink SDK and retrieving a specific named authorization. This follows familiar Node.js patterns where connection details are managed through environment variables:

// From heroku-applink-pattern-api-access-nodejs/index.js

const sdk = init();

// Get connection names from environment variable

const connectionNames = process.env.CONNECTION_NAMES ?

process.env.CONNECTION_NAMES.split(',') : []

// Initialize connection for specific org

const org = await sdk.addons.applink.getAuthorization(connectionName.trim())

console.log('Connected to Salesforce org:', {

orgId: org.id,

username: org.user.username

})

Once you have an org connection, executing SOQL queries becomes straightforward using the Data API. The SDK handles authentication, session management, and provides structured responses that are easy to work with:

// Execute SOQL query using the Data API

const queryResult = await org.dataApi.query('SELECT Name, Id FROM Account')

console.log('Query results:', {

totalSize: queryResult.totalSize,

done: queryResult.done,

recordCount: queryResult.records.length

})

// Transform the records to expected format

const accounts = queryResult.records.map(record => ({

Name: record.fields.Name,

Id: record.fields.Id

}))

For Java developers, refer to the SalesforceClient.java class in the Java Pattern 1 sample for equivalent functionality. This implementation directly uses the AppLink API endpoint GET /authorizations/{connection_name} as described in the AppLink API documentation, demonstrating how to integrate without the SDK by making HTTP calls to ${HEROKU_APPLINK_API_URL}/authorizations/{developerName} with Bearer token authentication.

When you run the sample application locally or deploy it to Heroku, the code above produces a web interface that displays Account records from your connected Salesforce orgs. The application demonstrates both single-org and multi-org connectivity, with automatic authentication handling through the AppLink add-on:

The interface shows Account records from each connected org, along with connection details and bulk API capabilities. This demonstrates how AppLink simplifies multi-org data access patterns that would otherwise require complex OAuth flows and session management.

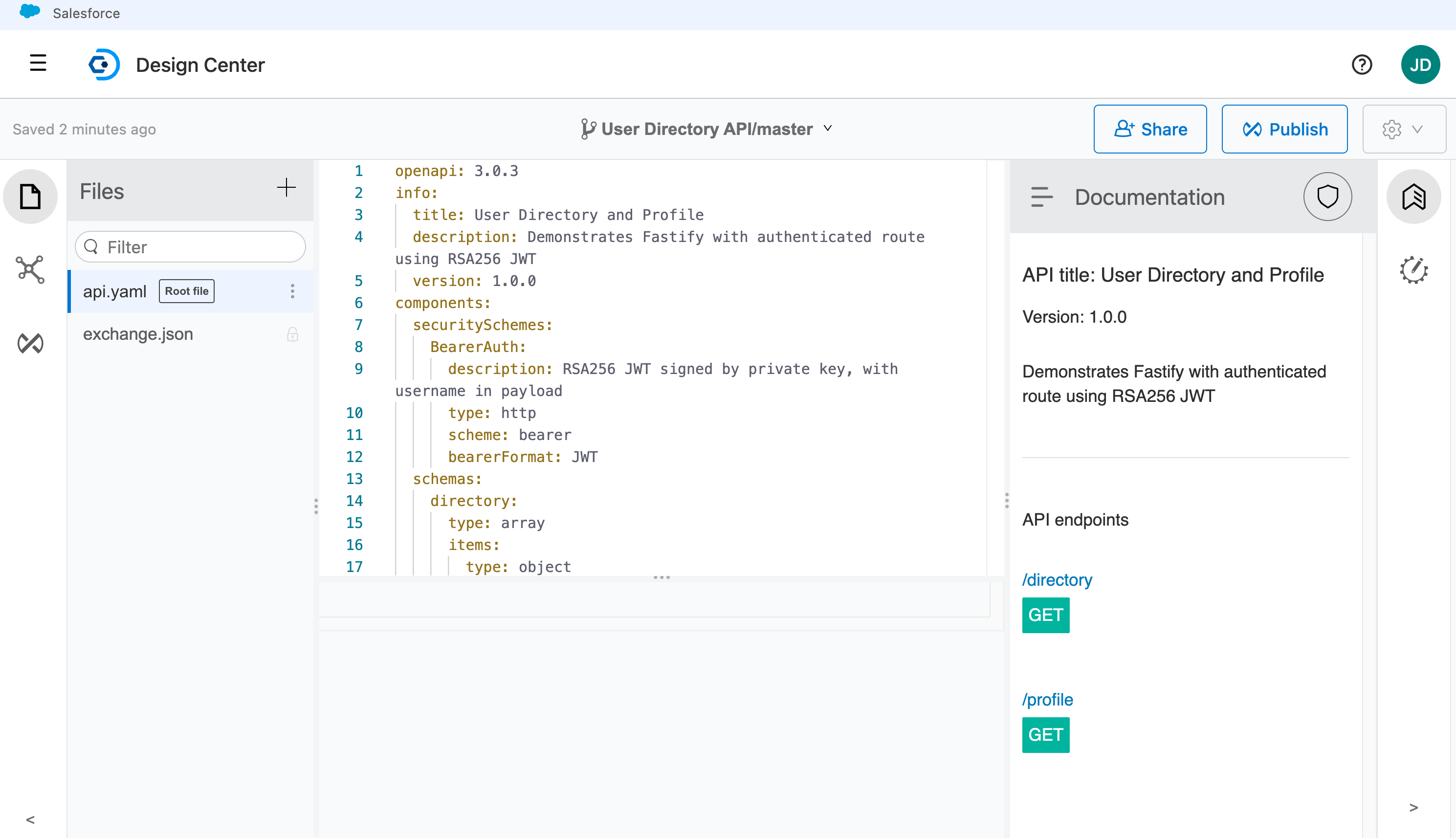

Pattern 2: Extending Salesforce

This pattern enables Salesforce users to invoke your Heroku applications directly from within Salesforce through Flow, Apex, or AgentForce. Your application becomes a published service that extends Salesforce capabilities across Lightning Experience, Sales Cloud, Service Cloud, and other Salesforce products. By publishing your application through AppLink, you’re extending the Salesforce platform with custom business logic that users can seamlessly access from their familiar Salesforce interface.

This pattern uses User Mode authentication, which provides the most secure integration by inheriting the exact permissions of the Salesforce user who triggers the operation. Additionally, User Mode supports elevated permissions (known as “user mode plus” in the main documentation) that are granted exclusively during code execution through Permission Sets. This allows your Heroku application to perform operations that the triggering user cannot normally perform, with admin-approved elevated permissions visible through Permission Sets in the org.

For a Heroku application designed to be invoked by Salesforce, you need:

- AppLink Add-on:

heroku addons:create heroku-applink - AppLink Buildpack:

heroku buildpacks:add --index=1 heroku/heroku-applink-service-mesh - AppLink CLI Plugin: Install the Heroku CLI plugin for deployment commands

- OpenAPI YAML file: Describing your HTTP endpoints for Salesforce discovery

- Org Login: An org login for a user with the ‘Manage Heroku Apps’ permission

- AppLink SDK (optional): For your chosen language to simplify integration

- Procfile Configuration: To inject the service mesh for authentication

The deployment process requires an api-docs.yaml file that describes your HTTP endpoints using the OpenAPI specification format. This file serves as the bridge between your Heroku application and Salesforce, enabling automatic generation of Apex classes, Flow actions, and Agentforce integrations. The YAML file contains both standard API documentation and Salesforce-specific metadata that controls authentication and permissions – we’ll explore its structure and contents in detail later in this blog.

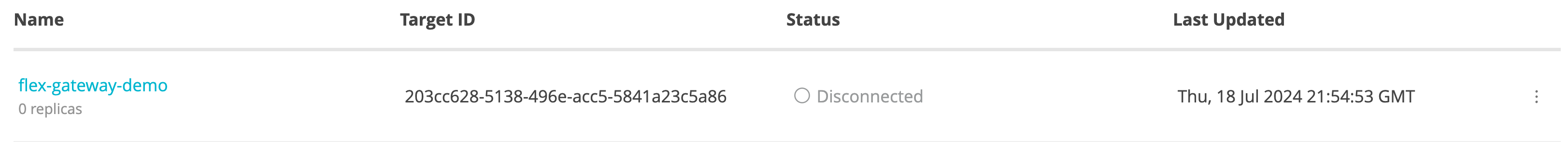

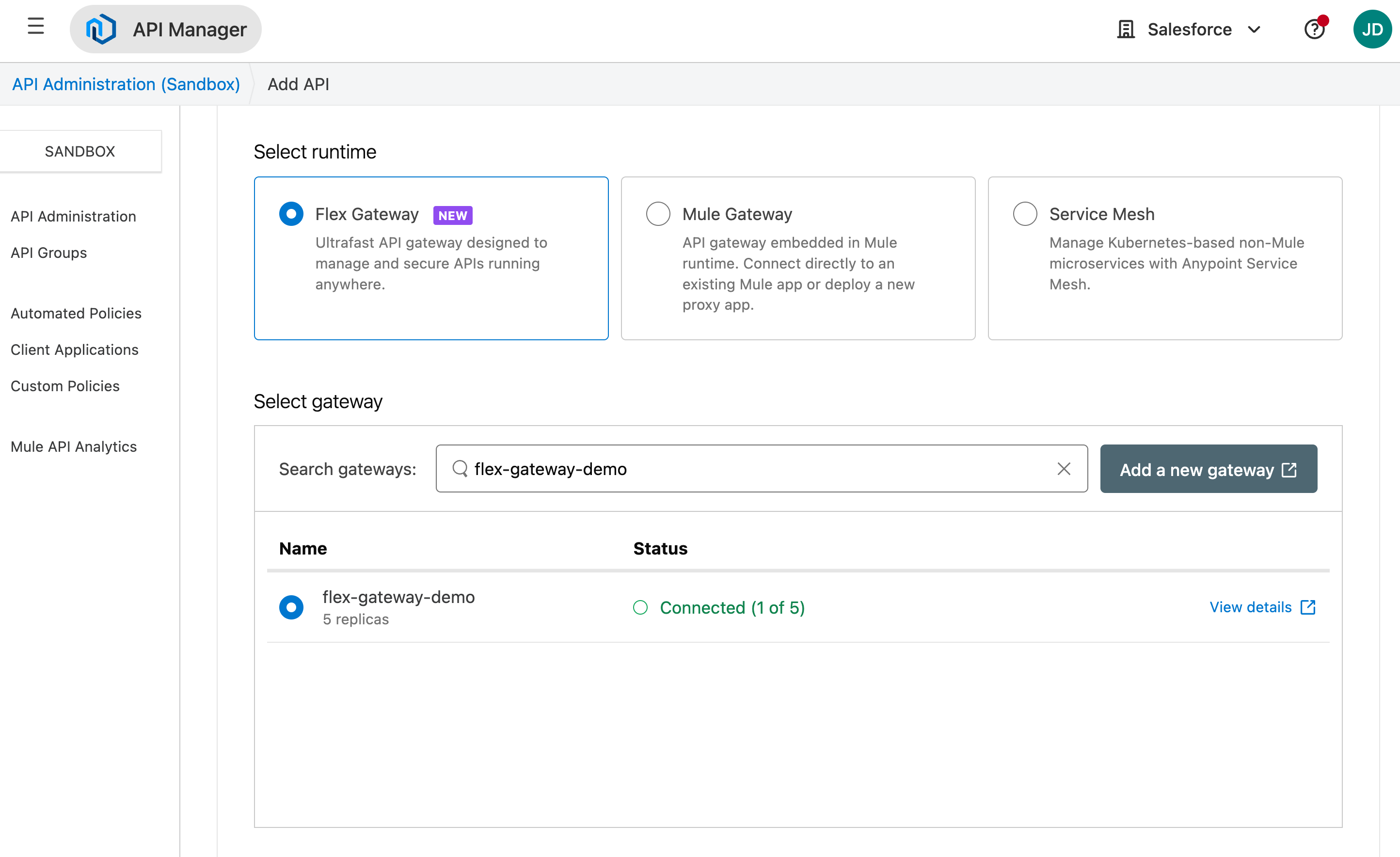

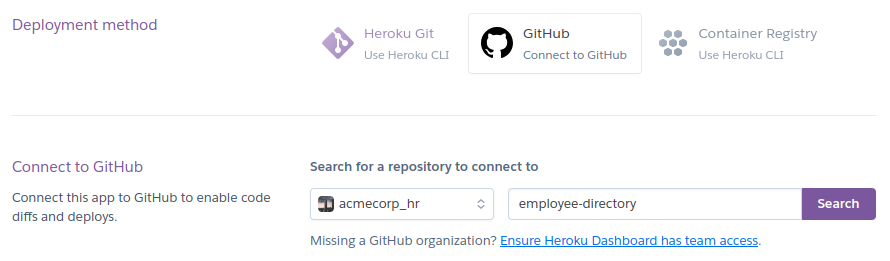

The following command sequence installs the AppLink add-on, configures a buildpack that injects a request interceptor known as the service mesh (which handles authentication and blocks external access), and establishes the secure connection between your Heroku application and Salesforce org. Note that Pattern 2 uses salesforce:connect to create connections (for app publishing) rather than salesforce:authorizations:add used in Pattern 1 (for data access). This deployment and connection process is adapted from our Pattern 2 sample:

# Create and configure Heroku app

heroku create

heroku addons:create heroku-applink

heroku buildpacks:add --index=1 heroku/heroku-applink-service-mesh

heroku buildpacks:add heroku/nodejs

heroku config:set HEROKU_APP_ID="$(heroku apps:info --json | jq -r '.app.id')"

# Deploy and connect to Salesforce

git push heroku main

heroku salesforce:connect my-org

heroku salesforce:publish api-docs.yaml --client-name GenerateQuote --connection-name my-org --authorization-connected-app-name GenerateQuoteConnectedApp --authorization-permission-set-name GenerateQuotePermissions

Your Procfile needs to route requests through the service mesh for authentication, and your application should use APP_PORT instead of the standard PORT environment variable (which is now used by the service mesh). For example, in Node.js:

// From config/index.js

port: process.env.APP_PORT || 8080,

web: APP_PORT=3000 heroku-applink-service-mesh npm start

Important security note: The service mesh will by default block all incoming requests to the application unless they are from a Salesforce org. The HEROKU_APP_ID config variable is currently required as part of the implementation – in future releases we will look to remove this requirement.

Once your application is deployed and published, you need to grant the appropriate permissions to users who will be invoking your Heroku application through Apex, Flow, or Agentforce:

# Grant permissions to users

sf org assign permset --name GenerateQuote -o my-org

sf org assign permset --name GenerateQuotePermissions -o my-org

The permission sets serve different purposes: GenerateQuote grants users access to the Heroku app (through the Flow, Apex or Agentforce interaction they are using), while GenerateQuotePermissions provides additional permissions the code might require to access objects and fields in the org that the user cannot normally access – this elevated permission model is discussed in the next section in more detail.

Applications use familiar Express-style middleware to parse incoming Salesforce requests and enable transactional operations. The SDK’s parseRequest method handles the complex process of extracting user context and authentication details from Salesforce requests – no need to manually parse headers or manage authentication tokens.

When using the AppLink SDK with your preferred Node.js web framework, middleware configuration follows standard patterns. The Pattern 2 sample uses Fastify (though Express.js, Koa, or other frameworks work equally well), where the SDK automatically parses incoming request headers and body, extracting user context and setting up the authenticated Salesforce client for your route handlers.

The middleware is implemented as a Fastify plugin that applies to all routes:

// From heroku-applink-pattern-org-action-nodejs/src/server/middleware/salesforce.js

const preHandler = async (request, reply) => {

const sdk = salesforceSdk.init();

try {

// Parse incoming Salesforce request headers and body

const parsedRequest = sdk.salesforce.parseRequest(

request.headers,

request.body,

request.log

);

// Attach Salesforce client to request context

request.salesforce = Object.assign(sdk, parsedRequest);

} catch (error) {

console.error('Failed to parse request:', error.message);

throw new Error('Failed to initialize Salesforce client');

}

};

This middleware plugin is registered in the main application file where the Fastify server is configured:

// From heroku-applink-pattern-org-action-nodejs/src/server/app.js

import { salesforcePlugin } from './middleware/salesforce.js';

// Register Salesforce plugin

await fastify.register(salesforcePlugin);

For developers not using the AppLink SDK, the key integration point is parsing the x-client-context header that contains base64-encoded JSON with authentication and user context. Here’s how you can implement this manually in Java:

// From heroku-applink-pattern-org-action-java/.../SalesforceClientContextFilter.java

private static final String X_CLIENT_CONTEXT_HEADER = "x-client-context";

// Decode the base64 header value and parse the JSON

String encodedClientContext = request.getHeader(X_CLIENT_CONTEXT_HEADER);

String decodedClientContext = new String(

Base64.getDecoder().decode(encodedClientContext),

StandardCharsets.UTF_8

);

ObjectMapper objectMapper = new ObjectMapper();

JsonNode clientContextNode = objectMapper.readTree(decodedClientContext);

// Extract authentication and context fields

String accessToken = clientContextNode.get("accessToken").asText();

String apiVersion = clientContextNode.get("apiVersion").asText();

String orgId = clientContextNode.get("orgId").asText();

String orgDomainUrl = clientContextNode.get("orgDomainUrl").asText();

JsonNode userContextNode = clientContextNode.get("userContext");

String userId = userContextNode.get("userId").asText();

String username = userContextNode.get("username").asText();

This approach bypasses the SDK entirely and directly constructs the Salesforce SOAP API endpoint ({orgDomainUrl}/services/Soap/u/{apiVersion}) using the authentication details from the header. The JSON structure in the x-client-context header contains:

{

"accessToken": "00D...",

"apiVersion": "62.0",

"requestId": "request-123",

"orgId": "00Dam0000000000",

"orgDomainUrl": "https://yourorg.my.salesforce.com",

"userContext": {

"userId": "005am000001234",

"username": "user@example.com"

}

}

One of the key advantages of Pattern 2 applications is the ability to perform multiple DML operations atomically – similar to database transactions in Node.js ORMs like Sequelize or Prisma. The SDK’s Unit of Work pattern ensures all operations succeed or fail together, providing transactional integrity for complex business processes that involve creating or updating multiple related records:

// From heroku-applink-pattern-org-action-nodejs/src/server/services/pricingEngine.js

const { context } = client;

const org = context.org;

// Create Unit of Work for transactional operations

const unitOfWork = org.dataApi.newUnitOfWork();

// Register Quote creation

const quoteRef = unitOfWork.registerCreate({

type: 'Quote',

fields: {

Name: 'New Quote',

OpportunityId: request.opportunityId

}

});

// Register related QuoteLineItems

queryResult.records.forEach(record => {

const discountedPrice = (quantity * unitPrice) * (1 - effectiveDiscountRate);

unitOfWork.registerCreate({

type: 'QuoteLineItem',

fields: {

QuoteId: quoteRef.toApiString(), // Reference to Quote being created

PricebookEntryId: record.fields.PricebookEntryId,

Quantity: quantity,

UnitPrice: discountedPrice / quantity

}

});

});

// Commit all operations in one transaction

const results = await org.dataApi.commitUnitOfWork(unitOfWork);

const quoteResult = results.get(quoteRef);

return { quoteId: quoteResult.id };

For comprehensive examples including Bulk API operations, event handling, and advanced patterns, explore the complete integration patterns samples which demonstrate real-world scenarios across Node.js, Java, and Python implementations.

In the second part of this blog, we’ll dive deeper into how to invoke this Heroku logic from Apex, Flow, and Agentforce, including the specific Salesforce security models in effect and practical implementation guidance for each integration point.

Pattern Comparison

Now that you’ve seen both primary patterns, here’s a comparison of their key differences:

| Aspect | Pattern 1: Salesforce API Access | Pattern 2: Extending Salesforce |

|---|---|---|

| Authentication | Run-as-user via salesforce:authorizations:add |

Invoking User via salesforce:connect |

| Buildpack | Not required – app accessible to external users | Required – blocks external access, Salesforce-only |

| Port Configuration | Standard PORT usage | APP_PORT configuration needed |

| Org Support | Multiple org connections supported | Single org connection with permission-based access |

| Service Discovery | Not required | Service publishing required (salesforce:publish) |

| Permission Model | Run-as-user permissions across orgs | User and user mode plus via Permission Sets |

| Use Case | Web apps accessing Salesforce data | Salesforce invoking external processing |

For detailed guidance on all integration patterns and when to use each one, note that we have a Getting Started Guide that goes through this in more detail, as well as being covered in context in each of the README files for our accompanying samples.

Additional integration patterns and features

While Patterns 1 and 2 cover the foundational approaches, AppLink also supports two additional patterns that extend these core concepts:

Pattern 3: Scaling batch jobs

This pattern builds on Pattern 2’s extension approach by delegating large-scale data processing with significant compute requirements to Heroku Worker processes. This pattern is ideal when you need to process large datasets that exceed Salesforce batch job limitations, providing parallel processing capabilities and sophisticated error handling. See the complete Pattern 3 implementation for detailed guidance on batch processing architectures.

Pattern 4: Real-time eventing

This pattern extends Pattern 1’s API access approach by using Run-as-User authentication to establish event listening for Platform Events and Change Data Capture from Salesforce. The work is performed by the Run-as-User, enabling real-time responses to data changes and event-driven automation with custom notifications sent to desktop or mobile devices. Explore the Pattern 4 implementation for event-driven integration examples.

To summarize, Pattern 3 builds on Pattern 2’s extension approach (Invoking User), while Pattern 4 builds on Pattern 1’s API access approach (Run-as-User), focusing on different scenarios and authentication models. Complete sample implementations for all four patterns are available in the AppLink integration patterns repository.

Data Cloud integration

AppLink provides comprehensive Data Cloud integration capabilities that enable bi-recreational data flow between your Heroku applications and Salesforce Data Cloud. Your applications can execute SQL queries against Data Cloud using the dataCloudApi.query() method to access unified customer profiles, journey analytics, and real-time insights.

Additionally, you can create Data Cloud Actions that allow Data Cloud to invoke your Heroku applications through Data Action Targets. The SDK’s parseDataActionEvent() function handles incoming Data Cloud events, providing structured access to event metadata, current and previous values, and custom business logic integration points. This creates powerful scenarios like real-time personalization engines, automated customer journey optimization, and intelligent data enrichment workflows that combine Data Cloud’s analytics capabilities with Heroku’s computational flexibility.

What’s next?

In our next blog in the AppLink Fundamentals series, we’ll delve into advanced integrations with Flow, Apex, and Agentforce, demonstrating how AppLink amplifies Salesforce’s existing features. Following that, we’ll cover the practical aspects of the development flow, including local testing, managing OpenAPI changes, and the key considerations when choosing between Apex and other programming languages for your Salesforce extensions.

Read More of the AppLink Fundamentals series

- AppLink Fundamentals I: AppLink Integration Patterns – Connecting Salesforce to Heroku Applications

- AppLink Fundamentals II: Advanced AppLink Integrations – Automation & AI

- AppLink Fundamentals III: Building with AppLink – Development Flow and Language Choices

The post AppLink Fundamentals I: AppLink Integration Patterns – Connecting Salesforce to Heroku Applications appeared first on Heroku.

]]>New to Heroku? Watch this brief introduction video to get familiar with the platform before diving into AppLink.

With the general availability of Heroku AppLink directly on the Salesforce Setup…

The post Heroku AppLink: Extend Salesforce with Any Programming Language appeared first on Heroku.

]]>

New to Heroku? Watch this brief introduction video to get familiar with the platform before diving into AppLink.

With the general availability of Heroku AppLink directly on the Salesforce Setup menu, Heroku is significantly expanding the programming language options available to Salesforce developers. AppLink empowers you to securely deploy code written in virtually any language directly to the Salesforce platform, enabling enhanced growth and capabilities for existing workloads. Heroku applications can be seamlessly attached to multiple Salesforce orgs, allowing your customizations and automations to leverage Heroku’s renowned scaling capabilities. This groundbreaking integration makes it possible to build nearly anything on the Salesforce platform without the need to store or move data off-platform for complex processing. With AppLink, you get the same trust commitment as every other Salesforce product, as AppLink handles all the security and integration for you!

Use AppLink with the language of your choice

If you’re a Salesforce architect or developer familiar with Node.js (the same runtime used by Lightning Web Components) or Python, this blog is for you. This initial release of AppLink provides SDK support for Node.js and Python, with a primary focus on Node.js examples and patterns. We’ve also included Java samples that demonstrate how to use AppLink in languages that don’t currently have a dedicated SDK, by working directly with AppLink’s APIs. Importantly, AppLink’s APIs are designed to work with virtually any programming language, giving you the freedom to use the tools and frameworks you’re already productive with.

In this series, we’ll embark on a journey to explore the key components of AppLink, discover how to extend Salesforce Flows, Apex, and Agentforce with external logic, and understand how AppLink helps build solutions with customer data security as a top priority, with user mode enabled by default. We’ll also delve into various usage patterns, the development flow, and crucial considerations for when to leverage AppLink versus traditional Apex development.

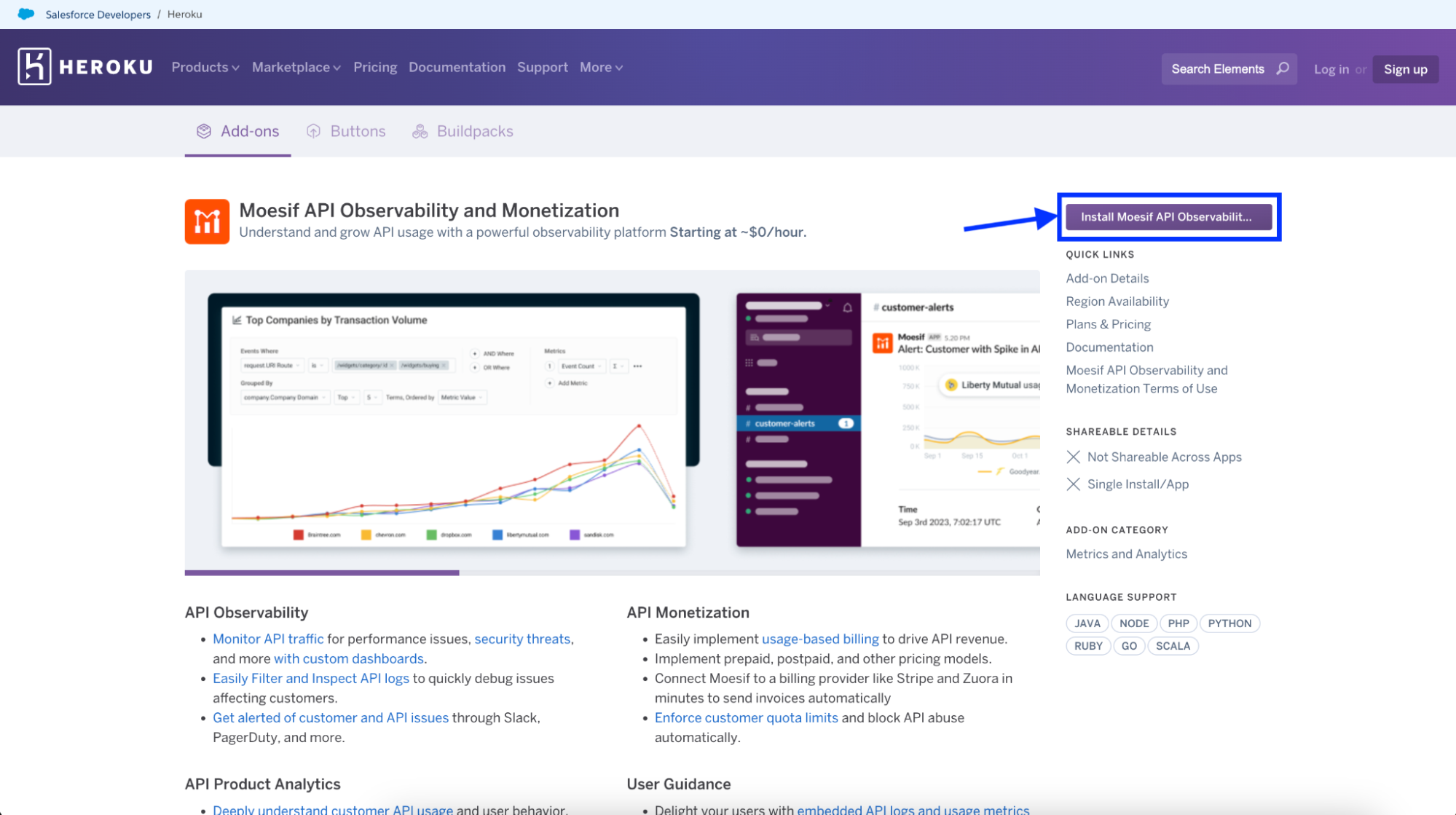

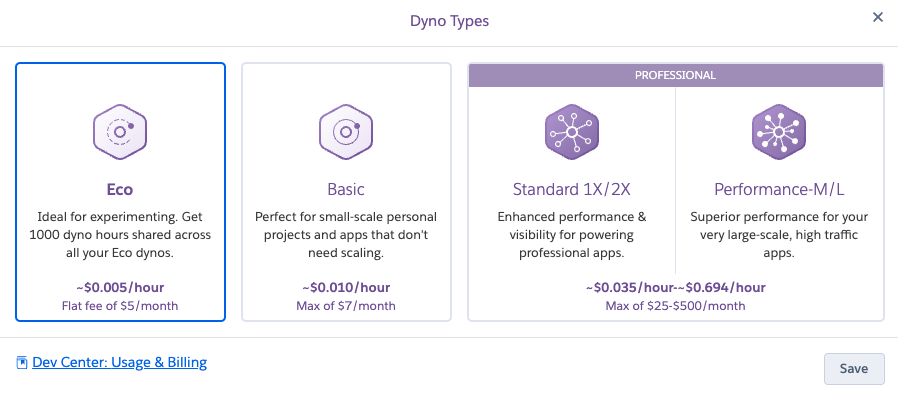

Exploring Heroku AppLink features

AppLink functions as a standard Heroku add-on. However, unlike add-ons from ecosystem partners, AppLink is owned and managed directly by Heroku engineers as an extension to the Heroku platform itself. As an add-on, you can expect a familiar UI, normal provisioning processes, and the ability to share the add-on across multiple Heroku applications and services. AppLink is available to all Salesforce orgs and can be easily found under the Setup menu. The add-on itself is free; you only pay for the Heroku compute and any desired data resources through normal Heroku billing. Click here to learn more about Heroku Add-ons.

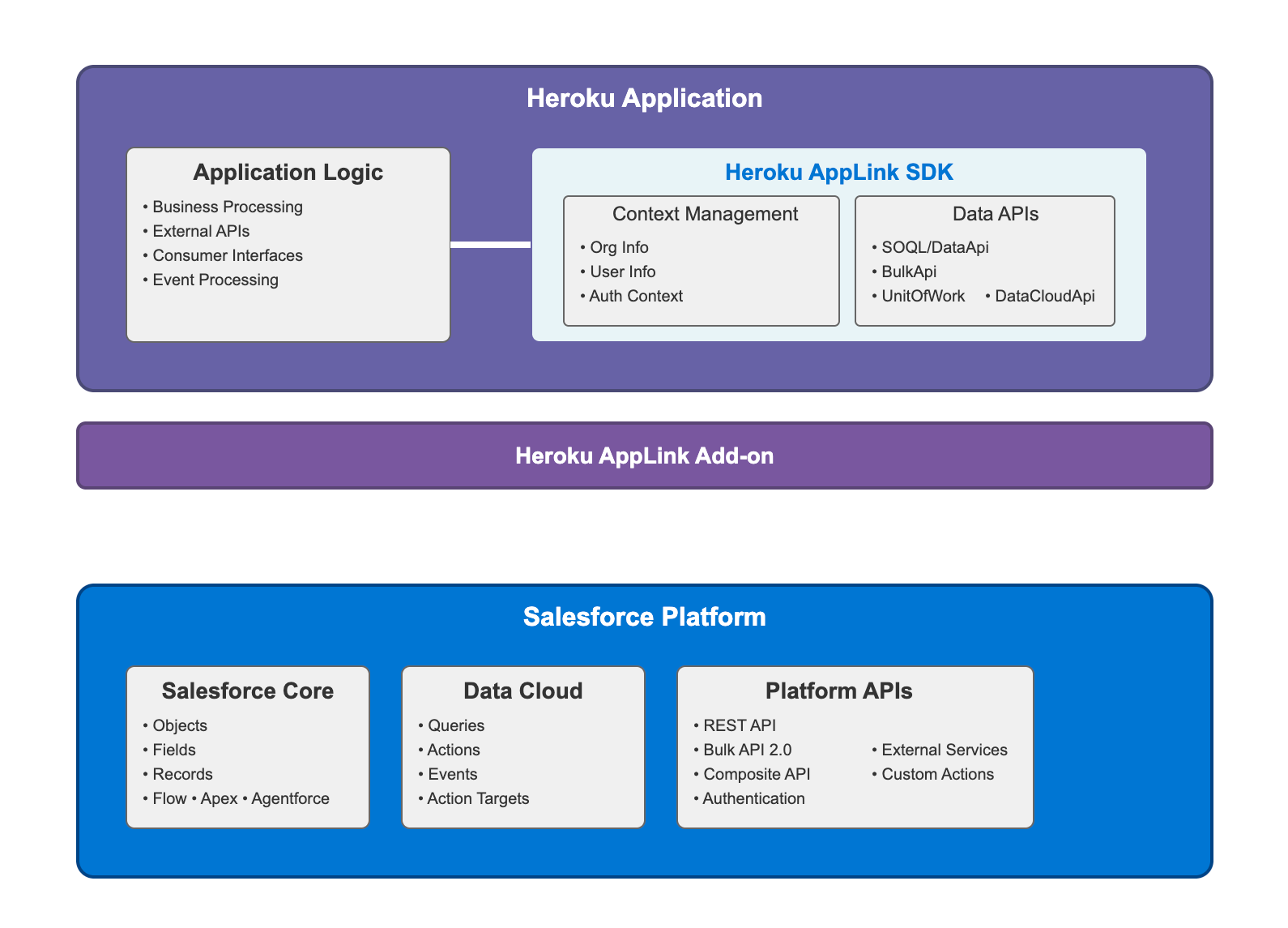

AppLink is comprised of several key components that work in concert to create a fully managed bridge between your Heroku application and other Salesforce products. Understanding this architecture is crucial for successful implementation, as each component plays a specific role in enabling secure, authenticated communication between your custom code and the Salesforce platform.

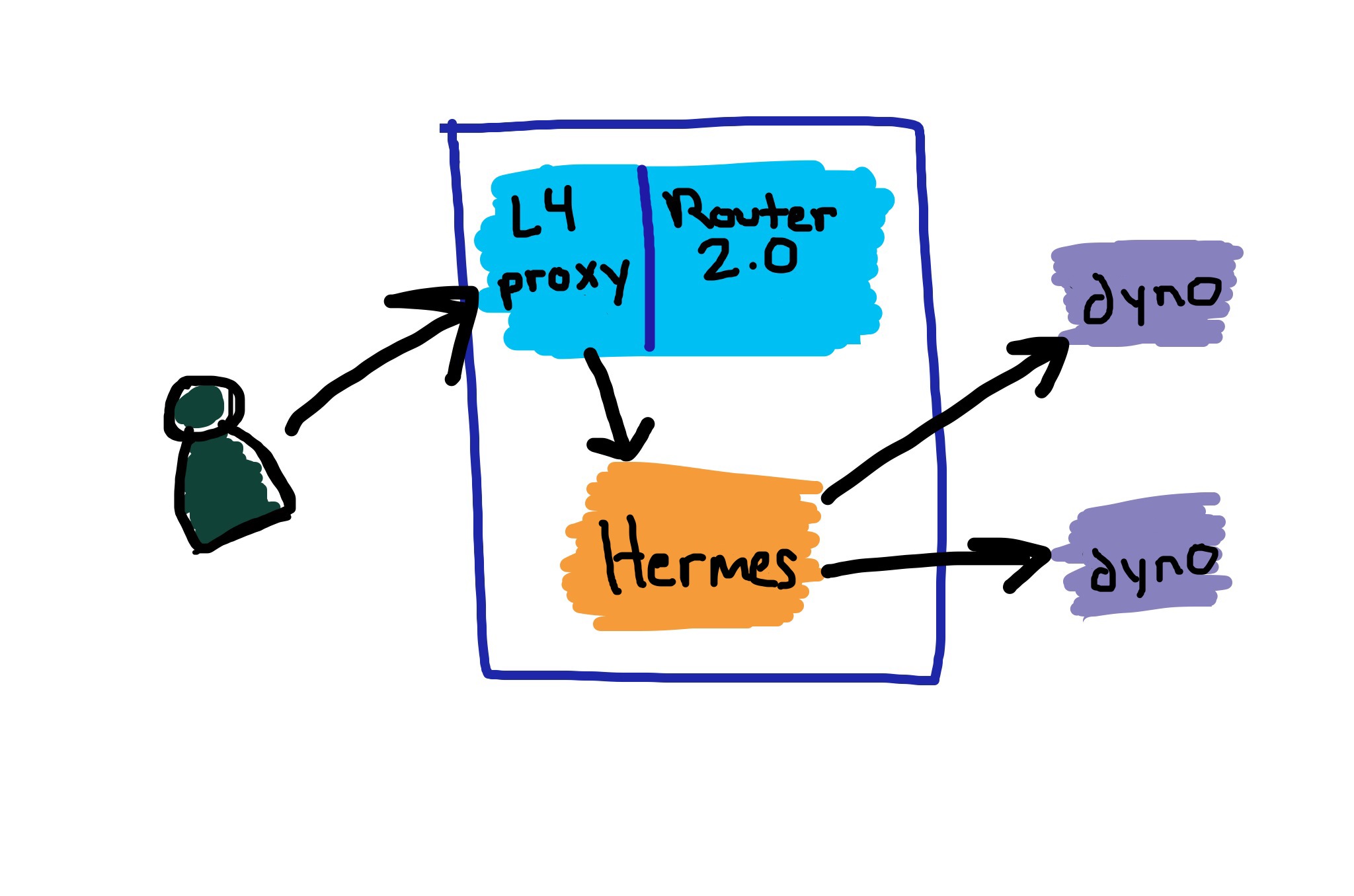

The diagram below illustrates the complete AppLink ecosystem, showcasing how requests flow between Salesforce orgs and your Heroku applications, the vital role of the AppLink add-on in managing connections and authentication, and how various AppLink components coordinate to provide seamless integration. Whether you’re building applications that call Salesforce APIs or services that extend Salesforce functionality, this architecture forms the foundation for all integration scenarios.

Each component within AppLink serves a distinct purpose in creating the integrated experience. The table below provides a detailed overview of the role and capabilities of each AppLink component, demonstrating how they work together to provide comprehensive Salesforce-Heroku connectivity.

| Component | Role |

|---|---|

| Add-on | Acts as the foundational connectivity layer (heroku addons:create heroku-applink), providing automatic provisioning between Heroku and Salesforce, security token management, and service discovery for making Heroku apps discoverable within Salesforce and the API Catalog. Works in conjunction with the Buildpack when building AppLink solutions that extend Salesforce as described later in this blog. Exposes environment variables: HEROKU_APPLINK_API_URL and HEROKU_APPLINK_TOKEN for authentication and API access. |

| Buildpack | Functions as the security and authentication layer (heroku buildpacks:add --index=1 heroku/heroku-applink-service-mesh) that injects the service mesh into Heroku applications designed to be invoked by Salesforce. The service mesh acts as a request interceptor that handles authentication, blocks external access to ensure only Salesforce can invoke the application, and routes authenticated requests to your application code. Required for applications that extend Salesforce functionality through Flow, Apex, or Agentforce integration patterns. |

| Dashboard | Functions as the centralized monitoring interface accessible via heroku addons:open heroku-applink with three main tabs: Connections (lists Salesforce and Data Cloud org connections with status), Authorizations (shows run-as-user authorizations with developer names and connected orgs), and Publications (displays published apps across orgs with connection status). Provides comprehensive visibility into your Heroku-Salesforce integrations. |

| CLI | Serves as the command-line interface for deployment commands, connecting and publishing apps to Salesforce orgs, local development tools, permission management, and multi-environment support. The salesforce:authorizations commands enable existing Heroku applications to access Salesforce data (run-as-user mode), while salesforce:connect commands are used for User Mode. Publishing commands allow Heroku code to be invoked via Flow, Apex, or Agentforce. |

| API | Serves as the programmatic gateway providing unified access to Salesforce and Data Cloud data with automatic authentication, authorization, and connection pooling. Used by the CLI and SDK, and can be used directly by developers’ own code for custom integrations. |

| SDK | Acts as the developer toolkit that simplifies AppLink integration by providing request processing capabilities, automatic authentication handling, and unified data access methods. The SDK parses incoming requests from Salesforce (Flows, Apex, Agentforce), including decoding the x-client-context HTTP header which contains base64 encoded JSON with user context and authentication details, routes them to appropriate business logic, and transforms responses back to Salesforce-compatible formats. Key features include connection management, transaction support, and structured error handling. Currently available for Node.js and Python, while other languages are fully supported but must use the AppLink API directly instead of the SDK. |

| OpenAPI Integration | Functions as the service discovery and registration mechanism using OpenAPI Specification files (YAML or JSON format) for endpoint discovery, automatic service registration in Salesforce Setup menu and API Catalog, and External Service generation for admins. Uses x-sfdc extensions to map Permission Set names for elevated permissions beyond the user’s access level, and to automatically create Agentforce Custom Actions. Currently supports OpenAPI 3.0 at time of writing – check Salesforce External Services documentation for the latest supported version. These features will be discussed further later. |

| Salesforce API Integration | Provides the data access layer where the AppLink SDK includes helpers for SOQL Query Engine, DML Operations, Data Cloud Integration, and Bulk API Support, but developers can still directly access these APIs or use existing Salesforce API libraries they prefer. |

Together, these components offer a comprehensive and cohesive ecosystem that simplifies the complex task of integrating Heroku applications with Salesforce. By providing dedicated tools for everything from secure connectivity and automatic authentication to streamlined deployment, monitoring, and service discovery, AppLink reduces development overhead and accelerates time to market. This holistic approach ensures that developers can focus on building powerful business logic, knowing that the underlying infrastructure for secure and scalable Salesforce extension is fully managed and integrated.

Stay tuned: from foundation to practical application

This blog post has provided a foundational understanding of AppLink – what it is, why it’s a critical new tool for Salesforce developers, and its core components.

In our three part series, AppLink Fundamentals, we’ll dive into the practical application of AppLink by exploring its key integration patterns, showing you how to connect your Heroku applications to Salesforce for various use cases. Subsequent posts in this series will delve into advanced integrations with Data Cloud, Flow, Apex, and Agentforce, followed by a look at the development workflow, language choices, and best practices for building robust solutions with AppLink. Stay tuned to unlock the full potential of extending Salesforce with the power of Heroku.

Additional AppLink resources

- Getting Started with Heroku AppLink and Salesforce

- Getting Started with Heroku AppLink and Data Cloud

- Getting Started with Heroku AppLink and Agentforce

Read the AppLink Fundamentals series

- AppLink Fundamentals I: AppLink Integration Patterns – Connecting Salesforce to Heroku Applications

- AppLink Fundamentals II: Advanced AppLink Integrations – Automation & AI

- AppLink Fundamentals III: Building with AppLink – Development Flow and Language Choices

The post Heroku AppLink: Extend Salesforce with Any Programming Language appeared first on Heroku.

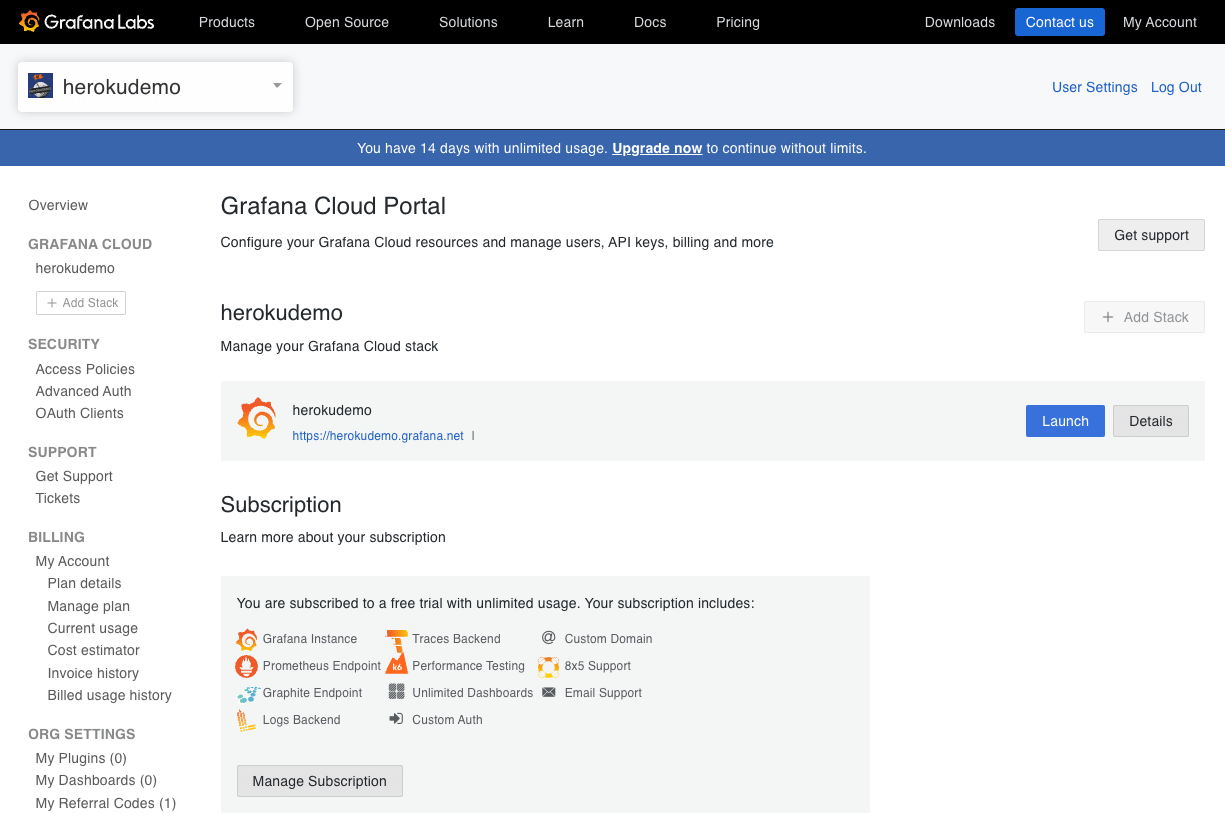

]]>The post OpenTelemetry, Kubernetes, and Fir: Putting it All Together appeared first on Heroku.

]]>Fir is Heroku’s next generation cloud platform, designed to offer more modern cloud-native capabilities with flexibility and scalability. It’s built on proven, open-source technologies. Traditional Heroku relied on proprietary technologies, which was appropriate at the time because high-quality open-source alternatives didn’t exist. But now, technologies like Kubernetes and OpenTelemetry are considered best-in-class solutions that are widely deployed and supported by a vast ecosystem.

Kubernetes is at the core of Fir’s infrastructure, providing automated scaling, self-healing, and efficient resource management. And while Kubernetes powers Fir, end users are not exposed to it directly—which is a good thing, since Kubernetes is very complex. Under the hood, Fir takes advantage of the powerful capabilities of Kubernetes, but it only exposes the user-friendly Heroku interface for user interaction.

OpenTelemetry offers standard-based visibility into how applications and services interact within Fir as well as integrate with external systems. By leveraging OpenTelemetry with Fir, developers can gain deep insights into application performance. They can track distributed requests and even route telemetry data to external monitoring platforms if they wish.

Let’s look more deeply into OpenTelemetry and what it brings to the table.

Understanding OpenTelemetry

As an observability framework, OpenTelemetry is designed to standardize the collecting, processing, and exporting of telemetry data from applications. It aims to provide a unified approach to capturing this data across distributed systems so that developers can monitor performance and diagnose issues effectively.

One of the primary goals of OpenTelemetry is to eliminate vendor lock-in. You can send telemetry data to various backends, such as Prometheus, Jaeger, Grafana, Datadog, and—of course—Heroku, without modifying application code.

OpenTelemetry consists of language-specific APIs and SDKs coupled with the OpenTelemetry Collector, all working together to provide a unified observability framework. The APIs define a standard way to generate telemetry data, while the SDKs offer language-specific implementations for instrumenting applications. The OpenTelemetry Collector acts as a processing pipeline. It supports the ingestion, filtering, and export of telemetry data using the OpenTelemetry Protocol (OTLP), which standardizes the transmission of data to various observability backends.

OpenTelemetry supports three primary telemetry data types, each serving a critical role in observability:

- Logs capture discrete events, such as errors or system messages, providing a detailed record of application behavior.

- Metrics track numerical data over time, offering quantifiable insights into system performance.

- Traces track the flow of requests across distributed services, enabling developers to diagnose latency issues and optimize performance.

Fir: Heroku’s next generation platform

By integrating Kubernetes into its core, Fir empowers developers to deploy and manage applications with greater control and resilience. Leveraging Kubernetes ensures that applications can handle varying workloads seamlessly, adapting to changing demands without manual intervention. Because it runs specifically on top of AWS EKS, Fir can take advantage of more diverse and powerful instance types, such as the AWS Graviton processor.

Fir utilizes Open Container Initiative (OCI) images and Cloud Native Buildpacks to package and deploy services and applications. This is a major improvement, because it means developers in the Heroku world can tap into their standards-based knowledge and tooling. In addition, Fir integrates seamlessly with OpenTelemetry, providing a built-in collector and easy ways to configure drains for transmitting telemetry data to additional destinations if needed.

Basing Fir on open-source standards and technologies is a major advantage for several reasons:

- If a company already uses these technologies and has a centralized observability platform, then they can gradually migrate some systems to Fir, while retaining a single pane of glass for all systems.

- It avoids lock-in because companies can easily migrate to other Kubernetes providers and still use their existing OpenTelmetry instrumentation and CNB pipelines.

- Companies have the option of maintaining a hybrid environment, in which some systems run on Heroku Fir while others run on other Kubernetes providers if they need more control.

The benefits of OpenTelemetry in Fir

Native integration with OpenTelemetry means Fir enables automatic telemetry collection without requiring extensive manual setup.

By tracing requests across distributed services (including on non-Heroku systems interacting with Fir), developers can easily pinpoint failures and optimize system performance. These capabilities enable teams to proactively address issues before they impact end users, improving application reliability.

OpenTelemetry’s vendor-agnostic approach gives organizations the flexibility to choose their preferred monitoring and analytics tools. Since OpenTelemetry is an open-source project, it benefits from continuous improvements and broad community support.

Because of its lightweight and distributed architecture, OpenTelemetry is well-suited for large-scale, cloud-native environments like Fir’s Kubernetes-based infrastructure. It efficiently handles high-volume telemetry data, ensuring that performance monitoring scales alongside the application.

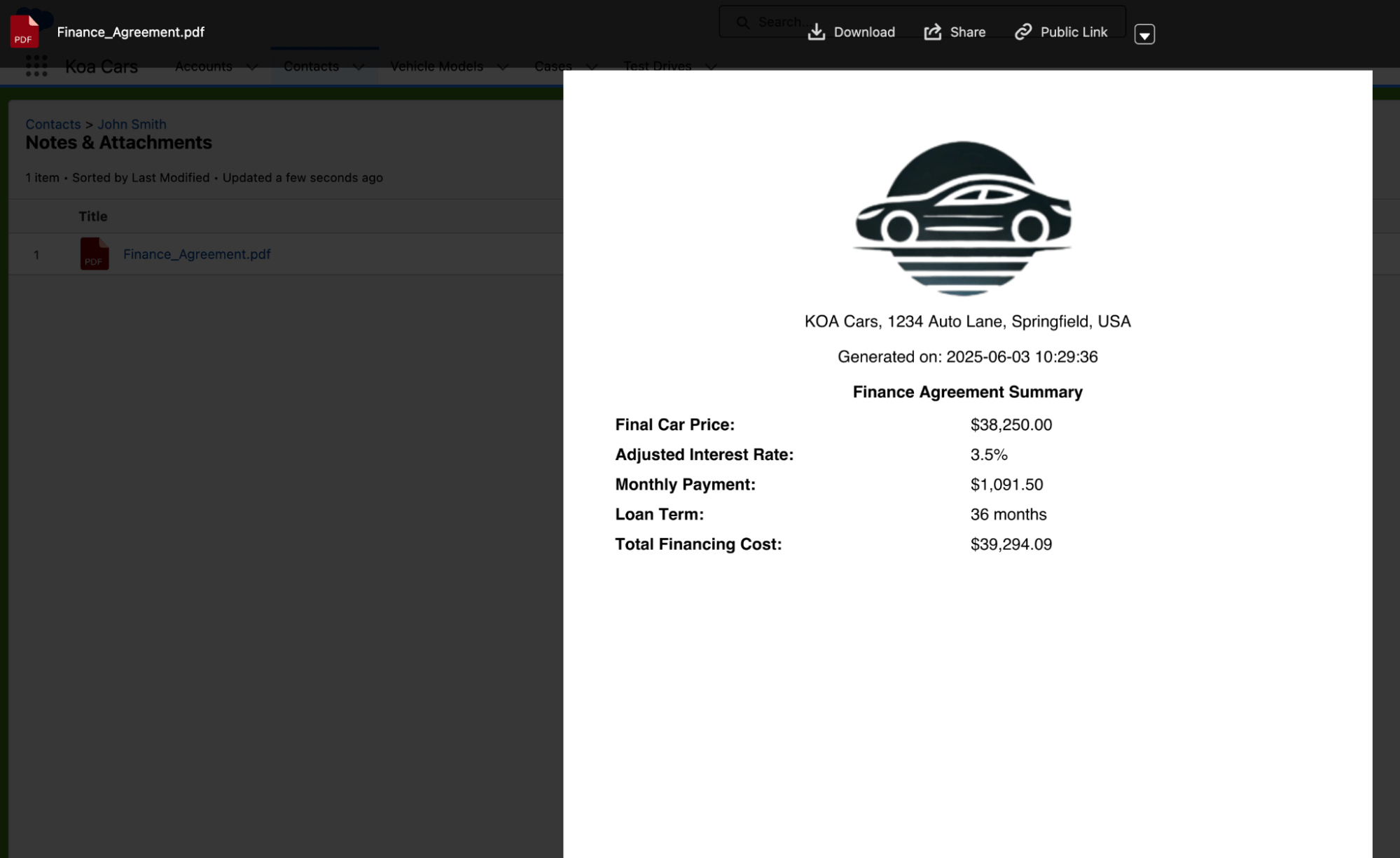

How does OpenTelemetry work with Fir?

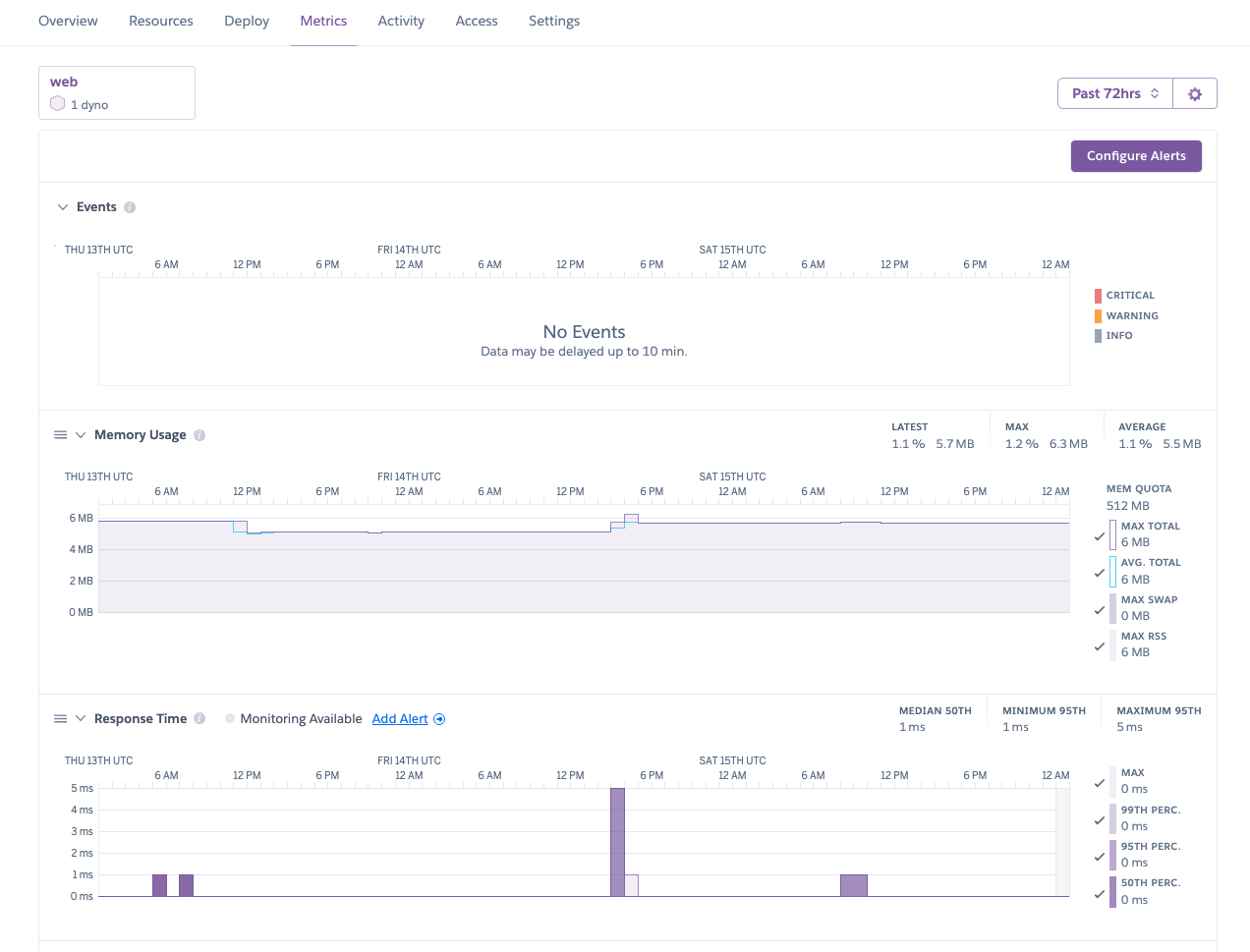

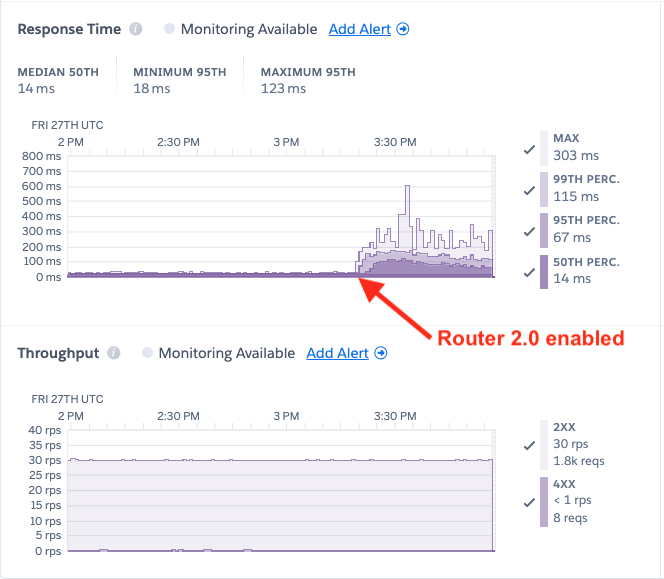

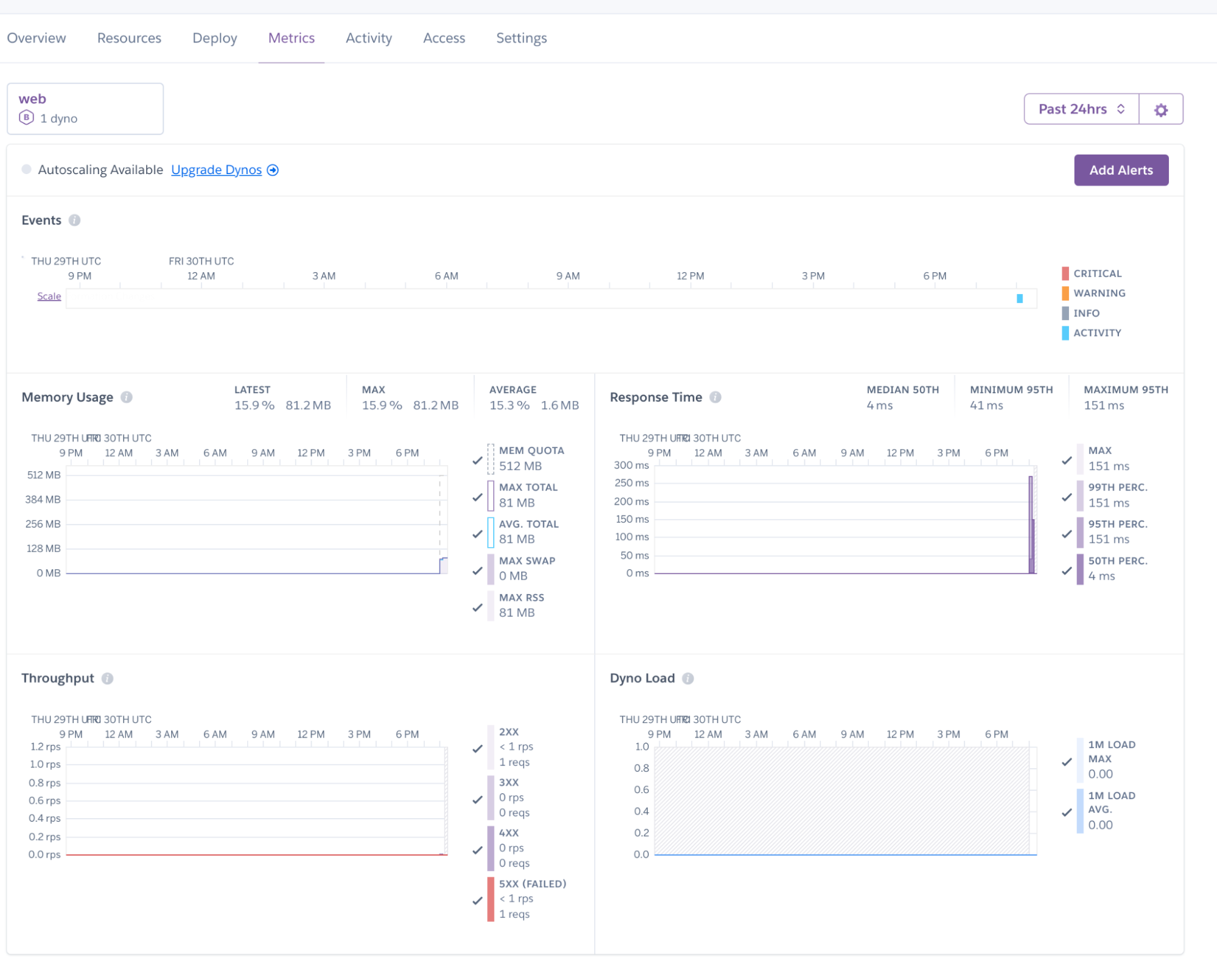

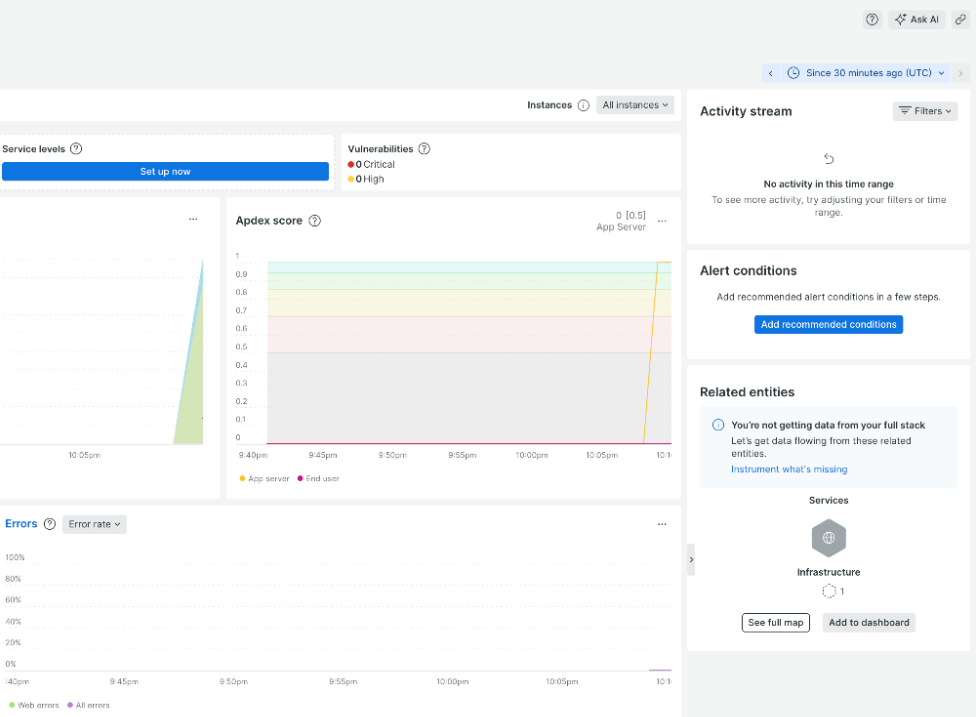

Fir provides out-of-the-gate OpenTelemetry logs and metrics for your dynos and the applications running on them. These are displayed in your app’s dashboard.

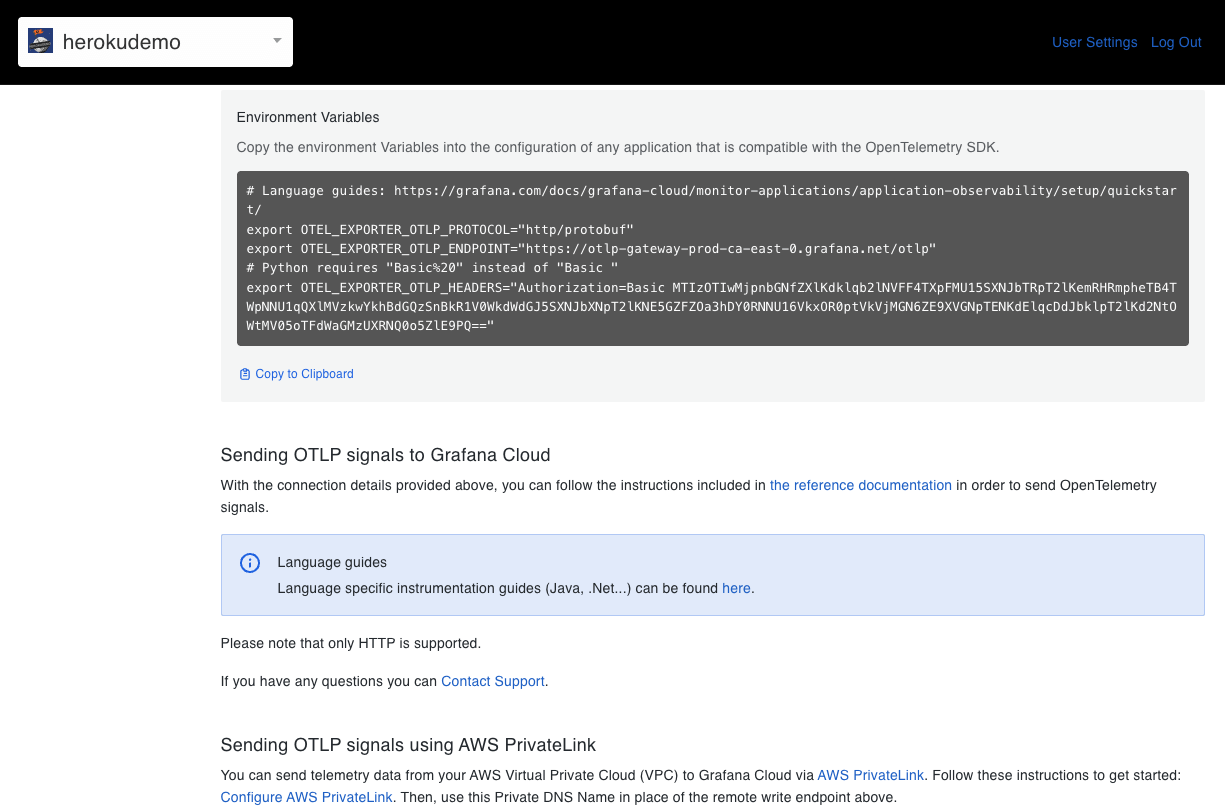

You can take this even further. If you configure an OpenTelemetry SDK and instrument your application, then you can generate custom metrics and distributed traces. You can also configure drains to send your telemetry data to third-party observability platforms.

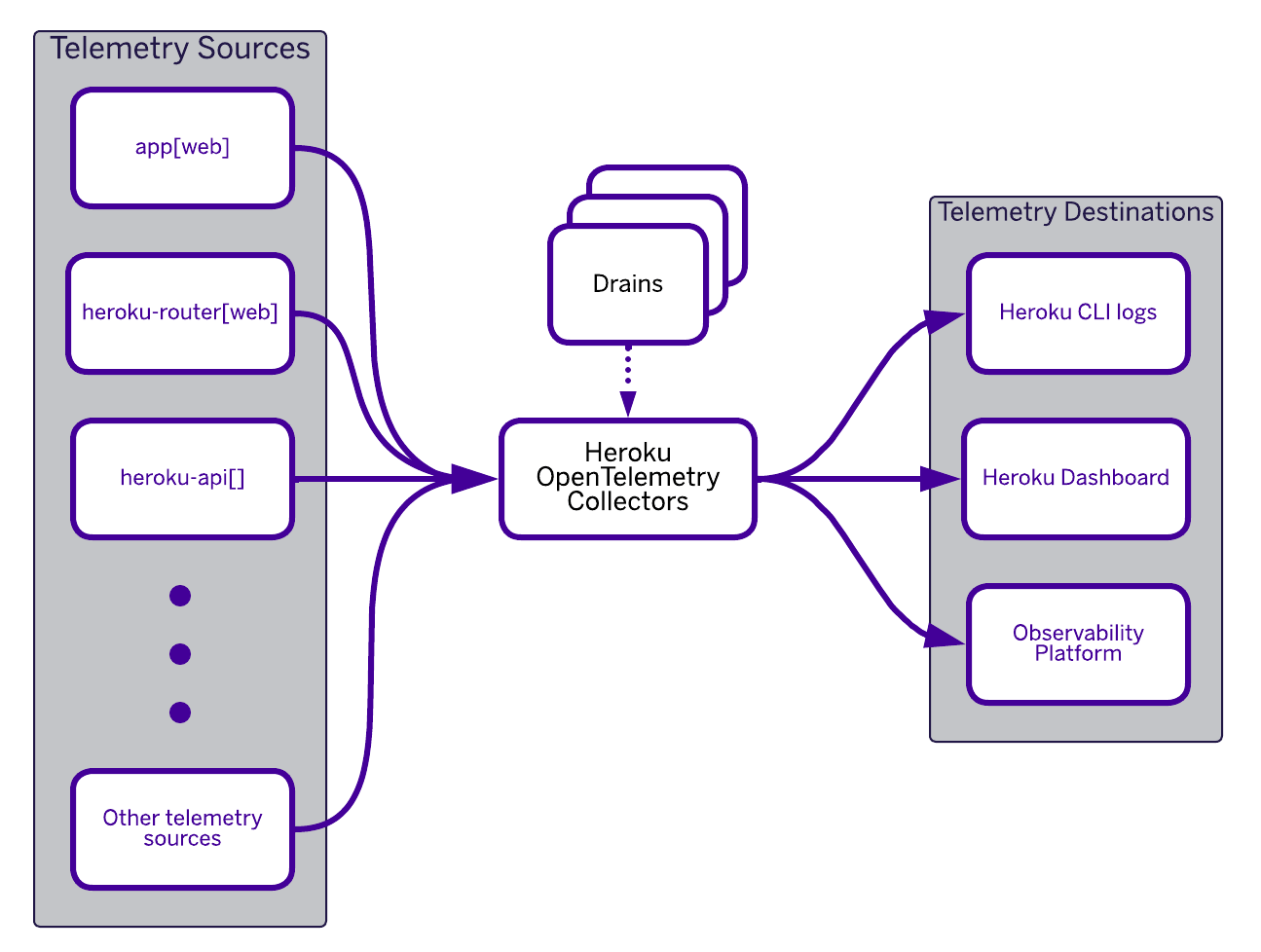

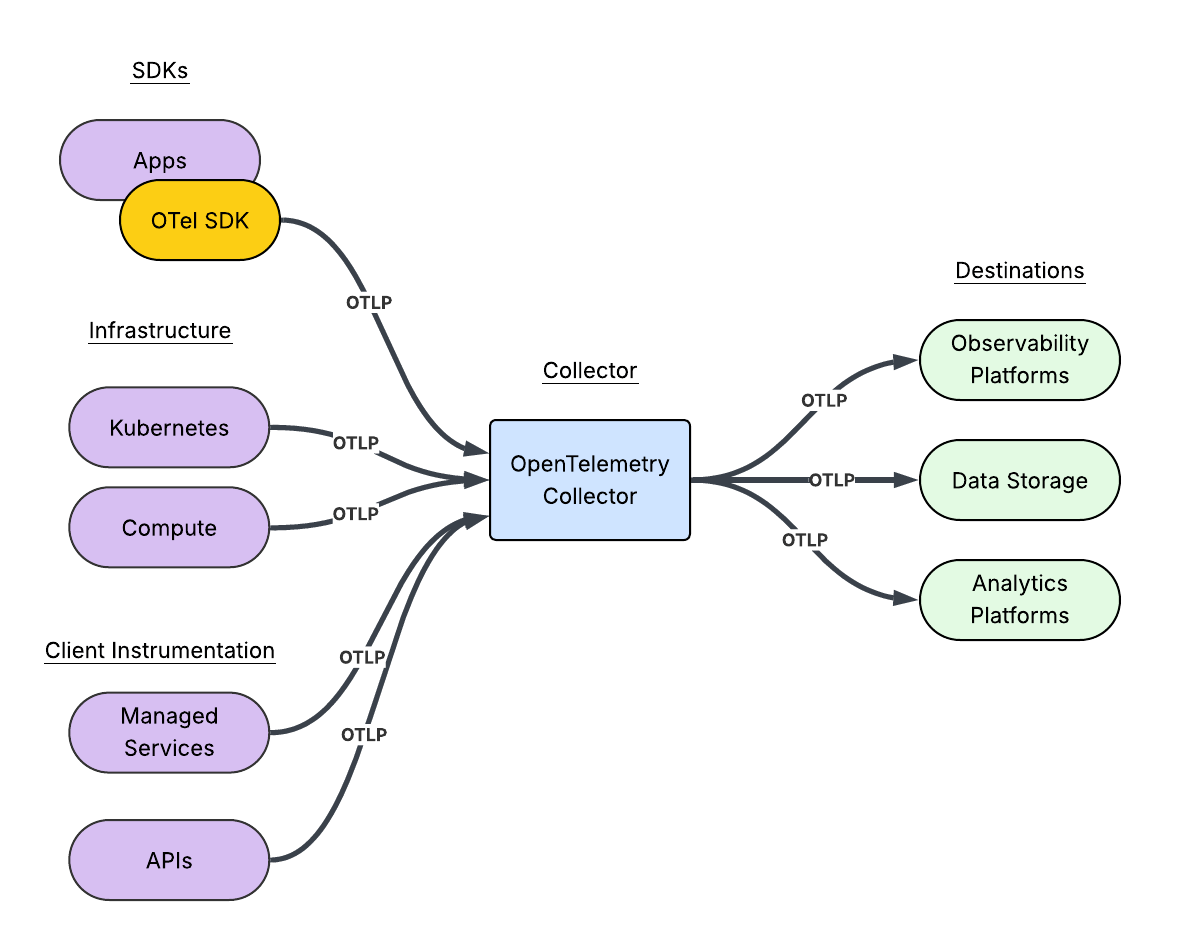

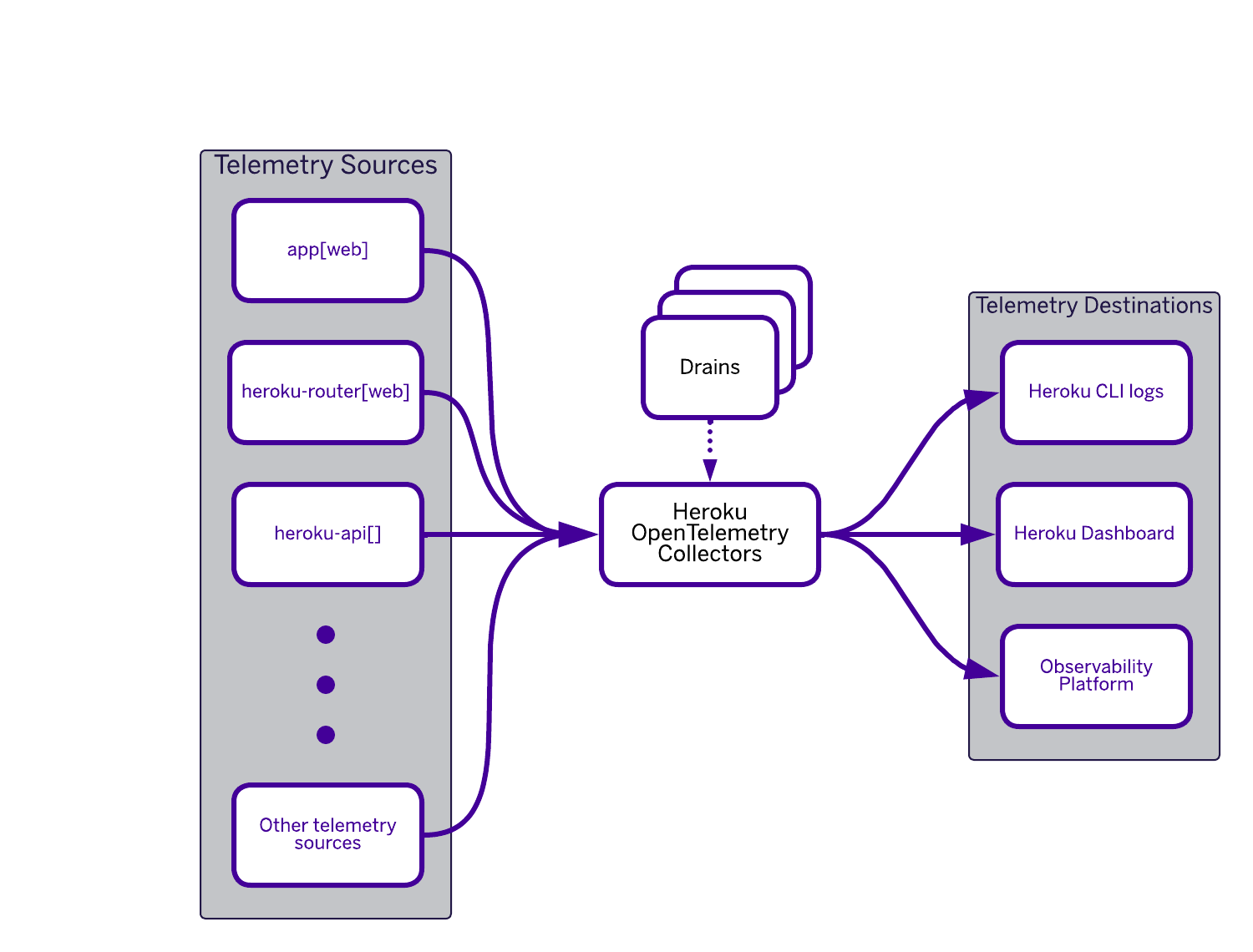

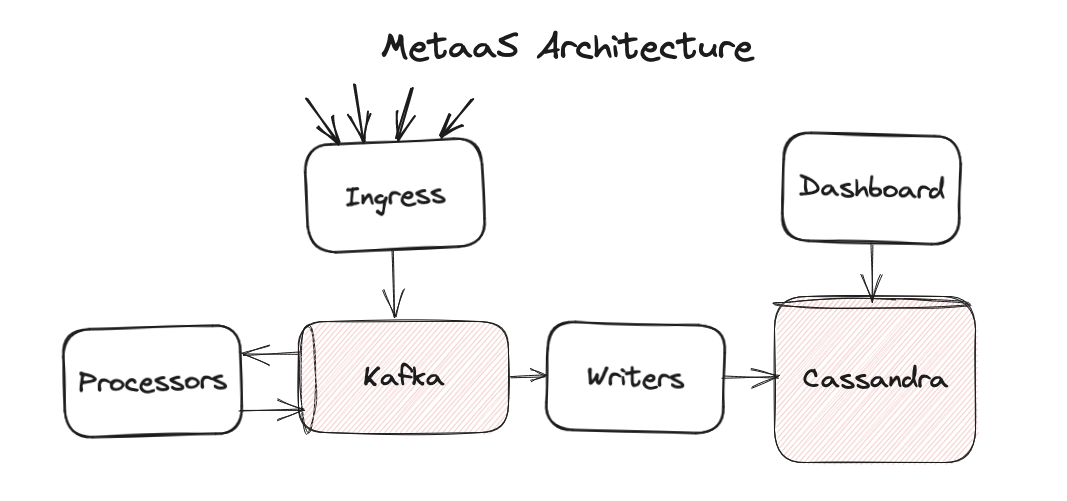

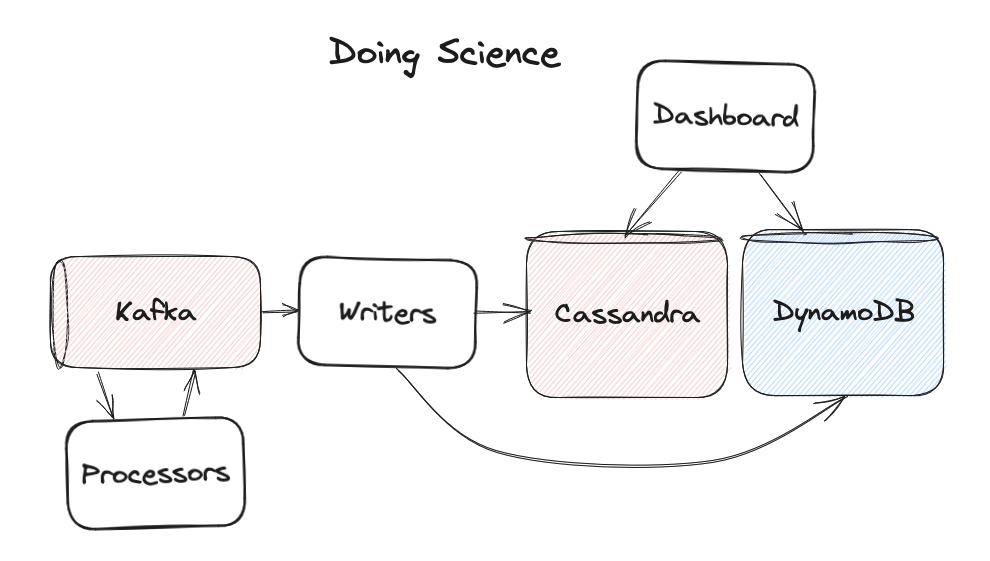

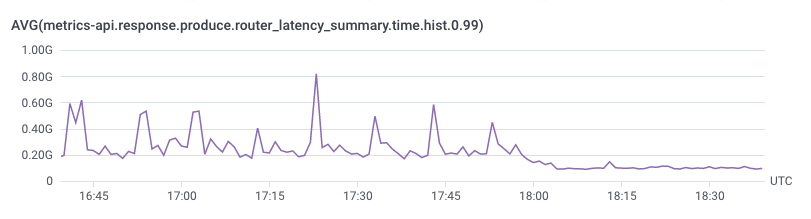

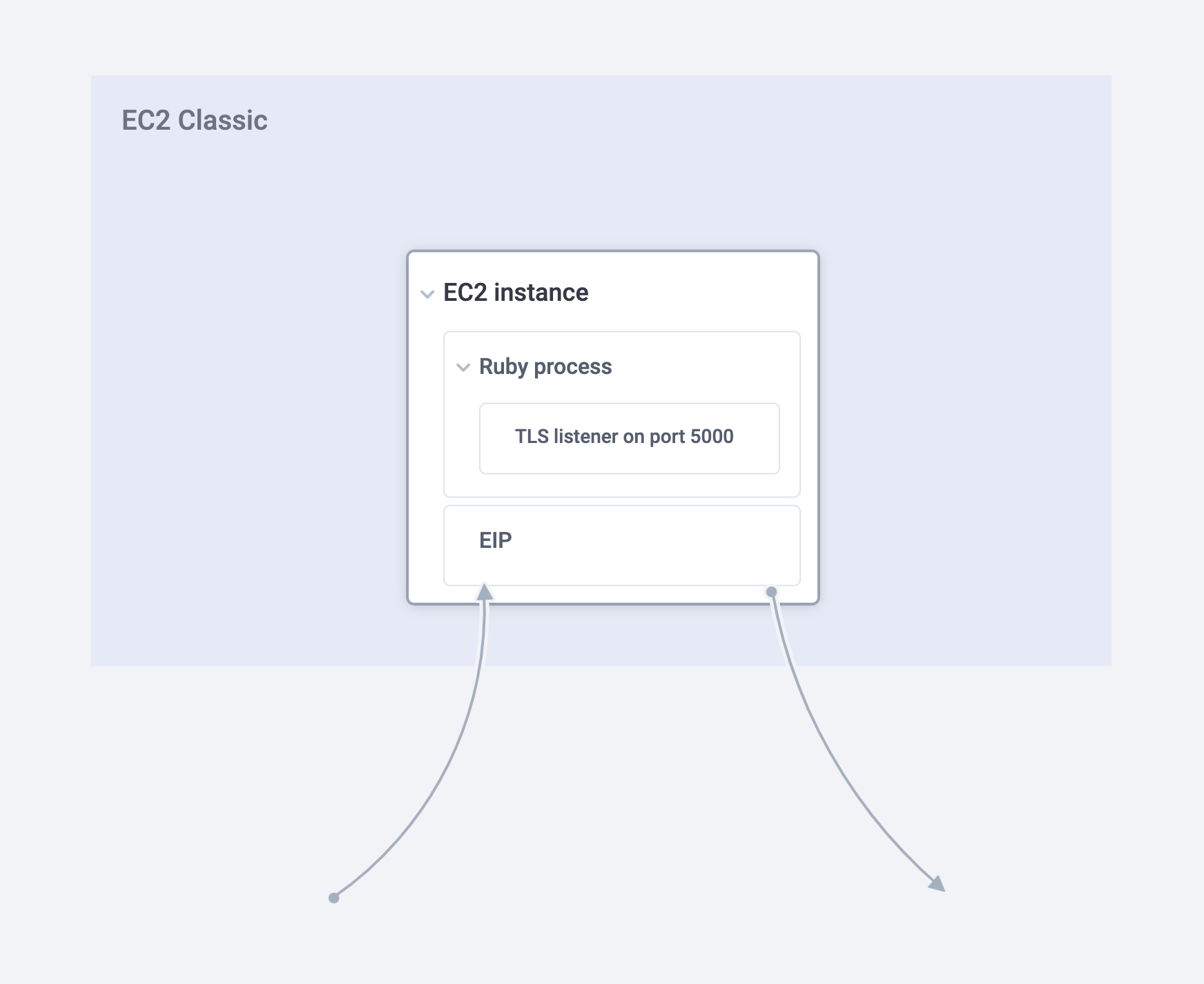

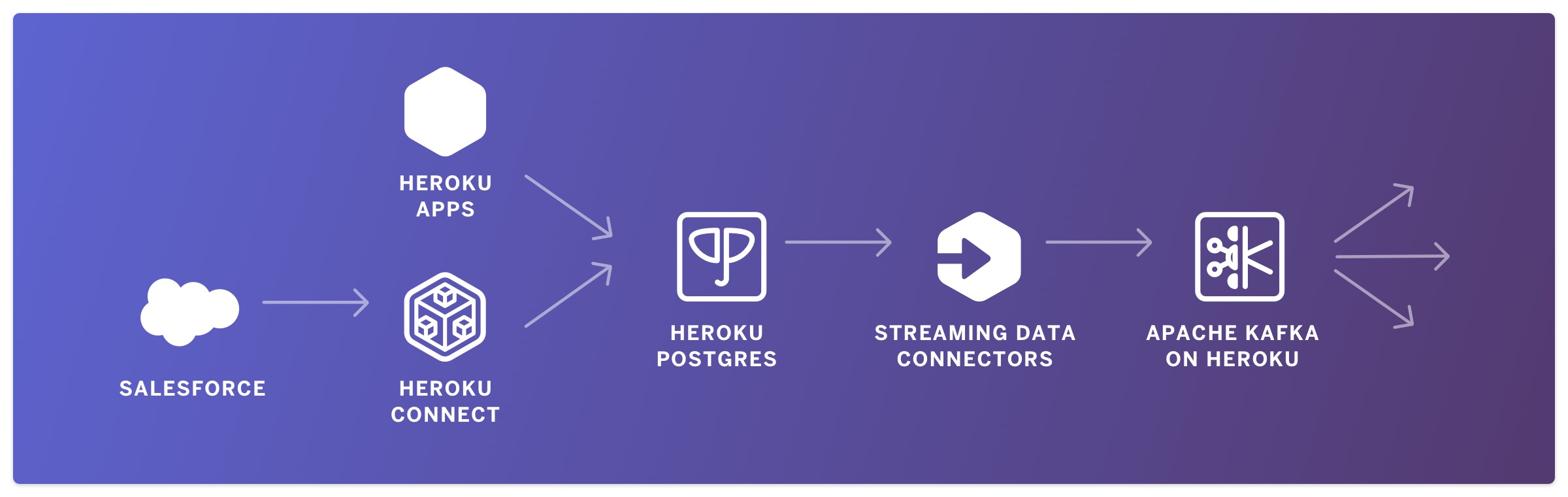

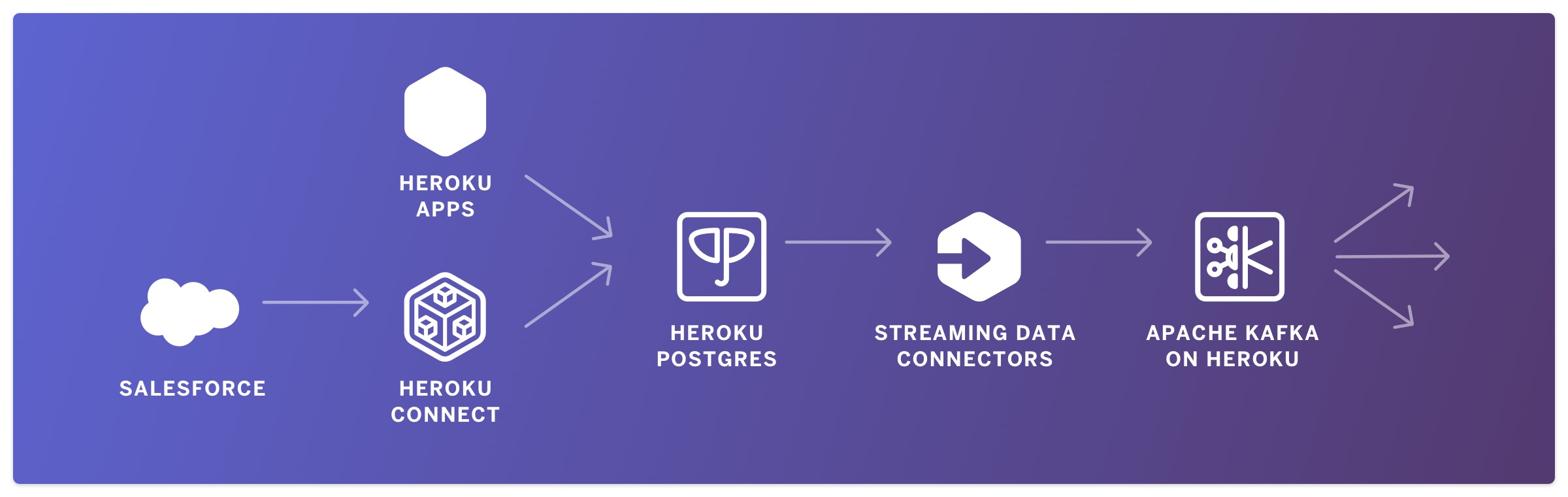

How do all the pieces fit together? Consider the following diagram:

The built-in Heroku OpenTelemetry Collector does all the heavy lifting for you.

- Fir uses the Heroku OpenTelemetry Collector to collect data from various telemetry sources such as your web application, the Heroku router, and the Heroku API.

- The collector is configured with various telemetry destinations (drains).

- The collector sends the relevant telemetry data to destinations like Heroku CLI logs, the Heroku dashboard, or other observability platforms.

OpenTelemetry drains can be defined at the space level—meaning they apply to all applications in the space—or at an individual application level. This is done using the Heroku CLI:

$ heroku telemetry -h

list telemetry drains

USAGE

$ heroku telemetry [-s ] [--app ]

FLAGS

-s, --space= filter by space name

--app= filter by app name

DESCRIPTION

list telemetry drains

EXAMPLES

$ heroku telemetry

COMMANDS

telemetry:add Add and configure a new telemetry drain. Defaults to collecting all telemetry unless otherwise specified.

telemetry:info show a telemetry drain's info

telemetry:remove remove a telemetry drain

telemetry:update updates a telemetry drain with provided attributes (attributes not provided remain unchanged)

The key to the interoperability of Heroku’s telemetry data is the Open Telemetry Protocol (OTLP). This protocol has two transports: gRPC and HTTP. Heroku supports both. While the gRPC transport is more efficient and has more features (HTTP/2 streaming, bi-directional streaming, Protocol Buffers payload), it might not be able to traverse some firewalls or be routed properly. In these cases, the HTTP transport, based on simple HTTP 1.1, may be the best option. It may depend also on the support in the SDK of your programming language.

Conclusion

As cloud-native applications become more complex and distributed, observability is no longer optional. It is a fundamental requirement for ensuring reliability, performance, and rapid debugging. OpenTelemetry is quickly becoming the industry standard for telemetry collection, and its seamless integration into Fir ensures that applications running on the platform can be monitored with minimal effort.

Fir’s underlying Kubernetes foundation allows organizations to benefit from industry-leading infrastructure without needing to manage the complexity of Kubernetes directly. This combination provides a powerful and future-proof platform that simplifies operations while ensuring full visibility into application behavior.

Fir’s reliance on open standards and technologies is a win-win because it reduces the risk of vendor lock-in for users and also benefits from the development effort of the open-source community to enhance and improve those technologies.

Additional resources

- Planting New Platform Roots in Cloud Native with Fir

- Heroku Documentation

- Cloud Native Buildpacks: Go tutorial from Heroku

- OTLP Specification 1.5.0 | OpenTelemetry

The post OpenTelemetry, Kubernetes, and Fir: Putting it All Together appeared first on Heroku.

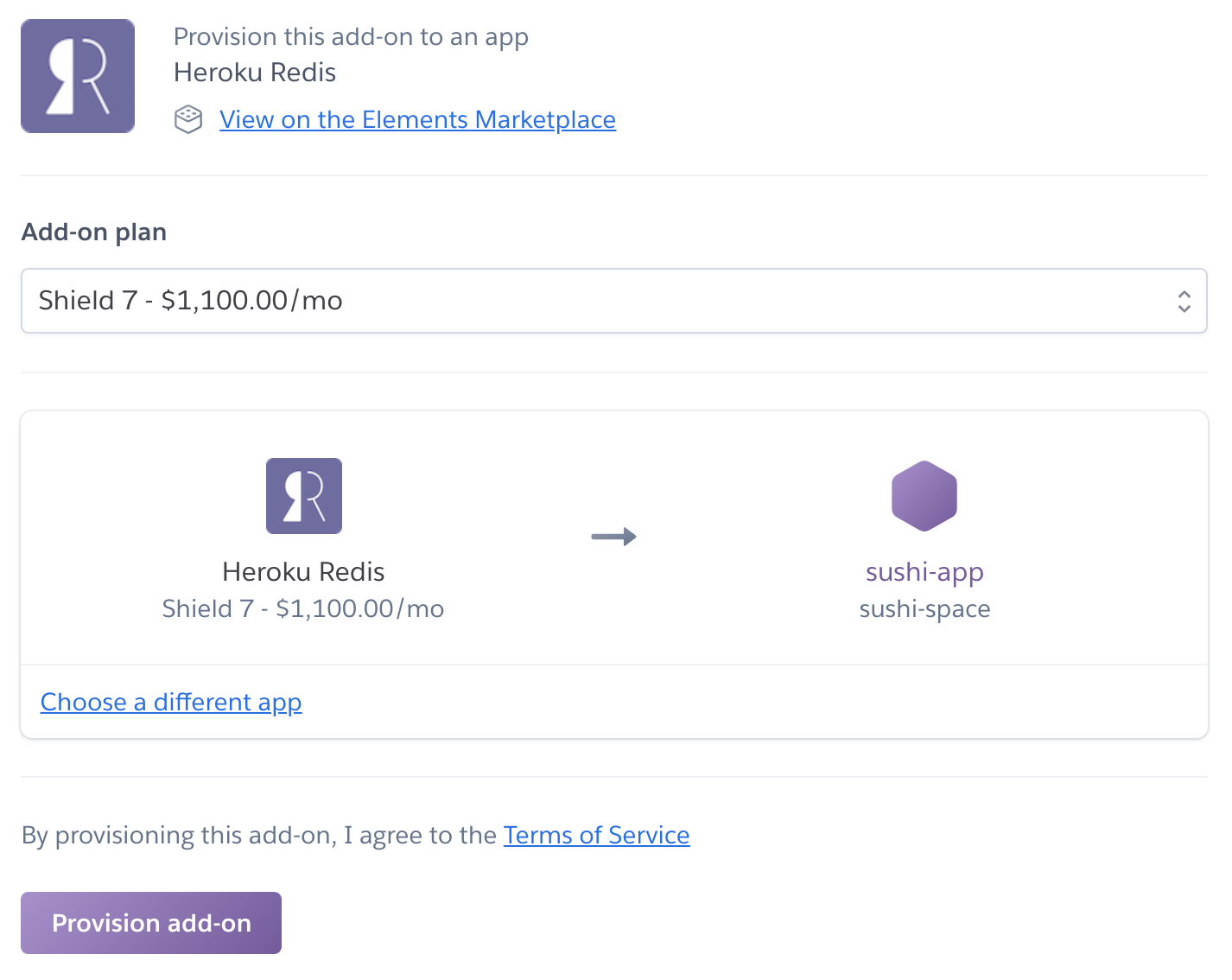

]]>For years, Heroku customers have relied on our managed in-memory data store services for caching, session management, real-time leaderboards, queueing, and so much more. Valkey is a drop-in, open-source fork of Redis OSS at v7.2, maintained by the Linux Foundation, and is backwards compatible with Redis OSS protocols…

The post Heroku Key-Value Store Now Supports Valkey 8.1 with JSON and Bloom Modules appeared first on Heroku.

]]>For years, Heroku customers have relied on our managed in-memory data store services for caching, session management, real-time leaderboards, queueing, and so much more. Valkey is a drop-in, open-source fork of Redis OSS at v7.2, maintained by the Linux Foundation, and is backwards compatible with Redis OSS protocols and clients. With Valkey v8.1, we’re continuing our commitment to providing you with a robust, scalable, and developer-friendly in-memory datastore. We are delivering this enhancement to empower you to build faster, smarter, and more efficient applications on Heroku.

What’s New in Valkey v8.1

Valkey v8.1 itself comes packed with core improvements designed to make your applications perform:

- Enhanced Performance: Experience lower latencies and higher throughput. Valkey v8.1 features a new, more memory-efficient hash table implementation and optimizations in I/O threading. This means your apps can handle more requests, faster. Active memory defragmentation also sees a significant reduction in request latency.

- Better Memory Efficiency: Squeeze more out of your instances. The new hash table design reduces memory usage per key, allowing you to store more data cost-effectively.

These improvements mean your existing Heroku Key-Value Store use cases will run faster and more efficiently, mostly without needing any changes on your end.

How to upgrade to Valkey v8.1

You can upgrade your Heroku Key-Value Store instance to the latest version with:

heroku redis:upgrade –version 8.1 –app app-nameIf you’re on mini, above command will upgrade your instance immediately. If you’re on premium or larger plans, the above command will prepare the maintenance and you can upgrade by running maintenance.

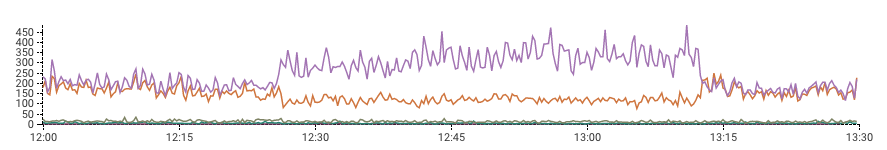

Valkey 8 Benchmark Highlights (vs Valkey 7.2)

To give you a clearer picture of the performance uplift, our internal benchmarks (combination of SETS and GETS operations) comparing Valkey 8.0 (a precursor to 8.1, sharing many core enhancements) with Valkey 7.2 on various Heroku Key-Value Store premium plans show significant improvements. Here’s a snapshot of the average gains observed:

| Heroku Plan (Cores) | Valkey 8.0 vs 7.2: Ops/sec Increase | Valkey 8.0 vs 7.2: Avg. Latency Reduction |

|---|---|---|

| premium-7 (2 cores) | ~6.5% | ~6.1% |

| premium-9 (4 cores) | ~37.4% | ~27.3% |

| premium-10 (8 cores) | ~44.7% | ~25.3% |

| premium-12 (16 cores) | ~164.6% | ~63.0% |

| premium-14 (32 cores) | ~201.8% | ~62.5% |

These benchmarks demonstrate that as you scale to plans with more CPU cores, the performance advantages of Valkey 8.x become even more pronounced, allowing your applications to handle substantially more operations per second with lower latency. While specific gains can vary by workload, the trend is clear: Valkey 8.1 is engineered for speed and efficiency. We offer a variety of Heroku Key-Value Store options to tailor to your needs.

Valkey Bloom & ValkeyJSON: Powerful New Modules for Heroku Key-Value Store

The real headline-grabbers with this release are the new, highly anticipated modules now available: Valkey Bloom and ValkeyJSON. These modules (similar to extensions on Heroku Postgres) unlock entirely new ways to leverage the power and simplicity of Heroku Key-Value Store within your Heroku applications. Let’s go over each one in more detail!

Valkey Bloom: Probabilistic Data Structures for Efficiency

Valkey Bloom introduces Bloom filters, a probabilistic data structure that excels at quickly and memory-efficiently determining if an element is probably in a set, or definitely not in a set.

- Benefit: Bloom filters can dramatically reduce the load on your primary databases and improve application efficiency by avoiding unnecessary, expensive lookups for items that don’t exist. They achieve this with remarkable memory savings – potentially over 90% compared to traditional methods for some applications.

- Use Cases:

- Cache “Probably Not Found” Queries: Before hitting your main database for an item, quickly check a Bloom filter. If it says the item is definitely not there, you save a costly database query. This is fantastic for recommendation engines (filtering out already-seen items), unique username checks, or fraud detection systems (checking against known fraudulent IPs or transaction patterns).

- Content Filtering: Efficiently check against large lists of malicious URLs or profanity.

- Ad Deduplication: Ensure users aren’t shown the same advertisement repeatedly.

While Bloom filters have a chance of a “false positive” (saying an item might be in the set when it isn’t), they guarantee no “false negatives” (if it says an item isn’t there, it’s truly not there). For many use cases, this trade-off is incredibly valuable for the performance and memory gains.

ValkeyJSON: Native JSON Handling for Rich Data Structures

Many modern applications rely heavily on JSON. With ValkeyJSON, you can now work with JSON data more naturally and efficiently within a Heroku Key-Value Store instance.

- Benefit: ValkeyJSON provides native support for storing, retrieving, and manipulating JSON documents. This allows for atomic operations on specific parts of a JSON object without needing to fetch and parse the entire thing in your application, leading to better performance and simpler application code.

- Use Cases:

- Store Complex Objects: Easily store user profiles, product catalogs with nested attributes, configuration data, or any other complex objects as JSON documents.

- Atomic Updates: Modify specific fields within a JSON document directly in Valkey. For example, update a user’s last login time or add an item to an array within a product’s attributes without rewriting the whole object.

- Simplified Development: Reduce boilerplate code for serializing and deserializing JSON in your application.

If your application deals with structured but flexible data, ValkeyJSON can significantly streamline your data management and improve performance.

Getting Started with Valkey Modules on Heroku Key-Value Store

Once you upgrade to Valkey v8.1, these powerful modules are already enabled and commands can be used. For example, to add an item to bloom filter through the CLI:

BF.ADD name-of-filter item-to-insertWe encourage you to explore the official Valkey documentation for Valkey Bloom and Valkey JSON to dive deeper into their commands and capabilities.

Valkey v8.1 & Module Power: Your Faster, More Flexible Heroku Data Store

The addition of Valkey v8.1, along with the Valkey Bloom and ValkeyJSON modules to Heroku Key-Value Store offerings, represents a significant step forward in the capabilities available to you on the Heroku platform. We’re excited to see how you’ll leverage these new tools to build the next generation of innovative applications.

As always, we’re here to support you if you get stuck. Stay tuned for more detailed guides and examples on using these new features. For now, get ready to explore the enhanced power and flexibility of Heroku Key-Value Store! Happy coding!

The post Heroku Key-Value Store Now Supports Valkey 8.1 with JSON and Bloom Modules appeared first on Heroku.

]]>In a recent Salesforce study , 84% of developers with AI say it helps their teams complete their projects faster. AI-powered, natural language development tools make it possible for anyone to create software by typing instructions in English. This increase in new apps is only…

The post Introducing the Heroku AI Platform as a Service (AI PaaS) appeared first on Heroku.

]]>In a recent Salesforce study, 84% of developers with AI say it helps their teams complete their projects faster. AI-powered, natural language development tools make it possible for anyone to create software by typing instructions in English. This increase in new apps is only matched by the increasing complexity of new technology to choose from, integrate, and maintain as a stable and secure platform – stressing the existing delivery gaps and friction in software delivery.

Heroku was founded to make deploying and scaling Ruby on Rails apps in the cloud easy for developers. Over the last decade, we remained steadfast in this mission by expanding to support more languages, databases, and now to AI. The Heroku AI PaaS brings powerful AI primitives into our opinionated platform with a simplified developer experience and automated operations to accelerate delivery of AI-powered apps and agents.

The Heroku AI PaaS adds these new capabilities to our robust cloud-native platform foundation:

- Heroku AppLink: Enable Agentforce to more use cases with custom actions, channels, tools in any programming language and securely integrated with a single click through Salesforce Flows, Apex, and Data Cloud. Agentforce is the agentic layer of the Salesforce Platform for deploying autonomous AI agents across any business function. This capability brings the ecosystem of programming languages and custom code to augment and enhance Salesforce implementations. AppLink will GA in July.

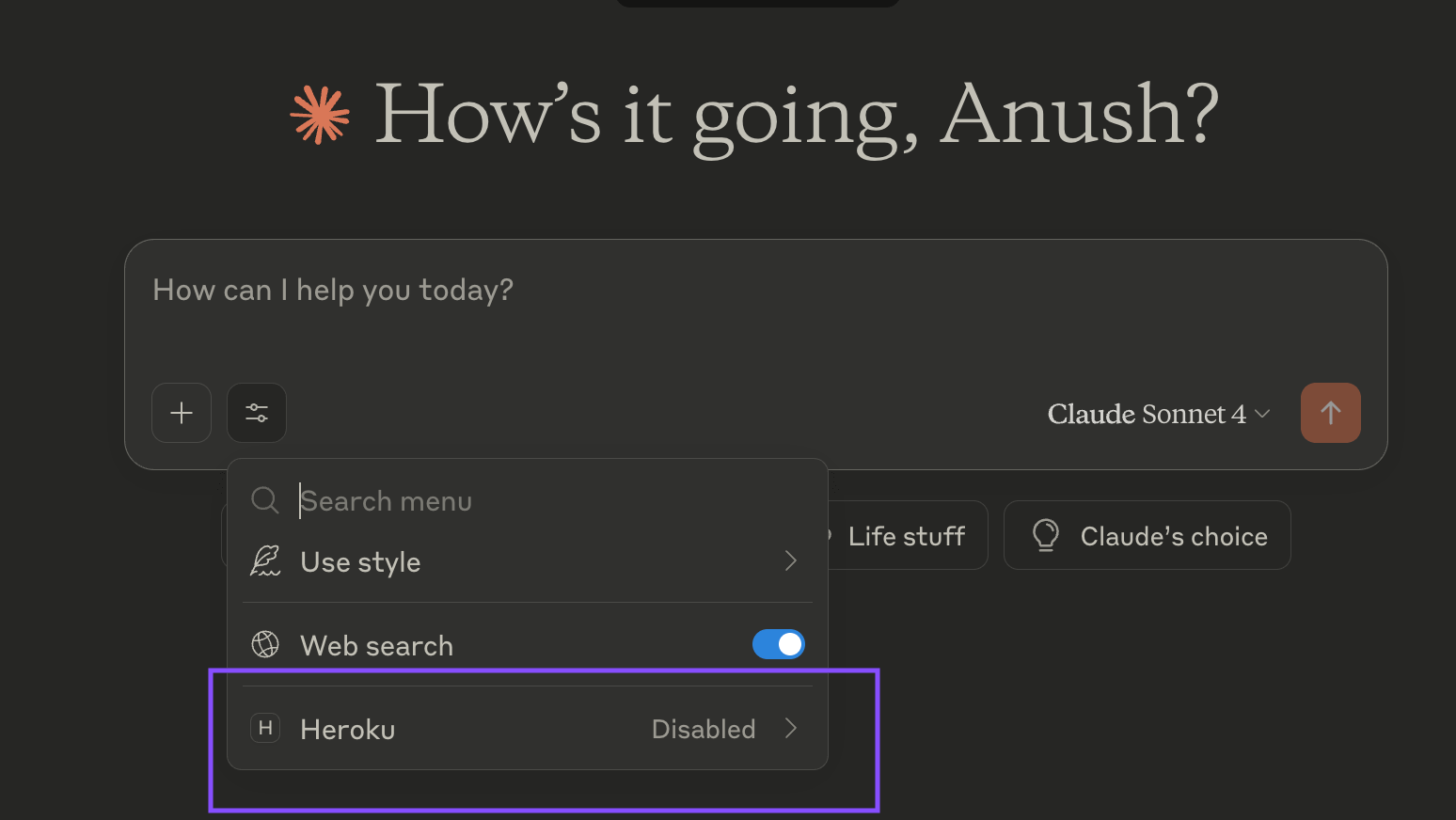

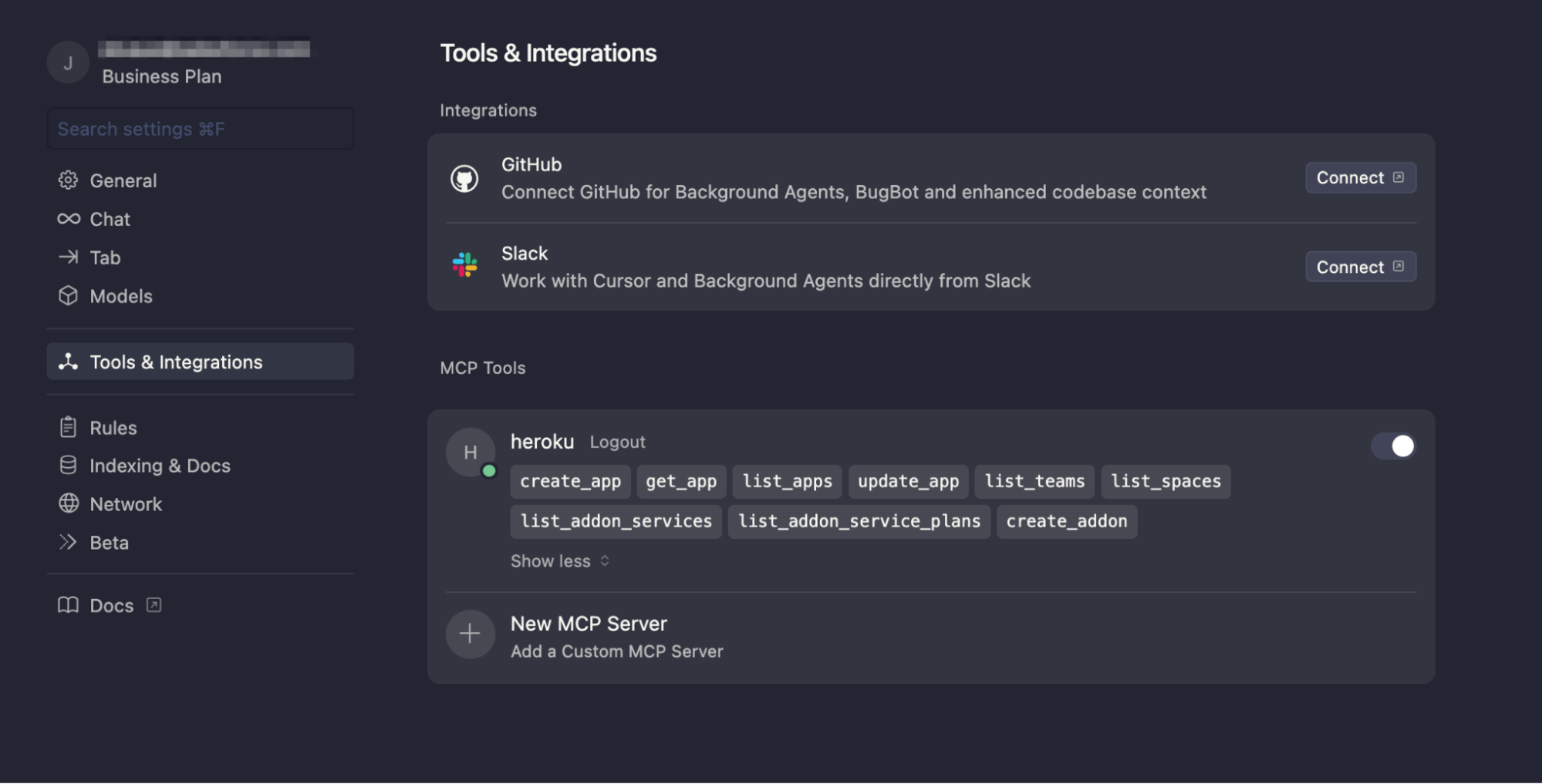

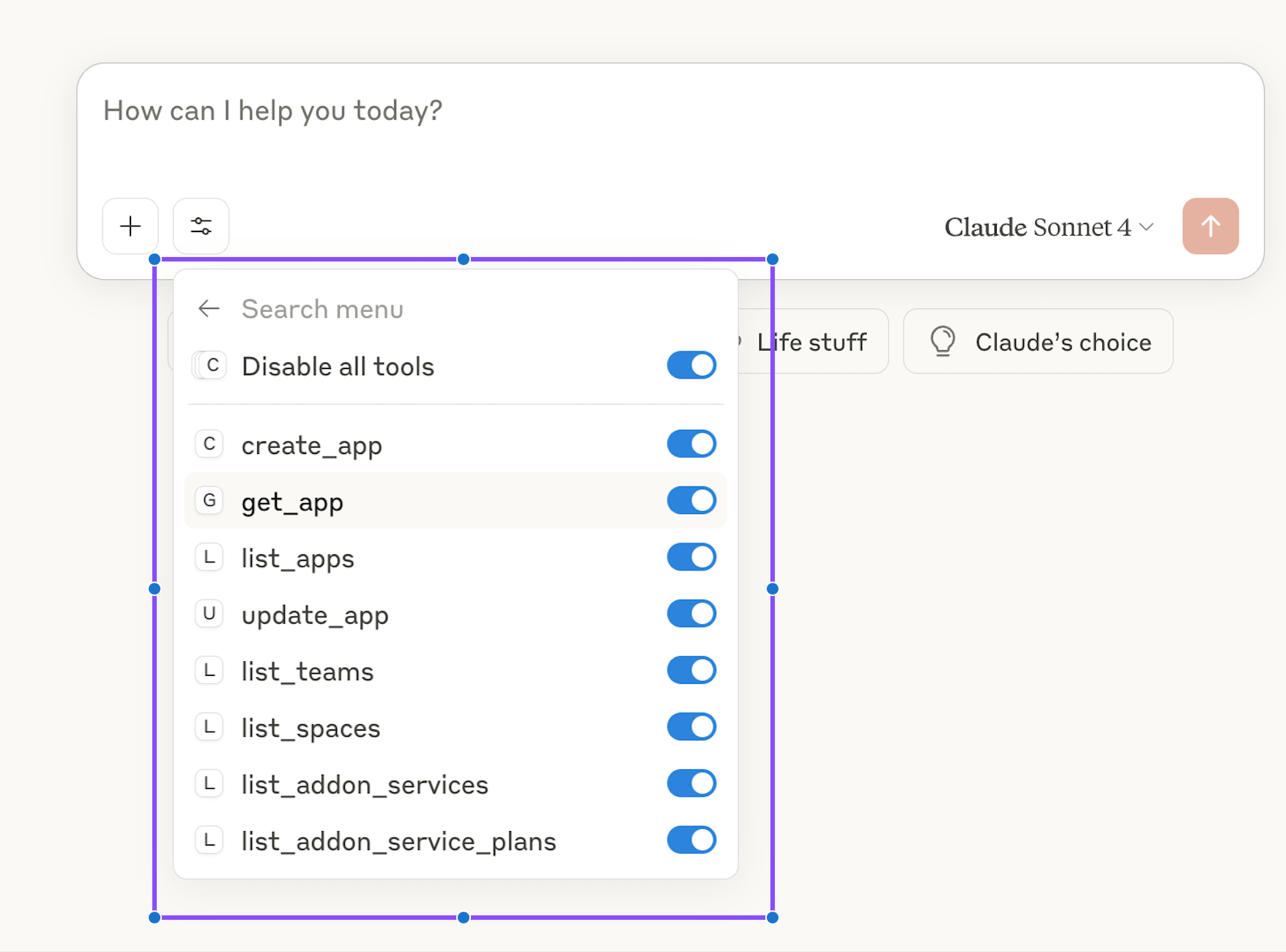

- AI-Native Tools Integration: Leverage tools like Cursor, Windsurf, and Claude Code to vibe code new apps, modernize existing apps, and add capabilities to agents. Deploy and manage code running on Heroku directly from these new developer tools. This capability is available today.

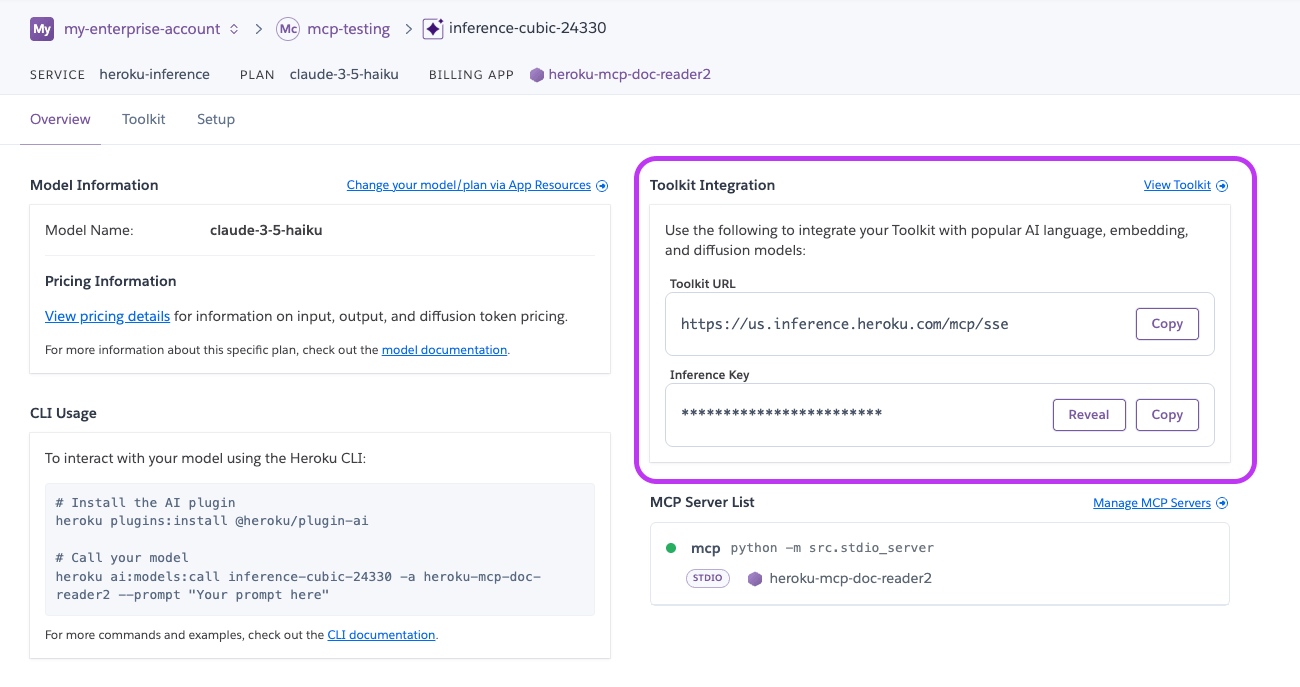

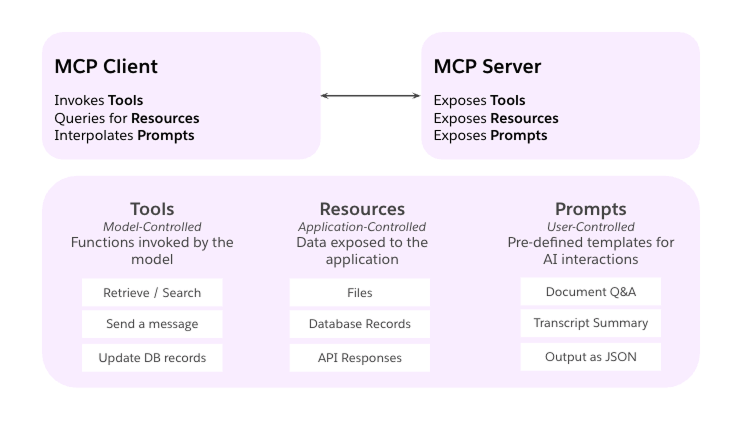

- MCP Server Toolkit: Enable your agents with more capabilities by exposing relevant tools and data with MCP Servers, a critical element of agentic workflows. Build and run custom MCP Servers, the official Heroku MCP Server, or the Heroku Remote MCP Server on Heroku and Heroku MCP Toolkits provide a unified gateway to deploy and manage multiple MCP servers on the platform. With Agentforce 3.0‘s native MCP support, bringing your Heroku Remote MCP Server to Agentforce is now a reality. MCP Server support is available today.

- Heroku Managed Inference and Agents: Brings together a set of powerful primitives into the platform that make it simple for developers to build, scale, and operate AI-powered features and applications, without the heavy lifting of managing their own AI infrastructure. Access to leading models from Claude, Cohere, Stability, and more; plus primitives for building agents that can reason, act, and call tools, developers can focus on delivering differentiated experiences for their users, rather than wrangling inference infrastructure or orchestration logic. Heroku Managed Inference and Agents is available today.

Plus these new AI apps and agents will benefit from Heroku’s built-in automation, autoscaling, observability, and dashboards for all workloads running on the platform, giving you peace of mind at any scale and the metrics to monitor ongoing performance.

Complementing the platform innovations are new additions to our partner program to increase the technical expertise available to our customers around the world that help deliver their new app projects faster and more successfully. New certifications deepen expertise in solution design and implementation while a new Heroku Expert Area in Partner Navigator makes it easy for customers to find the right partner for their needs. Learn more about the partner updates here.

Our focus has always been on the apps, the code that drives your business. The software being built today is agentic and these new apps and agents require access to data, tools, and other agents to get the job done. The Heroku AI PaaS brings powerful AI technology to your fingertips with ease of use in mind to help you deliver value to your business faster and with less complexity. Start exploring the new Heroku today.

The post Introducing the Heroku AI Platform as a Service (AI PaaS) appeared first on Heroku.

]]>Salesforce is the world’s #1 AI CRM and provider of the Agentforce digital labor platform bringing together the C360 apps and data with Heroku. Every customer’s business is unique and that…

The post Elevate Your Salesforce Consulting Practice with Heroku appeared first on Heroku.

]]>Salesforce is the world’s #1 AI CRM and provider of the Agentforce digital labor platform bringing together the C360 apps and data with Heroku. Every customer’s business is unique and that often means designing solutions that bring together multiple Salesforce Clouds with 3rd party systems, and building customized experiences around them. Heroku’s robust AI PaaS provides the flexibility developers need and the reliability businesses need to enable these solutions with custom apps, services, and native integration to the Salesforce platform.

What’s new for partners:

- Heroku Expert Area: Available in July as part of the Salesforce Partner Navigator, the new Heroku Expert Area is designed to recognize and reward partners with proven Heroku expertise.

- Trailblazer Community Group: A private community group for Salesforce Consulting Partners on their Heroku journey to get the latest updates, network with peers, and connect with the Heroku team.

- Product Benefits: Partners are provided access to Heroku products to get hands on with the technology, enable their teams, and build demos.

“At Showoff we deeply value our partnership with Heroku—not just for the powerful technology platform it provides, but for the trust and collaboration that underpin our relationship. Our customers understand the value Heroku brings, and we see that every day in the agility and scalability it enables. What sets this partnership apart is the personal connection—the ability to pick up the phone, solve problems together, and collaborate on innovative solutions that truly drive business value”

– Barry Sheehan, CCO, Showoff

Certifications to build deep expertise

Expertise is built through knowledge and experience. We have two certifications to help partners continue their enablement journey on the path to becoming a Heroku Expert. Partners can achieve Heroku Specialist and Heroku Cloud Expert distinctions, with Heroku Implementation Expert distinction launching in 2026. Information on how to achieve Heroku distinctions is available in the Heroku Partner Readiness Guide and Heroku Technical Learning Journey.

- Heroku Developer Specialist [new]: This speciality area demonstrates an individual’s ability to build and manage Heroku applications effectively with an emphasis on practical skills related to deploying, maintaining, and scaling apps on the Heroku platform. A core component of this distinction is the Heroku Developer Accredited Professional certification.

- Heroku Architect Specialist [new]: This speciality area demonstrates an individual’s ability to design and implement applications on Heroku with a focus on architectural best practices. It involves understanding complex architectural considerations and leveraging Heroku features to build robust, scalable applications. A core component of this distinction is the Heroku Architect certification.

- Heroku Cloud Expert [new]: This distinction demonstrates an organization’s proven expertise in successfully delivering Heroku implementations that achieve high customer satisfaction scores.

Access to Heroku products

Starting next month, eligible Salesforce Consultants and Cloud Resellers will get access to Heroku products within a Heroku Demo Org with access to a Heroku Dev Starter Package which includes Dynos, Heroku Connect, and General Credits. This direct access to products provides a hands-on environment to build demos, design and prototype solutions, and complements enablement for certification.