
ONNX: Add support for Resnet_backbone (Torchvision) #16887


b2f1150 to

61a5c96

Compare

@ashishkrshrivastava Could you please rebase the opencv_extra patch (to resolve the conflict)?

@alalek I was really busy for the last 2 days. I will complete it today.

8c50c73 to

95ab36b

Compare

79e50ad to

84ef9fb

Compare


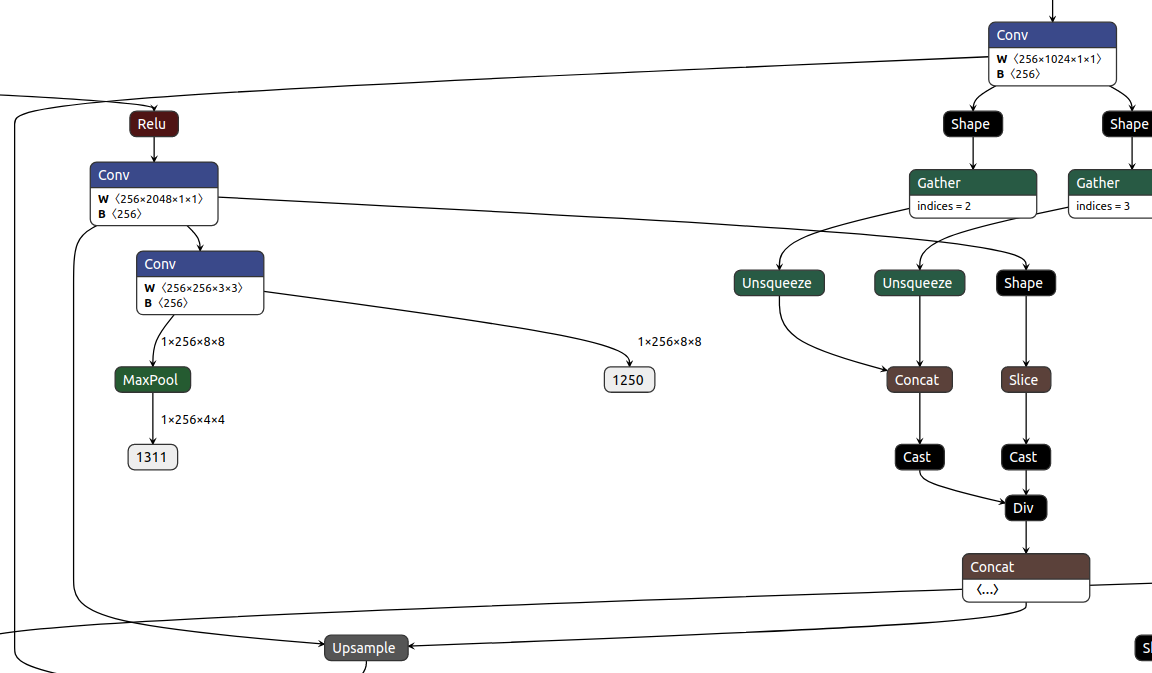

@ashishkrshrivastava, thanks for continuing to work on this! I'm wondering whether it's possible to do the calculations over constants during import and then just extend Resize parsing. What do you think? Right now it looks like we are handling a very specific case, whereas we could let the subgraph itself calculate the target size. Can you please check which nodes from the following resize subgraph are computed properly at runtime and which are not?

@dkurt, we only need some changes in onnx_importer.cpp in order to run this version of the Upsample/Resize subgraph. No fusion is needed.

```cpp
else if (layer_type == "Resize")
{
    for (int i = 1; i < node_proto.input_size(); i++)
        CV_Assert(layer_id.find(node_proto.input(i)) == layer_id.end());

    String interp_mode = layerParams.get<String>("coordinate_transformation_mode");
    CV_Assert_N(interp_mode != "tf_crop_and_resize",
                interp_mode != "tf_half_pixel_for_nn");

    layerParams.set("align_corners", interp_mode == "align_corners");
    Mat shapes = getBlob(node_proto, constBlobs, node_proto.input_size() - 1);
    CV_CheckEQ(shapes.size[0], 4, "");
    CV_CheckEQ(shapes.size[1], 1, "");
    CV_CheckTypeEQ(shapes.depth(), CV_32S, "");
    int height = shapes.at<int>(2);
    int width = shapes.at<int>(3);
    if (node_proto.input_size() == 3)
    {
        shapeIt = outShapes.find(node_proto.input(0));
        CV_Assert(shapeIt != outShapes.end());
        MatShape scales = shapeIt->second;
        height *= scales[2];
        width *= scales[3];
    }
    layerParams.set("width", width);
    layerParams.set("height", height);

    if (layerParams.get<String>("mode") == "linear") {
        layerParams.set("mode", interp_mode == "pytorch_half_pixel" ?
                                "opencv_linear" : "bilinear");
    }
    replaceLayerParam(layerParams, "mode", "interpolation");
}
else if (layer_type == "Upsample")
{
    layerParams.type = "Resize";
    if (layerParams.has("scales"))
    {
        // Pytorch layer
        DictValue scales = layerParams.get("scales");
        CV_Assert(scales.size() == 4);
        layerParams.set("zoom_factor_y", scales.getIntValue(2));
        layerParams.set("zoom_factor_x", scales.getIntValue(3));
    }
    else
    {
        // Caffe2 layer
        replaceLayerParam(layerParams, "height_scale", "zoom_factor_y");
        replaceLayerParam(layerParams, "width_scale", "zoom_factor_x");
    }
    replaceLayerParam(layerParams, "mode", "interpolation");

    if (node_proto.input_size() == 2 || (layerParams.get<String>("interpolation") == "linear" && framework_name == "pytorch")) {
        layerParams.type = "Resize";
        Mat scales = getBlob(node_proto, constBlobs, 1);
        CV_Assert(scales.total() == 4);
        if (layerParams.get<String>("interpolation") == "linear" && framework_name == "pytorch")
            layerParams.set("interpolation", "opencv_linear");
        layerParams.set("zoom_factor_y", scales.at<float>(2));
        layerParams.set("zoom_factor_x", scales.at<float>(3));
    }
}
```

Change 1. The assertion becomes

```cpp
CV_Assert_N(interp_mode != "tf_crop_and_resize",
            interp_mode != "tf_half_pixel_for_nn");
```

changed from

```cpp
CV_Assert_N(interp_mode != "tf_crop_and_resize", interp_mode != "asymmetric",
            interp_mode != "tf_half_pixel_for_nn");
```

Change 2. The scales branch becomes

```cpp
if (node_proto.input_size() == 2 || (layerParams.get<String>("interpolation") == "linear" && framework_name == "pytorch")) {
    layerParams.type = "Resize";
    Mat scales = getBlob(node_proto, constBlobs, 1);
    CV_Assert(scales.total() == 4);
    if (layerParams.get<String>("interpolation") == "linear" && framework_name == "pytorch")
        layerParams.set("interpolation", "opencv_linear");
    layerParams.set("zoom_factor_y", scales.at<float>(2));
    layerParams.set("zoom_factor_x", scales.at<float>(3));
}
```

changed from

```cpp
if (layerParams.get<String>("interpolation") == "linear" && framework_name == "pytorch") {
    layerParams.type = "Resize";
    Mat scales = getBlob(node_proto, constBlobs, 1);
    CV_Assert(scales.total() == 4);
    layerParams.set("interpolation", "opencv_linear");
    layerParams.set("zoom_factor_y", scales.at<float>(2));
    layerParams.set("zoom_factor_x", scales.at<float>(3));
}
```

So every node works properly except Resize and Upsample, and adding the changes above makes those work too.
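The size logic in the Resize branch quoted above can be read as: take H and W from the last constant input, and when the node has exactly three inputs, treat those values as factors applied to the input's spatial dimensions. Below is a minimal standalone sketch of that reading. `TargetSize` and `resolveResizeTarget` are hypothetical names for illustration only, not OpenCV API, and plain `std::vector<int>` stands in for `Mat` and `MatShape`.

```cpp
#include <cassert>
#include <stdexcept>
#include <vector>

// Hypothetical stand-in for the (height, width) pair stored in layerParams.
struct TargetSize
{
    int height;
    int width;
};

// inputShape: NCHW shape of the tensor being resized.
// lastInput:  the four values read from the node's last constant input.
// numInputs:  number of inputs of the Resize node.
TargetSize resolveResizeTarget(const std::vector<int>& inputShape,
                               const std::vector<int>& lastInput,
                               int numInputs)
{
    if (inputShape.size() != 4 || lastInput.size() != 4)
        throw std::invalid_argument("expected 4 values in NCHW order");

    int height = lastInput[2];
    int width = lastInput[3];
    if (numInputs == 3)
    {
        // Three inputs: the values act as factors on the input's H and W.
        height *= inputShape[2];
        width *= inputShape[3];
    }
    return TargetSize{height, width};
}
```

Under these assumptions, a 10x20 input with factors of 2 resolves to 20x40, while a four-input node uses the last input's H and W directly.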

eb5b0ee to

c9c6078

Compare

```cpp
constant_node->add_output(initializer.name());
opencv_onnx::AttributeProto* value = constant_node->add_attribute();
opencv_onnx::TensorProto* tensor = value->add_tensors();
tensor->CopyFrom(initializer);
```


Let's also remove the initializers in the loop once they have been copied to the constant nodes.


@dkurt If I remove the initializers inside this loop, then this function becomes useless, because it won't be able to fetch the initializer data:

opencv/modules/dnn/src/onnx/onnx_importer.cpp

Lines 133 to 147 in c9c6078

```cpp
std::map<std::string, Mat> ONNXImporter::getGraphTensors(
                                        const opencv_onnx::GraphProto& graph_proto)
{
    opencv_onnx::TensorProto tensor_proto;
    std::map<std::string, Mat> layers_weights;

    for (int i = 0; i < graph_proto.initializer_size(); i++)
    {
        tensor_proto = graph_proto.initializer(i);
        Mat mat = getMatFromTensor(tensor_proto);
        releaseONNXTensor(tensor_proto);
        layers_weights.insert(std::make_pair(tensor_proto.name(), mat));
    }
    return layers_weights;
}
```

which is being called here

opencv/modules/dnn/src/onnx/onnx_importer.cpp

Lines 330 to 334 in c9c6078

```cpp
addConstantNodesForInitializers(graph_proto);
simplifySubgraphs(graph_proto);

std::map<std::string, Mat> constBlobs = getGraphTensors(graph_proto);
// List of internal blobs shapes.
```

So we would have to fetch the data from the constant nodes instead, which means we need a function that first goes through all of the nodes and fetches the data from every constant node.

Am I right?


Let's see: if set_allocated_t works, we can keep it as is.


@dkurt , please have a look at the changes.


@dkurt Setting the tensor directly from the initializer wasn't working:

```cpp
value->set_allocated_t(initializer);
```

It gave me a segmentation fault (core dumped), so I created a new tensor and set that as the value attribute. So it is a copied tensor.

```cpp
opencv_onnx::TensorProto* tensor = initializer.New();
tensor->CopyFrom(initializer);
value->set_allocated_t(tensor);
```
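One plausible reading of the crash, offered as an assumption rather than a diagnosis: protobuf's `set_allocated_*` setters take ownership of the pointer they receive, so handing over an object that the graph still owns would leave two owners for the same memory. The toy model below uses only the standard library to show the copy-then-hand-over pattern. `ToyTensor`, `ToyAttribute` and `attachCopy` are hypothetical names and are not part of protobuf or OpenCV.

```cpp
#include <cassert>
#include <memory>
#include <string>
#include <vector>

// Toy stand-in for a tensor message.
struct ToyTensor
{
    std::string name;
    std::vector<float> data;
};

// Toy stand-in for an attribute whose setter takes ownership of the
// pointer, mirroring the ownership contract of set_allocated_* setters.
class ToyAttribute
{
public:
    void set_allocated_t(ToyTensor* tensor) { t_.reset(tensor); }
    const ToyTensor* t() const { return t_.get(); }

private:
    std::unique_ptr<ToyTensor> t_;
};

// Copy the initializer into a fresh heap object and hand that copy over,
// so the attribute owns memory that nothing else owns.
void attachCopy(const ToyTensor& initializer, ToyAttribute& value)
{
    ToyTensor* tensor = new ToyTensor(initializer);
    value.set_allocated_t(tensor);
}
```

In this model the attribute ends up holding its own copy, and the original initializer remains valid and untouched.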

```cpp
tensor->CopyFrom(initializer);
releaseONNXTensor(initializer);
value->set_allocated_t(tensor);
```

where releaseONNXTensor is

```cpp
void releaseONNXTensor(opencv_onnx::TensorProto& tensor_proto)
{
    if (!tensor_proto.raw_data().empty()) {
        delete tensor_proto.release_raw_data();
    }
}
```

I think you meant the same.


Something like that but we have no raw_data in this case


All of the initializers store their data as raw_data. I have checked the data types.

frozenBatchNorm2d.onnx

- bias: raw data
- running_mean: raw data
- running_var: raw data
- weight: raw data

upsample_unfused_two_inputs_opset9_torch1.4.onnx

- conv1.bias: raw data
- conv1.weight: raw data
- conv2.bias: raw data
- conv2.weight: raw data

upsample_unfused_two_inputs_opset11_torch1.4.onnx

- conv1.bias: raw data
- conv1.weight: raw data
- conv2.bias: raw data
- conv2.weight: raw data

But yes, we can't judge by looking at just 3 models. Do you think we should modify the function void releaseONNXTensor(opencv_onnx::TensorProto& tensor_proto) to handle all data types, or should a new function be created to check and delete all data types, leaving this function as it is?


No, that's not necessary. We can just call method clear_initializer.


I think we cannot call graph_proto.clear_initializer() before calling getGraphTensors(graph_proto).

And if we call it inside addConstantNodesForInitializers(graph_proto), we get the following error:

error: (-204:Requested object was not found) Blob 23 not found in const blobs in function 'getBlob'
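The ordering constraint described here can be illustrated with a toy model: constant blobs are read from the graph's initializer list, so clearing that list first leaves nothing to look up later. This is only a sketch under that assumption. `ToyGraph`, `collectConstBlobs` and `importConstBlobs` are hypothetical names, with standard containers standing in for `GraphProto` and the `constBlobs` map.

```cpp
#include <cassert>
#include <map>
#include <string>
#include <utility>
#include <vector>

using Blob = std::vector<float>;

// Toy stand-in for a graph that only carries named initializers.
struct ToyGraph
{
    std::vector<std::pair<std::string, Blob>> initializers;
};

// Plays the role of getGraphTensors: builds a name -> blob map
// from whatever initializers are still present in the graph.
std::map<std::string, Blob> collectConstBlobs(const ToyGraph& graph)
{
    std::map<std::string, Blob> blobs;
    for (const auto& init : graph.initializers)
        blobs.emplace(init.first, init.second);
    return blobs;
}

// Safe ordering: read the initializers first, clear them afterwards.
std::map<std::string, Blob> importConstBlobs(ToyGraph& graph)
{
    std::map<std::string, Blob> blobs = collectConstBlobs(graph);
    graph.initializers.clear();
    return blobs;
}
```

If the list is cleared before `collectConstBlobs` runs, a lookup for blob "23" finds nothing, which matches the shape of the error quoted above.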

cea7ad8 to

3d51aa3

Compare

```cpp
layerParams.set("zoom_factor_y", scales.at<float>(2));
layerParams.set("zoom_factor_x", scales.at<float>(3));
if (layerParams.get<String>("mode") == "linear" && framework_name == "pytorch")
    layerParams.set("mode", "opencv_linear");
```


I think this logic should be independent of where the scales come from. Let's move it out of the if-else scope.


Done !

f6a3c78 to

3cba646

Compare

Please take a look at merge artifacts for opencv_extra PR: https://github.com/opencv/opencv_extra/pull/734/files#r407673861.

Done!

@ashishkrshrivastava Right now I need to export my Unet/Resnet with opset=9, otherwise the Upsample block is split into many cast/concat blocks as described here (which won't load in OpenCV): pytorch/pytorch#27376 (comment)

@JulienMaille Support for unfused Upsample and Resize with opset 9 and opset 11 has already been added; see #16709. Those subgraphs scale both H and W, though, while the example you shared scales only H, so its subgraph is different. In my view that subgraph will still work: without fusion, data flows through each node individually, and OpenCV currently supports all of those operator nodes. I would love to add fusion for such subgraphs if needed, but for now it will work without fusion.

3cba646 to

74ce3b0

Compare

74ce3b0 to

e0ac0cf

Compare


👍 Looks good to merge, thank you!

Merge with extra: opencv/opencv_extra#734

resolves #16876

Pull Request Readiness Checklist

See details at https://github.com/opencv/opencv/wiki/How_to_contribute#making-a-good-pull-request

Patch to opencv_extra has the same branch name.

This PR adds support for resnet_backbone by adding:

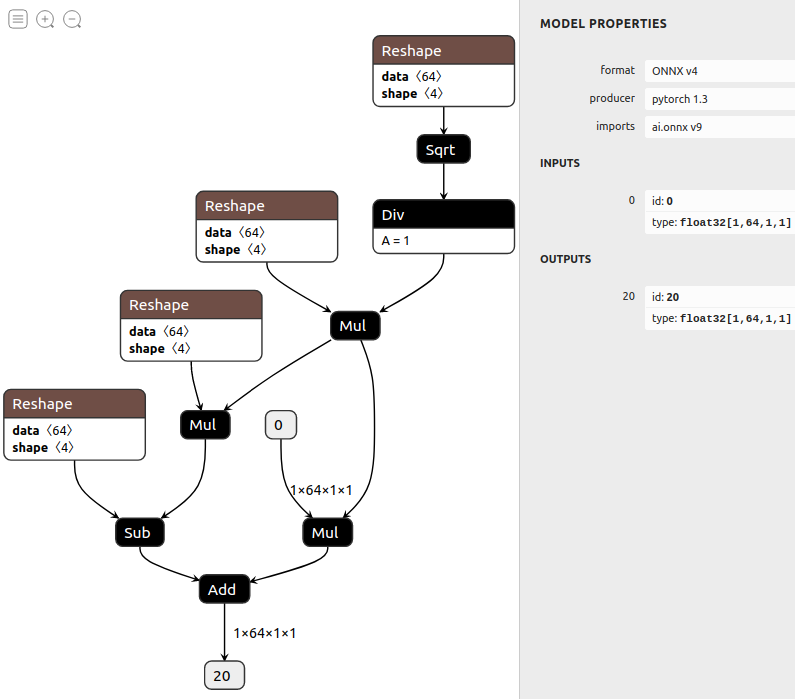

Unfused version of batchnorm (latest version of PyTorch, 1.4).

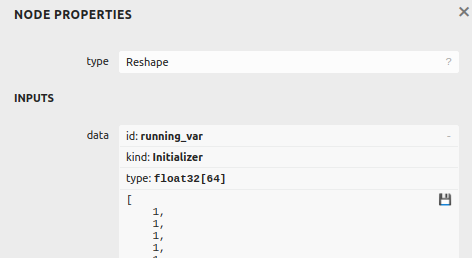

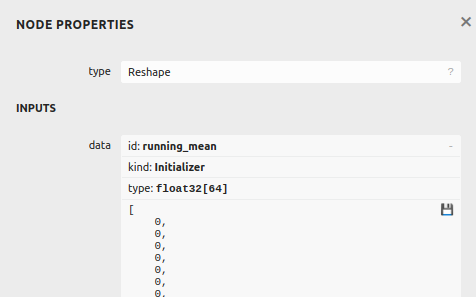

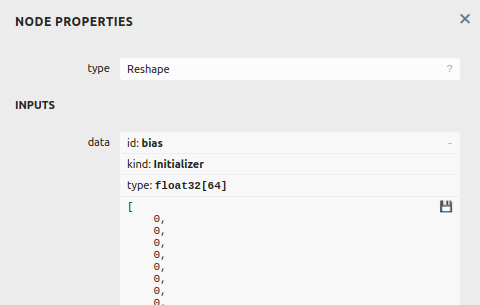

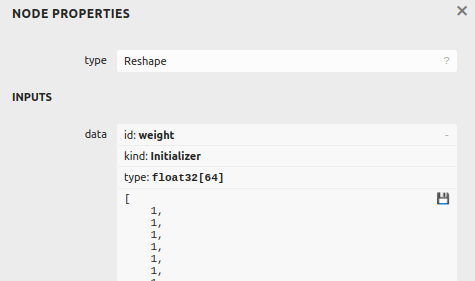

Below are the node properties of the Reshape nodes.

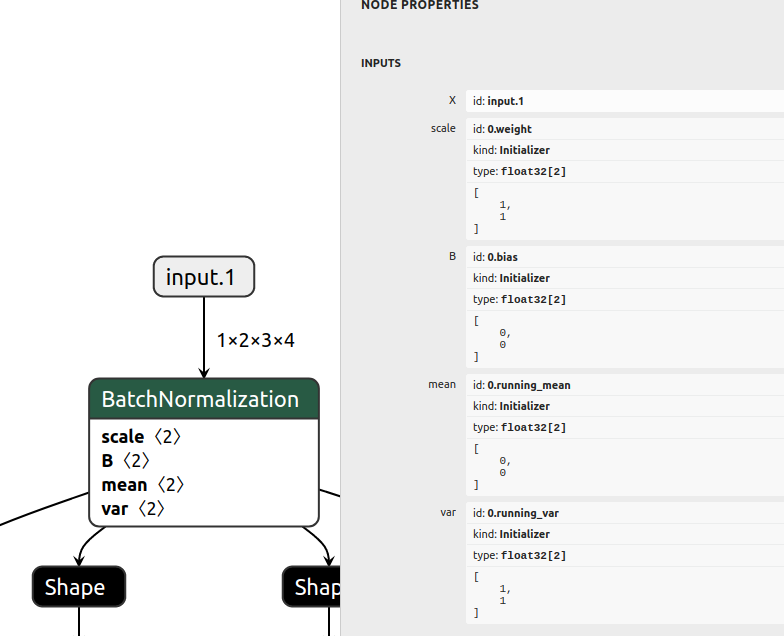

The fused version of batchnorm is attached below to show the comparison between unfused and fused batchnorm.

The initializer inputs of the Reshape nodes in the unfused subgraph above act the same as the initializer inputs of the fused batchnorm.

Initializers are not considered nodes.

Another example of initializers is the weight and bias of a Convolution node.
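The equivalence between the two forms can be checked numerically. The sketch below assumes the unfused graph follows the usual frozen batchnorm formulation, in which a per-channel scale and shift are precomputed from weight, bias, running_mean and running_var. The graph images are not reproduced here, so this is an assumption. `BatchNormParams`, `fusedBatchNorm` and `unfusedBatchNorm` are hypothetical names operating on a single scalar for clarity.

```cpp
#include <cassert>
#include <cmath>

// Per-channel batchnorm parameters, named after the initializers
// listed for frozenBatchNorm2d.onnx; eps is an assumed constant.
struct BatchNormParams
{
    float weight;
    float bias;
    float runningMean;
    float runningVar;
    float eps;
};

// Fused form: the textbook inference-time batchnorm expression.
float fusedBatchNorm(float x, const BatchNormParams& p)
{
    return (x - p.runningMean) / std::sqrt(p.runningVar + p.eps) * p.weight + p.bias;
}

// Unfused form: precompute scale and shift, then apply a multiply and an add.
float unfusedBatchNorm(float x, const BatchNormParams& p)
{
    const float scale = p.weight / std::sqrt(p.runningVar + p.eps);
    const float shift = p.bias - p.runningMean * scale;
    return x * scale + shift;
}
```

Both functions agree up to floating-point rounding, which is why an importer can treat the unfused subgraph as a regular batchnorm once its constants are available.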

It also adds support for the Upsample subgraph with two inputs, shown below.