| CARVIEW |

Navigation Menu

-

-

Notifications

You must be signed in to change notification settings - Fork 56.3k

Add IPPE method for planar pose estimation in solvePnP #14362

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Conversation

| int flags ); | ||

|

|

||

| //TODO: doc | ||

| CV_EXPORTS_W int solvePnPGeneric( InputArray objectPoints, InputArray imagePoints, |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Other possibility could be to return a struct of pose info instead of all the parameters. But I have not seen this yet in OpenCV, due to issue with Python/Java bindings maybe?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Python/Java bindings

I believe, bindings generator should support non-complex structures.

modules/calib3d/src/solvepnp.cpp

Outdated

| //TODO: | ||

| // else | ||

| // { | ||

| // // use CV_32F if all PnP inputs are CV_32F and outputs are empty |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

use CV_32F if all PnP inputs are CV_32F and outputs are empty

EPnP should return always in double precision:

opencv/modules/calib3d/src/epnp.cpp

Lines 195 to 196 in 1f517b8

| Mat(3, 1, CV_64F, ts[N]).copyTo(t); | |

| Mat(3, 3, CV_64F, Rs[N]).copyTo(R); |

Same thing with most of the methods, e.g. for P3P:

opencv/modules/calib3d/src/p3p.cpp

Lines 55 to 56 in 1f517b8

| cv::Mat(3, 1, CV_64F, translation).copyTo(tvec); | |

| cv::Mat(3, 3, CV_64F, rotation_matrix).copyTo(R); |

For iterative:

opencv/modules/calib3d/src/calibration.cpp

Lines 1251 to 1257 in 1f517b8

| _r = cvMat( rvec->rows, rvec->cols, | |

| CV_MAKETYPE(CV_64F,CV_MAT_CN(rvec->type)), param ); | |

| _t = cvMat( tvec->rows, tvec->cols, | |

| CV_MAKETYPE(CV_64F,CV_MAT_CN(tvec->type)), param + 3 ); | |

| cvConvert( &_r, rvec ); | |

| cvConvert( &_t, tvec ); |

For me, even if all the input are in CV_32F, rvec and tvec should be overwritten in double precision.

I have changed the code to return rvec and tvec always in CV_64F depth. Internally, all these methods should use double for calculation.

Documentation should be updated to reflect that.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

rvec and tvec should be overwritten in double precision

Not always.

Use this pattern:

if (R.needed()) _R.convertTo(R, R.empty() ? CV_64FC1 : R.type());

(however it is buggy in some cases - doesn't support empty Mat_<float>, but should raise an exception)

Internally, all these methods should use double for calculation.

This is the right way.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

This makes sense:

- always return in

CV_64FC1 - except if

rvecortvecis not empty

Need to check that it works properly.

modules/calib3d/src/solvepnp.cpp

Outdated

| else if (flags == SOLVEPNP_IPPE) | ||

| { | ||

| double val = 0.0; | ||

| bool planar = isPlanarObjectPoints(opoints, 1e-5, val); |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I have added a method to check that the input points are indeed planar. Otherwise, we return false.

Which threshold to use? Here we have:

opencv/modules/calib3d/src/calibration.cpp

Line 1112 in 1f517b8

| if( W[2]/W[1] < 1e-3) |

In IPPE there is #define IPPE_SMALL 1e-7

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

The threshold is a bit arbitrary. To keep consistent with OpenCV 1e-3 should be fine.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I have changed the threshold to use 1e-3 for IPPE_SMALL too.

| Point3f pmin = Point3f(-1, -1, 5), | ||

| Point3f pmax = Point3f(1, 1, 10)) | ||

| { | ||

| RNG rng = cv::theRNG(); // fix the seed to use "fixed" input 3D points |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Should we use instead ts->get_rng()?

| { | ||

| rvec.at<double>(i,0) = rng.uniform(minVal, maxVal); | ||

| tvec.at<double>(i,0) = rng.uniform(minVal, maxVal/10); | ||

| tvec.at<double>(i,0) = (i == 2) ? rng.uniform(minVal*10, maxVal) : rng.uniform(-maxVal, maxVal); |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

We need tz>0 but tx and ty can be negative.

| Mat intrinsics, distCoeffs; | ||

| generateCameraMatrix(intrinsics, rng); | ||

| if (method == 4) intrinsics.at<double>(1,1) = intrinsics.at<double>(0,0); | ||

| //UPnP is mapped to EPnP |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I have commented all the specific code for UPnP, DLS? since they are internally remapped to EPnP.

It is misleading to have different results, thresholds between EPnP and UPnP, DLS.

| //cout << error << " " << inliers.size() << " " << eps[method] << endl; | ||

| if (error > maxError) | ||

| maxError = error; | ||

| isTestSuccess = isTestSuccess && rvecDiff < eps[method] && tvecDiff < eps[method]; |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Ultimately, I think that we should have different thresholds for translation and rotation. Pratically, we use the same values...

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I believe, robust test check should call projectPoints() with estimated rvec/tvec and check relative norm L2.

| } | ||

|

|

||

| TEST(Calib3d_SolvePnP, double_support) | ||

| TEST(Calib3d_SolvePnPRansac, double_support) |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Not sure of the validity of this test (same thing with TEST(Calib3d_SolvePnPRansac, input_type)).

Not sure about how the images points are defined. Since we just check that we got the same results between float and double input, I guess it is more or less OK?

|

Tests are tricky. Input are generated randomly but we got lots of bad results even when using image points projected by the ground truth pose. Is there some issue when we generate a camera pose where most of the points are not visible for instance? We don't test with noise for image points whereas in real life there are many source of uncertainties. |

modules/calib3d/src/ippe.cpp

Outdated

| canonicalObjPoints.at<Vec2d>(i)[1] = UZeroAligned.at<double>(1, i); | ||

| //or | ||

| //TODO: | ||

| // CV_DbgAssert(abs(UZeroAligned.at<double>(2, i)) <= IPPE_SMALL); |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Should we keep the CV_DbgAssert or maybe find a way to return false to not interrupt the main program?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I believe, CV_Error() with Error::StsNoConv is fine here.

not interrupt the main program

"main program" should expect that OpenCV C++ functions can raise exceptions and handle that cases.

a58a857 to

8eeec7e

Compare

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Thank you!

Please take a look on comments below.

| int flags ); | ||

|

|

||

| //TODO: doc | ||

| CV_EXPORTS_W int solvePnPGeneric( InputArray objectPoints, InputArray imagePoints, |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Python/Java bindings

I believe, bindings generator should support non-complex structures.

| SOLVEPNP_AP3P = 5, //!< An Efficient Algebraic Solution to the Perspective-Three-Point Problem @cite Ke17 | ||

| SOLVEPNP_MAX_COUNT //!< Used for count | ||

| enum { SOLVEPNP_ITERATIVE = 0, | ||

| SOLVEPNP_EPNP = 1, //!< EPnP: Efficient Perspective-n-Point Camera Pose Estimation @cite lepetit2009epnp |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Could you please change indentation for 4 spaces here?

It would be nice to add some name for this enum too:

enum SolvePnPMethod {

SOLVEPNP_ITERATIVE = 0,

...

#ifndef CV_DOXYGEN

SOLVEPNP_MAX_COUNT

#endif

};

modules/calib3d/src/ippe.cpp

Outdated

|

|

||

| Mat pts = _targetPoints.getMat(); | ||

| H_.create(3, 3, CV_64FC1); | ||

| Mat H = H_.getMat(); |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Matx33d H;

H( , ) = ...

H.copyTo(H_);

or Mat(H, false).copyTo(H_);

modules/calib3d/src/solvepnp.cpp

Outdated

| //TODO: | ||

| // else | ||

| // { | ||

| // // use CV_32F if all PnP inputs are CV_32F and outputs are empty |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

rvec and tvec should be overwritten in double precision

Not always.

Use this pattern:

if (R.needed()) _R.convertTo(R, R.empty() ? CV_64FC1 : R.type());

(however it is buggy in some cases - doesn't support empty Mat_<float>, but should raise an exception)

Internally, all these methods should use double for calculation.

This is the right way.

modules/calib3d/src/solvepnp.cpp

Outdated

| Mat w, u, vt; | ||

| SVDecomp(objectPointsCentred, w, u, vt); | ||

| val = w.at<double>(2)/w.at<double>(1); | ||

| return (w.at<double>(2)/w.at<double>(1) < threshold); |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

reuse val?

- fabs()?

a / b < c==>a < c * b?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I have used the multiplication.

I don't know exactly what this quantity represents exactly.

fabs is not used here :

opencv/modules/calib3d/src/calibration.cpp

Line 1112 in d0032b0

| if( W[2]/W[1] < 1e-3) |

| //cout << error << " " << inliers.size() << " " << eps[method] << endl; | ||

| if (error > maxError) | ||

| maxError = error; | ||

| isTestSuccess = isTestSuccess && rvecDiff < eps[method] && tvecDiff < eps[method]; |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I believe, robust test check should call projectPoints() with estimated rvec/tvec and check relative norm L2.

|

This is a great start. I have a couple of comments. Efficiency: For solvePnPGeneric, maybe there could be an input flag to bypass non-trivial error checks (e.g. that the model is planar). This would allow for faster computation. If error checks are disabled, it is the user's responsibility to ensure the data they pass to solvePnPGeneric is correct. Generality: solvePnP's iterative solver (SOLVEPNP_ITERATIVE flag) is a bit messy in the way that it handles initialization. The iterative solver requires an initial pose estimate. Currently there are two ways the initial pose estimate is established. The first is by the user setting the flag useExtrinsicGuess=true, in which case the inputted rvec and tvec are used as the initial estimate. The second way is by setting the flag useExtrinsicGuess=false, and then the initial estimate is computed using an existing non-iterative pnp method. Currently, if the model is planar, the non-iterative method used is Zhang's method (calibration.cpp line 1115), which is bad because it can only return one pose solution and can be unstable. If the model is non-planar, the method is the DLT (calibration.cpp line 1171). I would say that a nice generic interface for pnp would allow the user to specify 1) the pnp method used for initialization (non-iterative - requires no initial solution) and 2) the pnp method used for refining the solution(s) provied by 1). IPPE can then replace Zhang as the default pnp method for 1). For 2), currently the options could be either "none" (no solution refinement) or OpenCV's Levenberg–Marquardt implementation, where each pose solution from 1) should be refined with LM. |

|

I have added the checks only if See also discussion in #14181 about extrinsic guess. |

77548ed to

566c570

Compare

|

Hi! Just wondering if you need me to assist? |

86a65ce to

cf0ed60

Compare

|

The PR should be ready on my side. Main changes:

@tobycollins |

|

For the main changes: solveP3P, all real solutions should have zero reprojection error up to numerical precision, so there's no need to sort them. |

|

There is something a little bit odd. Log

I just did a quick test. |

|

@catree Sorry for the delayed reply. Regarding the duplicate solutions, it is because the quartic solver I put here always tries to get four solutions, even if two of them may be complex (and they will share the same real part), so the two duplicate roots are actually the real parts of two conjunctive complex ones. This may capture some real roots that are computed to be complex numbers with super small imagine part due to numerical errors instead of rejecting them. I believe it is ok to change the code so that we reject all complex roots (either true ones or the ones caused by numerical errors). P3P is usually used in RANSAC, missing one root in one iteration (which does not happen frequently) is acceptable. |

|

@tonyke1993 |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Thank you!

Please check comments below.

modules/calib3d/src/ippe.cpp

Outdated

| // of this distribution and at https://opencv.org/license.html | ||

|

|

||

| // This file is based on files from packages softfloat and fdlibm | ||

| // issued with the following licenses: |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

from packages softfloat and fdlibm

Please remove/replace this.

modules/calib3d/src/ippe.cpp

Outdated

| ata01 = a00 * a10 + a01 * a11; | ||

| ata11 = a10 * a10 + a11 * a11; | ||

|

|

||

| gamma = sqrt(0.5 * (ata00 + ata11 + sqrt((ata00 - ata11) * (ata00 - ata11) + 4.0 * ata01 * ata01))); |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Should we check?

sqrt()argument is not negative (due rounding errors)gammais not close to zero (division is below) or raiseCV_Error(Error::StsNoConv, "...");

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I have added the two checks with CV_Error.

modules/calib3d/src/ippe.cpp

Outdated

| { | ||

| canonicalObjPoints.at<Vec2d>(i)[0] = UZeroAligned.at<double>(0, i); | ||

| canonicalObjPoints.at<Vec2d>(i)[1] = UZeroAligned.at<double>(1, i); | ||

| // assert(abs(UZeroAligned.at<double>(2, i)) <= IPPE_SMALL); |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

needed?

modules/calib3d/src/ippe.cpp

Outdated

| float dx, dy; | ||

| for (int i = 0; i < n; i++) | ||

| { | ||

| if (projectedPoints.depth() == CV_32F) |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

projectedPoints.depth()

Probably it is better to cache this value into some variable outside of the loop.

|

|

||

| double nx = (p1y - p2y)*(p1z - p3z) - (p1y - p3y)*(p1z - p2z); | ||

| double ny = (p1x - p3x)*(p1z - p2z) - (p1x - p2x)*(p1z - p3z); | ||

| double nz = (p1x - p2x)*(p1y - p3y) - (p1x - p3x)*(p1y - p2y); |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

BTW, there are cross() implementations for Vec3d / Point3d types.

modules/calib3d/src/ippe.hpp

Outdated

| * @param tvec: Translation component of pose | ||

| * @return: average depth of the object | ||

| */ | ||

| double meanSceneDepth(InputArray objectPoints, InputArray rvec, InputArray tvec); |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

indentation 5->4 spaces

modules/calib3d/src/ippe.hpp

Outdated

| namespace HomographyHO { | ||

|

|

||

| /** | ||

| * @brief Computes the best-fitting homography matrix from source to target points using Harker and O'Leary's method: |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Please avoid indentation in namespaces

modules/calib3d/src/solvepnp.cpp

Outdated

| projectedPoints_ = projectedPoints_.reshape(1, static_cast<int>(projectedPoints.size()*2)); | ||

| imagePoints = imagePoints.reshape(1, static_cast<int>(projectedPoints.size()*2)); | ||

| Mat rmse = projectedPoints_ - imagePoints; | ||

| rmse = rmse.t() * rmse; |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

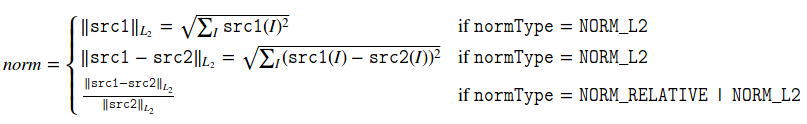

Last 4 lines: norm(projectedPoints, imagePoints, NORM_L2);

NORM_L2 | NORM_RELATIVE - for rmse_.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I did not manage to get the same results and I don't think it is the same formula.

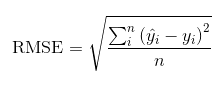

For RMSE, I use:

whereas in the doc:

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

the lines:

Mat rmse = projectedPoints_ - imagePoints;

rmse = rmse.t() * rmse;

are misleading - they definitely do not comptue RMSE according to its standard definition

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

You are right!

rmse_ = norm(projectedPoints_, imagePoints, NORM_L2) / sqrt(projectedPoints_.total());

?

89711d0 to

a06770e

Compare

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Thank you for update!

modules/calib3d/src/ippe.cpp

Outdated

| double rz = R.at<double>(2, 0) * objectPoints.at<Vec2d>(i)(0) + R.at<double>(2, 1) * objectPoints.at<Vec2d>(i)(1); | ||

|

|

||

| double a2 = -imgPoints.at<Vec2d>(i)(0); | ||

| double b2 = -imgPoints.at<Vec2d>(i)(1); |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

BTW, We can cache imgPoints.at<Vec2d>(i) in variable (value or "const reference") here and above.

modules/calib3d/src/solvepnp.cpp

Outdated

| projectedPoints_ = projectedPoints_.reshape(1, static_cast<int>(projectedPoints.size()*2)); | ||

| imagePoints = imagePoints.reshape(1, static_cast<int>(projectedPoints.size()*2)); | ||

| Mat rmse = projectedPoints_ - imagePoints; | ||

| rmse = rmse.t() * rmse; |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

You are right!

rmse_ = norm(projectedPoints_, imagePoints, NORM_L2) / sqrt(projectedPoints_.total());

?

2fe724c to

60636b2

Compare

|

I have reverted from: to Somehow, with my compiler ( I have captured some data that lead code

Matx31d rvec(0.7136616552834075, 0.3059808644026911, 0.7114660999233302);

Matx31d tvec(-0.6493577793527734, 0.6633761657486326, 0.04733051191856578);

// Matx33d cameraMatrix(44.63448589236783, 0, 37.87596253836421,

// 0, 15.90508774946769, 24.92036090687411,

// 0, 0, 1);

Matx33d cameraMatrix = Matx33d::eye();

Matx41d distCoeffs(4.402010239854638e-07, 7.729938301986059e-07, 5.257442644446706e-07, 0);

std::vector<Point3f> objectPoints;

objectPoints.push_back(Point3f(-0.0747386, 0.0747386, 0));

objectPoints.push_back(Point3f(0.0747386, 0.0747386, 0));

objectPoints.push_back(Point3f(0.0747386, -0.0747386, 0));

objectPoints.push_back(Point3f(-0.0747386, -0.0747386, 0));

{

std::vector<Point2f> imagePoints1, imagePoints2, imagePoints_undist;

projectPoints(objectPoints, rvec, tvec, cameraMatrix, noArray(), imagePoints1);

projectPoints(objectPoints, rvec, tvec, cameraMatrix, distCoeffs, imagePoints2);

undistortPoints(imagePoints2, imagePoints_undist, cameraMatrix, distCoeffs);

for (size_t i = 0; i < imagePoints1.size(); i++) {

std::cout << i << ") " << imagePoints1[i] << std::endl;

std::cout << i << ") " << imagePoints2[i] << std::endl;

std::cout << i << ") " << imagePoints_undist[i] << std::endl;

}

}

{

Mat R;

Rodrigues(rvec, R);

std::cout << std::endl;

for (size_t i = 0; i < objectPoints.size(); i++) {

Matx31d obj(objectPoints[i].x, objectPoints[i].y, objectPoints[i].z);

Mat cameraPoint = R * obj + tvec;

std::cout << i << ") " << cameraPoint.t() << std::endl;

}

}

std::vector<Point2f> imagePoints;

projectPoints(objectPoints, rvec, tvec, cameraMatrix, distCoeffs, imagePoints);

IPPE::PoseSolver planePoseSolver;

Mat rvec1, tvec1, rvec2, tvec2;

float reprojErr1, reprojErr2;

planePoseSolver.solveSquare(0.0747386*2, imagePoints, cameraMatrix, distCoeffs, rvec1, tvec1, reprojErr1, rvec2, tvec2, reprojErr2);

std::cout << "rvec: " << rvec.t() << std::endl;

std::cout << "rvec1: " << rvec1.t() << std::endl;

std::cout << "rvec2: " << rvec2.t() << std::endl;

std::cout << "tvec: " << tvec.t() << std::endl;

std::cout << "tvec1: " << tvec1.t() << std::endl;

std::cout << "tvec2: " << tvec2.t() << std::endl;output

0) [-7.37631, 6.50044]

0) [-7.42996, 6.54776]

0) [-7.37631, 6.50044]

1) [-6.49895, 7.7687]

1) [-6.55217, 7.83237]

1) [-6.49895, 7.7687]

2) [98.4054, -118.826]

2) [43198.8, -52163.4]

2) [2.80155e-09, -3.53119e-09]

3) [266.336, -228.28]

3) [3.11734e+06, -2.67191e+06]

3) [5.40652e-14, -8.66878e-14]

0) [-0.7401207358070793, 0.6522378308313299, 0.1003374897566741]

1) [-0.6314791721351084, 0.7548555043856755, 0.09716626690503738]

2) [-0.5585948228984675, 0.6745145006659352, -0.00567646591954258]

3) [-0.6672363865704384, 0.5718968271115896, -0.002505243067905831]

!!!!!!!!gamma = 0

gamme: 1.0077253436729412e-07

rvec: [0.7136616552834075, 0.3059808644026911, 0.7114660999233302]

rvec1: [1.704411329846153, 0.6396593763041216, -0.588295149917103]

rvec2: [-1.704411318685205, -0.6396593664899685, -0.5882951462729119]

tvec: [-0.6493577793527734, 0.6633761657486326, 0.04733051191856578]

tvec1: [-0.0002003314847303823, -0.001230874564702886, -0.1413944711470152]

tvec2: [-0.0002003314326244324, -0.001230874243820202, 0.1413943734881941]Looks like the |

|

Looks like tz is pretty small. Are you sure that the model points in camera coordinates are all in front of the camera? |

|

@tobycollins

I have added a check in the test to discard these types of pose. |

…ction that returns all the pose solutions and the reprojection errors.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Well done! Thank you 👍

Merge with opencv_extra: opencv/opencv_extra#606

This pullrequest changes

@tobycollins This is the (draft) pull request following tobycollins/IPPE#4

Main differences with the original IPPE code:

CV_32F/CV_64Finput typesCV_Assert, ...Main changes:

SOLVEPNP_IPPEfor planar pose estimationSOLVEPNP_IPPE_SQUAREfor tag pose estimation (z=0, frame is centered)solvePnPGenericthat returns all the solutions and optionally the reprojection error