|  |
|---|
| Final Render |

This is my journey to learn ray tracing following Peter Shirley's first book on the subject. In this readme, I will try to explain how it went, both as a reminder for myself and a help for those who choose to follow the same course in Rust. Well, I am doing this mostly for myself, but explaining what you're doing is a good way of ensuring you understand your subject.

As I said, this is my implementation of Peter Shirley's guide to ray tracing. So of course I followed his book, but I also relied on previous Rust implementations:

- Ray Tracing in One Weekend (first of three books)

- akinnane's Rust implementation

- Nelarius' Rust implementation

As a side note, I also began watching Dr. Károly Zsolnai-Fehér's course on YouTube (TU Wien Rendering / Ray Tracing Course). I was at the 12th video by the end of this project.

Why Ray Tracing in One Weekend? And why Rust? As I was learning Rust by myself, I wondered what kind of project I could do to practice. I have always had an interest in computer graphics, but never went further than some basic Blender, Unity and a few popular-science videos. I thought it could be interesting to learn Rust through a 3D engine, and Ray Tracing in One Weekend seemed like a good start.

The reason I thought it was important to write this is to give you some context. I have some theoretical knowledge about CGI, but I am a complete beginner in both computer graphics and Rust. My only experience with the language comes from the rustlings exercises, which I hadn't even finished before getting to work.

What I am trying to say is: instead of an efficient implementation, expect some weird experiments.

I wrote this readme after completing this project over a period of one week. As I neither used git from the start nor progressed in a methodical way, some descriptions of early code issues and some code illustrations may be inaccurate.

- The first image

- Vec3 implementation (I should have used TDD)

- Hittable abstraction with Traits

- Utilities for Rust

- Materials through enums

- Multithreading with Rayon

- FOV Distortion, Movable Camera & Blur

- Final render

- Closing thoughts

The first step was to create an image. For simplicity, the book uses the PPM format. As a text description of an image, it is indeed easy to manipulate.

The image renders were PPM files, but I converted them to PNG for display. You can find the renders here (./render).

At first I relied on the `format!` macro to concatenate the strings which make up the PPM file, appending the new pixel at every iteration. However, it grew very slow at higher resolutions (we are talking about seconds for a simple color gradient), because every call allocates a new string and copies everything rendered so far.

```rust
let mut render = format!("P3\n{} {}\n255", img_width, img_height);
for j in (0..img_height).rev() {
    for i in 0..img_width {
        // Color logic
        render = format!("{render}\n{r} {g} {b}");
    }
}
render
```

Although I really should dive deeper into string manipulation in Rust, I worked around the issue by using a `Vec<String>` instead and joining it at the end.

```rust
let mut render = Vec::new();
render.push(format!("P3\n{} {}\n255", img_width, img_height));
for j in (0..img_height).rev() {
    for i in 0..img_width {
        // Color logic
        render.push(format!("{r} {g} {b}"));
    }
}
render.join("\n")
```

I use ranges for my loops, mainly for readability and laziness. From my understanding, it shouldn't cost much performance, if any (Comparing Performance: Loops vs. Iterators).
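A third option, sketched here as an assumption rather than what this project does, is to append into a single `String` with `write!` from `std::fmt::Write`. The function name `render_gradient` and its gradient color logic are made up for the illustration.

```rust
use std::fmt::Write;

/// Renders a simple gradient as a PPM (P3) string using one growing buffer.
/// Hypothetical helper: the name and the color logic are illustrative only.
pub fn render_gradient(img_width: u32, img_height: u32) -> String {
    // Capacity guess: a short header plus at most 12 bytes per pixel line.
    let mut render = String::with_capacity(20 + (img_width * img_height) as usize * 12);
    write!(render, "P3\n{} {}\n255", img_width, img_height).unwrap();
    for j in (0..img_height).rev() {
        for i in 0..img_width {
            let r = (255.999 * i as f64 / (img_width - 1) as f64) as u8;
            let g = (255.999 * j as f64 / (img_height - 1) as f64) as u8;
            let b = 63u8;
            // Appends in place: nothing rendered so far is copied.
            write!(render, "\n{r} {g} {b}").unwrap();
        }
    }
    render
}
```

Writing to a `String` cannot fail, which is why the `unwrap()` calls are harmless here.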

Implementing Vec3 is straightforward. Rust operators are "syntactic sugar for method calls" (Operator Overloading), so as long as you know which trait corresponds to which operator, it is easy to implement them. At least that's what I thought.

The thing is, there are many operators to overload if you want complete coverage, and due to some quick copy and paste I mistakenly put `y`s in place of `z`s in some places.
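To make the trait-to-operator mapping concrete, here is a minimal sketch of a few overloads. The field layout of `Vec3` is an assumption for the example and may differ from the one in this repository.

```rust
use std::ops::{Add, Mul, Sub};

#[derive(Debug, Clone, Copy, PartialEq)]
pub struct Vec3 {
    pub x: f64,
    pub y: f64,
    pub z: f64,
}

// `a + b` is sugar for `Add::add(a, b)`.
impl Add for Vec3 {
    type Output = Vec3;
    fn add(self, rhs: Vec3) -> Vec3 {
        Vec3 { x: self.x + rhs.x, y: self.y + rhs.y, z: self.z + rhs.z }
    }
}

// `a - b` is sugar for `Sub::sub(a, b)`.
impl Sub for Vec3 {
    type Output = Vec3;
    fn sub(self, rhs: Vec3) -> Vec3 {
        Vec3 { x: self.x - rhs.x, y: self.y - rhs.y, z: self.z - rhs.z }
    }
}

// `v * 2.0` needs `Mul<f64> for Vec3`...
impl Mul<f64> for Vec3 {
    type Output = Vec3;
    fn mul(self, rhs: f64) -> Vec3 {
        Vec3 { x: self.x * rhs, y: self.y * rhs, z: self.z * rhs }
    }
}

// ...while `2.0 * v` needs a separate `Mul<Vec3> for f64`.
impl Mul<Vec3> for f64 {
    type Output = Vec3;
    fn mul(self, rhs: Vec3) -> Vec3 {
        rhs * self
    }
}
```

Each operator, and each operand order, is its own `impl`, which is exactly where copy and paste mistakes creep in.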

The "Hello world" background rendering showcased a different color as well as some weird round effects on the center of the picture. I could fix the artefact at the center by not normalizing (to unit vector) the raycast, but I didn't investigate the discoloration further.

That was dumb. Always question why your code does not give you the expected result.

I only caught the real issue when I tried to display the first sphere, as it resulted in weird abstract pieces:

|  |
|---|
| "Ceci n'est pas une sphère - Or how to fail your ray tracer" Nicolas Guillaume Soulié |

After some time, I managed to find the mistake using unit tests. So I do advise you to use unit tests and/or TDD (Test Driven Development) for straightforward yet important parts of your code like Vec3.

I had initially written my own tests for dot and cross products. However, I didn't expect typos in simpler operations. To debug faster I "stole" Nelarius' unit tests. Even so, one mistake did manage to pass all the tests. I caught it when I started to deal with multi-sampling anti-aliasing, as it whitened the image based on the number of rays.
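As an illustration of the kind of test that catches a `y`/`z` mix-up, the sketch below uses operands whose three components are all different, so that any swapped component changes the expected value. The `Vec3` definition and test names are assumptions for the example, not the tests used in this project.

```rust
#[derive(Debug, Clone, Copy, PartialEq)]
pub struct Vec3 {
    pub x: f64,
    pub y: f64,
    pub z: f64,
}

impl Vec3 {
    pub fn dot(self, o: Vec3) -> f64 {
        self.x * o.x + self.y * o.y + self.z * o.z
    }

    pub fn cross(self, o: Vec3) -> Vec3 {
        Vec3 {
            x: self.y * o.z - self.z * o.y,
            y: self.z * o.x - self.x * o.z,
            z: self.x * o.y - self.y * o.x,
        }
    }
}

#[cfg(test)]
mod tests {
    use super::*;

    // Distinct components: using `y` where `z` belongs would give 33, not 52.
    #[test]
    fn dot_uses_every_component() {
        let a = Vec3 { x: 1.0, y: 2.0, z: 3.0 };
        let b = Vec3 { x: 5.0, y: 7.0, z: 11.0 };
        assert_eq!(a.dot(b), 52.0);
    }

    #[test]
    fn cross_uses_every_component() {
        let a = Vec3 { x: 1.0, y: 2.0, z: 3.0 };
        let b = Vec3 { x: 5.0, y: 7.0, z: 11.0 };
        assert_eq!(a.cross(b), Vec3 { x: 1.0, y: 4.0, z: -3.0 });
    }
}
```

Tests built on values like `(1, 1, 1)` or unit axes are easier to write but let this class of typo through.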

|  |
|---|
| Normals after correction |

In Rust there is no inheritance. Instead, the language uses trait implementations.

"Traits are similar to a feature often called interfaces in other languages, although with some differences."

To abstract Hittables in Rust, traits seem like the closest equivalent to a parent class in C++. You have to use the `dyn` keyword to make explicit that you are using dynamically dispatched types, and wrap the Hittable structs of your list in smart pointers (like `Box` or `Arc`).

```rust
// Dyn type in smart pointer for list (Vec)
pub struct HittableList {
    pub objects: Vec<Arc<dyn Hittable>>,
}

// Dyn type used in function/method prototypes
pub fn render(world: &(dyn Hittable), samples: u16, max_depht: u16) -> String;
```

Functions cannot take a bare `dyn` type as an argument; it has to sit behind a pointer such as a reference. To quote the Rust compiler:

"Function arguments must have a statically known size, borrowed types always have a known size: `&`"

Another possible implementation would be to use Rust enums as a type wrapper. However, in my opinion, this implementation would make the addition of new hittable classes more tedious so I chose against it. I still used this approach with materials if you are interested.
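The trait-object approach described above can be sketched end to end as follows. This is a simplified stand-in, not the code of this repository: vectors are plain `[f64; 3]` arrays, `hit` only returns the hit distance, and `nearest_hit` is a hypothetical helper.

```rust
use std::sync::Arc;

type V3 = [f64; 3];

fn dot(a: V3, b: V3) -> f64 {
    a[0] * b[0] + a[1] * b[1] + a[2] * b[2]
}

pub trait Hittable {
    /// Distance along the ray to the nearest hit within [t_min, t_max], if any.
    fn hit(&self, origin: V3, dir: V3, t_min: f64, t_max: f64) -> Option<f64>;
}

pub struct Sphere {
    pub center: V3,
    pub radius: f64,
}

impl Hittable for Sphere {
    fn hit(&self, origin: V3, dir: V3, t_min: f64, t_max: f64) -> Option<f64> {
        let oc = [origin[0] - self.center[0], origin[1] - self.center[1], origin[2] - self.center[2]];
        let a = dot(dir, dir);
        let half_b = dot(oc, dir);
        let c = dot(oc, oc) - self.radius * self.radius;
        let discriminant = half_b * half_b - a * c;
        if discriminant < 0.0 {
            return None;
        }
        let sqrtd = discriminant.sqrt();
        // Try the nearest root first, then the farthest one.
        let mut root = (-half_b - sqrtd) / a;
        if root < t_min || t_max < root {
            root = (-half_b + sqrtd) / a;
            if root < t_min || t_max < root {
                return None;
            }
        }
        Some(root)
    }
}

pub struct HittableList {
    pub objects: Vec<Arc<dyn Hittable>>,
}

// The list is itself a Hittable, so callers do not care what `world` really is.
impl Hittable for HittableList {
    fn hit(&self, origin: V3, dir: V3, t_min: f64, t_max: f64) -> Option<f64> {
        let mut closest = None;
        let mut limit = t_max;
        for object in &self.objects {
            if let Some(t) = object.hit(origin, dir, t_min, limit) {
                limit = t; // later objects must be closer to count
                closest = Some(t);
            }
        }
        closest
    }
}

/// Takes any Hittable behind a reference, resolved at runtime.
pub fn nearest_hit(world: &dyn Hittable, origin: V3, dir: V3) -> Option<f64> {
    world.hit(origin, dir, 0.001, f64::INFINITY)
}
```

Adding a new shape only requires a new `impl Hittable`, which is the convenience that an enum wrapper would trade away.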

Several of the constants and utility functions needed in the book are already available in Rust. You just need to import some of them.

```rust
// Infinity, used as the maximum ray distance (f64 in my case)
f64::INFINITY
// PI (not needed)
std::f64::consts::PI
// Degrees to radians
let degree: f64 = 90.0;
let radian: f64 = degree.to_radians();
```

```rust
// Import
use rand::{rngs::ThreadRng, thread_rng, Rng};
// Get a handle to the thread-local generator
let mut rng: ThreadRng = thread_rng();
// Generate a number in range
let random_number = rng.gen_range(min..max);
```

For the materials implementation, I chose to rely on an enum wrapper. The `scatter` method of the enum uses a `match` control flow to call the `scatter` method of the wrapped material type.

```rust
pub enum Material {
    Lambertian(Lambertian),
    // ...
}

impl Material {
    pub fn scatter(&self, r_in: &Ray, rec: &mut HitRecord, rng: &mut ThreadRng) -> (Vec3, Ray) {
        match self {
            Material::Lambertian(l) => l.scatter(rec, rng),
            // ...
        }
    }
}
```

The diffuse material implementation went relatively smoothly. At first my results were very dark compared to the book, but I couldn't find why. After the shadow acne fix I obtained closer results.

|  |  |  |
|---|---|---|
| Diffuse | Gamma correction | Shadow acne fix |
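For reference, the two fixes named in the captions above can be sketched as below. This assumes the approach of the book (gamma 2 correction through a square root, and a small minimum hit distance); the names `T_MIN`, `to_byte` and `is_valid_hit` are hypothetical and not taken from this repository.

```rust
/// Hits closer than this are treated as the ray re-hitting the surface it
/// just left because of floating point error (the "shadow acne").
pub const T_MIN: f64 = 0.001;

/// Accepts a hit only if it lies strictly between T_MIN and `t_max`.
pub fn is_valid_hit(t: f64, t_max: f64) -> bool {
    t > T_MIN && t < t_max
}

/// Averages the accumulated samples of one color channel, applies gamma 2
/// correction (square root), then maps the result to 0..=255.
pub fn to_byte(sum: f64, samples: u32) -> u8 {
    let linear = sum / samples as f64;
    let gamma = linear.sqrt();
    (256.0 * gamma.clamp(0.0, 0.999)) as u8
}
```

Since the square root of a value between 0 and 1 is larger than the value itself, gamma correction brightens the mid-tones. Rejecting self-intersections stops rays from being absorbed right where they scatter. Both effects work against an overly dark image.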

|  |  |
|---|---|
| Lambertian diffuse | Colored lambertian diffuse |

Implementing a metallic material was uneventful. I already explained my abstraction of materials previously so all I can give you are pretty visuals:

|  |  |
|---|---|
| Metallic reflection | Fuzzy reflection |

Implementing a dielectric material proved to be challenging. First, I decided to provide an actual proof of the formulas used for computing the refracted ray. The second challenge came from a bug I spent hours searching for.

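The book gives us formulas for computing $R'_{\perp}$ and $R'_{\parallel}$, the components of the refracted ray $R'$ perpendicular and parallel to the normal $N$.

We want to demonstrate:

$$R'_{\perp} = \frac{\eta}{\eta'} \cdot (R + N\cos\theta)$$

$$R'_{\parallel} = -N\sqrt{1 - |R'_{\perp}|^2}$$

|  |
|---|
| Refraction |

We have Snell's law:

$$\eta \cdot \sin\theta = \eta' \cdot \sin\theta'$$

With $R$, $R'$ and $N$ unit vectors:

$$|R_{\perp}| = \sin\theta \qquad |R'_{\perp}| = \sin\theta'$$

Therefore we can define $R'_{\perp}$, which points in the same direction as $R_{\perp}$:

$$R'_{\perp} = \frac{\sin\theta'}{\sin\theta} \cdot R_{\perp} = \frac{\eta}{\eta'} \cdot R_{\perp}$$

And since:

$$R_{\perp} = R - (R \cdot N)N = R + N\cos\theta$$

We have:

$$R'_{\perp} = \frac{\eta}{\eta'} \cdot (R + N\cos\theta)$$

Next we can express $R'_{\parallel}$, which points in the direction opposite to $N$. Because $R'$ is a unit vector:

$$|R'_{\parallel}|^2 = 1 - |R'_{\perp}|^2$$

$$R'_{\parallel} = -N\sqrt{1 - |R'_{\perp}|^2}$$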
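The two formulas translate almost directly into code. The sketch below is an assumption about how they can be written, not the function used in this repository: vectors are plain `[f64; 3]` arrays, and `eta_ratio` stands for the ratio of the refractive index on the incident side to the one on the refracted side.

```rust
type V3 = [f64; 3];

fn dot(a: V3, b: V3) -> f64 {
    a[0] * b[0] + a[1] * b[1] + a[2] * b[2]
}

fn scale(a: V3, s: f64) -> V3 {
    [a[0] * s, a[1] * s, a[2] * s]
}

fn add(a: V3, b: V3) -> V3 {
    [a[0] + b[0], a[1] + b[1], a[2] + b[2]]
}

/// Refracts the unit vector `r` through a surface of unit normal `n`
/// (pointing against `r`).
pub fn refract(r: V3, n: V3, eta_ratio: f64) -> V3 {
    // cos(theta) = -R.N, clamped against rounding errors.
    let cos_theta = (-dot(r, n)).min(1.0);
    // Perpendicular part: eta_ratio * (R + N cos(theta))
    let r_perp = scale(add(r, scale(n, cos_theta)), eta_ratio);
    // Parallel part: -N * sqrt(1 - |R'_perp|^2)
    let r_parallel = scale(n, -(1.0 - dot(r_perp, r_perp)).abs().sqrt());
    add(r_perp, r_parallel)
}
```

A ray hitting the surface head-on goes straight through, and for a ray at 45 degrees the x component of the result equals $\sin\theta'$ as given by Snell's law.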

Dielectric too should be rather simple to implement by following the book. Alas, my old stealthy mistake surfaced in all its glory. Basically, while implementing refractions, I faced a bug which turned my glass ball black.

|  |
|---|
| Where is refraction? |

I spent hours checking my code. I followed the debugger step by step, tracking how and where the rays were cast and visualising them with pen and paper, to understand what was happening.

If you are unfamiliar with breakpoints, they allow you to pause execution of a program at a chosen instruction. Then you can check the stack trace as well as variable values.

If you want to follow a ray on a specific material without stopping at each ray cast, put your breakpoint on said material call (like its `fn scatter()` method) and then look at the stack trace, or progress step by step onward.

Looking at the ray values and hit distances, I realised the first scattered ray would detect a hit at its origin. Then every successive refraction would repeat the same mistake until depth reached its maximum. Hence the black final color.

The issue came from an oversight in the sphere hit detection. While I did check both roots for intersection, I created a new variable for the second root inside the first if statement which was then discarded. So if the second root was the right one, I still used the first one.

That was a failed attempt at shadowing on my part. Well, at least I now know how it works. I hope so.

```rust
let root = (-half_b - sqrtd) / a; // Define root
if root < t_min || t_max < root {
    let root = (-half_b + sqrtd) / a; // (INNER) Shadow the root
    if root < t_min || t_max < root {
        return false;
    }
} // The second root goes out of scope
// The inner shadowing loses its effect
```

Once fixed, it looks like this:

```rust
let mut root = (-half_b - sqrtd) / a; // Define a mutable root
if root < t_min || t_max < root {
    root = (-half_b + sqrtd) / a; // Store new root in mutable root
    if root < t_min || t_max < root {
        return false;
    }
} // The second root is still in scope
```
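Stripped of the ray tracing context, the difference between the two versions can be reproduced with two small functions. They are hypothetical and written only for this illustration.

```rust
/// Mimics the bug: the inner `let` creates a new variable that only lives
/// until the end of its block.
pub fn pick_root_shadowed(first: f64, second: f64, t_min: f64) -> f64 {
    let root = first;
    if root < t_min {
        let root = second; // new binding, visible inside this block only
        let _ = root; // silences the unused variable warning
    }
    root // still `first`
}

/// The fix: assign to the existing mutable binding instead of declaring a
/// new one.
pub fn pick_root_mutable(first: f64, second: f64, t_min: f64) -> f64 {
    let mut root = first;
    if root < t_min {
        root = second;
    }
    root // `second` whenever `first` was rejected
}
```

With a rejected first root, the shadowed version still returns it, while the mutable version returns the second root.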

|  |  |
|---|---|
| Refraction working as it should (with fuzzy metal) | Refraction working as it should (with less fuzzy metal) |

|  |
|---|
| Schlick approximation |
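The render above relies on Schlick's approximation of reflectance. As a reminder of the formula, here is a minimal sketch; the function name and parameters are assumptions for the example and may not match this repository.

```rust
/// Schlick's approximation of the reflectance of a dielectric surface.
/// `cosine` is the cosine of the angle of incidence and `ref_idx` the ratio
/// of refractive indices.
pub fn reflectance(cosine: f64, ref_idx: f64) -> f64 {
    // Reflectance at normal incidence.
    let r0 = ((1.0 - ref_idx) / (1.0 + ref_idx)).powi(2);
    // Rises towards 1 as the angle becomes grazing (cosine -> 0).
    r0 + (1.0 - r0) * (1.0 - cosine).powi(5)
}
```

For glass (`ref_idx` of 1.5) this gives a reflectance of about 4% when looking straight at the surface, and total reflection at grazing angles.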

The rendering loop in other Rust projects (akinnane and Nelarius) used an iterator in a way I had never seen. Doing a bit of research, I learned that they used rayon for easy multithreading. At that point, rendering had started to slow down, so I decided to try it myself. While it is fairly simple to use, I still had to face a few difficulties.

For an object to be passed around threads, it needs to implement the traits `Send` and `Sync`. Those traits are automatically implemented when the compiler determines it's appropriate.

"Any type composed entirely of `Send` types is automatically marked as `Send` as well. Almost all primitive types are `Send`, aside from raw pointers."

"Similar to `Send`, primitive types are `Sync`, and types composed entirely of types that are `Sync` are also `Sync`."

The Rust "book", Extensible Concurrency with the Sync and Send Traits

Thanks to that, my structs already implement `Send` and `Sync`.

However, that is not the case for `dyn Hittable` trait objects, whose true type is only known at runtime.

Therefore, I had to specify in my definitions that I wanted them to implement `Send` and `Sync`.

I also changed my `Box` smart pointers to `Arc`.

```rust
// HittableList became a Vec of smart pointers to Hittable, Send and Sync structs
pub struct HittableList {
    pub objects: Vec<Arc<dyn Hittable + Send + Sync>>,
}

// render now asks for Sync Hittable structs
pub fn render(world: &(dyn Hittable + Sync), // ...
) -> String;
```

I also used an `Arc<Mutex>` to track the progress of the render despite the chaotic nature of multithreading. It probably slows down the process, but since I never really measured the render time, I cannot judge the loss or gain of this whole implementation. So long as I learn new things I am satisfied, but I really should look into performance measuring in the future.
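To show why the `Sync` bound matters, here is a minimal sketch that shares a trait object between threads and counts finished rows behind an `Arc<Mutex<_>>`. It deliberately uses `std::thread::scope` instead of rayon so that it runs without external crates. The one-dimensional `Hittable` trait, `Interval` and `render_rows` are stand-ins invented for the example.

```rust
use std::sync::{Arc, Mutex};
use std::thread;

// Simplified stand-in for the real trait: a 1D "hit test".
pub trait Hittable {
    fn hit(&self, x: f64) -> bool;
}

pub struct Interval {
    pub min: f64,
    pub max: f64,
}

impl Hittable for Interval {
    fn hit(&self, x: f64) -> bool {
        self.min <= x && x <= self.max
    }
}

/// Counts the hits of each row on its own thread and returns the per-row
/// counts together with the number of rows reported as finished.
pub fn render_rows(world: &(dyn Hittable + Sync), rows: usize, width: usize) -> (Vec<usize>, usize) {
    let progress = Arc::new(Mutex::new(0usize));
    let mut hits = vec![0usize; rows];
    thread::scope(|s| {
        for (j, row_hits) in hits.iter_mut().enumerate() {
            let progress = Arc::clone(&progress);
            // `&world` may cross threads only because of the `Sync` bound.
            s.spawn(move || {
                *row_hits = (0..width)
                    .filter(|&i| world.hit((j * width + i) as f64))
                    .count();
                *progress.lock().unwrap() += 1;
            });
        }
    });
    let done = *progress.lock().unwrap();
    (hits, done)
}
```

Removing `+ Sync` from the signature makes the compiler reject the `spawn` call, which is the same kind of error I ran into with rayon. For a simple counter like this one, an atomic integer would be a lighter alternative to the mutex.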

|  |
|---|
| No changes in the result: multithreading is working |

I don't have much to say about those last few steps. I had no issues, and the port from the book's C++ implementation to Rust is too simple to involve another architecture choice.

|  |  |
|---|---|
| FOV 50 | FOV 120 |

However, I do find the distortion at high FOV a bit weird, especially at 160 and beyond (I should have saved those...).

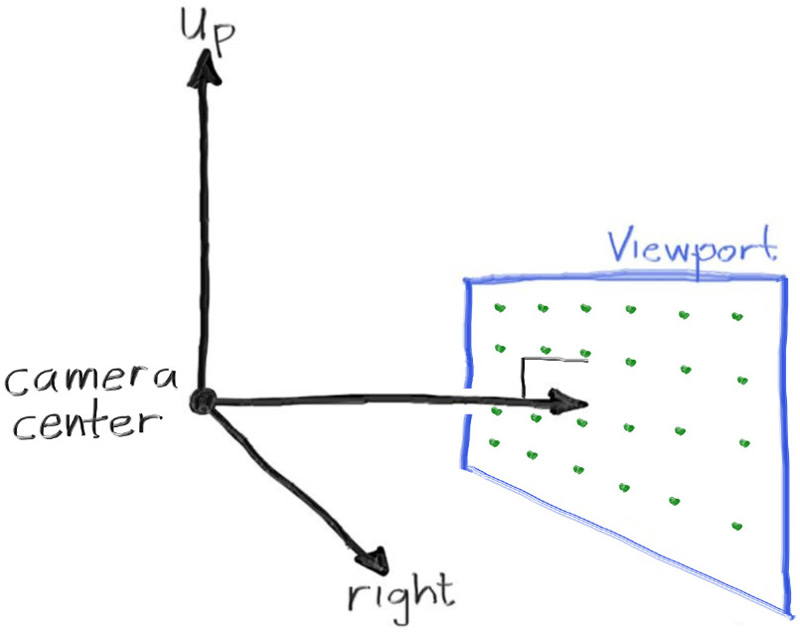

I believe it is due to the way we cast our rays from an origin to a plane, using a constant distance along the x and y axes between pixels.

Because of that, the angular difference between neighboring pixels is smaller at the edges than at the center.

The higher the FOV, the more the angular step differs between the center and the borders.
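This can be checked numerically. The sketch below assumes a pinhole camera with the image plane at distance 1 and looks at a single row of pixels along the axis on which the FOV is measured; both function names are made up for the example.

```rust
/// Angle in radians between the rays going through pixel `i` and pixel
/// `i + 1` of a row of `width` pixels, for a plane projection.
pub fn angular_step(fov_deg: f64, width: u32, i: u32) -> f64 {
    // Half size of the image plane at distance 1 from the origin.
    let half_size = (fov_deg.to_radians() / 2.0).tan();
    // Pixels are evenly spaced on the plane, from -half_size to +half_size.
    let x = |k: u32| (2.0 * k as f64 / width as f64 - 1.0) * half_size;
    x(i + 1).atan() - x(i).atan()
}

/// Angular step at the border divided by the angular step at the center.
pub fn edge_to_center_ratio(fov_deg: f64, width: u32) -> f64 {
    angular_step(fov_deg, width, width - 1) / angular_step(fov_deg, width, width / 2)
}
```

For a row of 1000 pixels, the ratio is about 0.5 at FOV 90 and about 0.03 at FOV 160: at the border, each pixel covers a much smaller angle than at the center, so objects there get stretched over more pixels.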

|  |
|---|
| Ray Tracing in One Weekend, "Figure 3: Camera geometry" |

Or maybe I am just not used to high FOV and a camera ray cast with constant angular step between nearby pixels might just look as weird. I should try it out, but for now I get results that match the book so I will keep plane projection.

|  |
|---|
| FOV 90 |

|  |
|---|
| FOV 20 |

|  |
|---|
| FOV 20, Aperture 0.5 |

| Test Render |  |  |
|---|---|---|
| Resolution | 720x405px | 720x405px |
| Samples | 128/px | 16/px |
| Depth | 50 | 16 |
| FOV | 20 | 20 |
| Aperture | 0.1 | 0.1 |

| Final Render |  |
|---|---|
| Resolution | 1920x1080px |
| Samples | 512/px |
| Depth | 64 |
| FOV | 20 |
| Aperture | 0.1 |

The final render took approximately 5 hours on an i7 4770k (16 GB of RAM). Thanks to rayon, all 8 threads showed 100% utilisation. While other programs were running during the process, the rendering still used more than 90% of the CPU.