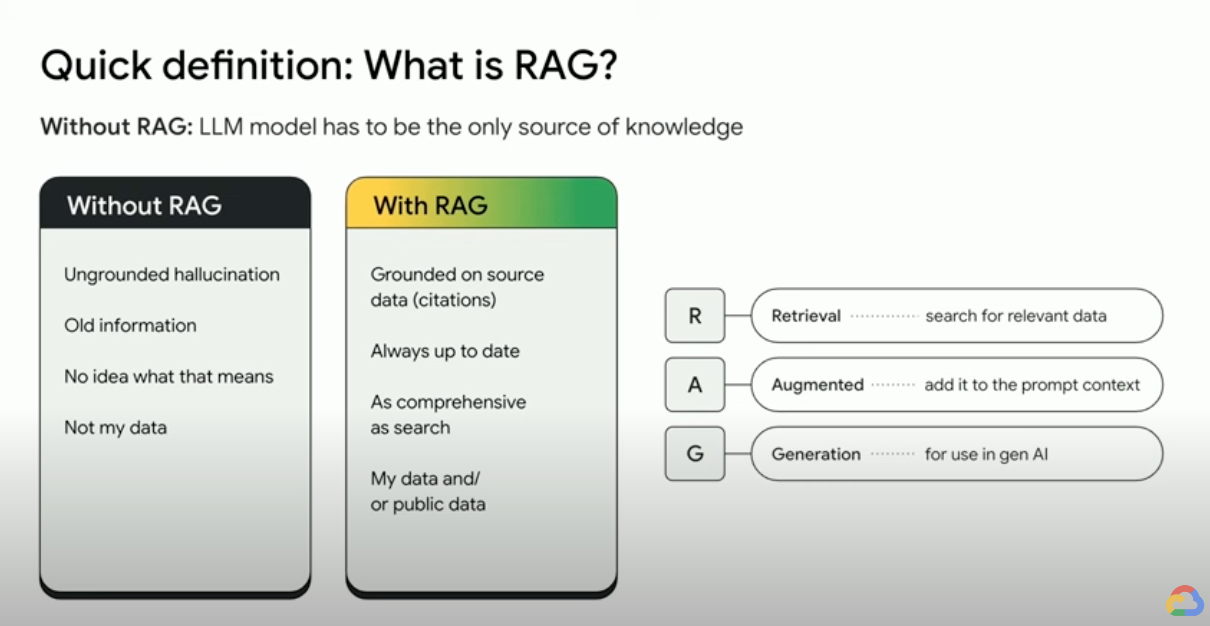

What is Retrieval-Augmented Generation (RAG)?

RAG (Retrieval-Augmented Generation) is an AI framework that combines the strengths of traditional information retrieval systems (such as search and databases) with the capabilities of generative large language models (LLMs). By combining your data and world knowledge with the language skills of an LLM, RAG produces grounded generation that is more accurate, up-to-date, and relevant to your specific needs.

How does Retrieval-Augmented Generation work?

RAG systems operate in a few main steps to enhance generative AI outputs:

- Retrieval and pre-processing: RAGs leverage powerful search algorithms to query external data, such as web pages, knowledge bases, and databases. Once retrieved, the relevant information undergoes pre-processing, including tokenization, stemming, and removal of stop words.

- Grounded generation: The pre-processed retrieved information is then incorporated into the prompt sent to the pre-trained LLM. This augmented context gives the LLM a more comprehensive understanding of the topic and enables it to generate more precise, informative, and engaging responses.
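
The two steps above can be sketched in a few lines of Python. This is a minimal illustration, not a production pipeline: the in-memory `DOCUMENTS` list, the crude `stem` helper, the term-overlap scoring in `retrieve`, and the prompt wording in `build_grounded_prompt` are all assumptions made for the example. A real system would use a search engine and send the resulting prompt to an LLM.

```python
import re

# Hypothetical in-memory knowledge base standing in for external data.
DOCUMENTS = [
    "The warranty covers battery replacements for two years.",
    "Shipping to Canada takes five to seven business days.",
    "Returns are accepted within thirty days of delivery.",
]

# Small illustrative stop-word list (an assumption, not a standard list).
STOP_WORDS = {"a", "an", "the", "is", "are", "for", "to", "of", "in",
              "what", "how", "does", "do"}


def stem(token: str) -> str:
    """Very crude suffix stripping; a real system would use a proper stemmer."""
    for suffix in ("ing", "es", "s"):
        if token.endswith(suffix) and len(token) - len(suffix) >= 3:
            return token[: -len(suffix)]
    return token


def preprocess(text: str) -> list[str]:
    """Tokenize, remove stop words, and stem the remaining tokens."""
    tokens = re.findall(r"[a-z0-9]+", text.lower())
    return [stem(t) for t in tokens if t not in STOP_WORDS]


def retrieve(query: str, documents: list[str], top_k: int = 1) -> list[str]:
    """Rank documents by how many processed terms they share with the query."""
    query_terms = set(preprocess(query))
    scored = [(len(query_terms & set(preprocess(doc))), doc) for doc in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for score, doc in scored[:top_k] if score > 0]


def build_grounded_prompt(query: str, passages: list[str]) -> str:
    """Place the retrieved passages in the prompt so the LLM can ground on them."""
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer the question using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {query}"
    )


if __name__ == "__main__":
    question = "How long does shipping to Canada take?"
    print(build_grounded_prompt(question, retrieve(question, DOCUMENTS)))
```

The retrieved passage travels inside the prompt; the model's weights are not changed.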

Why use RAG?

RAG offers several advantages over traditional methods of text generation, especially when dealing with factual information or data-driven responses. Here are some key reasons to use RAG:

Access to fresh information

LLMs are limited to the data they were pre-trained on, which can lead to outdated and potentially inaccurate responses. RAG overcomes this by providing up-to-date information to LLMs.

Factual grounding

LLMs are powerful tools for generating creative and engaging text, but they can sometimes struggle with factual accuracy. This is because LLMs are trained on massive amounts of text data, which may contain inaccuracies or biases.

Providing “facts” to the LLM as part of the input prompt can mitigate “gen AI hallucinations.” The crux of this approach is ensuring that the most relevant facts are provided to the LLM, and that the LLM output is entirely grounded on those facts while also answering the user’s question and adhering to system instructions and safety constraints.

Using Gemini’s long context window (LCW) is a great way to provide source materials to the LLM. If you need to provide more information than fits into the LCW, or if you need to scale up performance, you can use a RAG approach that will reduce the number of tokens, saving you time and cost.
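
To make the token saving concrete, here is a rough back-of-the-envelope sketch. It assumes one token per whitespace-separated word, which is only a crude proxy for a real tokenizer, and the synthetic `corpus` and the choice of three retrieved passages are hypothetical.

```python
def estimate_tokens(text: str) -> int:
    """Rough proxy: one token per whitespace-separated word (an assumption)."""
    return len(text.split())


def prompt_tokens(question: str, passages: list[str]) -> int:
    """Estimated prompt size: the question plus every passage included with it."""
    return estimate_tokens(question) + sum(estimate_tokens(p) for p in passages)


# Hypothetical corpus: 50 documents of 202 words each.
corpus = [f"Document {i} " + "filler " * 200 for i in range(50)]
question = "What is in document 7?"

full_context = prompt_tokens(question, corpus)      # send everything
rag_context = prompt_tokens(question, corpus[:3])   # send only 3 retrieved passages

print(full_context, rag_context)
```

Under these assumptions the prompt shrinks from 10,105 estimated tokens to 611, and the gap widens as the corpus grows while the number of retrieved passages stays fixed.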

Search with vector databases and relevancy re-rankers

RAG systems usually retrieve facts via search, and modern search engines now leverage vector databases to efficiently retrieve relevant documents. Vector databases store documents as embeddings in a high-dimensional space, allowing for fast and accurate retrieval based on semantic similarity. Multi-modal embeddings can be used for images, audio, video, and more, and these media embeddings can be retrieved alongside text embeddings or multi-language embeddings.
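
As a minimal sketch of retrieval by semantic similarity, the snippet below ranks a handful of stored embeddings by cosine similarity to a query embedding. The three-dimensional vectors in `INDEX` are hand-written placeholders (real embeddings come from an embedding model and have hundreds or thousands of dimensions), and the brute-force scan stands in for the approximate nearest-neighbor index a vector database would typically use.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


# Hypothetical index: document id -> placeholder embedding.
INDEX = {
    "refund policy": [0.9, 0.1, 0.0],
    "shipping times": [0.1, 0.9, 0.1],
    "battery care": [0.0, 0.2, 0.9],
}


def nearest(query_vector: list[float], index: dict[str, list[float]],
            top_k: int = 2) -> list[str]:
    """Return the ids of the top_k most similar documents (brute-force scan)."""
    ranked = sorted(
        index.items(),
        key=lambda item: cosine_similarity(query_vector, item[1]),
        reverse=True,
    )
    return [doc_id for doc_id, _ in ranked[:top_k]]


if __name__ == "__main__":
    # Placeholder embedding for a query about getting money back.
    print(nearest([0.8, 0.3, 0.0], INDEX))
```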

Advanced search engines like Vertex AI Search use semantic search and keyword search together (called hybrid search), along with a re-ranker that scores search results to ensure the top returned results are the most relevant. Additionally, searches perform better with a clear, focused query without misspellings, so prior to lookup, sophisticated search engines will transform a query and fix spelling mistakes.
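
The sketch below illustrates both ideas under stated assumptions. Spelling correction is approximated with the standard library's `difflib.get_close_matches` against a small hypothetical `VOCABULARY`, and the keyword and semantic result lists are merged with reciprocal rank fusion, a common fusion technique chosen here for illustration. It is not a description of how Vertex AI Search combines or re-ranks results internally.

```python
import difflib

# Hypothetical vocabulary of known terms used for spelling correction.
VOCABULARY = ["warranty", "battery", "shipping", "refund"]


def correct_spelling(query: str, vocabulary: list[str]) -> str:
    """Replace each word with its closest vocabulary term, if one is close enough."""
    corrected = []
    for word in query.lower().split():
        matches = difflib.get_close_matches(word, vocabulary, n=1, cutoff=0.8)
        corrected.append(matches[0] if matches else word)
    return " ".join(corrected)


def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Fuse ranked lists: each document scores 1 / (k + rank) per list it appears in."""
    scores: dict[str, float] = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    return sorted(scores, key=lambda doc_id: scores[doc_id], reverse=True)


if __name__ == "__main__":
    print(correct_spelling("batery waranty", VOCABULARY))

    keyword_results = ["doc_a", "doc_b", "doc_c"]    # e.g. from keyword search
    semantic_results = ["doc_b", "doc_c", "doc_a"]   # e.g. from vector search
    print(reciprocal_rank_fusion([keyword_results, semantic_results]))
```

A dedicated re-ranker would then rescore the fused candidates against the query, usually with a more expensive model than either first-stage search.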

Relevance, accuracy, and quality

The retrieval mechanism in RAG is critically important. You need the best semantic search on top of a curated knowledge base to ensure that the retrieved information is relevant to the input query or context. If your retrieved information is irrelevant, your generation could be grounded but off-topic or incorrect.

By fine-tuning or prompt-engineering the LLM to generate text entirely based on the retrieved knowledge, RAG helps to minimize contradictions and inconsistencies in the generated text. This improves both the quality of the generated text and the user experience.

The Vertex Eval Service now scores LLM-generated text and retrieved chunks on metrics like “coherence,” “fluency,” “groundedness,” “safety,” “instruction_following,” “question_answering_quality,” and more. These metrics help you measure the grounded text you get from the LLM (for some metrics, that is a comparison to a ground truth answer you have provided). Implementing these evaluations gives you a baseline measurement, and you can then optimize for RAG quality by configuring your search engine, curating your source data, improving source layout parsing or chunking strategies, or refining the user’s question prior to search. A metrics-driven RAG Ops approach like this will help you hill climb to high-quality RAG and grounded generation.
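
To show what a baseline measurement might look like, here is a deliberately simple lexical proxy for groundedness: the fraction of distinct answer terms that also appear in the retrieved chunks. This is an illustrative assumption, not the metric computed by the Vertex Eval Service, and the function name `groundedness_proxy` is hypothetical. It cannot detect paraphrase or negation, so treat it only as a starting point for tracking changes across configurations.

```python
import re


def _terms(text: str) -> set[str]:
    """Lowercased alphanumeric terms in the text."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def groundedness_proxy(answer: str, chunks: list[str]) -> float:
    """Fraction of distinct answer terms that also occur in the retrieved chunks."""
    answer_terms = _terms(answer)
    if not answer_terms:
        return 0.0
    source_terms = set().union(*(_terms(chunk) for chunk in chunks))
    return len(answer_terms & source_terms) / len(answer_terms)


if __name__ == "__main__":
    chunks = ["Returns are accepted within thirty days of delivery."]
    print(groundedness_proxy("Returns are accepted within thirty days.", chunks))
    print(groundedness_proxy("Returns are accepted within ninety days.", chunks))
```

Recording a score like this before and after a change to chunking or search configuration gives a rough signal of whether the change helped.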

RAGs, agents, and chatbots

RAG and grounding can be integrated into any LLM application or agent that needs access to fresh, private, or specialized data. By drawing on external knowledge, RAG-powered chatbots and conversational agents provide more comprehensive, informative, and context-aware responses, improving the overall user experience.

Your data and your use case are what differentiate what you are building with gen AI. RAG and grounding bring your data to LLMs efficiently and scalably.

What Google Cloud products and services are related to RAG?

The following Google Cloud products are related to Retrieval-Augmented Generation:

- Vertex AI RAG Engine: Data framework for developing context-augmented LLM applications that facilitates retrieval-augmented generation (RAG).

- Vertex AI Search: Google Search for your data, a fully managed, out-of-the-box search and RAG builder.

- Vertex AI Vector Search: The ultra-performant vector index that powers Vertex AI Search; it enables semantic and hybrid search and retrieval from huge collections of embeddings with high recall at a high query rate.

- BigQuery: Large datasets that you can use to train machine learning models, including models for Vertex AI Vector Search.

- Grounded Generation API: Gemini high-fidelity mode grounded with Google Search, inline facts, or your own search engine.

- AlloyDB AI: Run models in Vertex AI and access them in your application using familiar SQL queries. Use Google models, such as Gemini, or your own custom models.

Further reading

Learn more about using retrieval augmented generation with these resources.

- Using Vertex AI to build next-gen search applications

- RAGs powered by Google Search technology

- RAG with databases on Google Cloud

- APIs to build your own search and Retrieval Augmented Generation (RAG) systems

- How to use RAG in BigQuery to bolster LLMs

- Code sample and quickstart to get familiar with RAG

- Infrastructure for a RAG-capable generative AI application using Vertex AI and Vector Search

- Infrastructure for a RAG-capable generative AI application using Vertex AI and AlloyDB for PostgreSQL

- Infrastructure for a RAG-capable generative AI application using GKE
