
September 03, 2019

Posted by Da-Cheng Juan (Senior Software Engineer) and Sujith Ravi (Senior Staff Research Scientist)


We are excited to introduce Neural Structured Learning in TensorFlow, an easy-to-use framework that both novice and advanced developers can use for training neural networks with structured signals. Neural Structured Learning (NSL) can be applied to construct accurate and robust models for vision, language understanding, and prediction in general.

How Neural Structured Learning (NSL) Works

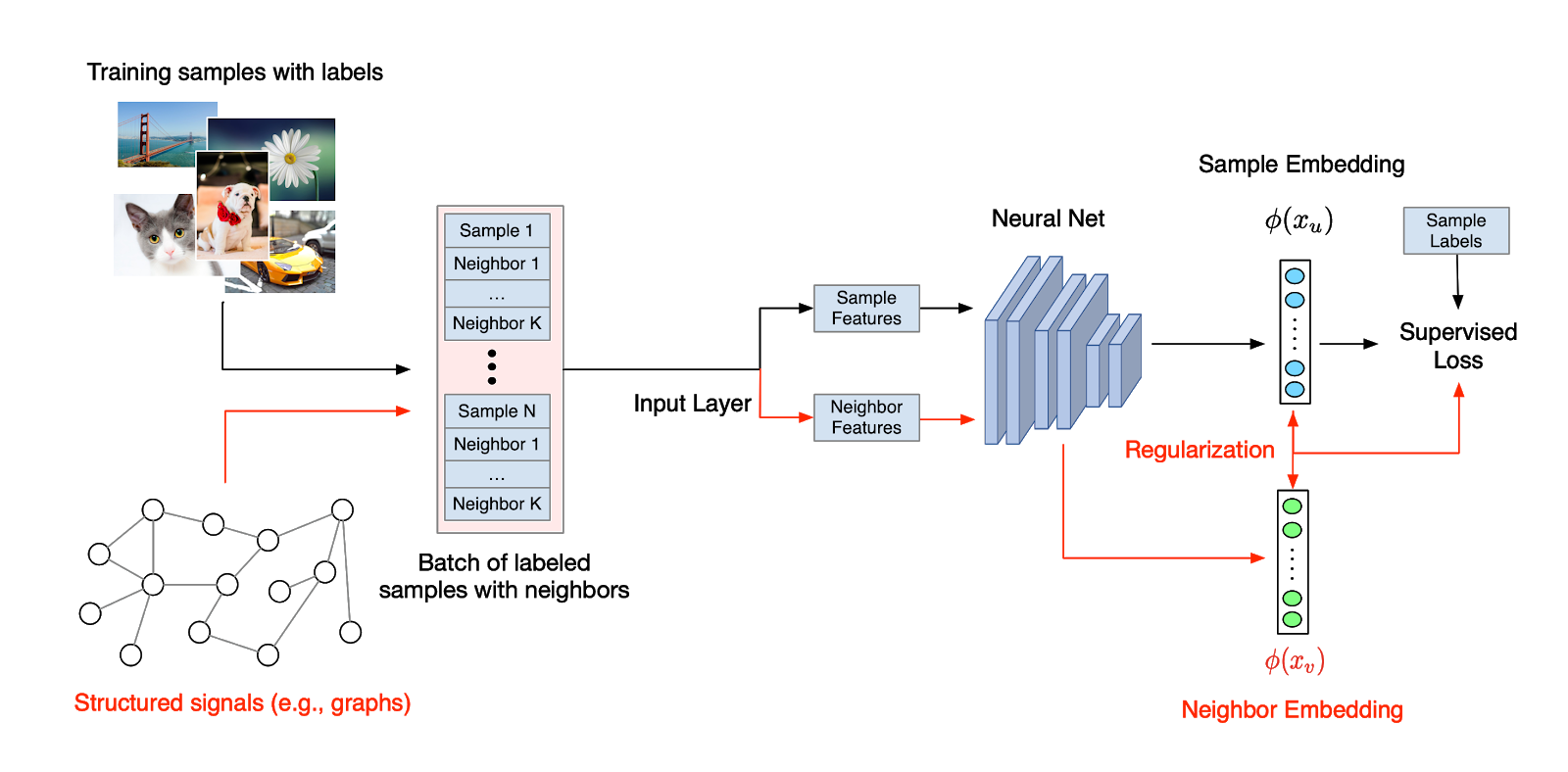

In Neural Structured Learning (NSL), the structured signals, whether explicitly defined as a graph or implicitly learned as adversarial examples, are used to regularize the training of a neural network. The model is forced to learn accurate predictions (by minimizing the supervised loss) while maintaining the similarity among inputs from the same structure (by minimizing the neighbor loss; see the figure above). This technique is generic and can be applied to arbitrary neural architectures, such as feed-forward NNs, convolutional NNs, and recurrent NNs.

Create a Model with Neural Structured Learning (NSL)
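As a conceptual aid, the combined objective described above (supervised loss plus neighbor loss) can be sketched in a few lines of plain Python. This is a minimal illustration, not the library's implementation: the function names, the squared L2 distance, and the weighting factor `alpha` are assumptions made for this example.

```python
import math

def cross_entropy(probs, label):
    """Supervised loss: negative log-probability of the true class."""
    return -math.log(probs[label])

def squared_l2(u, v):
    """Squared L2 distance between two embedding vectors."""
    return sum((a - b) ** 2 for a, b in zip(u, v))

def structured_loss(probs, label, embedding, neighbor_embeddings,
                    edge_weights, alpha=0.1):
    """Supervised loss plus alpha times the edge-weighted neighbor loss.

    alpha and the distance function are illustrative assumptions.
    """
    supervised = cross_entropy(probs, label)
    neighbor = sum(
        w * squared_l2(embedding, nbr)
        for w, nbr in zip(edge_weights, neighbor_embeddings)
    )
    return supervised + alpha * neighbor

# Same prediction, two different neighbors: one close, one far away.
probs = [0.7, 0.2, 0.1]
embedding = [1.0, 0.0]
close = structured_loss(probs, 0, embedding, [[1.0, 0.1]], [1.0])
far = structured_loss(probs, 0, embedding, [[-1.0, 2.0]], [1.0])
print(close, far)
```

With identical predictions, the example whose neighbor lies farther away in embedding space receives the larger loss, which is the pressure that pulls connected inputs toward similar representations.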

python pack_nbrs.py --max_nbrs=5 \
    labeled_data.tfr \
    unlabeled_data.tfr \
    graph.tsv \
    merged_examples.tfr
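To make the role of the graph file and the neighbor cap more concrete, here is a small standalone sketch. It assumes a tab-separated edge list with three columns (source ID, target ID, edge weight) and keeps the highest-weight neighbors per node. The file contents and the helper `top_neighbors` are hypothetical, and the sketch illustrates the idea of capping neighbors only; it does not describe how `pack_nbrs.py` works internally.

```python
import csv
import io
from collections import defaultdict

# Hypothetical graph contents: source ID, target ID, edge weight (tab-separated).
GRAPH_TSV = "a\tb\t0.9\na\tc\t0.4\na\td\t0.7\nb\tc\t0.8\n"

def top_neighbors(tsv_text, max_nbrs):
    """Return each source node's highest-weight targets, at most max_nbrs each."""
    adjacency = defaultdict(list)
    reader = csv.reader(io.StringIO(tsv_text), delimiter="\t")
    for source, target, weight in reader:
        adjacency[source].append((float(weight), target))
    return {
        node: [target for _, target in sorted(edges, reverse=True)[:max_nbrs]]
        for node, edges in adjacency.items()
    }

print(top_neighbors(GRAPH_TSV, max_nbrs=2))
```

Edges are treated as directed here, so a node only gains neighbors from lines where it appears in the first column.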

import tensorflow as tf
import neural_structured_learning as nsl

# Create a custom model (sequential, functional, or subclass).
base_model = tf.keras.Sequential(…)

# Wrap the custom model with graph regularization.
graph_config = nsl.configs.GraphRegConfig(
    neighbor_config=nsl.configs.GraphNeighborConfig(max_neighbors=1))
graph_model = nsl.keras.GraphRegularization(base_model, graph_config)

# Compile, train, and evaluate.
graph_model.compile(
    optimizer='adam',
    loss=tf.keras.losses.SparseCategoricalCrossentropy(),
    metrics=['accuracy'])
graph_model.fit(train_dataset, epochs=5)
graph_model.evaluate(test_dataset)

What if No Explicit Structure is Given?

import tensorflow as tf
import neural_structured_learning as nsl

# Create a base model (sequential, functional, or subclass).
model = tf.keras.Sequential(…)

# Wrap the model with adversarial regularization.
adv_config = nsl.configs.make_adv_reg_config(multiplier=0.2, adv_step_size=0.05)
adv_model = nsl.keras.AdversarialRegularization(model, adv_config=adv_config)

# Compile, train, and evaluate.
adv_model.compile(
    optimizer='adam',
    loss='sparse_categorical_crossentropy',
    metrics=['accuracy'])
adv_model.fit({'feature': x_train, 'label': y_train}, epochs=5)
adv_model.evaluate({'feature': x_test, 'label': y_test})

With fewer than 5 additional lines (including the comment), we obtain a neural model that trains with adversarial examples, which provide an implicit structure. Empirically, models trained without adversarial examples suffer a significant accuracy loss (e.g., 30% lower) when malicious perturbations that humans cannot detect are added to the inputs.
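To show what "adversarial examples providing an implicit structure" means in practice, the sketch below perturbs one input of a tiny logistic-regression model in the direction that increases its loss, then adds the loss on that perturbed input as a regularization term. It is a simplified illustration under stated assumptions: the model, the sign-based gradient step, and all function names are chosen for this example, and the library may compute its perturbations differently. Only the values `0.2` and `0.05` mirror the `multiplier` and `adv_step_size` settings used above.

```python
import math

def predict(weights, bias, x):
    """Logistic-regression probability of the positive class."""
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1.0 / (1.0 + math.exp(-z))

def loss(weights, bias, x, y):
    """Binary cross-entropy for one example with label y in {0, 1}."""
    p = predict(weights, bias, x)
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))

def adversarial_example(weights, bias, x, y, step_size):
    """Shift x by step_size along the sign of the loss gradient w.r.t. x."""
    p = predict(weights, bias, x)
    grad = [(p - y) * w for w in weights]  # d(loss)/dx for logistic regression
    sign = [(g > 0) - (g < 0) for g in grad]
    return [xi + step_size * s for xi, s in zip(x, sign)]

def regularized_loss(weights, bias, x, y, multiplier, step_size):
    """Loss on the clean input plus multiplier times loss on the perturbed input."""
    x_adv = adversarial_example(weights, bias, x, y, step_size)
    return loss(weights, bias, x, y) + multiplier * loss(weights, bias, x_adv, y)

weights, bias = [2.0, -1.0], 0.0  # hypothetical model parameters
x, y = [0.5, 0.2], 1              # hypothetical input and label
x_adv = adversarial_example(weights, bias, x, y, step_size=0.05)
print(loss(weights, bias, x, y), loss(weights, bias, x_adv, y))
print(regularized_loss(weights, bias, x, y, multiplier=0.2, step_size=0.05))
```

The perturbed input differs from the original by at most the step size in each coordinate, yet its loss is higher. Training on the sum of both terms encourages predictions that stay stable within that small neighborhood.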

Acknowledgements
