| CARVIEW |

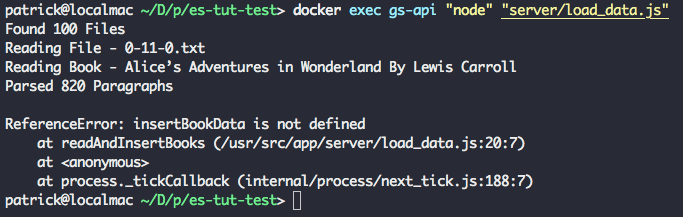

Open Cryptochat - A Tutorial

Cryptography is important. Without encryption, the internet as we know it would not be possible - data sent online would be as vulnerable to interception as a message shouted across a crowded room. Cryptography is also a major topic in current events, increasingly playing a central role in law enforcement investigations and government legislation.

Encryption is an invaluable tool for journalists, activists, nation-states, businesses, and everyday people who need to protect their data from the ever-present threat of hackers, spies, and advertising agencies.

An understanding of how to utilize strong encryption is essential for modern software development. We will not be delving much into the underlying math and theory of cryptography for this tutorial; instead, the focus will be on how to harness these techniques for your own applications.

In this tutorial, we will walk through the basic concepts and implementation of an end-to-end 2048-bit RSA encrypted messenger. We'll be utilizing Vue.js for coordinating the frontend functionality along with a Node.js backend using Socket.io for sending messages between users.

- Live Preview - https://chat.patricktriest.com

- Github Repository - https://github.com/triestpa/Open-Cryptochat

The concepts that we are covering in this tutorial are implemented in Javascript and are mostly intended to be platform-agnostic. We will be building a traditional browser-based web app, but you can adapt this code to work within a pre-built desktop (using Electron) or mobile ( React Native, Ionic, Cordova) application binary if you are concerned about browser-based application security.[1] Likewise, implementing similar functionality in another programming language should be relatively straightforward since most languages have reputable open-source encryption libraries available; the base syntax will change but the core concepts remain universal.

Disclaimer - This is meant to be a primer in end-to-end encryption implementation, not a definitive guide to building the Fort Knox of browser chat applications. I've worked to provide useful information on adding cryptography to your Javascript applications, but I cannot 100% guarantee the security of the resulting app. There's a lot that can go wrong at all stages of the process, especially at the stages not covered by this tutorial such as setting up web hosting and securing the server(s). If you are a security expert, and you find vulnerabilities in the tutorial code, please feel free to reach out to me by email (patrick.triest@gmail.com) or in the comments section below.

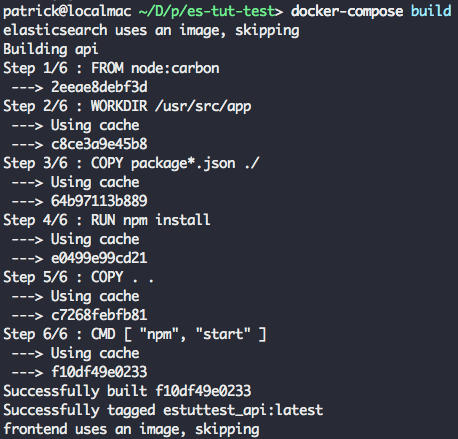

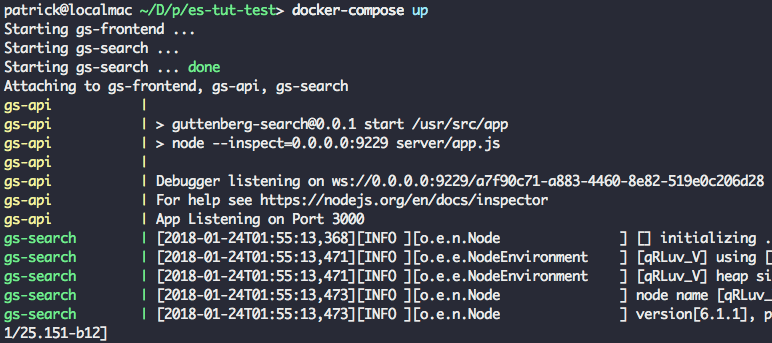

1 - Project Setup

1.0 - Install Dependencies

You'll need to have Node.js (version 6 or higher) installed in order to run the backend for this app.

Create an empty directory for the project and add a package.json file with the following contents.

{

"name": "open-cryptochat",

"version": "1.0.0",

"node":"8.1.4",

"license": "MIT",

"author": "patrick.triest@gmail.com",

"description": "End-to-end RSA-2048 encrypted chat application.",

"main": "app.js",

"engines": {

"node": ">=7.6"

},

"scripts": {

"start": "node app.js"

},

"dependencies": {

"express": "4.15.3",

"socket.io": "2.0.3"

}

}

Run npm install on the command line to install the two Node.js dependencies.

1.1 - Create Node.js App

Create a file called app.js, and add the following contents.

const express = require('express')

// Setup Express server

const app = express()

const http = require('http').Server(app)

// Attach Socket.io to server

const io = require('socket.io')(http)

// Serve web app directory

app.use(express.static('public'))

// INSERT SOCKET.IO CODE HERE

// Start server

const port = process.env.PORT || 3000

http.listen(port, () => {

console.log(`Chat server listening on port ${port}.`)

})

This is the core server logic. Right now, all it will do is start a server and make all of the files in the local /public directory accessible to web clients.

In production, I would strongly recommend serving your frontend code separately from the Node.js app, using battle-hardened server software such Apache and Nginx, or hosting the website on file storage service such as AWS S3. For this tutorial, however, using the Express static file server is the simplest way to get the app running.

1.2 - Add Frontend

Create a new directory called public. This is where we'll put all of the frontend web app code.

1.2.0 - Add HTML Template

Create a new file, /public/index.html, and add these contents.

<!DOCTYPE html>

<html lang="en">

<head>

<meta charset="utf-8">

<title>Open Cryptochat</title>

<meta name="description" content="A minimalist, open-source, end-to-end RSA-2048 encrypted chat application.">

<meta name="viewport" content="width=device-width, initial-scale=1, maximum-scale=1, user-scalable=no">

<link href="https://fonts.googleapis.com/css?family=Montserrat:300,400" rel="stylesheet">

<link href="https://fonts.googleapis.com/css?family=Roboto+Mono" rel="stylesheet">

<link href="/styles.css" rel="stylesheet">

</head>

<body>

<div id="vue-instance">

<!-- Add Chat Container Here -->

<div class="info-container full-width">

<!-- Add Room UI Here -->

<div class="notification-list" ref="notificationContainer">

<h1>NOTIFICATION LOG</h1>

<div class="notification full-width" v-for="notification in notifications">

<div class="notification-timestamp">{{ notification.timestamp }}</div>

<div class="notification-message">{{ notification.message }}</div>

</div>

</div>

<div class="flex-fill"></div>

<!-- Add Encryption Key UI Here -->

</div>

<!-- Add Bottom Bar Here -->

</div>

<script src="https://cdnjs.cloudflare.com/ajax/libs/vue/2.4.1/vue.min.js"></script>

<script src="https://cdnjs.cloudflare.com/ajax/libs/socket.io/2.0.3/socket.io.slim.js"></script>

<script src="https://cdnjs.cloudflare.com/ajax/libs/immutable/3.8.1/immutable.min.js"></script>

<script src="/page.js"></script>

</body>

</html>

This template sets up the baseline HTML structure and downloads the client-side JS dependencies. It will also display a simple list of notifications once we add the client-side JS code.

1.2.1 - Create Vue.js App

Add the following contents to a new file, /public/page.js.

/** The core Vue instance controlling the UI */

const vm = new Vue ({

el: '#vue-instance',

data () {

return {

cryptWorker: null,

socket: null,

originPublicKey: null,

destinationPublicKey: null,

messages: [],

notifications: [],

currentRoom: null,

pendingRoom: Math.floor(Math.random() * 1000),

draft: ''

}

},

created () {

this.addNotification('Hello World')

},

methods: {

/** Append a notification message in the UI */

addNotification (message) {

const timestamp = new Date().toLocaleTimeString()

this.notifications.push({ message, timestamp })

},

}

})

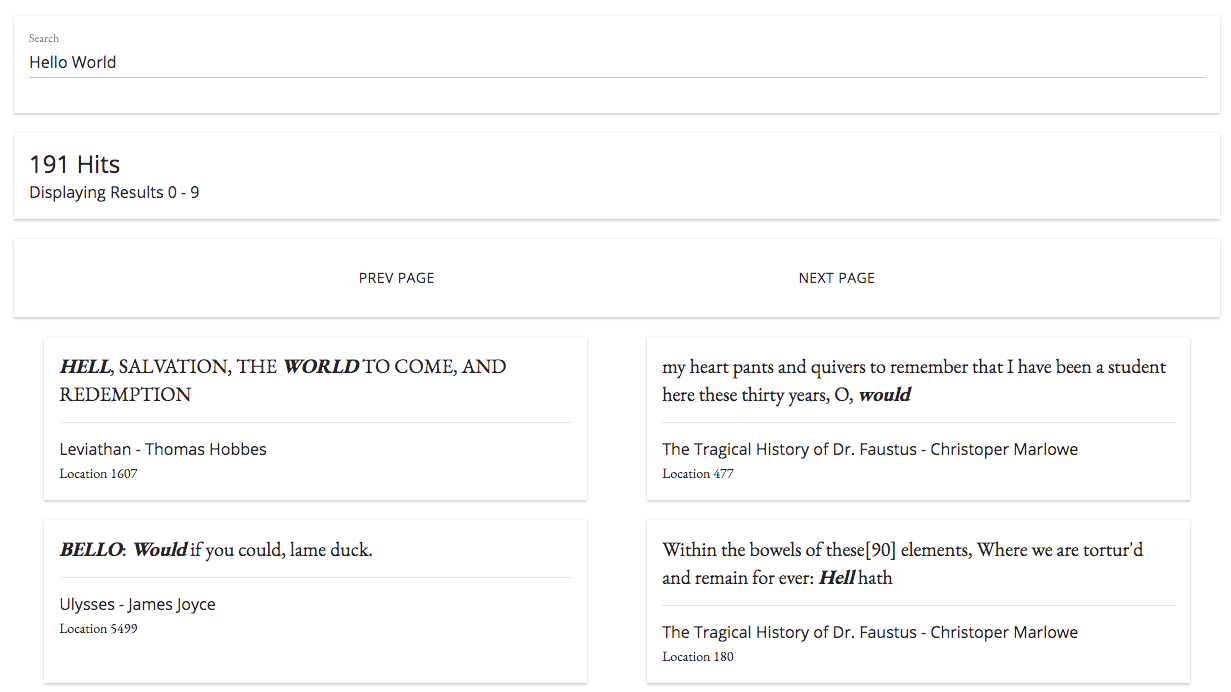

This script will initialize the Vue.js application and will add a "Hello World" notification to the UI.

1.2.2 - Add Styling

Create a new file, /public/styles.css and paste in the following stylesheet.

/* Global */

:root {

--black: #111111;

--light-grey: #d6d6d6;

--highlight: yellow;

}

body {

background: var(--black);

color: var(--light-grey);

font-family: 'Roboto Mono', monospace;

height: 100vh;

display: flex;

padding: 0;

margin: 0;

}

div { box-sizing: border-box; }

input, textarea, select { font-family: inherit; font-size: small; }

textarea:focus, input:focus { outline: none; }

.full-width { width: 100%; }

.green { color: green; }

.red { color: red; }

.yellow { color: yellow; }

.center-x { margin: 0 auto; }

.center-text { width: 100%; text-align: center; }

h1, h2, h3 { font-family: 'Montserrat', sans-serif; }

h1 { font-size: medium; }

h2 { font-size: small; font-weight: 300; }

h3 { font-size: x-small; font-weight: 300; }

p { font-size: x-small; }

.clearfix:after {

visibility: hidden;

display: block;

height: 0;

clear: both;

}

#vue-instance {

display: flex;

flex-direction: row;

flex: 1 0 100%;

overflow-x: hidden;

}

/** Chat Window **/

.chat-container {

flex: 0 0 60%;

word-wrap: break-word;

overflow-x: hidden;

overflow-y: scroll;

padding: 6px;

margin-bottom: 50px;

}

.message > p { font-size: small; }

.title-header > p {

font-family: 'Montserrat', sans-serif;

font-weight: 300;

}

/* Info Panel */

.info-container {

flex: 0 0 40%;

border-left: solid 1px var(--light-grey);

padding: 12px;

overflow-x: hidden;

overflow-y: scroll;

margin-bottom: 50px;

position: relative;

justify-content: space-around;

display: flex;

flex-direction: column;

}

.divider {

padding-top: 1px;

max-height: 0px;

min-width: 200%;

background: var(--light-grey);

margin: 12px -12px;

flex: 1 0;

}

.notification-list {

display: flex;

flex-direction: column;

overflow: scroll;

padding-bottom: 24px;

flex: 1 0 40%;

}

.notification {

font-family: 'Montserrat', sans-serif;

font-weight: 300;

font-size: small;

padding: 4px 0;

display: inline-flex;

}

.notification-timestamp {

flex: 0 0 20%;

padding-right: 12px;

}

.notification-message { flex: 0 0 80%; }

.notification:last-child {

margin-bottom: 24px;

}

.keys {

display: block;

font-size: xx-small;

overflow-x: hidden;

overflow-y: scroll;

}

.keys > .divider {

width: 75%;

min-width: 0;

margin: 16px auto;

}

.key { overflow: scroll; }

.room-select {

display: flex;

min-height: 24px;

font-family: 'Montserrat', sans-serif;

font-weight: 300;

}

#room-input {

flex: 0 0 60%;

background: none;

border: none;

border-bottom: 1px solid var(--light-grey);

border-top: 1px solid var(--light-grey);

border-left: 1px solid var(--light-grey);

color: var(--light-grey);

padding: 4px;

}

.yellow-button {

flex: 0 0 30%;

background: none;

border: 1px solid var(--highlight);

color: var(--highlight);

cursor: pointer;

}

.yellow-button:hover {

background: var(--highlight);

color: var(--black);

}

.yellow > a { color: var(--highlight); }

.loader {

border: 4px solid black;

border-top: 4px solid var(--highlight);

border-radius: 50%;

width: 48px;

height: 48px;

animation: spin 2s linear infinite;

}

@keyframes spin {

0% { transform: rotate(0deg); }

100% { transform: rotate(360deg); }

}

/* Message Input Bar */

.message-input {

background: none;

border: none;

color: var(--light-grey);

width: 90%;

}

.bottom-bar {

border-top: solid 1px var(--light-grey);

background: var(--black);

position: fixed;

bottom: 0;

left: 0;

padding: 12px;

height: 48px;

}

.message-list {

margin-bottom: 40px;

}

We won't really be going into the CSS, but I can assure you that it's all fairly straight-forward.

For the sake of simplicity, we won't bother to add a build system to our frontend. A build system, in my opinion, is just not really necessary for an app this simple (the total gzipped payload of the completed app is under 100kb). You are very welcome (and encouraged, since it will allow the app to be backwards compatible with outdated browsers) to add a build system such as Webpack, Gulp, or Rollup to the application if you decide to fork this code into your own project.

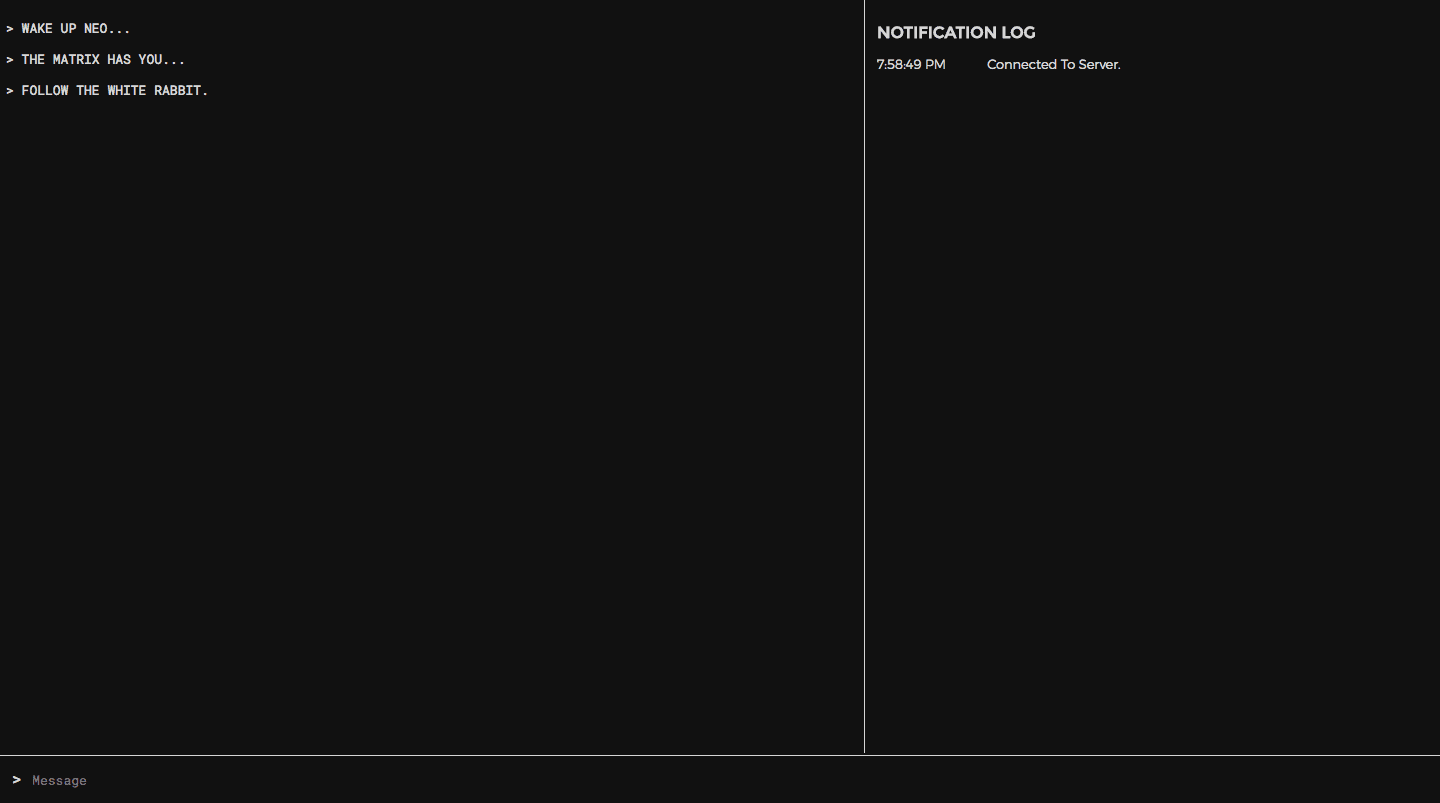

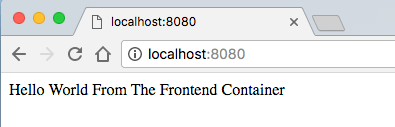

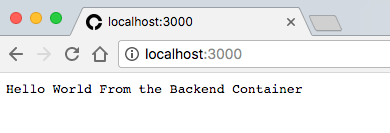

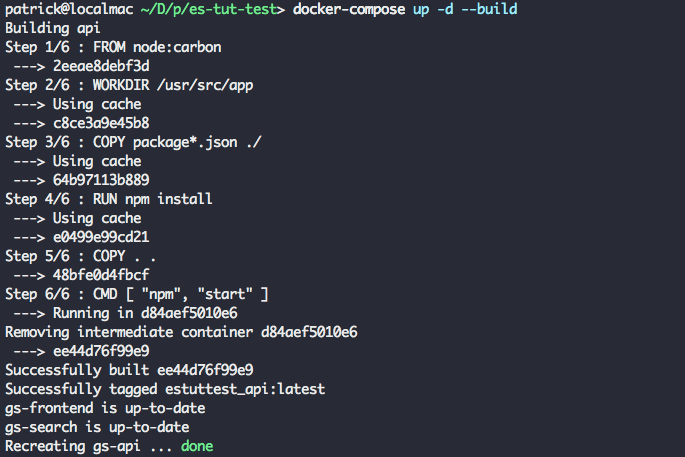

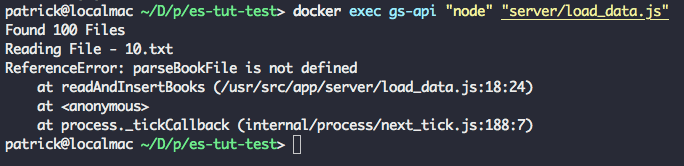

1.3 - Try it out

Try running npm start on the command-line. You should see the command-line output Chat server listening on port 3000.. Open https://localhost:3000 in your browser, and you should see a very dark, empty web app displaying "Hello World" on the right side of the page.

2 - Basic Messaging

Now that the baseline project scaffolding is in place, we'll start by adding basic (unencrypted) real-time messaging.

2.0 - Setup Server-Side Socket Listeners

In /app.js, add the follow code directly below the // INSERT SOCKET.IO CODE HERE marker.

/** Manage behavior of each client socket connection */

io.on('connection', (socket) => {

console.log(`User Connected - Socket ID ${socket.id}`)

// Store the room that the socket is connected to

let currentRoom = 'DEFAULT'

/** Process a room join request. */

socket.on('JOIN', (roomName) => {

socket.join(currentRoom)

// Notify user of room join success

io.to(socket.id).emit('ROOM_JOINED', currentRoom)

// Notify room that user has joined

socket.broadcast.to(currentRoom).emit('NEW_CONNECTION', null)

})

/** Broadcast a received message to the room */

socket.on('MESSAGE', (msg) => {

console.log(`New Message - ${msg.text}`)

socket.broadcast.to(currentRoom).emit('MESSAGE', msg)

})

})

This code-block will create a connection listener that will manage any clients who connect to the server from the front-end application. Currently, it just adds them to a DEFAULT chat room, and retransmits any message that it receives to the rest of the users in the room.

2.1 - Setup Client-Side Socket Listeners

Within the frontend, we'll add some code to connect to the server. Replace the created function in /public/page.js with the following.

created () {

// Initialize socket.io

this.socket = io()

this.setupSocketListeners()

},

Next, we'll need to add a few custom functions to manage the client-side socket connection and to send/receive messages. Add the following to /public/page.js inside the methods block of the Vue app object.

/** Setup Socket.io event listeners */

setupSocketListeners () {

// Automatically join default room on connect

this.socket.on('connect', () => {

this.addNotification('Connected To Server.')

this.joinRoom()

})

// Notify user that they have lost the socket connection

this.socket.on('disconnect', () => this.addNotification('Lost Connection'))

// Display message when recieved

this.socket.on('MESSAGE', (message) => {

this.addMessage(message)

})

},

/** Send the current draft message */

sendMessage () {

// Don't send message if there is nothing to send

if (!this.draft || this.draft === '') { return }

const message = this.draft

// Reset the UI input draft text

this.draft = ''

// Instantly add message to local UI

this.addMessage(message)

// Emit the message

this.socket.emit('MESSAGE', message)

},

/** Join the chatroom */

joinRoom () {

this.socket.emit('JOIN')

},

/** Add message to UI */

addMessage (message) {

this.messages.push(message)

},

2.2 - Display Messages in UI

Finally, we'll need to provide a UI to send and display messages.

In order to display all messages in the current chat, add the following to /public/index.html after the <!-- Add Chat Container Here --> comment.

<div class="chat-container full-width" ref="chatContainer">

<div class="message-list">

<div class="message full-width" v-for="message in messages">

<p>

> {{ message }}

</p>

</div>

</div>

</div>

To add a text input bar for the user to write messages in, add the following to /public/index.html, after the <!-- Add Bottom Bar Here --> comment.

<div class="bottom-bar full-width">

> <input class="message-input" type="text" placeholder="Message" v-model="draft" @keyup.enter="sendMessage()">

</div>

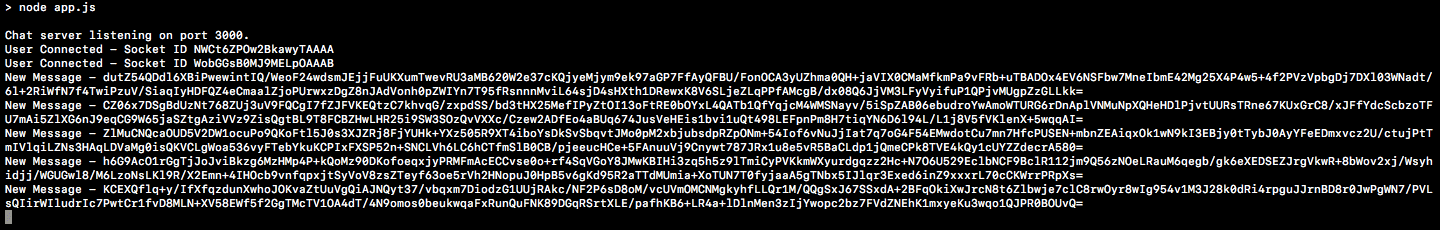

Now, restart the server and open https://localhost:3000 in two separate tabs/windows. Try sending messages back and forth between the tabs. In the command-line, you should be able to see a server log of messages being sent.

Encryption 101

Cool, now we have a real-time messaging application. Before adding end-to-end encryption, it's important to have a basic understanding of how asymmetric encryption works.

Symetric Encryption & One Way Functions

Let's say we're trading secret numbers. We're sending the numbers through a third party, but we don't want the third party to know which number we are exchanging.

In order to accomplish this, we'll exchange a shared secret first - let's use 7.

To encrypt the message, we'll first multiply our shared secret (7) by a random number n, and add a value x to the result. In this equation, x represents the number that we want to send and y represents the encrypted result.

(7 * n) + x = y

We can then use modular arithmetic in order to transform an encrypted input into the decrypted output.

y mod 7 = x

Here, y as the exposed (encrypted) message and x is the original unencrypted message.

If one of us wants to exchange the number 2, we could compute (7*4) + 2 and send 30 as a message. We both know the secret key (7), so we'll both be able to calculate 30 mod 7 and determine that 2 was the original number.

The original number (2), is effectively hidden from anyone listening in the middle since the only message passed between us was 30. If a third party is able to retrieve both the unencrypted result (30) and the encrypted value (2), they would still not know the value of the secret key. In this example, 30 mod 14 and 30 mod 28 are also equal to 2, so an interceptor could not know for certain whether the secret key is 7, 14, or 28, and therefore could not dependably decipher the next message.

Modulo is thus considered a "one-way" function since it cannot be trivially reversed.

Modern encryption algorithms are, to vastly simplify and generalize, very complex applications of this general principle. Through the use of large prime numbers, modular exponentiation, long private keys, and multiple rounds of cipher transformations, these algorithms generally take a very inconvenient amount a time (1+ million years) to crack.

Quantum computers could, theoretically, crack these ciphers more quickly. You can read more about this here. This technology is still in its infancy, so we probably don't need to worry about encrypted data being compromised in this manner just yet.

The above example assumes that both parties were able to exchange a secret (in this case 7) ahead of time. This is called symmetric encryption since the same secret key is used for both encrypting and decrypting the message. On the internet, however, this is often not a viable option - we need a way to send encrypted messages without requiring offline coordination to decide on a shared secret. This is where asymmetric encryption comes into play.

Public Key Cryptography

In contrast to symmetric encryption, public key cryptography (asymmetric encryption) uses pairs of keys (one public, one private) instead of a single shared secret - public keys are for encrypting data, and private keys are for decrypting data.

A public key is like an open box with an unbreakable lock. If someone wants to send you a message, they can place that message in your public box, and close the lid to lock it. The message can now be sent, to be delivered by an untrusted party without needing to worry about the contents being exposed. Once I receive the box, I'll unlock it with my private key - the only existing key which can open that box.

Exchanging public keys is like exchanging those boxes - each private key is kept safe with the original owner, so the contents of the box are safe in transit.

This is, of course, a bare-bones simplification of how public key cryptography works. If you're curious to learn more (especially regarding the history and mathematical basis for these techniques) I would strongly recommend starting with these two videos.

3 - Crypto Web Worker

Cryptographic operations tend to be computationally intensive. Since Javascript is single-threaded, doing these operations on the main UI thread will cause the browser to freeze for a few seconds.

Wrapping the operations in a promise will not help, since promises are for managing asynchronous operations on a single-thread, and do not provide any performance benefit for computationally intensive tasks.

In order to keep the application performant, we will use a Web Worker to perform cryptographic computations on a separate browser thread. We'll be using JSEncrypt, a reputable Javascript RSA implementation originating from Stanford. Using JSEncrypt, we'll create a few helper functions for encryption, decryption, and key pair generation.

3.0 - Create Web Worker To Wrap the JSencrypt Methods

Add a new file called crypto-worker.js in the public directory. This file will store our web worker code in order to perform encryption operations on a separate browser thread.

self.window = self // This is required for the jsencrypt library to work within the web worker

// Import the jsencrypt library

self.importScripts('https://cdnjs.cloudflare.com/ajax/libs/jsencrypt/2.3.1/jsencrypt.min.js');

let crypt = null

let privateKey = null

/** Webworker onmessage listener */

onmessage = function(e) {

const [ messageType, messageId, text, key ] = e.data

let result

switch (messageType) {

case 'generate-keys':

result = generateKeypair()

break

case 'encrypt':

result = encrypt(text, key)

break

case 'decrypt':

result = decrypt(text)

break

}

// Return result to the UI thread

postMessage([ messageId, result ])

}

/** Generate and store keypair */

function generateKeypair () {

crypt = new JSEncrypt({default_key_size: 2056})

privateKey = crypt.getPrivateKey()

// Only return the public key, keep the private key hidden

return crypt.getPublicKey()

}

/** Encrypt the provided string with the destination public key */

function encrypt (content, publicKey) {

crypt.setKey(publicKey)

return crypt.encrypt(content)

}

/** Decrypt the provided string with the local private key */

function decrypt (content) {

crypt.setKey(privateKey)

return crypt.decrypt(content)

}

This web worker will receive messages from the UI thread in the onmessage listener, perform the requested operation, and post the result back to the UI thread. The private encryption key is never directly exposed to the UI thread, which helps to mitigate the potential for key theft from a cross-site scripting (XSS) attack.

3.1 - Configure Vue App To Communicate with Web Worker

Next, we'll configure the UI controller to communicate with the web worker. Sequential call/response communications using event listeners can be painful to synchronize. To simplify this, we'll create a utility function that wraps the entire communication lifecycle in a promise. Add the following code to the methods block in /public/page.js.

/** Post a message to the web worker and return a promise that will resolve with the response. */

getWebWorkerResponse (messageType, messagePayload) {

return new Promise((resolve, reject) => {

// Generate a random message id to identify the corresponding event callback

const messageId = Math.floor(Math.random() * 100000)

// Post the message to the webworker

this.cryptWorker.postMessage([messageType, messageId].concat(messagePayload))

// Create a handler for the webworker message event

const handler = function (e) {

// Only handle messages with the matching message id

if (e.data[0] === messageId) {

// Remove the event listener once the listener has been called.

e.currentTarget.removeEventListener(e.type, handler)

// Resolve the promise with the message payload.

resolve(e.data[1])

}

}

// Assign the handler to the webworker 'message' event.

this.cryptWorker.addEventListener('message', handler)

})

}

This code will allow us to trigger an operation on the web worker thread and receive the result in a promise. This can be a very useful helper function in any project that outsources call/response processing to web workers.

4 - Key Exchange

In our app, the first step will be generating a public-private key pair for each user. Then, once the users are in the same chat, we will exchange public keys so that each user can encrypt messages which only the other user can decrypt. Hence, we will always encrypt messages using the recipient's public key, and we will always decrypt messages using the recipient's private key.

4.0 - Add Server-Side Socket Listener To Transmit Public Keys

On the server-side, we'll need a new socket listener that will receive a public-key from a client and re-broadcast this key to the rest of the room. We'll also add a listener to let clients know when someone has disconnected from the current room.

Add the following listeners to /app.js within the io.on('connection', (socket) => { ... } callback.

/** Broadcast a new publickey to the room */

socket.on('PUBLIC_KEY', (key) => {

socket.broadcast.to(currentRoom).emit('PUBLIC_KEY', key)

})

/** Broadcast a disconnection notification to the room */

socket.on('disconnect', () => {

socket.broadcast.to(currentRoom).emit('USER_DISCONNECTED', null)

})

4.1 - Generate Key Pair

Next, we'll replace the created function in /public/page.js to initialize the web worker and generate a new key pair.

async created () {

this.addNotification('Welcome! Generating a new keypair now.')

// Initialize crypto webworker thread

this.cryptWorker = new Worker('crypto-worker.js')

// Generate keypair and join default room

this.originPublicKey = await this.getWebWorkerResponse('generate-keys')

this.addNotification('Keypair Generated')

// Initialize socketio

this.socket = io()

this.setupSocketListeners()

},

We are using the async/await syntax to receive the web worker promise result with a single line of code.

4.2 - Add Public Key Helper Functions

We'll also add a few new functions to /public/page.js for sending the public key, and to trim down the key to a human-readable identifier.

/** Emit the public key to all users in the chatroom */

sendPublicKey () {

if (this.originPublicKey) {

this.socket.emit('PUBLIC_KEY', this.originPublicKey)

}

},

/** Get key snippet for display purposes */

getKeySnippet (key) {

return key.slice(400, 416)

},

4.3 - Send and Receive Public Key

Next, we'll add some listeners to the client-side socket code, in order to send the local public key whenever a new user joins the room, and to save the public key sent by the other user.

Add the following to /public/page.js within the setupSocketListeners function.

// When a user joins the current room, send them your public key

this.socket.on('NEW_CONNECTION', () => {

this.addNotification('Another user joined the room.')

this.sendPublicKey()

})

// Broadcast public key when a new room is joined

this.socket.on('ROOM_JOINED', (newRoom) => {

this.currentRoom = newRoom

this.addNotification(`Joined Room - ${this.currentRoom}`)

this.sendPublicKey()

})

// Save public key when received

this.socket.on('PUBLIC_KEY', (key) => {

this.addNotification(`Public Key Received - ${this.getKeySnippet(key)}`)

this.destinationPublicKey = key

})

// Clear destination public key if other user leaves room

this.socket.on('user disconnected', () => {

this.notify(`User Disconnected - ${this.getKeySnippet(this.destinationKey)}`)

this.destinationPublicKey = null

})

4.4 - Show Public Keys In UI

Finally, we'll add some HTML to display the two public keys.

Add the following to /public/index.html, directly below the <!-- Add Encryption Key UI Here --> comment.

<div class="divider"></div>

<div class="keys full-width">

<h1>KEYS</h1>

<h2>THEIR PUBLIC KEY</h2>

<div class="key red" v-if="destinationPublicKey">

<h3>TRUNCATED IDENTIFIER - {{ getKeySnippet(destinationPublicKey) }}</h3>

<p>{{ destinationPublicKey }}</p>

</div>

<h2 v-else>Waiting for second user to join room...</h2>

<div class="divider"></div>

<h2>YOUR PUBLIC KEY</h2>

<div class="key green" v-if="originPublicKey">

<h3>TRUNCATED IDENTIFIER - {{ getKeySnippet(originPublicKey) }}</h3>

<p>{{ originPublicKey }}</p>

</div>

<div class="keypair-loader full-width" v-else>

<div class="center-x loader"></div>

<h2 class="center-text">Generating Keypair...</h2>

</div>

</div>

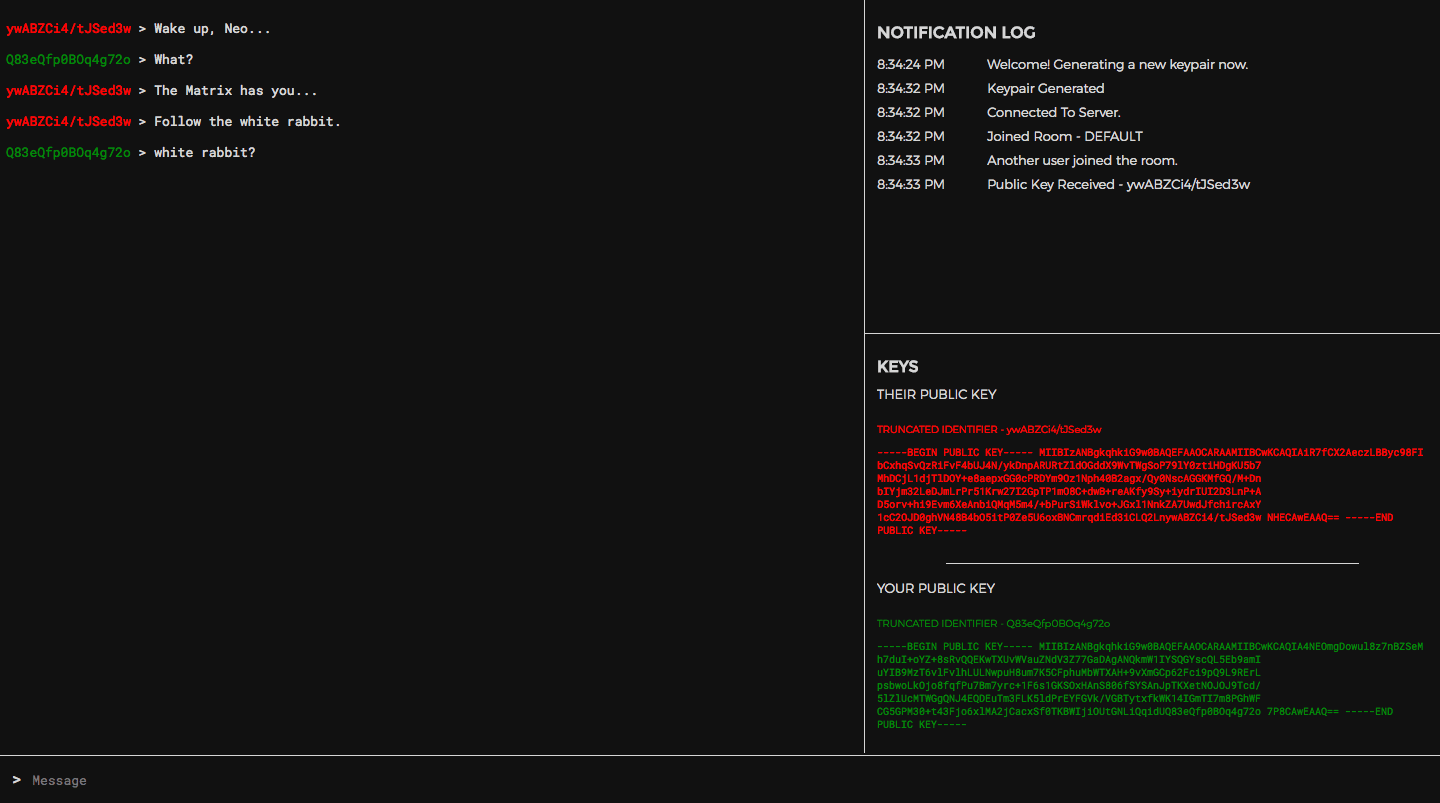

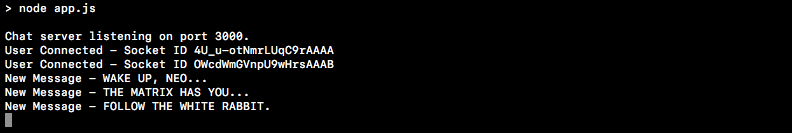

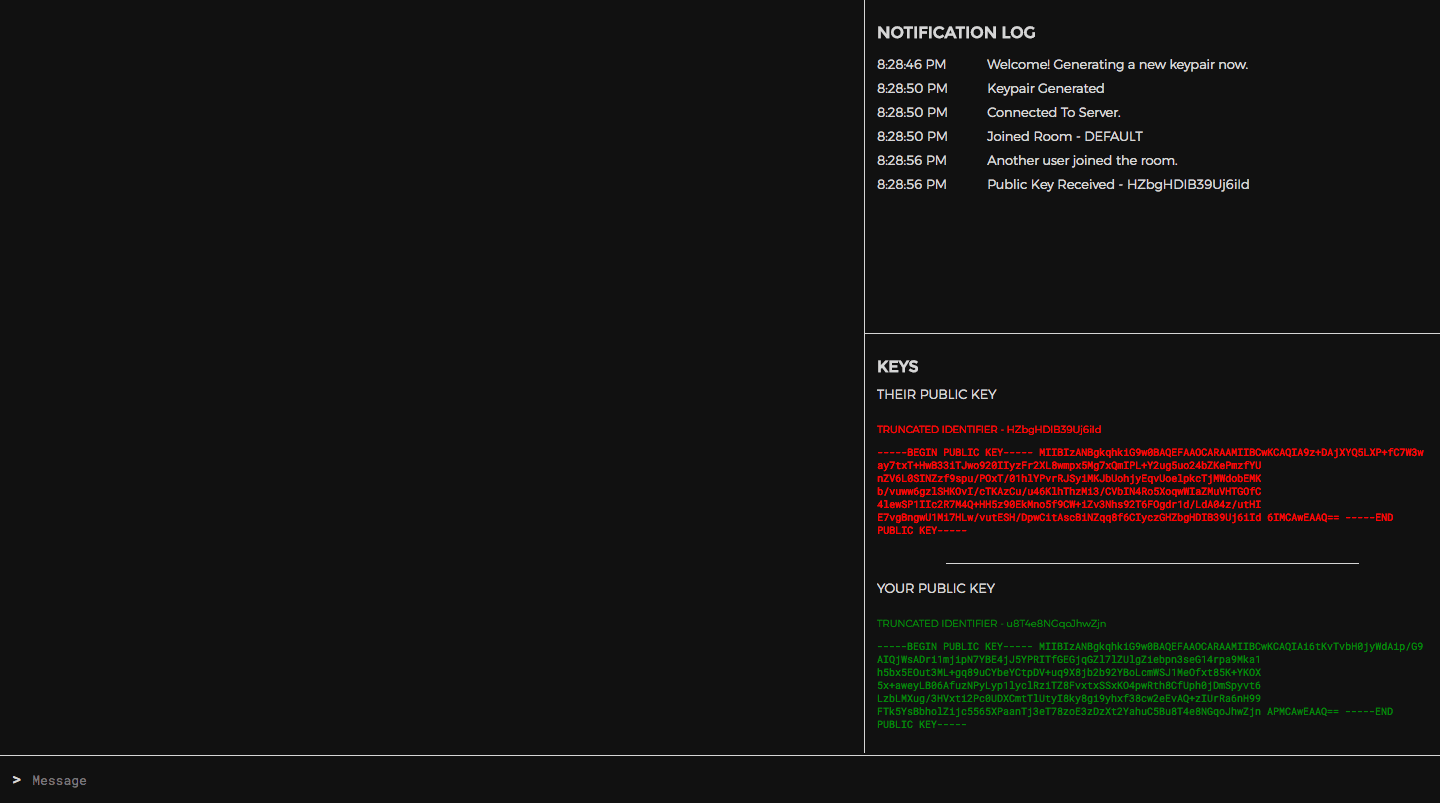

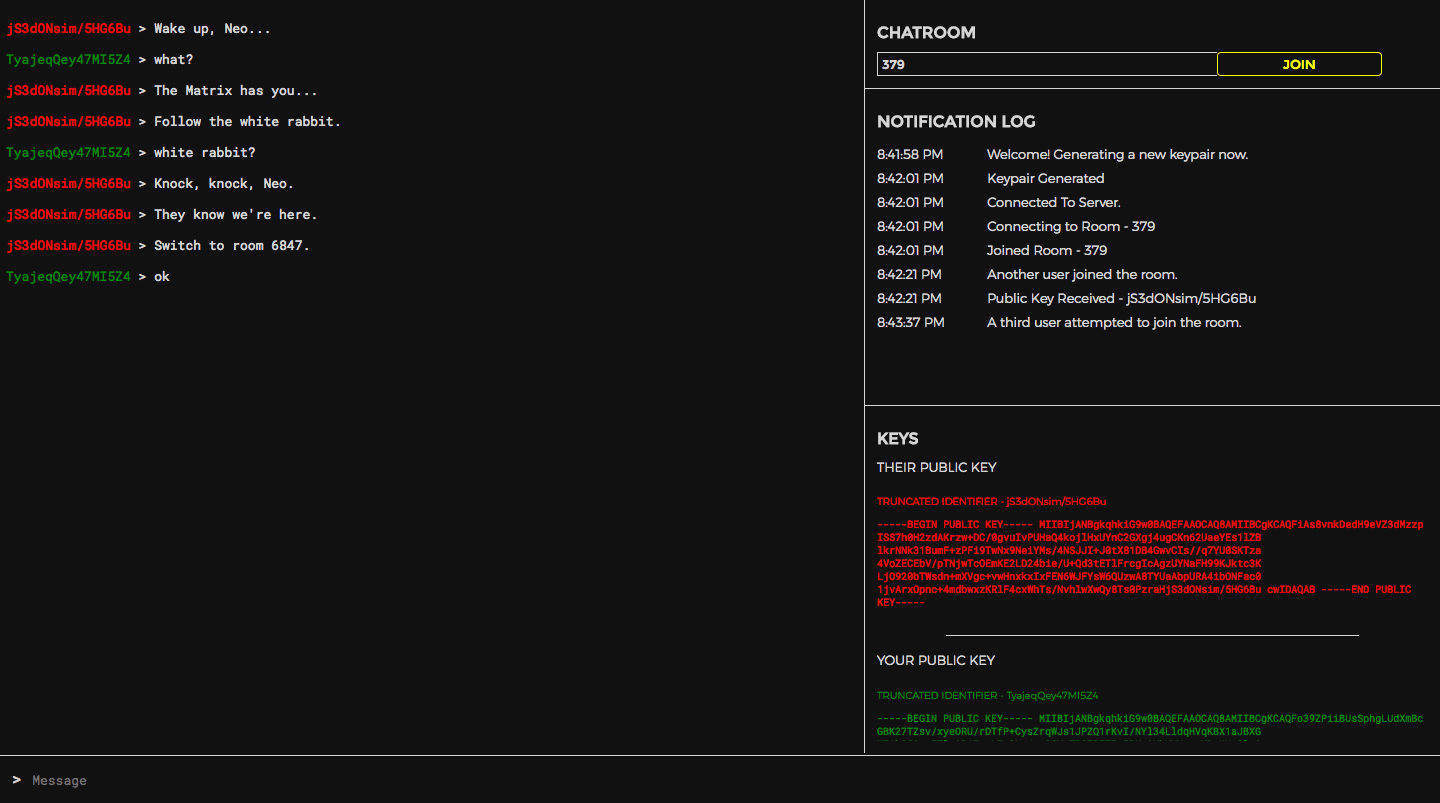

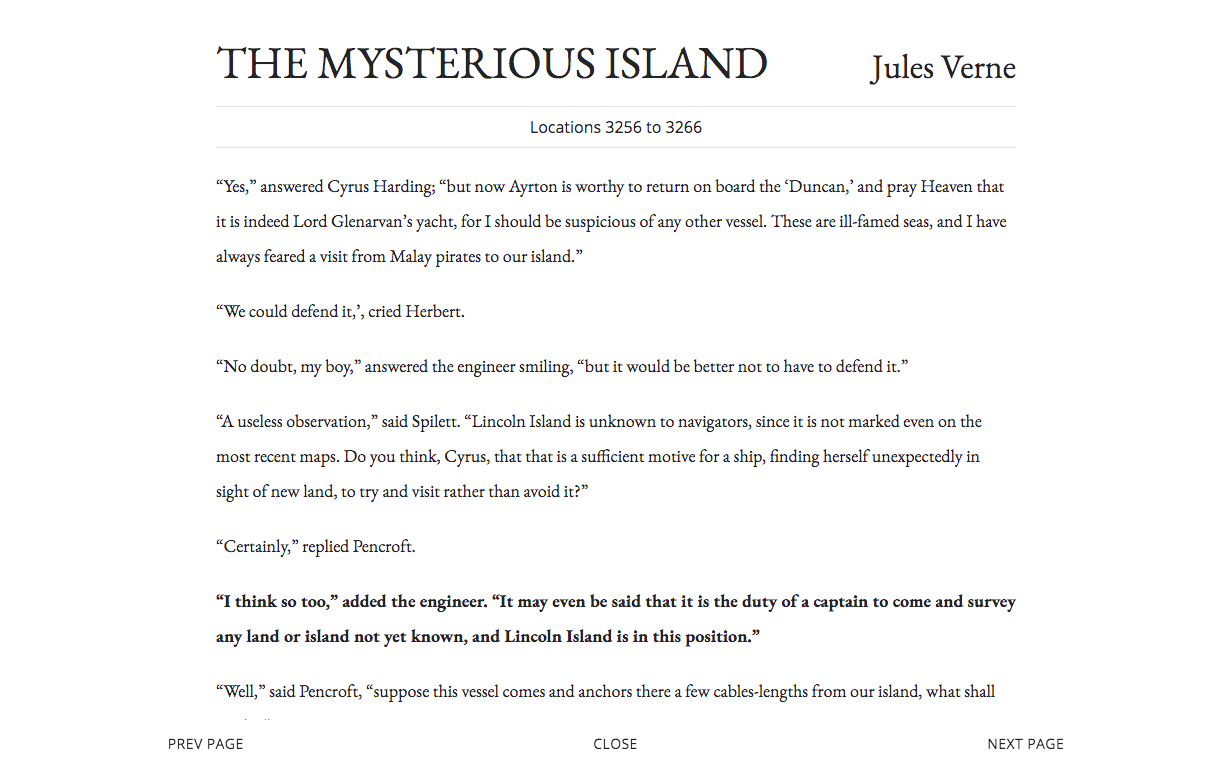

Try restarting the app and reloading https://localhost:3000. You should be able to simulate a successful key exchange by opening two browser tabs.

Having more than two pages with web app running will break the key-exchange. We'll fix this further down.

5 - Message Encryption

Now that the key-exchange is complete, encrypting and decrypting messages within the web app is rather straight-forward.

5.0 - Encrypt Message Before Sending

Replace the sendMessage function in /public/page.js with the following.

/** Encrypt and emit the current draft message */

async sendMessage () {

// Don't send message if there is nothing to send

if (!this.draft || this.draft === '') { return }

// Use immutable.js to avoid unintended side-effects.

let message = Immutable.Map({

text: this.draft,

recipient: this.destinationPublicKey,

sender: this.originPublicKey

})

// Reset the UI input draft text

this.draft = ''

// Instantly add (unencrypted) message to local UI

this.addMessage(message.toObject())

if (this.destinationPublicKey) {

// Encrypt message with the public key of the other user

const encryptedText = await this.getWebWorkerResponse(

'encrypt', [ message.get('text'), this.destinationPublicKey ])

const encryptedMsg = message.set('text', encryptedText)

// Emit the encrypted message

this.socket.emit('MESSAGE', encryptedMsg.toObject())

}

},

5.1 - Receive and Decrypt Message

Modify the client-side message listener in /public/page.js to decrypt the message once it is received.

// Decrypt and display message when received

this.socket.on('MESSAGE', async (message) => {

// Only decrypt messages that were encrypted with the user's public key

if (message.recipient === this.originPublicKey) {

// Decrypt the message text in the webworker thread

message.text = await this.getWebWorkerResponse('decrypt', message.text)

// Instantly add (unencrypted) message to local UI

this.addMessage(message)

}

})

5.2 - Display Message List

Modify the message list UI in /public/index.html (inside the chat-container) to display the decrypted message and the abbreviated public key of the sender.

<div class="message full-width" v-for="message in messages">

<p>

<span v-bind:class="(message.sender == originPublicKey) ? 'green' : 'red'">{{ getKeySnippet(message.sender) }}</span>

> {{ message.text }}

</p>

</div>

5.3 - Try It Out

Try restarting the server and reloading the page at https://localhost:3000. The UI should look mostly unchanged from how it was before, besides displaying the public key snippet of whoever sent each message.

In command-line output, the messages are no longer readable - they now display as garbled encrypted text.

6 - Chatrooms

You may have noticed a massive flaw in the current app - if we open a third tab running the web app then the encryption system breaks. Asymmetric-encryption is designed to work in one-to-one scenarios; there's no way to encrypt the message once and have it be decryptable by two separate users.

This leaves us with two options -

- Encrypt and send a separate copy of the message to each user, if there is more than one.

- Restrict each chat room to only allow two users at a time.

Since this tutorial is already quite long, we'll be going with second, simpler option.

6.0 - Server-side Room Join Logic

In order to enforce this new 2-user limit, we'll modify the server-side socket JOIN listener in /app.js, at the top of socket connection listener block.

// Store the room that the socket is connected to

// If you need to scale the app horizontally, you'll need to store this variable in a persistent store such as Redis.

// For more info, see here: https://github.com/socketio/socket.io-redis

let currentRoom = null

/** Process a room join request. */

socket.on('JOIN', (roomName) => {

// Get chatroom info

let room = io.sockets.adapter.rooms[roomName]

// Reject join request if room already has more than 1 connection

if (room && room.length > 1) {

// Notify user that their join request was rejected

io.to(socket.id).emit('ROOM_FULL', null)

// Notify room that someone tried to join

socket.broadcast.to(roomName).emit('INTRUSION_ATTEMPT', null)

} else {

// Leave current room

socket.leave(currentRoom)

// Notify room that user has left

socket.broadcast.to(currentRoom).emit('USER_DISCONNECTED', null)

// Join new room

currentRoom = roomName

socket.join(currentRoom)

// Notify user of room join success

io.to(socket.id).emit('ROOM_JOINED', currentRoom)

// Notify room that user has joined

socket.broadcast.to(currentRoom).emit('NEW_CONNECTION', null)

}

})

This modified socket logic will prevent a user from joining any room that already has two users.

6.1 - Join Room From The Client Side

Next, we'll modify our client-side joinRoom function in /public/page.js, in order to reset the state of the chat when switching rooms.

/** Join the specified chatroom */

joinRoom () {

if (this.pendingRoom !== this.currentRoom && this.originPublicKey) {

this.addNotification(`Connecting to Room - ${this.pendingRoom}`)

// Reset room state variables

this.messages = []

this.destinationPublicKey = null

// Emit room join request.

this.socket.emit('JOIN', this.pendingRoom)

}

},

6.2 - Add Notifications

Let's create two more client-side socket listeners (within the setupSocketListeners function in /public/page.js), to notify us whenever a join request is rejected.

// Notify user that the room they are attempting to join is full

this.socket.on('ROOM_FULL', () => {

this.addNotification(`Cannot join ${this.pendingRoom}, room is full`)

// Join a random room as a fallback

this.pendingRoom = Math.floor(Math.random() * 1000)

this.joinRoom()

})

// Notify room that someone attempted to join

this.socket.on('INTRUSION_ATTEMPT', () => {

this.addNotification('A third user attempted to join the room.')

})

6.3 - Add Room Join UI

Finally, we'll add some HTML to provide an interface for the user to join a room of their choosing.

Add the following to /public/index.html below the <!-- Add Room UI Here --> comment.

<h1>CHATROOM</h1>

<div class="room-select">

<input type="text" class="full-width" placeholder="Room Name" id="room-input" v-model="pendingRoom" @keyup.enter="joinRoom()">

<input class="yellow-button full-width" type="submit" v-on:click="joinRoom()" value="JOIN">

</div>

<div class="divider"></div>

6.4 - Add Autoscroll

An annoying bug remaining in the app is that the notification and chat lists do not yet auto-scroll to display new messages.

In /public/page.js, add the following function to the methods block.

/** Autoscoll DOM element to bottom */

autoscroll (element) {

if (element) { element.scrollTop = element.scrollHeight }

},

To auto-scroll the notification and message lists, we'll call autoscroll at the end of their respective add methods.

/** Add message to UI and scroll the view to display the new message. */

addMessage (message) {

this.messages.push(message)

this.autoscroll(this.$refs.chatContainer)

},

/** Append a notification message in the UI */

addNotification (message) {

const timestamp = new Date().toLocaleTimeString()

this.notifications.push({ message, timestamp })

this.autoscroll(this.$refs.notificationContainer)

},

6.5 - Try it out

That was the last step! Try restarting the node app and reloading the page at localhost:3000. You should now be able to freely switch between rooms, and any attempt to join the same room from a third browser tab will be rejected.

7 - What next?

Congrats! You have just built a completely functional end-to-end encrypted messaging app.

Github Repository - https://github.com/triestpa/Open-Cryptochat

Live Preview - https://chat.patricktriest.com

Using this baseline source code you could deploy a private messaging app on your own servers. In order to coordinate which room to meet in, one slick option could be using a time-based pseudo-random number generator (such as Google Authenticator), with a shared seed between you and a second party (I've got a Javascript "Google Authenticator" clone tutorial in the works - stay tuned).

Further Improvements

There are lots of ways to build up the app from here:

- Group chats, by storing multiple public keys, and encrypting the message for each user individually.

- Multimedia messages, by encrypting a byte-array containing the media file.

- Import and export key pairs as local files.

- Sign messages with the private key for sender identity verification. This is a trade-off because it increases the difficulty of fabricating messages, but also undermines the goal of "deniable authentication" as outlined in the OTR messaging standard.

- Experiment with different encryption systems such as:

- AES - Symmetric encryption, with a shared secret between the users. This is the only publicly available algorithm that is in use by the NSA and US Military.

- ElGamal - Similar to RSA, but with smaller cyphertexts, faster decryption, and slower encryption. This is the core algorithm that is used in PGP.

- Implement a Diffie-Helman key exchange. This is a technique of using asymmetric encryption (such as ElGamal) to exchange a shared secret, such as a symmetric encryption key (for AES). Building this on top of our existing project and exchanging a new shared secret before each message is a good way to improve the security of the app (see Perfect Forward Security).

- Build an app for virtually any use-case where intermediate servers should never have unencrypted access to the transmitted data, such as password-managers and P2P (peer-to-peer) networks.

- Refactor the app for React Native, Ionic, Cordova, or Electron in order to provide a secure pre-built application bundle for mobile and/or desktop environments.

Feel free to comment below with questions, responses, and/or feedback on the tutorial.

Security Implications Of Browser Based Encryption

Please remember to be careful. The use of these protocols in a browser-based Javascript app is a great way to experiment and understand how they work in practice, but this app is not a suitable replacement for established, peer-reviewed encryption protocol implementations such as OpenSSL and GnuPG.

Client-side browser Javascript encryption is a controversial topic among security experts due to the vulnerabilities present in web application delivery versus pre-packaged software distributions that run outside the browser. Many of these issues can be mitigated by utilizing HTTPS to prevent man-in-the-middle resource injection attacks, and by avoiding persistent storage of unencrypted sensitive data within the browser, but it is important to stay aware of potential vulnerabilities in the web platform. ↩︎

Data Science, Politics, and Police

The intersection of science, politics, personal opinion, and social policy can be rather complex. This junction of ideas and disciplines is often rife with controversies, strongly held viewpoints, and agendas that are often more based on belief than on empirical evidence. Data science is particularly important in this area since it provides a methodology for examining the world in a pragmatic fact-first manner, and is capable of providing insight into some of the most important issues that we face today.

The recent high-profile police shootings of unarmed black men, such as Michael Brown (2014), Tamir Rice (2014), Anton Sterling (2016), and Philando Castile (2016), have triggered a divisive national dialog on the issue of racial bias in policing.

These shootings have spurred the growth of large social movements seeking to raise awareness of what is viewed as the systemic targeting of people-of-color by police forces across the country. On the other side of the political spectrum, many hold a view that the unbalanced targeting of non-white citizens is a myth created by the media based on a handful of extreme cases, and that these highly-publicized stories are not representative of the national norm.

In June 2017, a team of researchers at Stanford University collected and released an open-source data set of 60 million state police patrol stops from 20 states across the US. In this tutorial, we will walk through how to analyze and visualize this data using Python.

The source code and figures for this analysis can be found in the companion Github repository - https://github.com/triestpa/Police-Analysis-Python

To preview the completed IPython notebook, visit the page here.

This tutorial and analysis would not be possible without the work performed by The Stanford Open Policing Project. Much of the analysis performed in this tutorial is based on the work that has already performed by this team. A short tutorial for working with the data using the R programming language is provided on the official project website.

The Data

In the United States there are more than 50,000 traffic stops on a typical day. The potential number of data points for each stop is huge, from the demographics (age, race, gender) of the driver, to the location, time of day, stop reason, stop outcome, car model, and much more. Unfortunately, not every state makes this data available, and those that do often have different standards for which information is reported. Different counties and districts within each state can also be inconstant in how each traffic stop is recorded. The research team at Stanford has managed to gather traffic-stop data from twenty states, and has worked to regularize the reporting standards for 11 fields.

- Stop Date

- Stop Time

- Stop Location

- Driver Race

- Driver Gender

- Driver Age

- Stop Reason

- Search Conducted

- Search Type

- Contraband Found

- Stop Outcome

Most states do not have data available for every field, but there is enough overlap between the data sets to provide a solid foundation for some very interesting analysis.

0 - Getting Started

We'll start with analyzing the data set for Vermont. We're looking at Vermont first for a few reasons.

- The Vermont dataset is small enough to be very manageable and quick to operate on, with only 283,285 traffic stops (compared to the Texas data set, for instance, which contains almost 24 million records).

- There is not much missing data, as all eleven fields mentioned above are covered.

- Vermont is 94% white, but is also in a part of the country known for being very liberal (disclaimer - I grew up in the Boston area, and I've spent a quite a bit of time in Vermont). Many in this area consider this state to be very progressive and might like to believe that their state institutions are not as prone to systemic racism as the institutions in other parts of the country. It will be interesting to determine if the data validates this view.

0.0 - Download Datset

First, download the Vermont traffic stop data - https://stacks.stanford.edu/file/druid:py883nd2578/VT-clean.csv.gz

0.1 - Setup Project

Create a new directory for the project, say police-data-analysis, and move the downloaded file into a /data directory within the project.

0.2 - Optional: Create new virtualenv (or Anaconda) environment

If you want to keep your Python dependencies neat and separated between projects, now would be the time to create and activate a new environment for this analysis, using either virtualenv or Anaconda.

Here are some tutorials to help you get set up.

virtualenv - https://virtualenv.pypa.io/en/stable/

Anaconda - https://conda.io/docs/user-guide/install/index.html

0.3 - Install dependencies

We'll need to install a few Python packages to perform our analysis.

On the command line, run the following command to install the required libraries.

pip install numpy pandas matplotlib ipython jupyter

If you're using Anaconda, you can replace the

pipcommand here withconda. Also, depending on your installation, you might need to usepip3instead ofpipin order to install the Python 3 versions of the packages.

0.4 - Start Jupyter Notebook

Start a new local Jupyter notebook server from the command line.

jupyter notebook

Open your browser to the specified URL (probably localhost:8888, unless you have a special configuration) and create a new notebook.

I used Python 3.6 for writing this tutorial. If you want to use another Python version, that's fine, most of the code that we'll cover should work on any Python 2.x or 3.x distribution.

0.5 - Load Dependencies

In the first cell of the notebook, import our dependencies.

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

%matplotlib inline

figsize = (16,8)

We're also setting a shared variable figsize that we'll reuse later on in our data visualization logic.

0.6 - Load Dataset

In the next cell, load Vermont police stop data set into a Pandas dataframe.

df_vt = pd.read_csv('./data/VT-clean.csv.gz', compression='gzip', low_memory=False)

This command assumes that you are storing the data set in the

datadirectory of the project. If you are not, you can adjust the data file path accordingly.

1 - Vermont Data Exploration

Now begins the fun part.

1.0 - Preview the Available Data

We can get a quick preview of the first ten rows of the data set with the head() method.

df_vt.head()

| id | state | stop_date | stop_time | location_raw | county_name | county_fips | fine_grained_location | police_department | driver_gender | driver_age_raw | driver_age | driver_race_raw | driver_race | violation_raw | violation | search_conducted | search_type_raw | search_type | contraband_found | stop_outcome | is_arrested | officer_id | is_white | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | VT-2010-00001 | VT | 2010-07-01 | 00:10 | East Montpelier | Washington County | 50023.0 | COUNTY RD | MIDDLESEX VSP | M | 22.0 | 22.0 | White | White | Moving Violation | Moving violation | False | No Search Conducted | N/A | False | Citation | False | -1.562157e+09 | True |

| 3 | VT-2010-00004 | VT | 2010-07-01 | 00:11 | Whiting | Addison County | 50001.0 | N MAIN ST | NEW HAVEN VSP | F | 18.0 | 18.0 | White | White | Moving Violation | Moving violation | False | No Search Conducted | N/A | False | Arrest for Violation | True | -3.126844e+08 | True |

| 4 | VT-2010-00005 | VT | 2010-07-01 | 00:35 | Hardwick | Caledonia County | 50005.0 | i91 nb mm 62 | ROYALTON VSP | M | 18.0 | 18.0 | White | White | Moving Violation | Moving violation | False | No Search Conducted | N/A | False | Written Warning | False | 9.225661e+08 | True |

| 5 | VT-2010-00006 | VT | 2010-07-01 | 00:44 | Hardwick | Caledonia County | 50005.0 | 64000 I 91 N; MM64 I 91 N | ROYALTON VSP | F | 20.0 | 20.0 | White | White | Vehicle Equipment | Equipment | False | No Search Conducted | N/A | False | Written Warning | False | -6.032327e+08 | True |

| 8 | VT-2010-00009 | VT | 2010-07-01 | 01:10 | Rochester | Windsor County | 50027.0 | 36000 I 91 S; MM36 I 91 S | ROCKINGHAM VSP | M | 24.0 | 24.0 | Black | Black | Moving Violation | Moving violation | False | No Search Conducted | N/A | False | Written Warning | False | 2.939526e+08 | False |

We can also list the available fields by reading the columns property.

df_vt.columns

Index(['id', 'state', 'stop_date', 'stop_time', 'location_raw', 'county_name',

'county_fips', 'fine_grained_location', 'police_department',

'driver_gender', 'driver_age_raw', 'driver_age', 'driver_race_raw',

'driver_race', 'violation_raw', 'violation', 'search_conducted',

'search_type_raw', 'search_type', 'contraband_found', 'stop_outcome',

'is_arrested', 'officer_id'],

dtype='object')

1.1 - Drop Missing Values

Let's do a quick count of each column to determine how consistently populated the data is.

df_vt.count()

id 283285

state 283285

stop_date 283285

stop_time 283285

location_raw 282591

county_name 282580

county_fips 282580

fine_grained_location 282938

police_department 283285

driver_gender 281573

driver_age_raw 282114

driver_age 281999

driver_race_raw 279301

driver_race 278468

violation_raw 281107

violation 281107

search_conducted 283285

search_type_raw 281045

search_type 3419

contraband_found 283251

stop_outcome 280960

is_arrested 283285

officer_id 283273

dtype: int64

We can see that most columns have similar numbers of values besides search_type, which is not present for most of the rows, likely because most stops do not result in a search.

For our analysis, it will be best to have the exact same number of values for each field. We'll go ahead now and make sure that every single cell has a value.

# Fill missing search type values with placeholder

df_vt['search_type'].fillna('N/A', inplace=True)

# Drop rows with missing values

df_vt.dropna(inplace=True)

df_vt.count()

When we count the values again, we'll see that each column has the exact same number of entries.

id 273181

state 273181

stop_date 273181

stop_time 273181

location_raw 273181

county_name 273181

county_fips 273181

fine_grained_location 273181

police_department 273181

driver_gender 273181

driver_age_raw 273181

driver_age 273181

driver_race_raw 273181

driver_race 273181

violation_raw 273181

violation 273181

search_conducted 273181

search_type_raw 273181

search_type 273181

contraband_found 273181

stop_outcome 273181

is_arrested 273181

officer_id 273181

dtype: int64

1.2 - Stops By County

Let's get a list of all counties in the data set, along with how many traffic stops happened in each.

df_vt['county_name'].value_counts()

Windham County 37715

Windsor County 36464

Chittenden County 24815

Orange County 24679

Washington County 24633

Rutland County 22885

Addison County 22813

Bennington County 22250

Franklin County 19715

Caledonia County 16505

Orleans County 10344

Lamoille County 8604

Essex County 1239

Grand Isle County 520

Name: county_name, dtype: int64

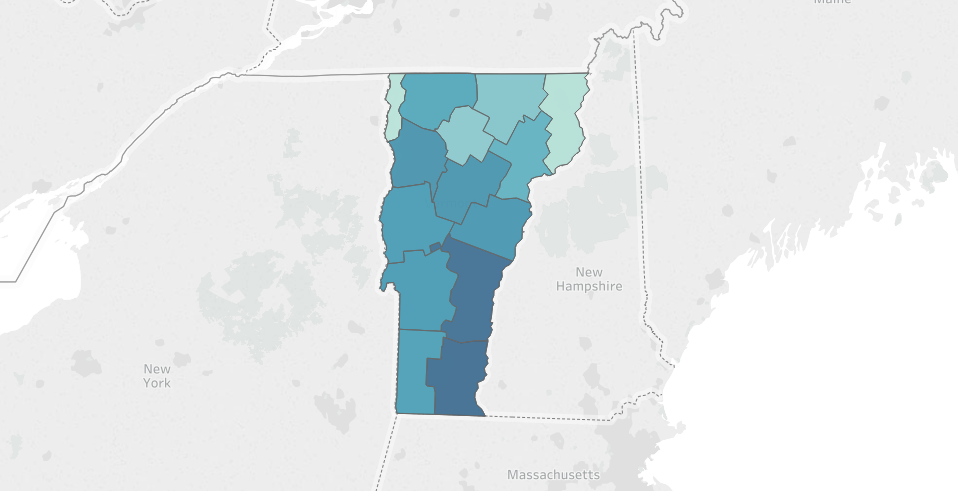

If you're familiar with Vermont's geography, you'll notice that the police stops seem to be more concentrated in counties in the southern-half of the state. The southern-half of the state is also where much of the cross-state traffic flows in transit to and from New Hampshire, Massachusetts, and New York. Since the traffic stop data is from the state troopers, this interstate highway traffic could potentially explain why we see more traffic stops in these counties.

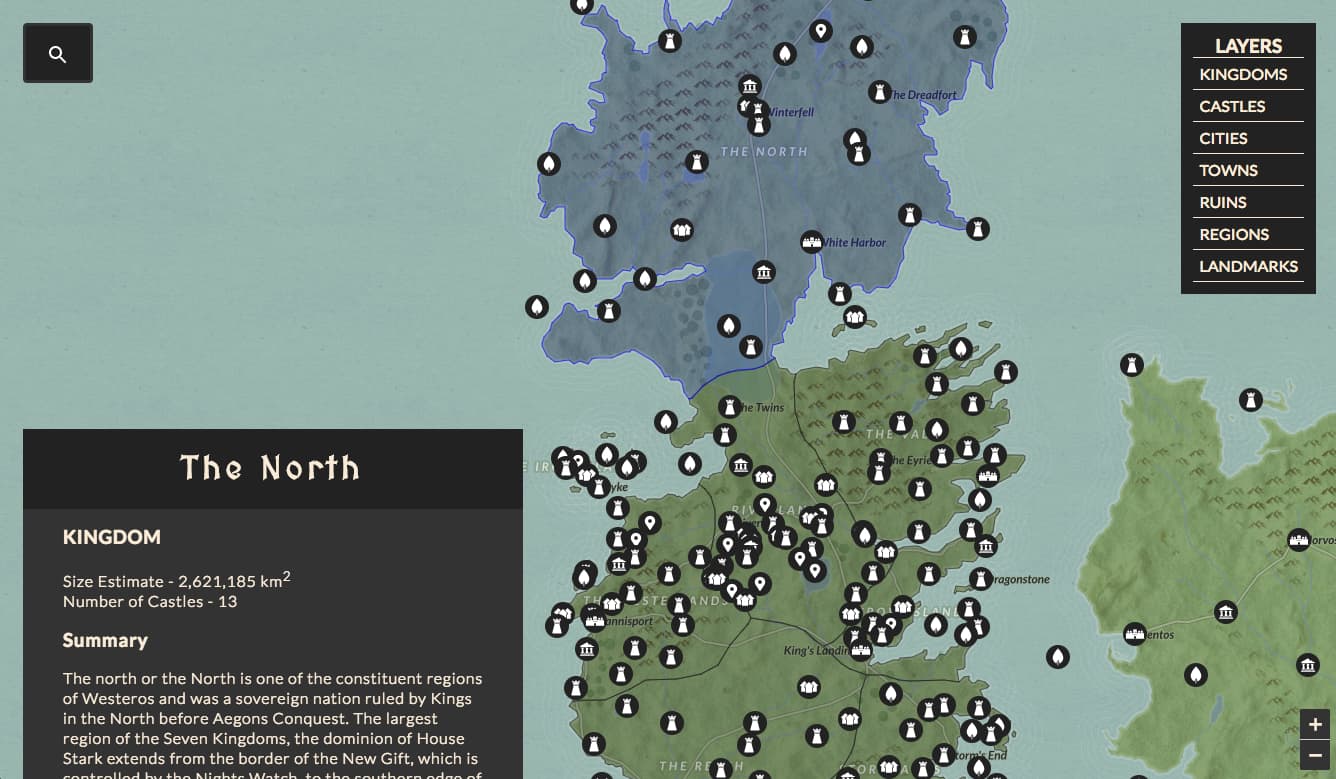

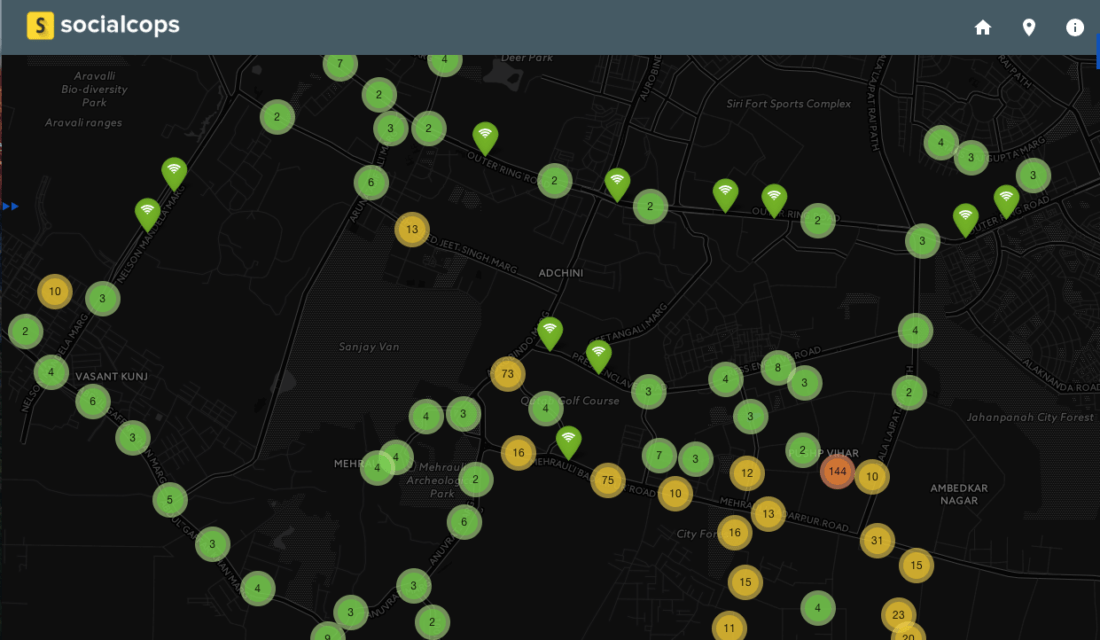

Here's a quick map generated with Tableau to visualize this regional distribution.

1.3 - Violations

We can also check out the distribution of traffic stop reasons.

df_vt['violation'].value_counts()

Moving violation 212100

Equipment 50600

Other 9768

DUI 711

Other (non-mapped) 2

Name: violation, dtype: int64

Unsurprisingly, the top reason for a traffic stop is Moving Violation (speeding, reckless driving, etc.), followed by Equipment (faulty lights, illegal modifications, etc.).

By using the violation_raw fields as reference, we can see that the Other category includes "Investigatory Stop" (the police have reason to suspect that the driver of the vehicle has committed a crime) and "Externally Generated Stop" (possibly as a result of a 911 call, or a referral from municipal police departments).

DUI ("driving under the influence", i.e. drunk driving) is surprisingly the least prevalent, with only 711 total recorded stops for this reason over the five year period (2010-2015) that the dataset covers. This seems low, since Vermont had 2,647 DUI arrests in 2015, so I suspect that a large proportion of these arrests were performed by municipal police departments, and/or began with a Moving Violation stop, instead of a more specific DUI stop.

1.4 - Outcomes

We can also examine the traffic stop outcomes.

df_vt['stop_outcome'].value_counts()

Written Warning 166488

Citation 103401

Arrest for Violation 3206

Warrant Arrest 76

Verbal Warning 10

Name: stop_outcome, dtype: int64

A majority of stops result in a written warning - which goes on the record but carries no direct penalty. A bit over 1/3 of the stops result in a citation (commonly known as a ticket), which comes with a direct fine and can carry other negative side-effects such as raising a driver's auto insurance premiums.

The decision to give a warning or a citation is often at the discretion of the police officer, so this could be a good source for studying bias.

1.5 - Stops By Gender

Let's break down the traffic stops by gender.

df_vt['driver_gender'].value_counts()

M 179678

F 101895

Name: driver_gender, dtype: int64

We can see that approximately 36% of the stops are of women drivers, and 64% are of men.

1.6 - Stops By Race

Let's also examine the distribution by race.

df_vt['driver_race'].value_counts()

White 266216

Black 5741

Asian 3607

Hispanic 2625

Other 279

Name: driver_race, dtype: int64

Most traffic stops are of white drivers, which is to be expected since Vermont is around 94% white (making it the 2nd-least diverse state in the nation, behind Maine). Since white drivers make up approximately 94% of the traffic stops, there's no obvious bias here for pulling over non-white drivers vs white drivers. Using the same methodology, however, we can also see that while black drivers make up roughly 2% of all traffic stops, only 1.3% of Vermont's population is black.

Let's keep on analyzing the data to see what else we can learn.

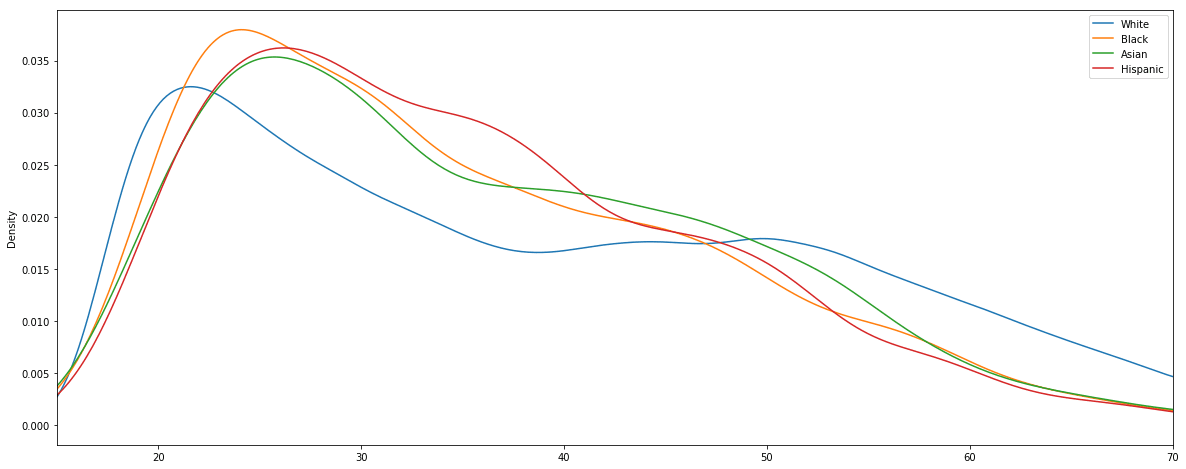

1.7 - Police Stop Frequency by Race and Age

It would be interesting to visualize how the frequency of police stops breaks down by both race and age.

fig, ax = plt.subplots()

ax.set_xlim(15, 70)

for race in df_vt['driver_race'].unique():

s = df_vt[df_vt['driver_race'] == race]['driver_age']

s.plot.kde(ax=ax, label=race)

ax.legend()

We can see that young drivers in their late teens and early twenties are the most likely to be pulled over. Between ages 25 and 35, the stop rate of each demographic drops off quickly. As far as the racial comparison goes, the most interesting disparity is that for white drivers between the ages of 35 and 50 the pull-over rate stays mostly flat, whereas for other races it continues to drop steadily.

2 - Violation and Outcome Analysis

Now that we've got a feel for the dataset, we can start getting into some more advanced analysis.

One interesting topic that we touched on earlier is the fact that the decision to penalize a driver with a ticket or a citation is often at the discretion of the police officer. With this in mind, let's see if there are any discernable patterns in driver demographics and stop outcome.

2.0 - Analysis Helper Function

In order to assist in this analysis, we'll define a helper function to aggregate a few important statistics from our dataset.

citations_per_warning- The ratio of citations to warnings. A higher number signifies a greater likelihood of being ticketed instead of getting off with a warning.arrest_rate- The percentage of stops that end in an arrest.

def compute_outcome_stats(df):

"""Compute statistics regarding the relative quanties of arrests, warnings, and citations"""

n_total = len(df)

n_warnings = len(df[df['stop_outcome'] == 'Written Warning'])

n_citations = len(df[df['stop_outcome'] == 'Citation'])

n_arrests = len(df[df['stop_outcome'] == 'Arrest for Violation'])

citations_per_warning = n_citations / n_warnings

arrest_rate = n_arrests / n_total

return(pd.Series(data = {

'n_total': n_total,

'n_warnings': n_warnings,

'n_citations': n_citations,

'n_arrests': n_arrests,

'citations_per_warning': citations_per_warning,

'arrest_rate': arrest_rate

}))

Let's test out this helper function by applying it to the entire dataframe.

compute_outcome_stats(df_vt)

arrest_rate 0.011721

citations_per_warning 0.620751

n_arrests 3199.000000

n_citations 103270.000000

n_total 272918.000000

n_warnings 166363.000000

dtype: float64

In the above result, we can see that about 1.17% of traffic stops result in an arrest, and there are on-average 0.62 citations (tickets) issued per warning. This data passes the sanity check, but it's too coarse to provide many interesting insights. Let's dig deeper.

2.1 - Breakdown By Gender

Using our helper function, along with the Pandas dataframe groupby method, we can easily compare these stats for male and female drivers.

df_vt.groupby('driver_gender').apply(compute_outcome_stats)

| arrest_rate | citations_per_warning | n_arrests | n_citations | n_total | n_warnings | |

|---|---|---|---|---|---|---|

| driver_gender | ||||||

| F | 0.007038 | 0.548033 | 697.0 | 34805.0 | 99036.0 | 63509.0 |

| M | 0.014389 | 0.665652 | 2502.0 | 68465.0 | 173882.0 | 102854.0 |

This is a simple example of the common split-apply-combine technique. We'll be building on this pattern for the remainder of the tutorial, so make sure that you understand how this comparison table is generated before continuing.

We can see here that men are, on average, twice as likely to be arrested during a traffic stop, and are also slightly more likely to be given a citation than women. It is, of course, not clear from the data whether this is indicative of any bias by the police officers, or if it reflects that men are being pulled over for more serious offenses than women on average.

2.2 - Breakdown By Race

Let's now compute the same comparison, grouping by race.

df_vt.groupby('driver_race').apply(compute_outcome_stats)

| arrest_rate | citations_per_warning | n_arrests | n_citations | n_total | n_warnings | |

|---|---|---|---|---|---|---|

| driver_race | ||||||

| Asian | 0.006384 | 1.002339 | 22.0 | 1714.0 | 3446.0 | 1710.0 |

| Black | 0.019925 | 0.802379 | 111.0 | 2428.0 | 5571.0 | 3026.0 |

| Hispanic | 0.016393 | 0.865827 | 42.0 | 1168.0 | 2562.0 | 1349.0 |

| White | 0.011571 | 0.611188 | 3024.0 | 97960.0 | 261339.0 | 160278.0 |

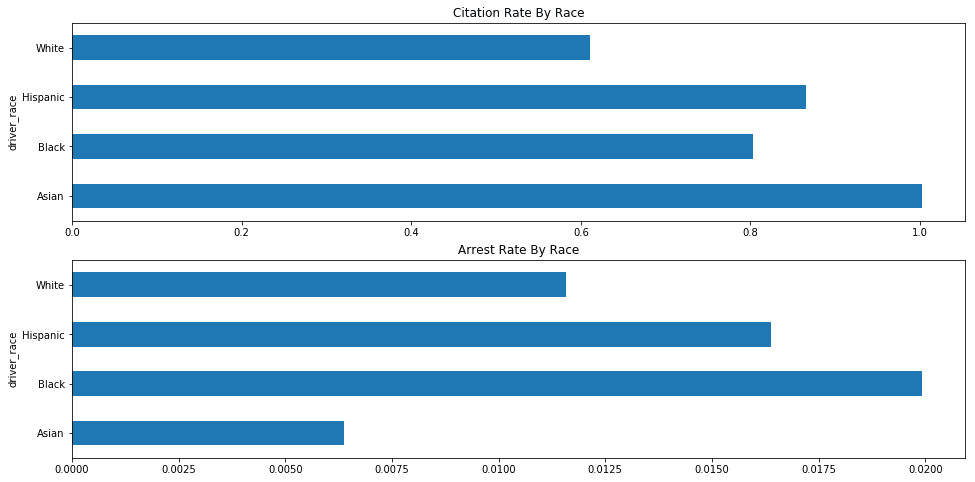

Ok, this is interesting. We can see that Asian drivers are arrested at the lowest rate, but receive tickets at the highest rate (roughly 1 ticket per warning). Black and Hispanic drivers are both arrested at a higher rate and ticketed at a higher rate than white drivers.

Let's visualize these results.

race_agg = df_vt.groupby(['driver_race']).apply(compute_outcome_stats)

fig, axes = plt.subplots(nrows=2, ncols=1, figsize=figsize)

race_agg['citations_per_warning'].plot.barh(ax=axes[0], figsize=figsize, title="Citation Rate By Race")

race_agg['arrest_rate'].plot.barh(ax=axes[1], figsize=figsize, title='Arrest Rate By Race')

2.3 - Group By Outcome and Violation

We'll deepen our analysis by grouping each statistic by the violation that triggered the traffic stop.

df_vt.groupby(['driver_race','violation']).apply(compute_outcome_stats)

| arrest_rate | citations_per_warning | n_arrests | n_citations | n_total | n_warnings | ||

|---|---|---|---|---|---|---|---|

| driver_race | violation | ||||||

| Asian | DUI | 0.200000 | 0.333333 | 2.0 | 2.0 | 10.0 | 6.0 |

| Equipment | 0.006270 | 0.132143 | 2.0 | 37.0 | 319.0 | 280.0 | |

| Moving violation | 0.005563 | 1.183190 | 17.0 | 1647.0 | 3056.0 | 1392.0 | |

| Other | 0.016393 | 0.875000 | 1.0 | 28.0 | 61.0 | 32.0 | |

| Black | DUI | 0.200000 | 0.142857 | 2.0 | 1.0 | 10.0 | 7.0 |

| Equipment | 0.029181 | 0.220651 | 26.0 | 156.0 | 891.0 | 707.0 | |

| Moving violation | 0.016052 | 0.942385 | 71.0 | 2110.0 | 4423.0 | 2239.0 | |

| Other | 0.048583 | 2.205479 | 12.0 | 161.0 | 247.0 | 73.0 | |

| Hispanic | DUI | 0.200000 | 3.000000 | 2.0 | 6.0 | 10.0 | 2.0 |

| Equipment | 0.023560 | 0.187898 | 9.0 | 59.0 | 382.0 | 314.0 | |

| Moving violation | 0.012422 | 1.058824 | 26.0 | 1062.0 | 2093.0 | 1003.0 | |

| Other | 0.064935 | 1.366667 | 5.0 | 41.0 | 77.0 | 30.0 | |

| White | DUI | 0.192364 | 0.455026 | 131.0 | 172.0 | 681.0 | 378.0 |

| Equipment | 0.012233 | 0.190486 | 599.0 | 7736.0 | 48965.0 | 40612.0 | |

| Moving violation | 0.008635 | 0.732720 | 1747.0 | 84797.0 | 202321.0 | 115729.0 | |

| Other | 0.058378 | 1.476672 | 547.0 | 5254.0 | 9370.0 | 3558.0 | |

| Other (non-mapped) | 0.000000 | 1.000000 | 0.0 | 1.0 | 2.0 | 1.0 |

Ok, well this table looks interesting, but it's rather large and visually overwhelming. Let's trim down that dataset in order to retrieve a more focused subset of information.

# Create new column to represent whether the driver is white

df_vt['is_white'] = df_vt['driver_race'] == 'White'

# Remove violation with too few data points

df_vt_filtered = df_vt[~df_vt['violation'].isin(['Other (non-mapped)', 'DUI'])]

We're generating a new column to represent whether or not the driver is white. We are also generating a filtered version of the dataframe that strips out the two violation types with the fewest datapoints.

We not assigning the filtered dataframe to

df_vtsince we'll want to keep using the complete unfiltered dataset in the next sections.

Let's redo our race + violation aggregation now, using our filtered dataset.

df_vt_filtered.groupby(['is_white','violation']).apply(compute_outcome_stats)

| arrest_rate | citations_per_warning | n_arrests | n_citations | n_total | n_warnings | ||

|---|---|---|---|---|---|---|---|

| is_white | violation | ||||||

| False | Equipment | 0.023241 | 0.193697 | 37.0 | 252.0 | 1592.0 | 1301.0 |

| Moving violation | 0.011910 | 1.039922 | 114.0 | 4819.0 | 9572.0 | 4634.0 | |

| Other | 0.046753 | 1.703704 | 18.0 | 230.0 | 385.0 | 135.0 | |

| True | Equipment | 0.012233 | 0.190486 | 599.0 | 7736.0 | 48965.0 | 40612.0 |

| Moving violation | 0.008635 | 0.732720 | 1747.0 | 84797.0 | 202321.0 | 115729.0 | |

| Other | 0.058378 | 1.476672 | 547.0 | 5254.0 | 9370.0 | 3558.0 |

Ok great, this is much easier to read.

In the above table, we can see that non-white drivers are more likely to be arrested during a stop that was initiated due to an equipment or moving violation, but white drivers are more likely to be arrested for a traffic stop resulting from "Other" reasons. Non-white drivers are more likely than white drivers to be given tickets for each violation.

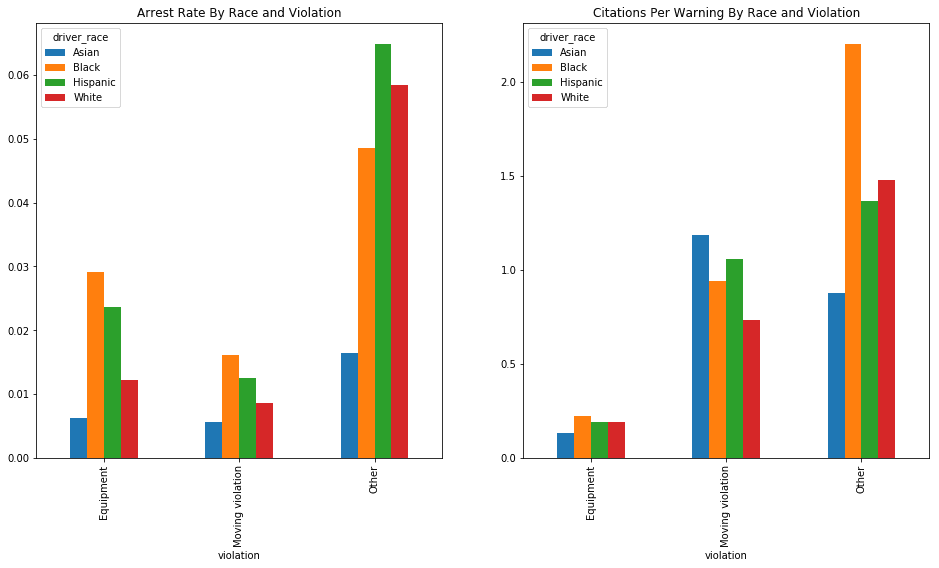

2.4 - Visualize Stop Outcome and Violation Results

Let's generate a bar chart now in order to visualize this data broken down by race.

race_stats = df_vt_filtered.groupby(['violation', 'driver_race']).apply(compute_outcome_stats).unstack()

fig, axes = plt.subplots(nrows=1, ncols=2, figsize=figsize)

race_stats.plot.bar(y='arrest_rate', ax=axes[0], title='Arrest Rate By Race and Violation')

race_stats.plot.bar(y='citations_per_warning', ax=axes[1], title='Citations Per Warning By Race and Violation')

We can see in these charts that Hispanic and Black drivers are generally arrested at a higher rate than white drivers (with the exception of the rather ambiguous "Other" category). and that Black drivers are more likely, across the board, to be issued a citation than white drivers. Asian drivers are arrested at very low rates, and their citation rates are highly variable.

These results are compelling, and are suggestive of potential racial bias, but they are too inconsistent across violation types to provide any definitive answers. Let's dig deeper to see what else we can find.

3 - Search Outcome Analysis

Two of the more interesting fields available to us are search_conducted and contraband_found.

In the analysis by the "Stanford Open Policing Project", they use these two fields to perform what is known as an "outcome test".

On the project website, the "outcome test" is summarized clearly.

In the 1950s, the Nobel prize-winning economist Gary Becker proposed an elegant method to test for bias in search decisions: the outcome test.

Becker proposed looking at search outcomes. If officers don’t discriminate, he argued, they should find contraband — like illegal drugs or weapons — on searched minorities at the same rate as on searched whites. If searches of minorities turn up contraband at lower rates than searches of whites, the outcome test suggests officers are applying a double standard, searching minorities on the basis of less evidence."

Findings, Stanford Open Policing Project

The authors of the project also make the point that only using the "hit rate", or the rate of searches where contraband is found, can be misleading. For this reason, we'll also need to use the "search rate" in our analysis - the rate at which a traffic stop results in a search.

We'll now use the available data to perform our own outcome test, in order to determine whether minorities in Vermont are routinely searched on the basis of less evidence than white drivers.

3.0 Compute Search Rate and Hit Rate

We'll define a new function to compute the search rate and hit rate for the traffic stops in our dataframe.

- Search Rate - The rate at which a traffic stop results in a search. A search rate of

0.20would signify that out of 100 traffic stops, 20 resulted in a search. - Hit Rate - The rate at which contraband is found in a search. A hit rate of

0.80would signify that out of 100 searches, 80 searches resulted in contraband (drugs, unregistered weapons, etc.) being found.

def compute_search_stats(df):

"""Compute the search rate and hit rate"""

search_conducted = df['search_conducted']

contraband_found = df['contraband_found']

n_stops = len(search_conducted)

n_searches = sum(search_conducted)

n_hits = sum(contraband_found)

# Filter out counties with too few stops

if (n_stops) < 50:

search_rate = None

else:

search_rate = n_searches / n_stops

# Filter out counties with too few searches

if (n_searches) < 5:

hit_rate = None

else:

hit_rate = n_hits / n_searches

return(pd.Series(data = {

'n_stops': n_stops,

'n_searches': n_searches,

'n_hits': n_hits,

'search_rate': search_rate,

'hit_rate': hit_rate

}))

3.1 - Compute Search Stats For Entire Dataset

We can test our new function to determine the search rate and hit rate for the entire state.

compute_search_stats(df_vt)

hit_rate 0.796865

n_hits 2593.000000

n_searches 3254.000000

n_stops 272918.000000

search_rate 0.011923

dtype: float64

Here we can see that each traffic stop had a 1.2% change of resulting in a search, and each search had an 80% chance of yielding contraband.

3.2 - Compare Search Stats By Driver Gender

Using the Pandas groupby method, we can compute how the search stats differ by gender.

df_vt.groupby('driver_gender').apply(compute_search_stats)

| hit_rate | n_hits | n_searches | n_stops | search_rate | |

|---|---|---|---|---|---|

| driver_gender | |||||

| F | 0.789392 | 506.0 | 641.0 | 99036.0 | 0.006472 |

| M | 0.798699 | 2087.0 | 2613.0 | 173882.0 | 0.015027 |

We can see here that men are three times as likely to be searched as women, and that 80% of searches for both genders resulted in contraband being found. The data shows that men are searched and caught with contraband more often than women, but it is unclear whether there is any gender discrimination in deciding who to search since the hit rate is equal.

3.3 - Compare Search Stats By Age

We can split the dataset into age buckets and perform the same analysis.

age_groups = pd.cut(df_vt["driver_age"], np.arange(15, 70, 5))

df_vt.groupby(age_groups).apply(compute_search_stats)

| hit_rate | n_hits | n_searches | n_stops | search_rate | |

|---|---|---|---|---|---|

| driver_age | |||||

| (15, 20] | 0.847988 | 569.0 | 671.0 | 27418.0 | 0.024473 |

| (20, 25] | 0.838000 | 838.0 | 1000.0 | 43275.0 | 0.023108 |

| (25, 30] | 0.788462 | 492.0 | 624.0 | 34759.0 | 0.017952 |

| (30, 35] | 0.766756 | 286.0 | 373.0 | 27746.0 | 0.013443 |

| (35, 40] | 0.742991 | 159.0 | 214.0 | 23203.0 | 0.009223 |

| (40, 45] | 0.692913 | 88.0 | 127.0 | 24055.0 | 0.005280 |

| (45, 50] | 0.575472 | 61.0 | 106.0 | 24103.0 | 0.004398 |

| (50, 55] | 0.706667 | 53.0 | 75.0 | 22517.0 | 0.003331 |

| (55, 60] | 0.833333 | 30.0 | 36.0 | 17502.0 | 0.002057 |

| (60, 65] | 0.500000 | 6.0 | 12.0 | 12514.0 | 0.000959 |

We can see here that the search rate steadily declines as drivers get older, and that the hit rate also declines rapidly for older drivers.

3.4 - Compare Search Stats By Race

Now for the most interesting part - comparing search data by race.

df_vt.groupby('driver_race').apply(compute_search_stats)

| hit_rate | n_hits | n_searches | n_stops | search_rate | |

|---|---|---|---|---|---|

| driver_race | |||||

| Asian | 0.785714 | 22.0 | 28.0 | 3446.0 | 0.008125 |

| Black | 0.686620 | 195.0 | 284.0 | 5571.0 | 0.050978 |

| Hispanic | 0.644231 | 67.0 | 104.0 | 2562.0 | 0.040593 |

| White | 0.813601 | 2309.0 | 2838.0 | 261339.0 | 0.010859 |

Black and Hispanic drivers are searched at much higher rates than White drivers (5% and 4% of traffic stops respectively, versus 1% for white drivers), but the searches of these drivers only yield contraband 60-70% of the time, compared to 80% of the time for White drivers.

Let's rephrase these results.

Black drivers are 500% more likely to be searched than white drivers during a traffic stop, but are 13% less likely to be caught with contraband in the event of a search.

Hispanic drivers are 400% more likely to be searched than white drivers during a traffic stop, but are 17% less likely to be caught with contraband in the event of a search.

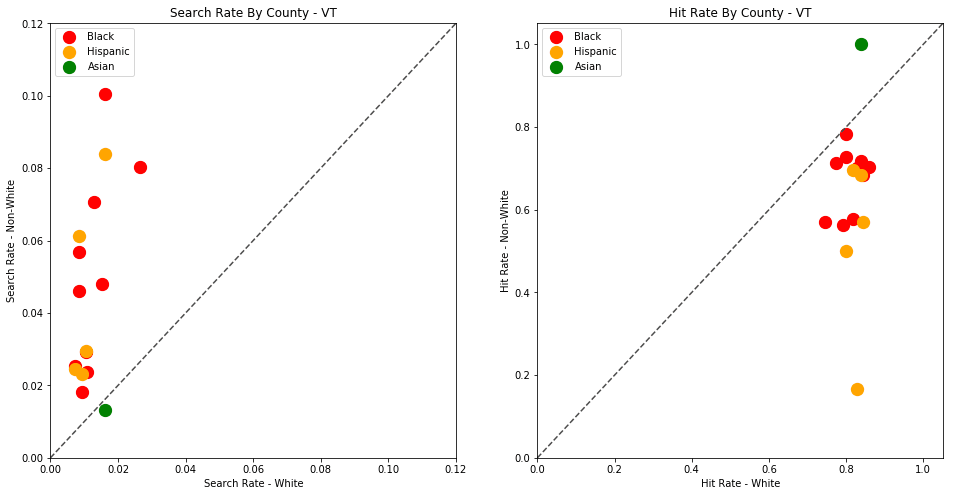

3.5 - Compare Search Stats By Race and Location

Let's add in location as another factor. It's possible that some counties (such as those with larger towns or with interstate highways where opioid trafficking is prevalent) have a much higher search rate / lower hit rates for both white and non-white drivers, but also have greater racial diversity, leading to distortion in the overall stats. By controlling for location, we can determine if this is the case.

We'll define three new helper functions to generate the visualizations.

def generate_comparison_scatter(df, ax, state, race, field, color):

"""Generate scatter plot comparing field for white drivers with minority drivers"""

race_location_agg = df.groupby(['county_fips','driver_race']).apply(compute_search_stats).reset_index().dropna()

race_location_agg = race_location_agg.pivot(index='county_fips', columns='driver_race', values=field)

ax = race_location_agg.plot.scatter(ax=ax, x='White', y=race, s=150, label=race, color=color)

return ax

def format_scatter_chart(ax, state, field):

"""Format and label to scatter chart"""

ax.set_xlabel('{} - White'.format(field))

ax.set_ylabel('{} - Non-White'.format(field, race))

ax.set_title("{} By County - {}".format(field, state))

lim = max(ax.get_xlim()[1], ax.get_ylim()[1])

ax.set_xlim(0, lim)

ax.set_ylim(0, lim)

diag_line, = ax.plot(ax.get_xlim(), ax.get_ylim(), ls="--", c=".3")

ax.legend()

return ax

def generate_comparison_scatters(df, state):

"""Generate scatter plots comparing search rates of white drivers with black and hispanic drivers"""

fig, axes = plt.subplots(nrows=1, ncols=2, figsize=figsize)

generate_comparison_scatter(df, axes[0], state, 'Black', 'search_rate', 'red')

generate_comparison_scatter(df, axes[0], state, 'Hispanic', 'search_rate', 'orange')

generate_comparison_scatter(df, axes[0], state, 'Asian', 'search_rate', 'green')

format_scatter_chart(axes[0], state, 'Search Rate')

generate_comparison_scatter(df, axes[1], state, 'Black', 'hit_rate', 'red')

generate_comparison_scatter(df, axes[1], state, 'Hispanic', 'hit_rate', 'orange')

generate_comparison_scatter(df, axes[1], state, 'Asian', 'hit_rate', 'green')

format_scatter_chart(axes[1], state, 'Hit Rate')

return fig

We can now generate the scatter plots using the generate_comparison_scatters function.

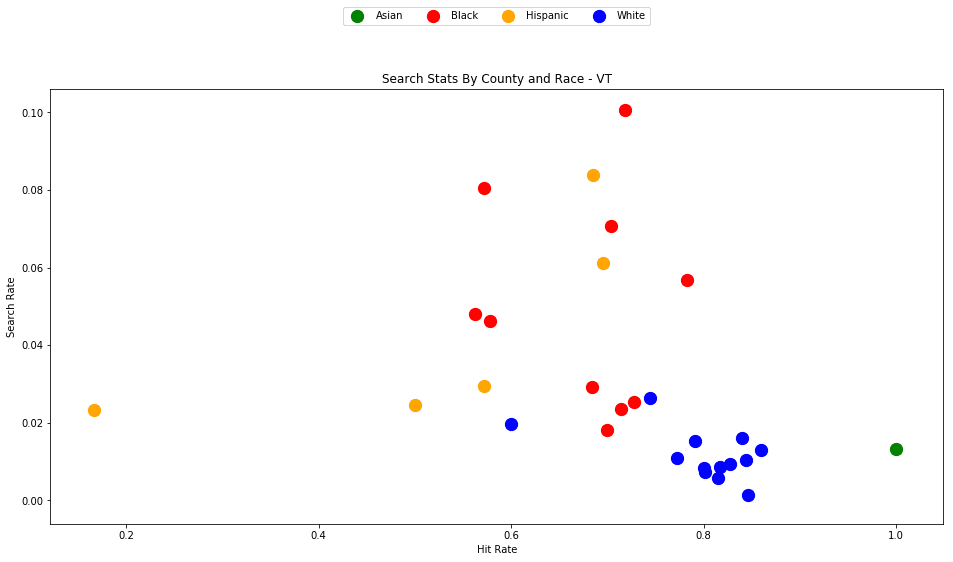

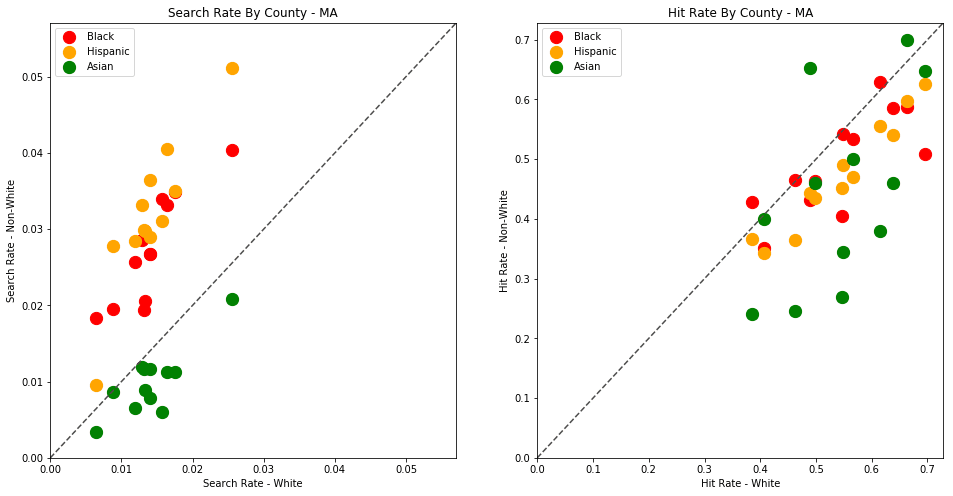

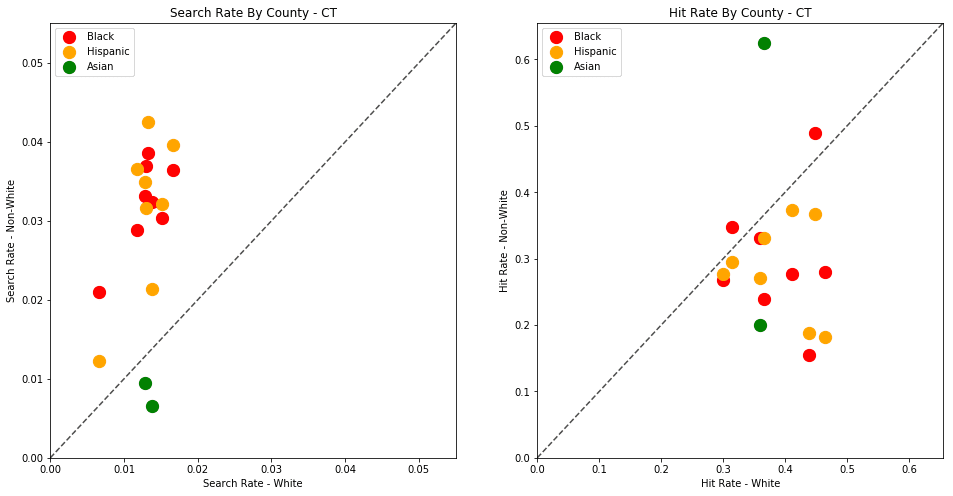

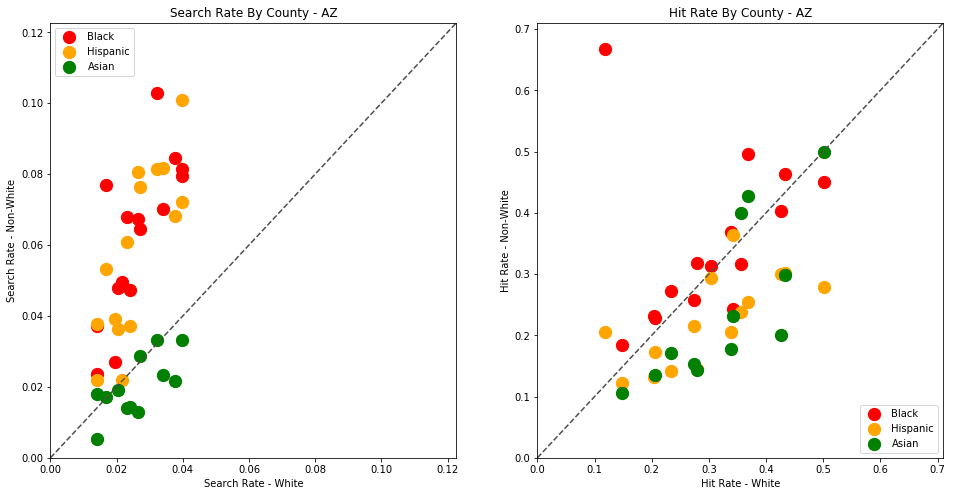

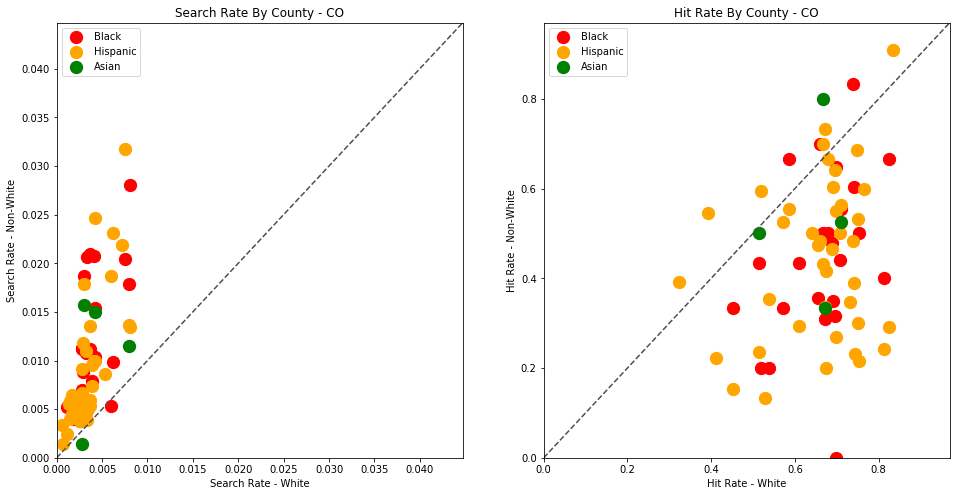

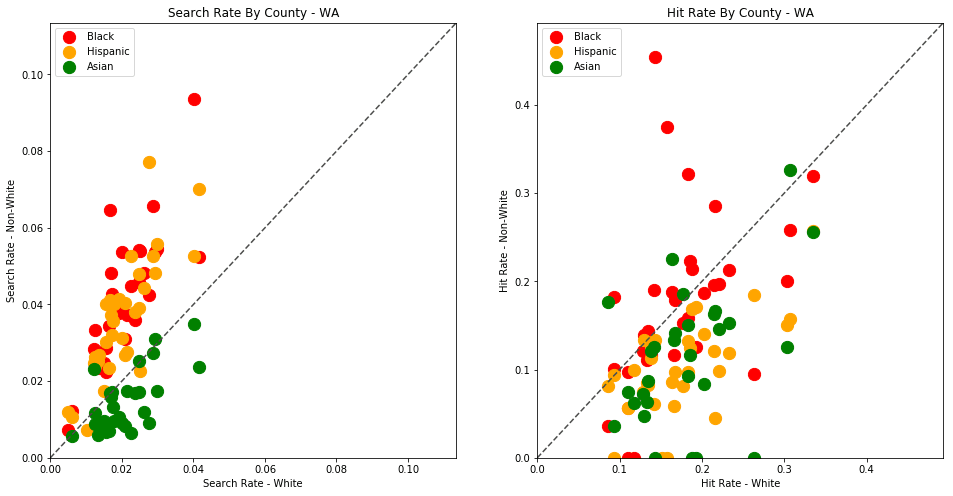

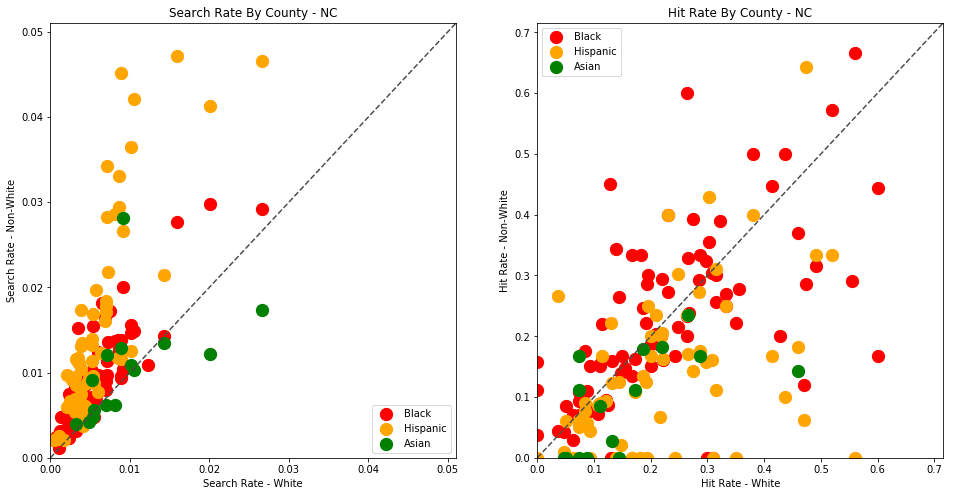

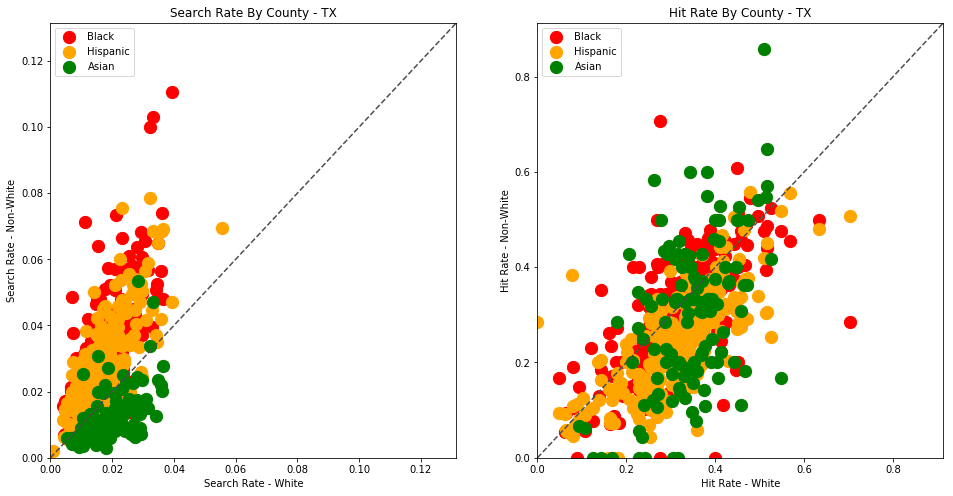

generate_comparison_scatters(df_vt, 'VT')

The plots above are comparing search_rate (left) and hit_rate (right) for minority drivers compared with white drivers in each county. If all of the dots (each of which represents the stats for a single county and race) followed the diagonal center line, the implication would be that white drivers and non-white drivers are searched at the exact same rate with the exact same standard of evidence.

Unfortunately, this is not the case. In the above charts, we can see that, for every county, the search rate is higher for Black and Hispanic drivers even though the hit rate is lower.

Let's define one more visualization helper function, to show all of these results on a single scatter plot.

def generate_county_search_stats_scatter(df, state):

"""Generate a scatter plot of search rate vs. hit rate by race and county"""

race_location_agg = df.groupby(['county_fips','driver_race']).apply(compute_search_stats)

colors = ['blue','orange','red', 'green']

fig, ax = plt.subplots(figsize=figsize)

for c, frame in race_location_agg.groupby(level='driver_race'):

ax.scatter(x=frame['hit_rate'], y=frame['search_rate'], s=150, label=c, color=colors.pop())

ax.legend(loc='upper center', bbox_to_anchor=(0.5, 1.2), ncol=4, fancybox=True)

ax.set_xlabel('Hit Rate')

ax.set_ylabel('Search Rate')

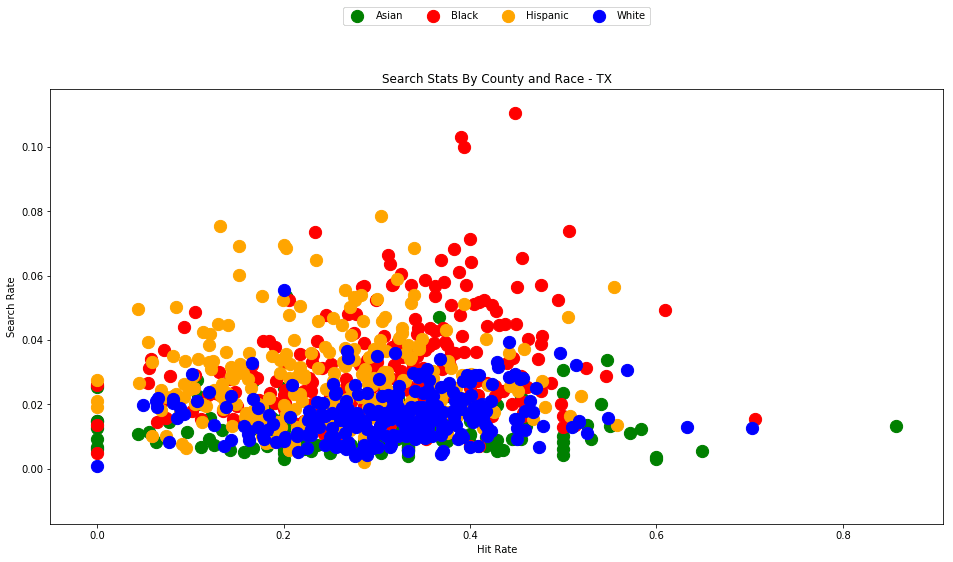

ax.set_title("Search Stats By County and Race - {}".format(state))

return fig

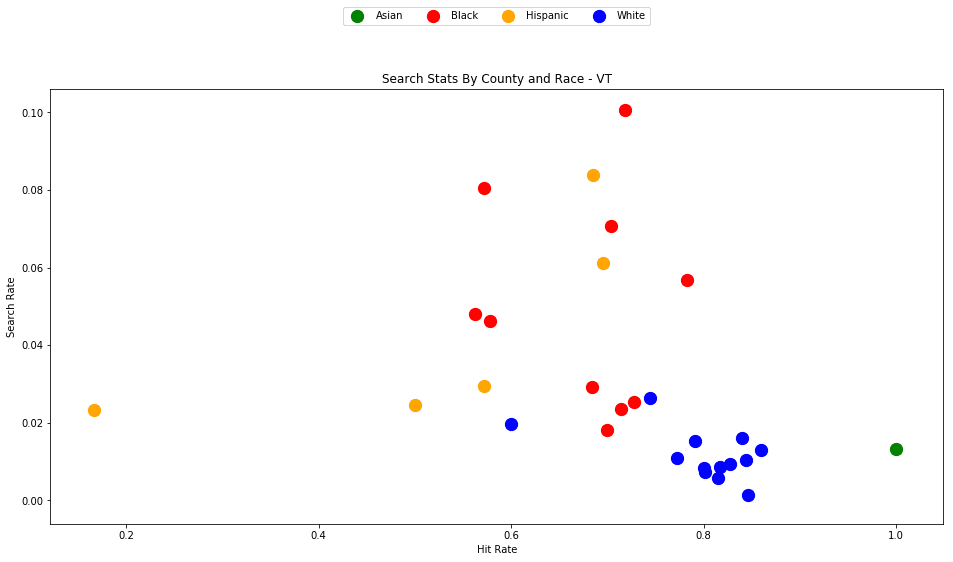

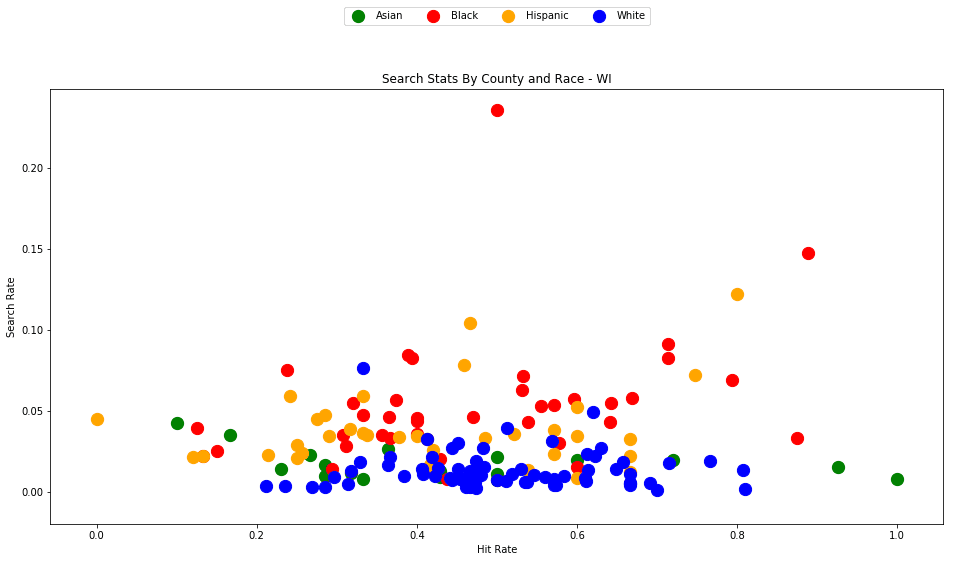

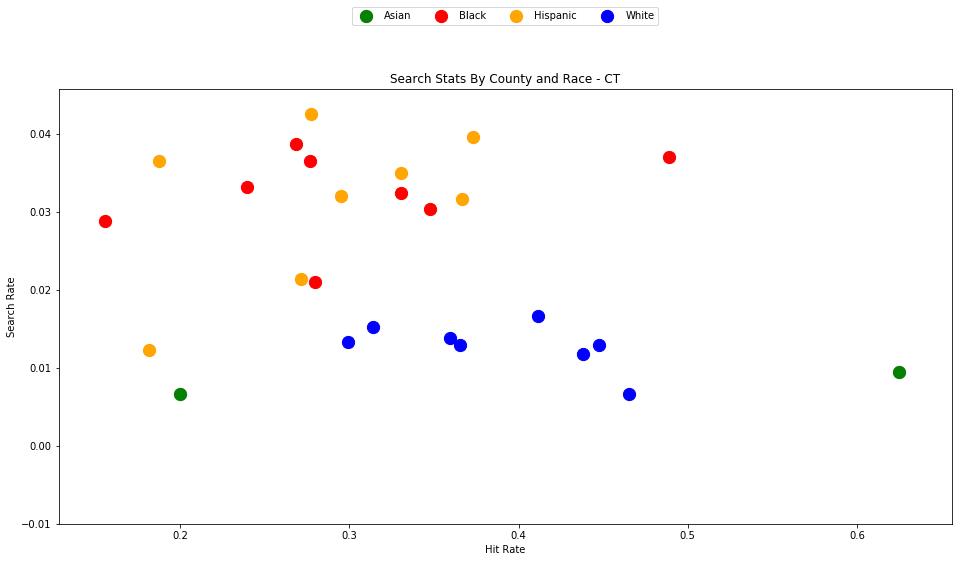

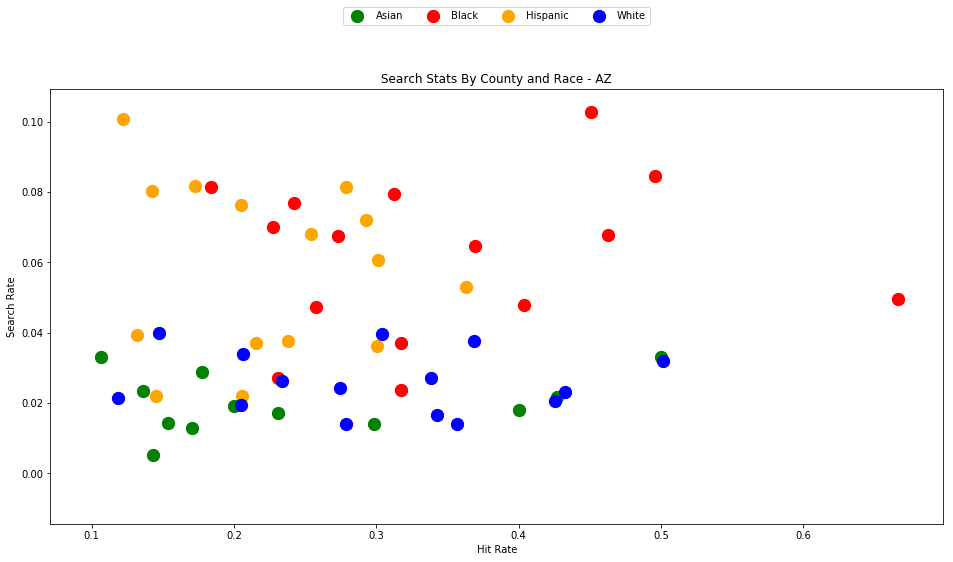

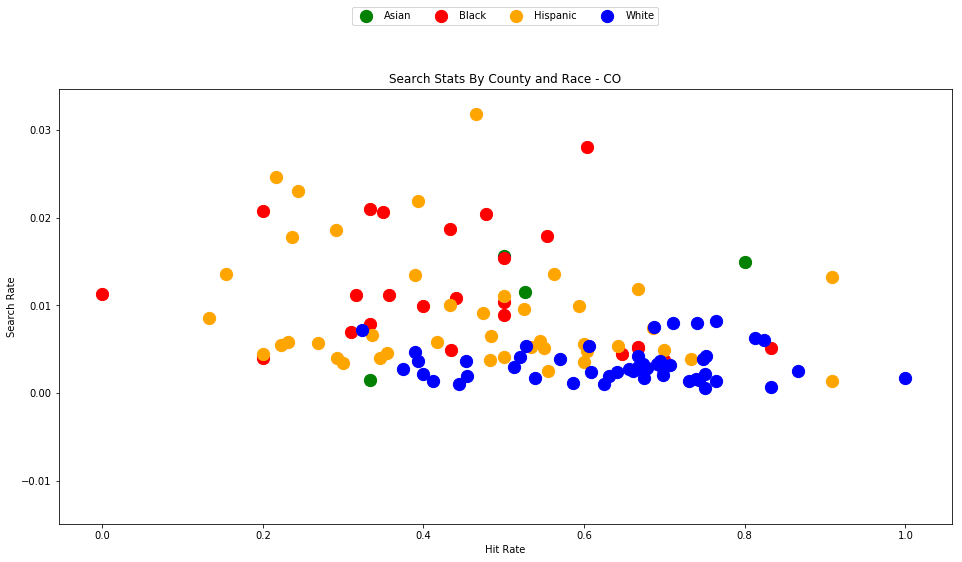

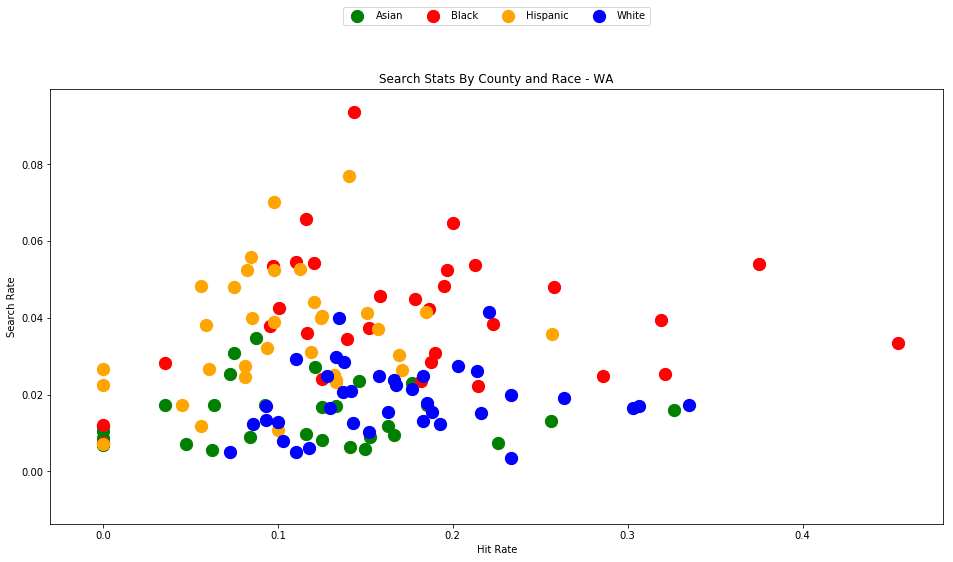

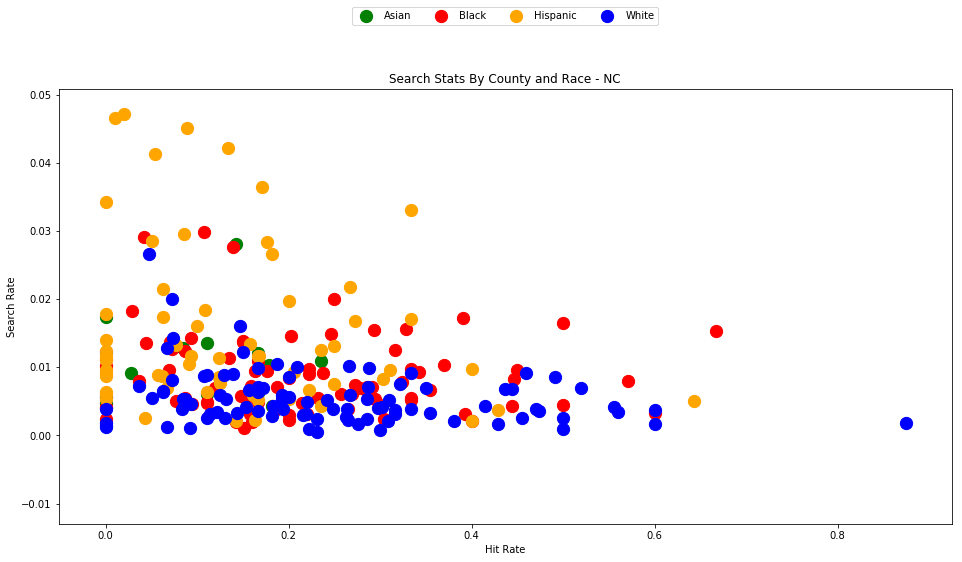

generate_county_search_stats_scatter(df_vt, "VT")

As the old idiom goes - a picture is worth a thousand words. The above chart is one of those pictures - and the name of the picture is "Systemic Racism".

The search rates and hit rates for white drivers in most counties are consistently clustered around 80% and 1% respectively. We can see, however, that nearly every county searches Black and Hispanic drivers at a higher rate, and that these searches uniformly have a lower hit rate than those on White drivers.

This state-wide pattern of a higher search rate combined with a lower hit rate suggests that a lower standard of evidence is used when deciding to search Black and Hispanic drivers compared to when searching White drivers.

You might notice that only one county is represented by Asian drivers - this is due to the lack of data for searches of Asian drivers in other counties.

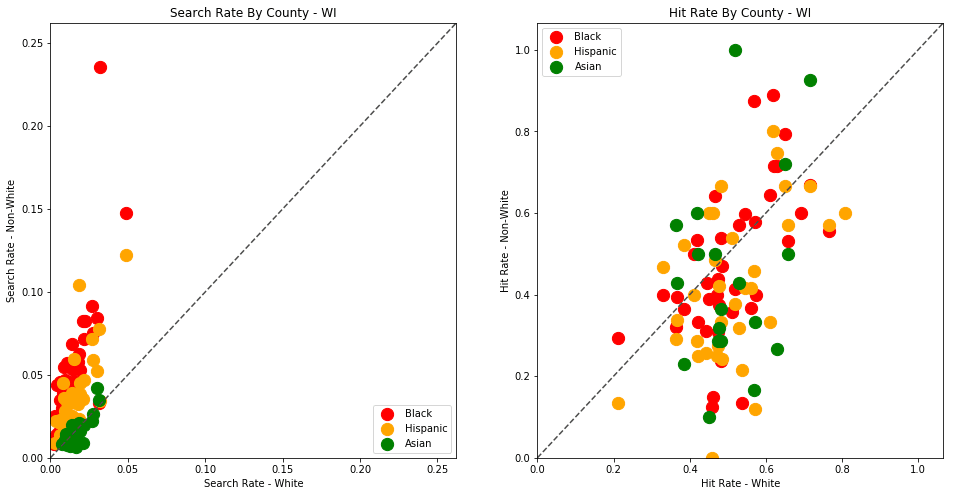

4 - Analyzing Other States

Vermont is a great state to test out our analysis on, but the dataset size is relatively small. Let's now perform the same analysis on other states to determine if this pattern persists across state lines.

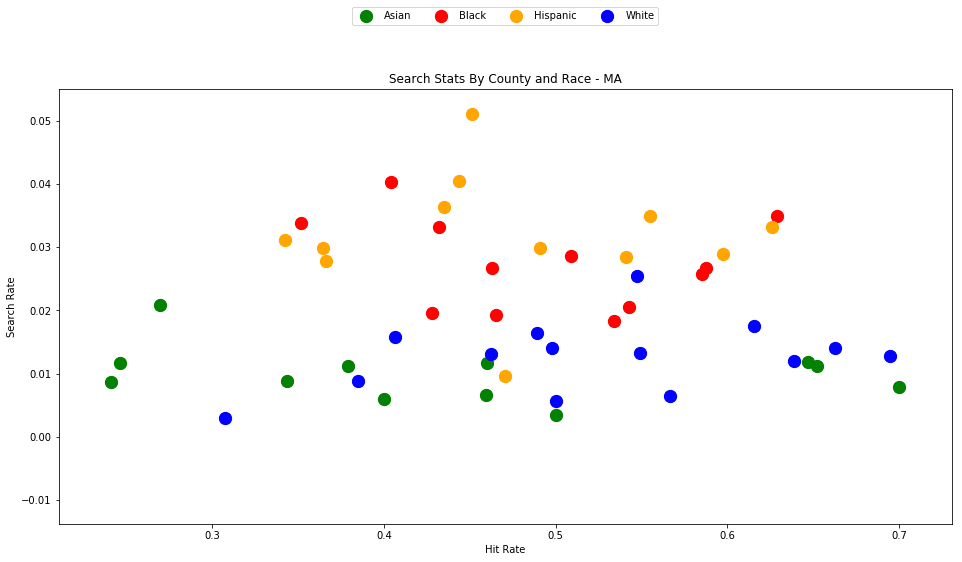

4.0 - Massachusetts

First we'll generate the analysis for my home state, Massachusetts. This time we'll have more data to work with - roughly 3.4 million traffic stops.

Download the dataset to your project's /data directory - https://stacks.stanford.edu/file/druid:py883nd2578/MA-clean.csv.gz

We've developed a solid reusable formula for reading and visualizing each state's dataset, so let's wrap the entire recipe in a new helper function.

fields = ['county_fips', 'driver_race', 'search_conducted', 'contraband_found']

types = {

'contraband_found': bool,

'county_fips': float,

'driver_race': object,

'search_conducted': bool

}

def analyze_state_data(state):

df = pd.read_csv('./data/{}-clean.csv.gz'.format(state), compression='gzip', low_memory=True, dtype=types, usecols=fields)

df.dropna(inplace=True)