| CARVIEW |

Select Language

HTTP/2 302

strict-transport-security: max-age=86400; includeSubDomains

location: https://blog.research.google/2017/06/mobilenets-open-source-models-for.html

content-type: text/html; charset=UTF-8

content-encoding: gzip

date: Tue, 15 Jul 2025 10:25:47 GMT

expires: Tue, 15 Jul 2025 10:25:47 GMT

cache-control: private, max-age=0

x-content-type-options: nosniff

x-frame-options: SAMEORIGIN

content-security-policy: frame-ancestors 'self'

x-xss-protection: 1; mode=block

content-length: 233

server: GSE

alt-svc: h3=":443"; ma=2592000,h3-29=":443"; ma=2592000

HTTP/2 301

content-type: text/html; charset=UTF-8

location: https://research.google/blog/mobilenets-open-source-models-for-efficient-on-device-vision/

content-encoding: gzip

date: Tue, 15 Jul 2025 10:25:47 GMT

expires: Tue, 15 Jul 2025 10:25:47 GMT

cache-control: private, max-age=0

x-content-type-options: nosniff

x-xss-protection: 1; mode=block

content-length: 237

server: GSE

alt-svc: h3=":443"; ma=2592000,h3-29=":443"; ma=2592000

HTTP/2 200

content-type: text/html; charset=utf-8

vary: Accept-Encoding

content-security-policy: style-src 'self' 'unsafe-inline' *.google.com *.gstatic.com fonts.googleapis.com; frame-src 'self' *.google.com *.withgoogle.com www.youtube.com https://google.earthengine.app/view/ocean https://mmeka-ee.projects.earthengine.app/view/temporal-demo https://storage.googleapis.com; default-src 'self' *.gstatic.com https://www.youtube.com/embed/kTvHIDKLFqc https://www.youtube.com/embed/Qh-4qF07V1s https://www.youtube.com/embed/gBfynvifkOY https://www.youtube.com/embed/ZMZr83rwdNI https://www.youtube.com/embed/LVFe6P-C7iY https://www.youtube.com/embed/OY2vWMtSsIM https://www.youtube.com/embed/wRCPCNtViGA https://www.youtube.com/embed/iGTM6xs2sck; connect-src 'self' *.google-analytics.com *.googletagmanager.com *.analytics.google.com *.gstatic.com *.google.com; script-src 'self' 'unsafe-inline' 'strict-dynamic' http: https: 'sha256-zBmfIicekWsk+Q02/57n6lzm2HIgbBeWN/st19KJYBM=' 'sha256-nKvv2YwBUD93NJaZ6VA5aP7XwmGV/S3G2FkCSI49/gE=' 'sha256-8Tmnm4NhLMrRqh1ZhctvStRyWVVRfk4CHaicfEzZUuI=' 'sha256-Nj7VfcL03AiQQy3lfhSluB1hFwylXDUm+VI2NCh34/w=' 'sha256-HbfYgUUu54uUYLd8WNbMYbcHGHThlfdYPhZmxdlxx3k=' 'sha256-h+sPBVMkWSsyFrQfEmLAhGUET0J7IU8+e68UpCsNdWE=' 'sha256-xdXe7bsAE8jwMFwvzClLp6sF7kElTj3p6FLnfy5neGc=' 'sha256-F+KNqDpRAu0lnbnkzC0Nkgg/m4aDWLk0PCZJY+T4oiM=' 'sha256-x2q8GGYj0PIvCV8AfX2Lv4CKDmK6d3w8YhMV8BwCGqg=' 'sha256-+ca+p72V6FhQN/MYJ6FbcNL2IHSYLHtCQemkNYuFvXo=' 'sha256-kLLsobFR3ZKrR9qraGoy7hF440PlIvBklS1xchJX9iw=' 'sha256-KO07c+2Siu0kHdu/DmM+rvrdVUgTcNPjkSbmTAO8QrE='; img-src 'self' data: https://storage.cloud.google.com/gweb-research2023-stg-media-mvp/ https://*.googleusercontent.com/ https://storage.googleapis.com/gweb-research2023-stg-media-mvp/ https://storage.googleapis.com/gweb-research2023-stg-media/ https://storage.googleapis.com/gweb-research2023-media/ https://research.google *.googletagmanager.com *.google-analytics.com https://*.googleusercontent.com/ https://blogger.googleusercontent.com *.ytimg.com *.bp.blogspot.com https://docs.google.com/a/google.com/ https://i.imgur.com/WZocAi7.png https://i.imgur.com/oPCeEcZ.png https://i.imgur.com/eVbbGwD.png https://upload.wikimedia.org/wikipedia/commons/e/ed/Becky_Hammon.jpg https://ngrams.googlelabs.com/ https://research.googleblog.com/uploaded_images/first06-777007.jpg https://googleresearch.blogspot.com/uploaded_images/first06-777007.jpg https://blog.research.google/uploaded_images/first06-777007.jpg https://work.fife.usercontent.google.com/fife/; base-uri 'none'; media-src 'self' https://*.googleusercontent.com/ https://storage.googleapis.com/gweb-research2023-stg-media-mvp/ https://storage.googleapis.com/gweb-research2023-stg-media/ https://storage.googleapis.com/gweb-research2023-media/ https://gstatic.com/ https://storage.googleapis.com/bioacoustics-www1/ https://storage.googleapis.com/chirp-public-bucket/ https://storage.googleapis.com/h01-release/ https://storage.googleapis.com/brain-genomics-public/ https://github.com/ https://implicitbc.github.io/ https://google.github.io/ https://dynibar.github.io/ https://google-research.github.io/ https://innermonologue.github.io/ https://iterative-refinement.github.io/ https://infinite-nature-zero.github.io/ https://google-research-datasets.github.io/ https://language-to-reward.github.io/ https://*.gstatic.com/ https://raw.githubusercontent.com/ https://karolhausman.github.io/mt-opt/img/mt-opt-grid.mp4 https://palm-e.github.io/videos/palm-e-teaser.mp4 https://research-il.github.io/ https://transporternets.github.io/ https://code-as-policies.github.io https://robotics-transformer.github.io/ https://michelleramanovich.github.io/ https://interactive-language.github.io/video/realtime_30.mp4 https://services.google.com/fh/files/blogs/aiblog_cinematicphotos.mp4 https://vlmaps.github.io/static/images/vlmaps_blog_post.mp4

x-frame-options: DENY

strict-transport-security: max-age=31536000; includeSubDomains; preload

x-content-type-options: nosniff

referrer-policy: same-origin

cross-origin-opener-policy: same-origin

expires: Tue, 15 Jul 2025 10:42:25 GMT

cache-control: max-age=1800

x-wagtail-cache: hit

content-encoding: gzip

x-cloud-trace-context: 881aef0bd2b836aa592b1252b69a3e0b

date: Tue, 15 Jul 2025 10:25:48 GMT

server: Google Frontend

content-length: 15542

alt-svc: h3=":443"; ma=2592000,h3-29=":443"; ma=2592000

MobileNets: Open-Source Models for Efficient On-Device Vision

Jump to Content

(Cross-posted on the Google Open Source Blog)

Deep learning has fueled tremendous progress in the field of computer vision in recent years, with neural networks repeatedly pushing the frontier of visual recognition technology. While many of those technologies such as object, landmark, logo and text recognition are provided for internet-connected devices through the Cloud Vision API, we believe that the ever-increasing computational power of mobile devices can enable the delivery of these technologies into the hands of our users, anytime, anywhere, regardless of internet connection. However, visual recognition for on device and embedded applications poses many challenges — models must run quickly with high accuracy in a resource-constrained environment making use of limited computation, power and space.

Today we are pleased to announce the release of MobileNets, a family of mobile-first computer vision models for TensorFlow, designed to effectively maximize accuracy while being mindful of the restricted resources for an on-device or embedded application. MobileNets are small, low-latency, low-power models parameterized to meet the resource constraints of a variety of use cases. They can be built upon for classification, detection, embeddings and segmentation similar to how other popular large scale models, such as Inception, are used.

This release contains the model definition for MobileNets in TensorFlow using TF-Slim, as well as 16 pre-trained ImageNet classification checkpoints for use in mobile projects of all sizes. The models can be run efficiently on mobile devices with TensorFlow Mobile.

We are excited to share MobileNets with the open-source community. Information for getting started can be found at the TensorFlow-Slim Image Classification Library. To learn how to run models on-device please go to TensorFlow Mobile. You can read more about the technical details of MobileNets in our paper, MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications.

Acknowledgements

MobileNets were made possible with the hard work of many engineers and researchers throughout Google. Specifically we would like to thank:

Core Contributors: Andrew G. Howard, Menglong Zhu, Bo Chen, Dmitry Kalenichenko, Weijun Wang, Tobias Weyand, Marco Andreetto, Hartwig Adam

Special thanks to: Benoit Jacob, Skirmantas Kligys, George Papandreou, Liang-Chieh Chen, Derek Chow, Sergio Guadarrama, Jonathan Huang, Andre Hentz, Pete Warden

MobileNets: Open-Source Models for Efficient On-Device Vision

June 14, 2017

Posted by Andrew G. Howard, Senior Software Engineer and Menglong Zhu, Software Engineer

(Cross-posted on the Google Open Source Blog)

Deep learning has fueled tremendous progress in the field of computer vision in recent years, with neural networks repeatedly pushing the frontier of visual recognition technology. While many of those technologies such as object, landmark, logo and text recognition are provided for internet-connected devices through the Cloud Vision API, we believe that the ever-increasing computational power of mobile devices can enable the delivery of these technologies into the hands of our users, anytime, anywhere, regardless of internet connection. However, visual recognition for on device and embedded applications poses many challenges — models must run quickly with high accuracy in a resource-constrained environment making use of limited computation, power and space.

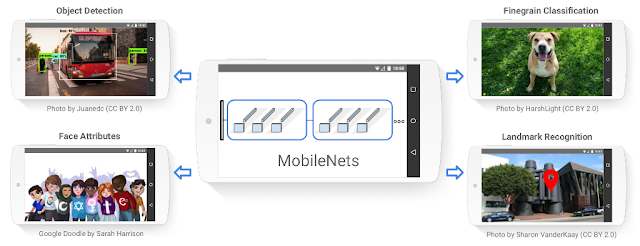

Today we are pleased to announce the release of MobileNets, a family of mobile-first computer vision models for TensorFlow, designed to effectively maximize accuracy while being mindful of the restricted resources for an on-device or embedded application. MobileNets are small, low-latency, low-power models parameterized to meet the resource constraints of a variety of use cases. They can be built upon for classification, detection, embeddings and segmentation similar to how other popular large scale models, such as Inception, are used.

|

| Example use cases include detection, fine-grain classification, attributes and geo-localization. |

Model Checkpoint | Million MACs | Million Parameters | Top-1 Accuracy | Top-5 Accuracy |

569 | 4.24 | 70.7 | 89.5 | |

418 | 4.24 | 69.3 | 88.9 | |

291 | 4.24 | 67.2 | 87.5 | |

186 | 4.24 | 64.1 | 85.3 | |

317 | 2.59 | 68.4 | 88.2 | |

233 | 2.59 | 67.4 | 87.3 | |

162 | 2.59 | 65.2 | 86.1 | |

104 | 2.59 | 61.8 | 83.6 | |

150 | 1.34 | 64.0 | 85.4 | |

110 | 1.34 | 62.1 | 84.0 | |

77 | 1.34 | 59.9 | 82.5 | |

49 | 1.34 | 56.2 | 79.6 | |

41 | 0.47 | 50.6 | 75.0 | |

34 | 0.47 | 49.0 | 73.6 | |

21 | 0.47 | 46.0 | 70.7 | |

14 | 0.47 | 41.3 | 66.2 |

| Choose the right MobileNet model to fit your latency and size budget. The size of the network in memory and on disk is proportional to the number of parameters. The latency and power usage of the network scales with the number of Multiply-Accumulates (MACs) which measures the number of fused Multiplication and Addition operations. Top-1 and Top-5 accuracies are measured on the ILSVRC dataset. |

Acknowledgements

MobileNets were made possible with the hard work of many engineers and researchers throughout Google. Specifically we would like to thank:

Core Contributors: Andrew G. Howard, Menglong Zhu, Bo Chen, Dmitry Kalenichenko, Weijun Wang, Tobias Weyand, Marco Andreetto, Hartwig Adam

Special thanks to: Benoit Jacob, Skirmantas Kligys, George Papandreou, Liang-Chieh Chen, Derek Chow, Sergio Guadarrama, Jonathan Huang, Andre Hentz, Pete Warden

Other posts of interest

-

June 23, 2025

Unlocking rich genetic insights through multimodal AI with M-REGLE- Generative AI ·

- Health & Bioscience ·

- Machine Intelligence ·

- Open Source Models & Datasets

-

May 6, 2025

Making complex text understandable: Minimally-lossy text simplification with Gemini- Generative AI ·

- Health & Bioscience ·

- Product

-

May 2, 2025

Amplify Initiative: Localized data for globalized AI- Generative AI ·

- Global ·

- Open Source Models & Datasets ·

- Responsible AI