Simplify

I was messing about with some images on a website recently and while I was happy enough with the arrangement on large screens, I thought it would be better to have the images in a kind of carousel on smaller screens—a swipable gallery.

My old brain immediately thought this would be fairly complicated to do, but actually it’s ludicrously straightforward. Just stick this bit of CSS on the containing element inside a media query (or better yet, a container query):

display: flex;

overflow-x: auto;

That’s it.

Oh, and you can swap out overflow-x for overflow-inline if, like me, you’re a fan of logical properties. But support for that only just landed in Safari so I’d probably wait a little while before removing the old syntax.

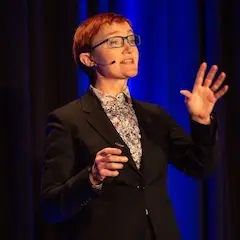

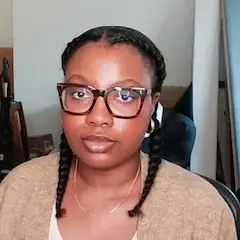

Here’s an example using pictures of some of the lovely people who will be speaking at Web Day Out:

While you’re at it, add this:

overscroll-behavior-inline: contain;

Thats prevents the user accidentally triggering a backwards/forwards navigation when they’re swiping.

You could add some more little niceties like this, but you don’t have to:

scroll-snap-type: inline mandatory;

scroll-behavior: smooth;

And maybe this on the individual items:

scroll-snap-align: center;

You could progressively enhance even more with the new pseudo-elements like ::scroll-button() and ::scroll-marker for Chromium browsers.

Apart from that last bit, none of this is particularly new or groundbreaking. But it was a pleasant reminder for me that interactions that used to be complicated to implement are now very straightforward indeed.

Here’s another example that Ana Tudor brought up yesterday:

You have a

sectionwith apon the left & animgon the right. How do you make theimgheight always be determined by thepwith the tiniest bit of CSS? 😼No changing the HTML structure in any way, no pseudos, no background declarations, no JS. Just a tiny bit of #CSS.

Old me would’ve said it can’t be done. But with a little bit of investigating, I found a nice straightforward solution:

section > img {

contain: size;

place-self: stretch;

object-fit: cover;

}

That’ll work whether the section has its display set to flex or grid.

There’s something very, very satisfying in finding a simple solution to something you thought would be complicated.

Honestly, I feel like web developers are constantly being gaslit into thinking that complex over-engineered solutions are the only option. When the discourse is being dominated by people invested in frameworks and libraries, all our default thinking will involve frameworks and libraries. That’s not good for users, and I don’t think it’s good for us either.

Of course, the trick is knowing that the simpler solution exists. The information probably isn’t going to fall in your lap—especially when the discourse is dominated by overly-complex JavaScript.

So get yourself a ticket for Web Day Out. It’s on Thursday, March 12th, 2026 right here in Brighton.

I guarantee you’ll hear about some magnificent techniques that will allow you to rip out plenty of complex code in favour of letting the browser do the work.