🐧 Linux (Recommended) |

🪟 Windows | 🐋 | 🐋 |

|---|---|---|---|

|

|

|

|

|

|

- | - |

| Method 1: Download Executable File | Download .exe File |

- | - |

Method 2: cargo install autogpt --all-features |

cargo install autogpt --all-features |

docker pull kevinrsdev/autogpt:0.1.14 |

docker pull kevinrsdev/orchgpt:0.1.14 |

| Set Environment Variables | Set Environment Variables | Set Environment Variables | Set Environment Variables |

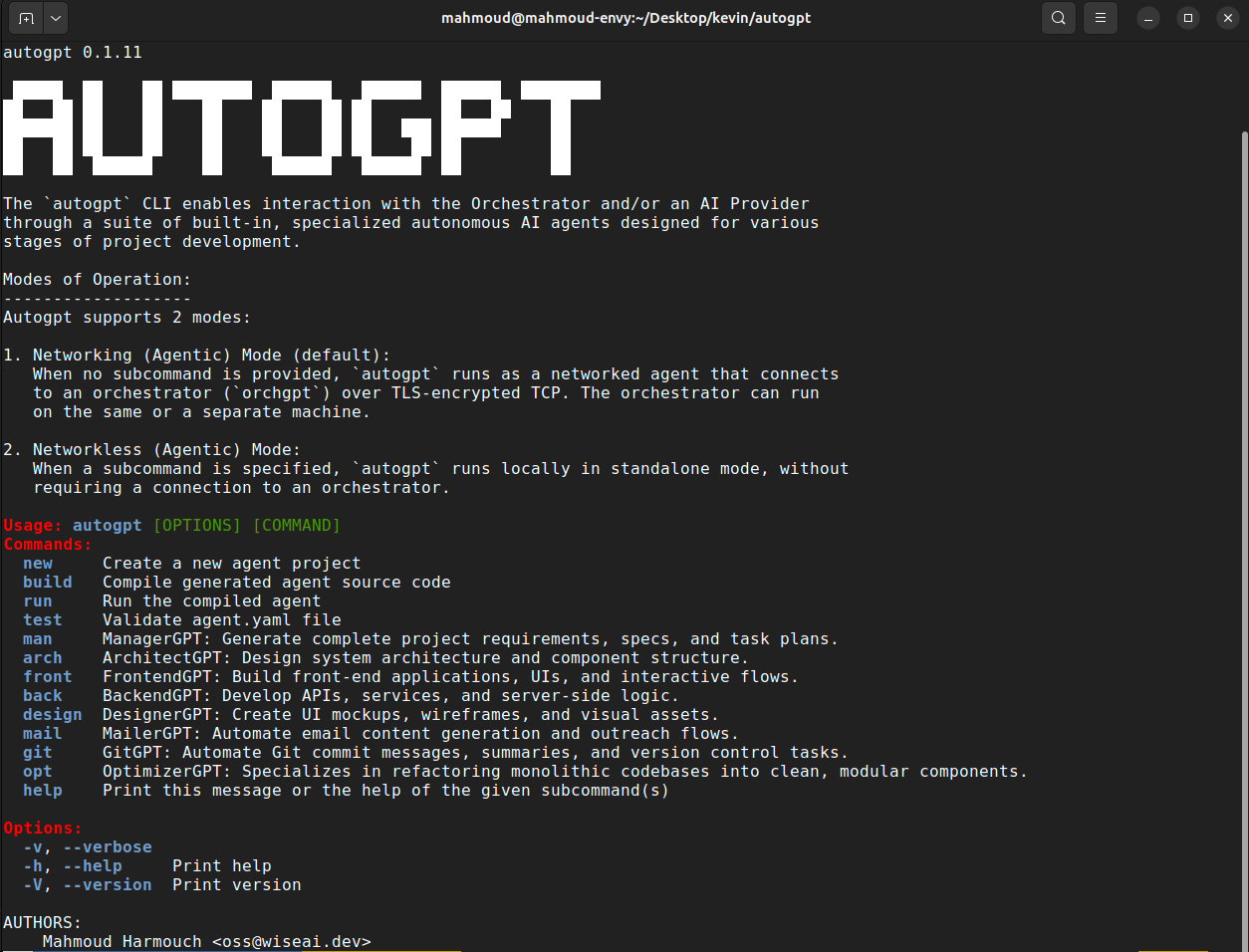

autogpt -h orchgpt -h |

autogpt.exe -h |

docker run kevinrsdev/autogpt:0.1.14 -h |

docker run kevinrsdev/orchgpt:0.1.14 -h |

autogpt-orch-mode.mp4

AutoGPT is a pure rust framework that simplifies AI agent creation and management for various tasks. Its remarkable speed and versatility are complemented by a mesh of built-in interconnected GPTs, ensuring exceptional performance and adaptability.

AutoGPT agents are modular and autonomous, built from composable components:

- 🔌 Tools & Sensors: Interface with the real world via actions (e.g., file I/O, APIs) and perception (e.g., audio, video, data).

- 🧠 Memory & Knowledge: Combines long-term vector memory with structured knowledge bases for reasoning and recall.

- 📝 No-Code Agent Configs: Define agents and their behaviors with simple, declarative YAML, no coding required.

- 🧭 Planner & Goals: Breaks down complex tasks into subgoals and tracks progress dynamically.

- 🧍 Persona & Capabilities: Customizable behavior profiles and access controls define how agents act.

- 🧑🤝🧑 Collaboration: Agents can delegate, swarm, or work in teams with other agents.

- 🪞 Self-Reflection: Introspection module to debug, adapt, or evolve internal strategies.

- 🔄 Context Management: Manages active memory (context window) for ongoing tasks and conversations.

- 📅 Scheduler: Time-based or reactive triggers for agent actions.

AutoGPT is designed for flexibility, integration, and scalability:

- 🧪 Custom Agent Creation: Build tailored agents for different roles or domains.

- 📋 Task Orchestration: Manage and distribute tasks across agents efficiently.

- 🧱 Extensibility: Add new tools, behaviors, or agent types with ease.

- 💻 CLI Tools: Command-line interface for rapid experimentation and control.

- 🧰 SDK Support: Embed AutoGPT into existing projects or systems seamlessly.

Please refer to our tutorial for guidance on installing, running, and/or building the CLI from source using either Cargo or Docker.

Note

For optimal performance and compatibility, we strongly advise utilizing a Linux operating system to install this CLI.

AutoGPT supports 3 modes of operation, non agentic and both standalone and distributed agentic use cases:

response-stream.mp4

In this mode, you can use the CLI to interact with the LLM directly, no need to define or configure agents. Use the -p flag to send prompts to your preferred LLM provider quickly and easily.

autogpt-stand-mode.mp4

In this mode, the user runs an individual autogpt agent directly via a subcommand (e.g., autogpt arch). Each agent operates independently without needing a networked orchestrator.

+------------------------------------+

| User |

| Provides |

| Project Prompt |

+------------------+-----------------+

|

v

+------------------+-----------------+

| ManagerGPT |

| Distributes Tasks |

| to Backend, Frontend, |

| Designer, Architect |

+------------------+-----------------+

|

v

+--------------------------+-----------+----------+----------------------+

| | | |

| v v v

+--+---------+ +--------+--------+ +-----+-------+ +-----+-------+

| Backend | | Frontend | | Designer | | Architect |

| GPT | | GPT | ... | GPT | | GPT |

| | | | | (Optional) | | |

+--+---------+ +-----------------+ +-------------+ +-------------+

| | | |

v v v v

(Backend Logic) (Frontend Logic) ... (Designer Logic) (Architect Logic)

| | | |

+--------------------------+----------+------------+-----------------------+

|

v

+------------------+-----------------+

| ManagerGPT |

| Collects and Consolidates |

| Results from Agents |

+------------------+-----------------+

|

v

+------------------+-----------------+

| User |

| Receives Final |

| Output from |

| ManagerGPT |

+------------------------------------+- ✍️ User Input: Provide a project's goal (e.g. "Develop a full stack app that fetches today's weather. Use the axum web framework for the backend and the Yew rust framework for the frontend.").

- 🚀 Initialization: AutoGPT initializes based on the user's input, creating essential components such as the

ManagerGPTand individual agent instances (ArchitectGPT, BackendGPT, FrontendGPT). - 🛠️ Agent Configuration: Each agent is configured with its unique objectives and capabilities, aligning them with the project's defined goals. This configuration ensures that agents contribute effectively to the project's objectives.

- 📋 Task Allocation: ManagerGPT distributes tasks among agents considering their capabilities and project requirements.

- ⚙️ Task Execution: Agents execute tasks asynchronously, leveraging their specialized functionalities.

- 🔄 Feedback Loop: Continuous feedback updates users on project progress and addresses issues.

autogpt-orch-mode.mp4

In networking mode, autogpt connects to an external orchestrator (orchgpt) over a secure TLS-encrypted TCP channel. This orchestrator manages agent lifecycles, routes commands, and enables rich inter-agent collaboration using a unified protocol.

AutoGPT introduces a novel and scalable communication protocol called IAC (Inter/Intra-Agent Communication), enabling seamless and secure interactions between agents and orchestrators, inspired by operating system IPC mechanisms.

In networking mode, AutoGPT utilizes a layered architecture:

+------------------------------------+

| User |

| Sends Prompt via CLI |

+------------------+-----------------+

|

v

TLS + Protobuf over TCP to:

+------------------+-----------------+

| Orchestrator |

| Receives and Routes Commands |

+-----------+----------+-------------+

| |

+-----------------------------+ +----------------------------+

| |

v v

+--------------------+ +--------------------+

| ArchitectGPT |<---------------- IAC ------------------>| ManagerGPT |

+--------------------+ +--------------------+

| Agent Layer: |

| (BackendGPT, FrontendGPT, DesignerGPT) |

+-------------------------------------+---------------------------------+

|

v

Task Execution & Collection

|

v

+---------------------------+

| User |

| Receives Final Output |

+---------------------------+All communication happens securely over TLS + TCP, with messages encoded in Protocol Buffers (protobuf) for efficiency and structure.

-

User Input: The user provides a project prompt like:

/architect create "fastapi app" | python

This is securely sent to the Orchestrator over TLS.

-

Initialization: The Orchestrator parses the command and initializes the appropriate agent (e.g.,

ArchitectGPT). -

Agent Configuration: Each agent is instantiated with its specialized goals:

- ArchitectGPT: Plans system structure

- BackendGPT: Generates backend logic

- FrontendGPT: Builds frontend UI

- DesignerGPT: Handles design

-

Task Allocation:

ManagerGPTdynamically assigns subtasks to agents using the IAC protocol. It determines which agent should perform what based on capabilities and the original user goal. -

Task Execution: Agents execute their tasks, communicate with their subprocesses or other agents via IAC (inter/intra communication), and push updates or results back to the orchestrator.

-

Feedback Loop: Throughout execution, agents return status reports. The

ManagerGPTcollects all output, and the Orchestrator sends it back to the user.

At the current release, Autogpt consists of 8 built-in specialized autonomous AI agents ready to assist you in bringing your ideas to life! Refer to our guide to learn more about how the built-in agents work.

Your can refer to our examples for guidance on how to use the cli in a jupyter environment.

For detailed usage instructions and API documentation, refer to the AutoGPT Documentation.

Contributions are welcome! See the Contribution Guidelines for more information on how to get started.

This project is licensed under the MIT License - see the LICENSE file for details.